The facial animation and deformation system is more advanced as well.

Both Beyond and the Sorcerer demo use only bones to deform the face, driving them with a straight translation of 3D mocap data from facial markers. This makes some deformations notoriously hard to reproduce, especially around the mouth and the eyelids, even with a large number of bones.

Basically, they don't capture the performance itself, the intent of the actor, but only the surface of the face, and even that at a very rough level of detail, as the number of markers that can be placed on a face is very limited.

The result is that the facial deformations have good dynamics, but look very weird at times; and the more extreme the facial deformation has to be, the more obvious this issue becomes.

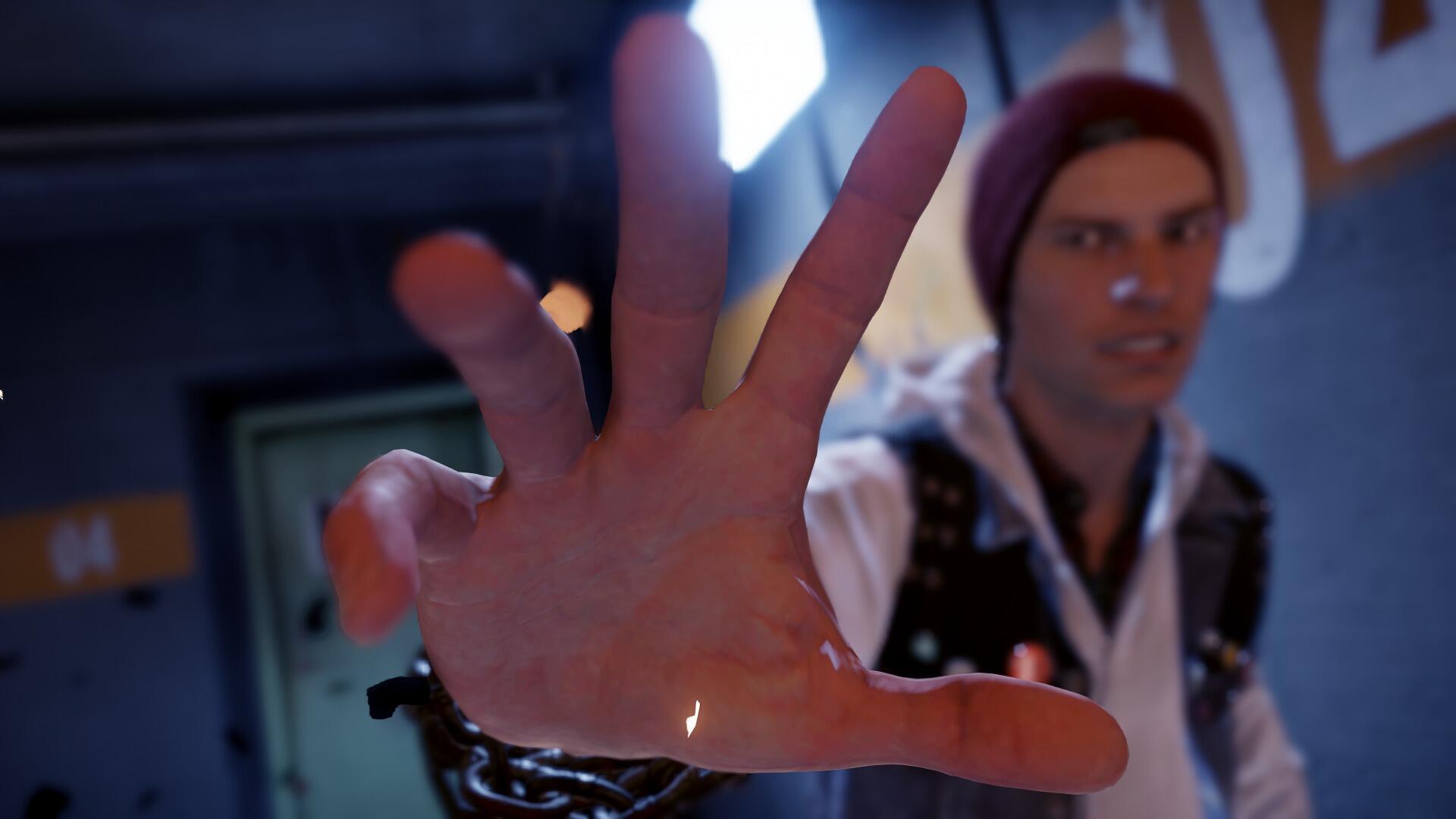

Quantum Break, however, uses a more advanced method that combines two different capture techniques. This is also the approach used in games like Ryse or Infamous Second Son.

First, each actor's base facial expressions are captured: things like raising an eyebrow, puckering the lips, or blinking an eye. The breakdown of these expressions is based on the Facial Action Coding System (FACS), published by psychologists Paul Ekman and Wallace Friesen in 1978, and first adapted for 3D facial animation on the LOTR movies, most notably for Gollum. Nowadays it's pretty much an industry standard in VFX and is spreading very quickly in game development.

These expressions are captured as full 3D scans at a very high level of detail, up to millions of polygons, and record not only the general shape of the face but also the tiniest folds and wrinkles, skin sliding and so on. One can also capture the skin color changes caused by compression, where blood is retained in or pushed out of certain areas of the face. The scanning itself is usually done with stereo photogrammetry, basically using 15-50 digital cameras to shoot pictures from many different angles; the software then generates a 3D point cloud by matching millions of points across the images based on color changes. Human skin is fortunately detailed enough for that.

The entire expression library usually has 40-80 individual scans.

These scans are used to build a face rig where each basic expression can be dialed in at any desired intensity, and where combinations of FACS Action Units (AUs) can be corrected if the plain mix looks wrong. Games with this approach usually also use a bone-based rig, fine-tuned by a set of corrective blendshapes or morphs (which can be turned off as the character moves away from the camera and accuracy becomes less important); and they also add various wrinkle maps on top of the normal map to create the creases and folds for stuff like raising the eyebrows. These wrinkle maps are also generated from the facial scans.
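To make the "dial in each expression, then correct the mix" idea a bit more concrete, here is a minimal sketch in Python. It is not taken from any of the games mentioned: it leaves out the bone layer entirely and treats every expression as a plain per-vertex offset from the neutral face. The function name, the AU names and the convention of driving a corrective by the product of two AU weights are all assumptions for illustration. The `corrective_scale` parameter stands in for fading the correctives out with camera distance.

```python
def evaluate_face(neutral, shapes, weights, correctives=(), corrective_scale=1.0):
    """Blend AU shapes onto a neutral mesh, then apply combination correctives.

    neutral:     list of (x, y, z) vertex positions
    shapes:      dict AU name -> list of per-vertex (dx, dy, dz) at full intensity
    weights:     dict AU name -> intensity, typically in [0, 1]
    correctives: iterable of (au_a, au_b, deltas); each is driven by the
                 product of the two AU weights (an assumed convention)
    """
    result = [list(v) for v in neutral]

    def add(deltas, w):
        # Add a weighted per-vertex offset to the running result.
        for vertex, delta in zip(result, deltas):
            for axis in range(3):
                vertex[axis] += w * delta[axis]

    # Linear mix of the basic expressions.
    for name, deltas in shapes.items():
        add(deltas, weights.get(name, 0.0))

    # Correctives only kick in when both of their AUs are active.
    for au_a, au_b, deltas in correctives:
        w = weights.get(au_a, 0.0) * weights.get(au_b, 0.0)
        add(deltas, w * corrective_scale)

    return [tuple(v) for v in result]


# Toy data: a one-vertex "face" with two hypothetical AUs and one corrective.
neutral = [(0.0, 0.0, 0.0)]
shapes = {
    "brow_raise": [(0.0, 1.0, 0.0)],
    "lid_tighten": [(0.0, -0.5, 0.0)],
}
correctives = [("brow_raise", "lid_tighten", [(0.0, 0.0, 0.2)])]
both_on = {"brow_raise": 1.0, "lid_tighten": 1.0}

near = evaluate_face(neutral, shapes, both_on, correctives)
far = evaluate_face(neutral, shapes, both_on, correctives, corrective_scale=0.0)
print(near, far)
```

With both AUs fully on, the corrective pushes the vertex along z in the `near` case and does nothing in the `far` case, which is the whole point of being able to switch correctives off at a distance.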

Also, correctives are only used when necessary, because blendshapes are computationally expensive on realtime systems (no real GPU support) and also take up a lot of memory. The main facial movement is covered by bones (150+ in Ryse), and the riggers mostly try to match the scans by manually adjusting the bones for each expression.
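A rough back-of-the-envelope sketch of the memory side, assuming each blendshape is stored densely as one uncompressed float3 position offset per vertex. The vertex and shape counts below are hypothetical placeholders, not figures from any shipped game, and real engines typically store sparse or compressed deltas.

```python
def blendshape_bytes(vertex_count, shape_count, bytes_per_delta=12):
    """Storage for dense blendshapes: one (dx, dy, dz) float32 triple per vertex per shape."""
    return vertex_count * shape_count * bytes_per_delta


# Hypothetical head mesh: 10,000 vertices and 60 shapes.
total = blendshape_bytes(vertex_count=10_000, shape_count=60)
print(total, "bytes for position deltas alone")
```

Normals or tangents per shape would multiply this further, which is one reason to keep the number of correctives down.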

The second capture is the actual performance, usually recorded with an image-based approach: a face camera mounted on a helmet worn by the actor during the mocap (or in this case P-cap) session.

Software called a solver analyzes the facial movements (usually with the help of painted dots on the actor's face) and translates them into animation data driving the various basic expressions (AUs). There's no need to capture the actual facial deformation during the performance, as it's already stored in the face rig, generated from the facial scans.
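As a toy illustration of what such a solver does, the sketch below fits AU intensities to tracked dot displacements for a single frame. It assumes we already know how far each dot moves when each AU is at full intensity, and it uses plain projected gradient descent on a least-squares error with the weights clamped to [0, 1]. Production solvers are far more elaborate; the function name, the AU names and the data layout are made up for this example.

```python
def solve_au_weights(observed, basis, iterations=500):
    """Fit AU weights in [0, 1] so the weighted sum of basis displacements matches the dots.

    observed: flat list of tracked dot displacements for one frame
    basis:    dict AU name -> flat list (same length) of dot displacements
              at full intensity of that AU; assumed not all zero
    """
    names = list(basis)
    weights = {name: 0.0 for name in names}

    # Conservative step size: 1 / (sum of squared basis entries) never
    # exceeds 1 / (largest eigenvalue of B^T B), so the descent is stable.
    step = 1.0 / sum(b * b for vec in basis.values() for b in vec)

    for _ in range(iterations):
        # Residual between what the current weights predict and what was tracked.
        residual = [
            sum(weights[name] * basis[name][i] for name in names) - observed[i]
            for i in range(len(observed))
        ]
        for name in names:
            # Gradient of 0.5 * ||prediction - observed||^2 w.r.t. this weight.
            grad = sum(r * b for r, b in zip(residual, basis[name]))
            # Gradient step, then clamp back into the valid intensity range.
            weights[name] = min(1.0, max(0.0, weights[name] - step * grad))

    return weights


# Four dots, (x, y) each, flattened. Two hypothetical AUs.
basis = {
    "brow_raise": [0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    "jaw_open":   [0.0, 0.0, 0.0, 0.0, 0.0, -2.0, 0.0, -2.0],
}
# A frame where the brows are half raised and the jaw is a quarter open.
observed = [0.0, 0.5, 0.0, 0.5, 0.0, -0.5, 0.0, -0.5]

frame_weights = solve_au_weights(observed, basis)
print(frame_weights)
```

The output is one small set of intensities per frame rather than a cloud of moving points, which is also why the result is so much easier to edit by hand afterwards.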

The result is more accurate and better looking deformation, and it's also much easier to manually animate on top to correct or mix or replace, as the animators only need to work with the expressions.

I hope all the above is clear and easy to understand. Also note that I'm in no way trying to bash Quantic's team, but there's no other way to say it: their methodology is outdated and inferior, and it shows. It was easy to please the hardcore audience with their demo, mostly because it was an early move, but even today we already have games using more advanced techniques and producing much better animation. Quantic's texture and shader work is still good, so it looks nice in stills, but once those faces start to move the illusion breaks very quickly. If I were them, I'd look into the new approaches ASAP.