It does quite a bit for "SSDs" (aka SATA SSDs), as my results show.

As I've said, DS isn't just "BypassIO", and the latter isn't really even that important.

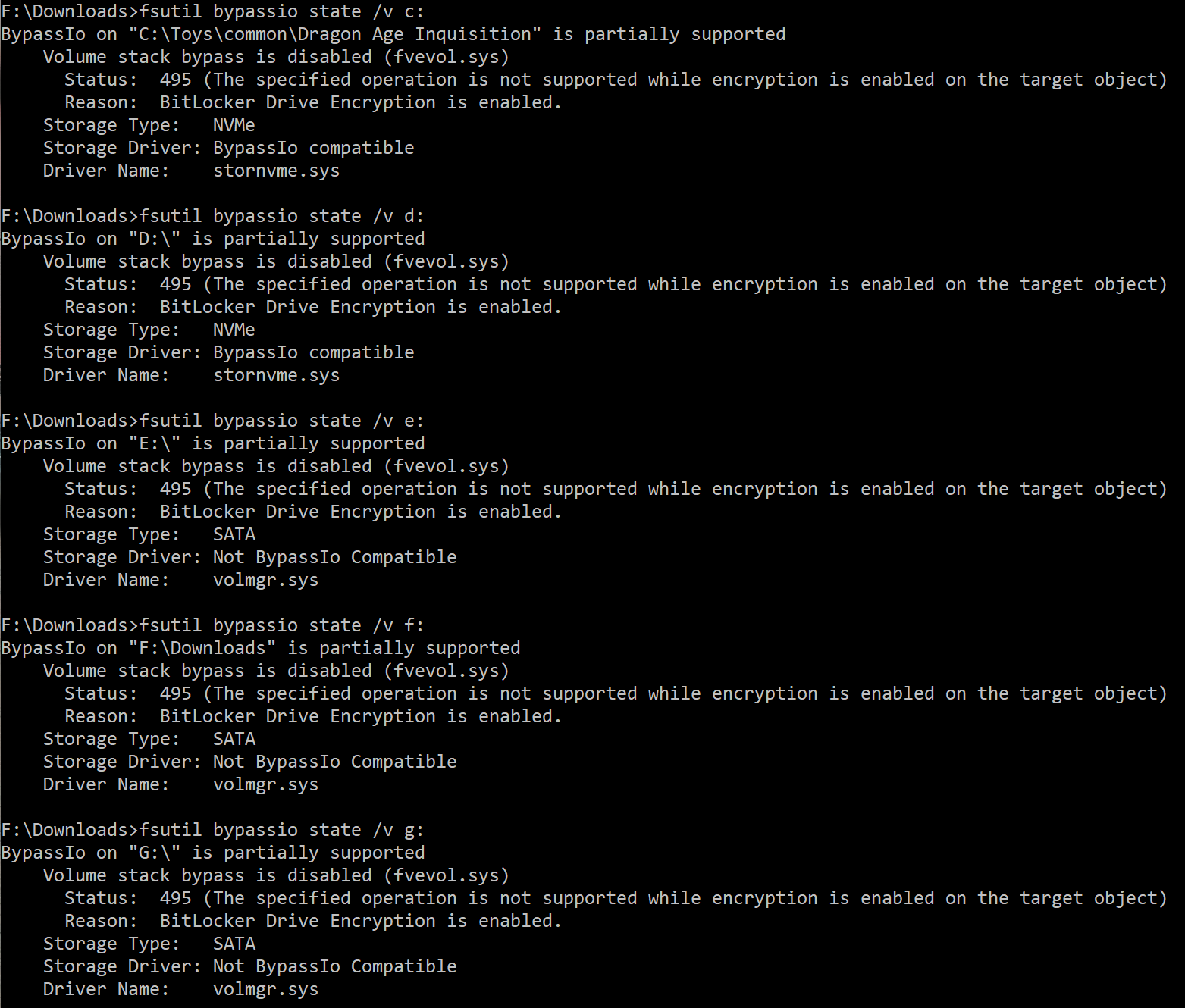

On a side note, I think it's hella fun that "BypassIO" is not compatible with BitLocker...

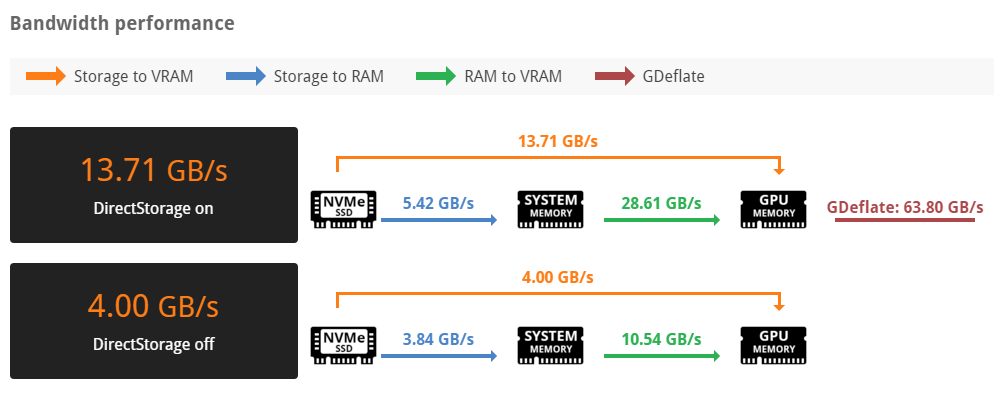

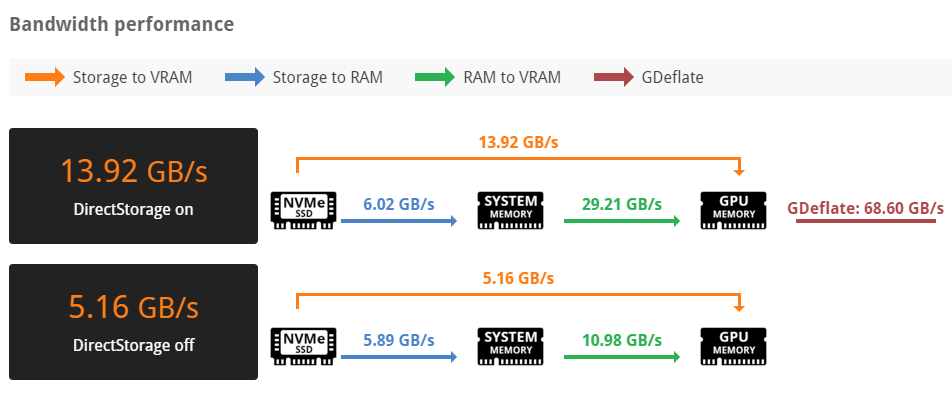

No, it does something to the system AFTER the SSD; your own data shows this:

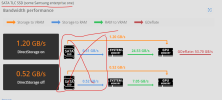

980 NVMe (attached to the CPU), increase in bandwidth:

960 NVMe (chipset-attached), increase in bandwidth:

(You should verify your motherboard's lane bifurcation: some NVMe slots share bandwidth with other devices, such as SATA SSDs/ports, and thus never get full bandwidth.)

Now the real pudding:

SATA SSD (non-NVMe)

NO bandwidth increase

NO bandwidth increase

All of the increase happens AFTER the data has entered RAM, so the drive is no longer a factor.

SATA SSDs/HDDs are not "supported". The system might do something after the data has left the drives, but the drives themselves get nothing from DirectStorage, unlike NVMe drives.

So I think we have a miscommunication... but SATA SSDs/HDDs get nothing from DirectStorage. If you look at your Windows Game Bar, I bet it will tell you the drives are not supported.

Your own data shows this quite clearly.