Deleted member 2197

Guest

April 1, 2024

www.pugetsystems.com

Stable Diffusion Linux vs. Windows

How does the choice of Operating System affect image generation performance in Stable Diffusion?

It comes as little surprise that Windows continues to be a popular choice for professional workstations, and in 2023, about 90% of Puget Systems customers purchased a Windows-based system. Today, we’ll discuss the benefits and drawbacks of Windows-based workstations compared to Linux-based systems, specifically with regard to Stable Diffusion workflows, and provide performance results from our testing across various Stable Diffusion front-end applications.

...

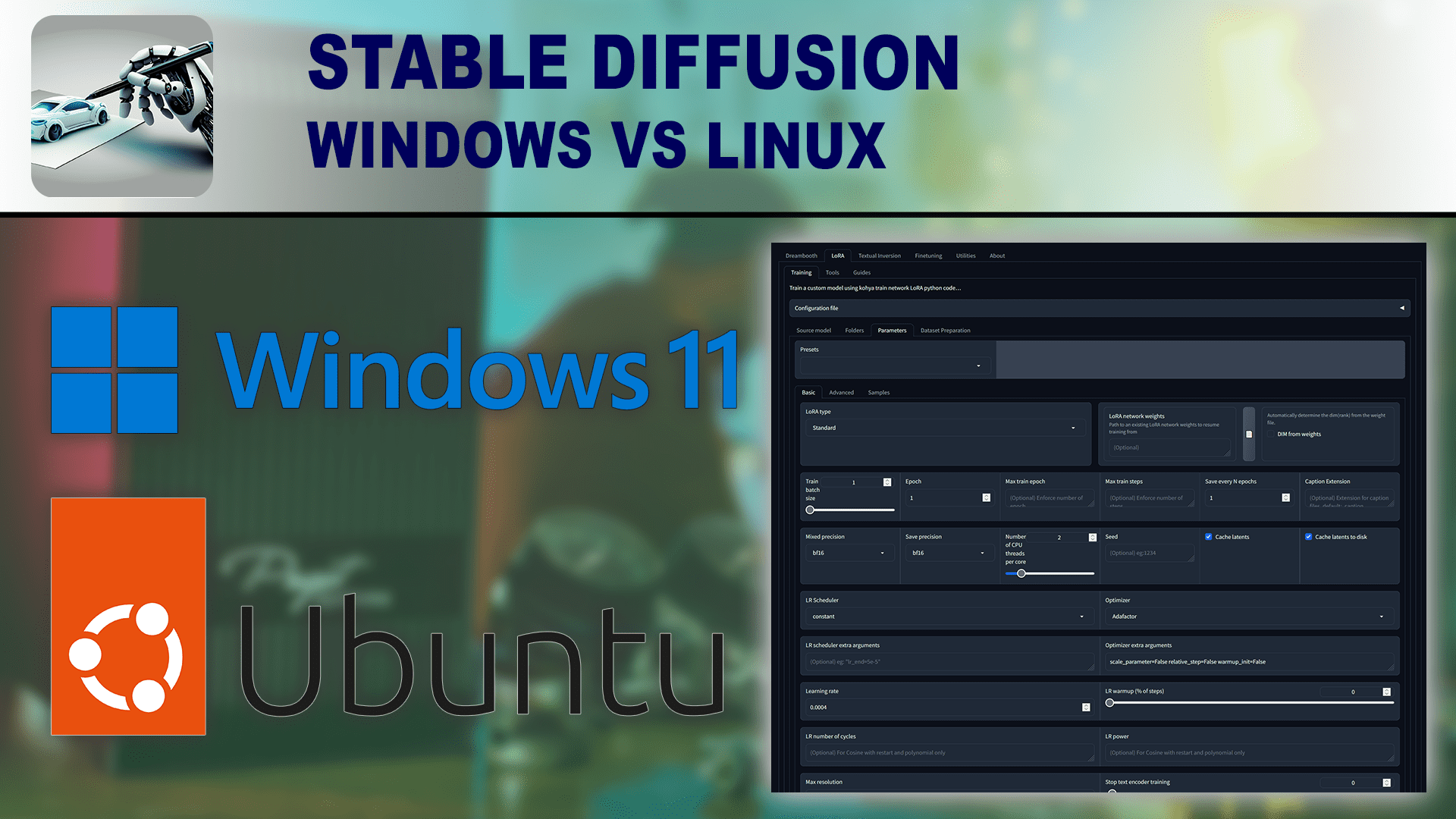

We will discuss some other considerations with regard to the choice of OS, but our focus will largely be on testing performance with both an NVIDIA and an AMD GPU across several popular SD image generation frontends, including three forks of the ever-popular Stable Diffusion WebUI by AUTOMATIC1111.

...

A new option for AMD GPUs is ZLUDA, a translation layer that allows unmodified CUDA applications to run on AMD GPUs. However, the future of ZLUDA is unclear, as the CUDA EULA forbids reverse-engineering CUDA elements for translation targeting non-NVIDIA platforms.

We decided to run some tests and, surprisingly, found several instances where ZLUDA on Windows outperformed ROCm 5.7 on Linux, such as within the DirectML fork of SD-WebUI. Unlike the other options, ZLUDA does not appear to be meaningfully impacted by the presence of HAGS (Hardware-Accelerated GPU Scheduling).