@Wesker I don't mind them releasing hardware early as long as they're up front about the software situation. If they say software will require a recompile with a new SDK, system updates, development with new APIs, etc., then they're letting you know what you're getting on day one. I think most of us who went through the PowerPC to Intel transition kind of understand this is how it's going to be, but it would be good for them to be more up front with their customers. Overall I'd say I'm pretty excited about the direction Apple is going in with their devices. That's a 200W package with a lot of performance in it that stays pretty cool and quiet in a small footprint. But if it takes them years to get the software side really optimized, that's a big failing on their part.

Apple is an existential threat to the PC

- Thread starter MfA

I think the CPU side of things has been incredibly smooth -- the results speak for themselves and Intel is getting humiliated.

But I think they either underestimated how bad the GPU situation was on macOS, or they didn't want to admit that the GPU situation was this bad on macOS. The reasons for either explanation are numerous: betting big on OpenCL, then being hampered by AMD's poor GPU development cycle, abandoning OpenGL (and OpenCL), being thermally constrained by Intel's 14nm+++ products, the utter neglect of the Mac lineup after 2015, and then finally going their own way with Metal ever since macOS Mojave; it's been a bumpy ride for GPUs on the Mac.

Hopefully with Metal (and Metal 2) and making use of their own graphics IP on Mac (which is just a direct port of their A-series GPUs), we will see the situation improve. But hey, they have to start somewhere. I am optimistic though. Things have been very smooth and impressive on the iOS side of things, and I think we will see GPU acceleration and utilisation on macOS mirror that of iOS in a couple of years.

My comment on them releasing Mac Studio and Studio Display too early was mostly in jest and in reference to the incredibly poor performance of the Studio Display's wide-angle camera:

https://arstechnica.com/gadgets/202...-update-to-fix-studio-display-webcam-quality/

https://daringfireball.net/linked/2022/03/17/three-updates-regarding-studio-display

ArtIsRight is finding anomalies as well compared to the M1 Max and M1 Pro MacBook Pro notebooks. The CPU and GPU aren't getting fully maxed out in many applications.

Ignore the clickbait title but this is an interesting look:

Tl;dw: Big time GPU utilisation issues. The GPU is rated for about 100-110 W by Apple. But on the benchmarks where the M1 Ultra woefully underperforms, GPU utilisation is low (about 20-30%) and the GPU is only consuming about 30-40 W; oftentimes the GPU is underclocking. In apps and benchmarks where performance scales, the M1 Ultra is consuming its appropriate 100-110 W power budget, and is running at high clocks.

Pretty strong sign that apps and benchmarks need to be written to scale up with Apple's scalable GPU architecture. A familiar tale: Performance is being hampered by poor software. Thankfully, software can be updated and rewritten.

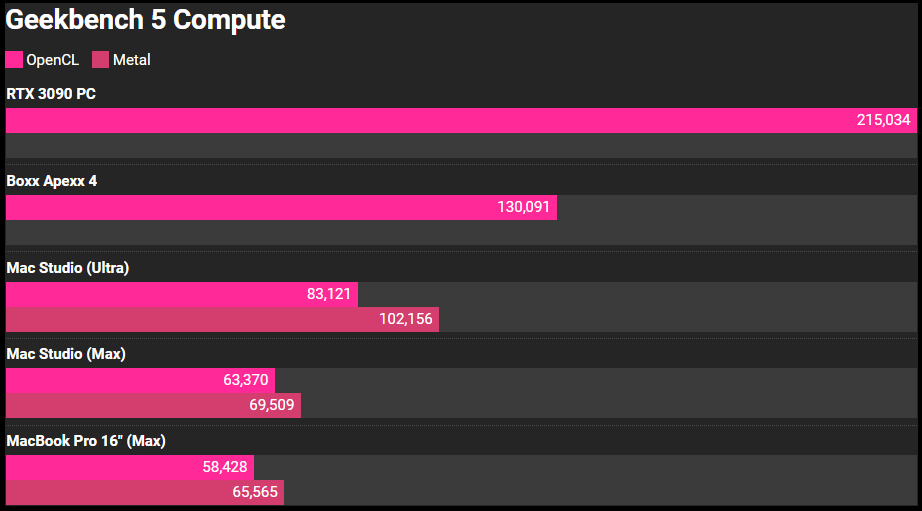

It also fits the description by Andrei F on why Geekbench failed to scale with the M1/Pro/Max. No surprise why scores didn't scale for the Ultra.

I suppose some software updates are in order for these new Mac Studios.

Well, it has only been 6 months since M1 Pro/Max came out, so GPU scalability beyond the basic M1 configuration is probably going to be all over the place. I expect it will take another year until we see proper optimization.

On the bright side, Apple can keep bragging about how amazing its power consumption is under load.

Multiple GPUs have their own memory pools, the Ultra does not. Also even with TB/s of interconnect, those GPU cores are still more separated, higher latency. If they try to get smart and present them as one GPU it could simply scale worse than letting Blender treat it as two GPUs.

Frame parallel rendering for offline renderers which don't try to reuse previous frames is so simple it's going to be hard to beat.
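
To make the frame parallel point concrete, here is a minimal sketch of the idea: whole frames are dealt out round-robin to independent devices and nothing is shared between frames. Everything in it is an assumption for illustration: `render_frame` is a hypothetical stand-in for a real renderer call, and the "devices" are just worker threads, not anything Blender or Metal actually exposes.

```python
from concurrent.futures import ThreadPoolExecutor


def assign_frames(num_frames, num_devices):
    """Round-robin split: frame i goes to device i % num_devices."""
    buckets = [[] for _ in range(num_devices)]
    for frame in range(num_frames):
        buckets[frame % num_devices].append(frame)
    return buckets


def render_frame(device_id, frame):
    # Hypothetical stand-in for a real render call; it only returns a label.
    return f"frame {frame:04d} rendered on device {device_id}"


def render_animation(num_frames, num_devices):
    """Render every frame independently, one worker per device."""
    buckets = assign_frames(num_frames, num_devices)

    def worker(device_id):
        # Each device walks its own frame list; no data is shared across frames.
        return [(f, render_frame(device_id, f)) for f in buckets[device_id]]

    with ThreadPoolExecutor(max_workers=num_devices) as pool:
        per_device = list(pool.map(worker, range(num_devices)))

    # Stitch the per-device results back into frame order.
    results = dict(pair for chunk in per_device for pair in chunk)
    return [results[f] for f in range(num_frames)]


if __name__ == "__main__":
    for line in render_animation(num_frames=6, num_devices=2):
        print(line)
```

Since no frame depends on another, the only coordination is the final reordering, which is why this scheme is so hard to beat for offline rendering.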

First M1 Ultra results in Gfxbench show slightly lower results than the M1 Max; but while the latter comes along onscreen (vsynced) with 120 fps, the Ultra is stuck at only 59-60 fps.

M1 Max: https://gfxbench.com/device.jsp?benchmark=gfx50&os=OS X&api=metal&cpu-arch=ARM&hwtype=GPU&hwname=Apple M1 Max&did=101857300&D=Apple M1 Max

M1 Ultra: https://gfxbench.com/device.jsp?benchmark=gfx50&os=OS X&api=metal&D=Apple+M1+Ultra&testgroup=overall

Correct me if I'm wrong, but it doesn't seem to me like the driver is currently seeing more than 1 GPU (and no, I wouldn't trust Kishonti's current fillrate test results either, as it's doing, if I remember correctly, some alpha blending among other things). If the low-level fillrate test is sufficiently bandwidth constrained, then that could explain the >40% difference here for the Ultra against the Max, which is the highest difference for all tests in that benchmark suite.

-----------------------------------------------------------

On another note for the previous multi-GPU scaling debate, I think a KISS approach would be that many would prefer NV's SLI for extreme levels of geometry, and others a macro tiling scheme on a deferred renderer for extreme levels of pixel shading, for example; otherwise you can't have it all.

I don't expect Apple or anyone close to them to disclose how many rasterizer units the M1 Max has (and by extension the Ultra, at 2x), but I have severe doubts that, despite being a monster GPU in area for an SoC, it is able to process more than 1 triangle/clock, and yes, I'd gladly stand corrected. Either Apple has been cooking up a more advanced, 100% in-house architecture, or IMHO they will end up licensing IMG's Photon architecture behind closed doors.

If hardware raytracing is the future then at some point the industry will have to move to a scenegraph API which can do animation and LOD on the fly on the GPU ... this would also make parallelizing in the driver actually simple, not just seemingly simple for the developer while creating a mountain of fragile and hacky code inside the driver.

I wasn't thinking about hw RT to be honest; Apple may add it one way or another into future generations only for marketing purposes. We have heard that Apple has rewritten large parts of the IMG GPU IP; however, the base architecture still belongs IMHO to the Rogue era, meaning that I still can see 16 SIMD units/cluster capable of 2 FMACs each. Since Apple is using only some sort of architectural license from IMG for all its current solutions, it's about time to advance to an entirely new generation architecture with or without 3rd party IP and with or without hw RT. Samsung touting hw RT with its RDNA2 based GPU IP in the latest Exynos isn't exactly practical from a marketing point of view.

They might have moved the entire rasterization stage into unified shader programs, small change, it wouldn't look much different. As Epic proved, hardware triangle rasterizers are relics of the past which have become a boat anchor more than an asset.

UE5 recently got support for hw RT acceleration; it is both faster and better looking.

For Lumen, as far as I know they aren't raytracing into an on the fly LOD'd nanite geometry representation quite yet. Not that raytracing wouldn't be suitable for it, just not the way it's implemented/exposed now.

Silent_Buddha

Legend

That all kind of dovetails into what MfA was saying. Scaling software across multiple hardware dies (GPU) regardless of how they are stitched together is limited by the effort put into the software side of things. Scaling across multiple CPU dies seems to be mostly a solved problem (AMD Zen), so it's a bit strange that the M1 Ultra seems to have problems in that area. Although to be fair even there you need software that can intelligently spawn enough threads that will actually need the cores on the other dies as well as equally balancing the workload across all of those threads.

Oddly enough, that is just the way things are done on GPU (massively parallel workloads) but requires significant effort on CPU (harder to parallelize CPU workloads). So I guess it's not so odd that the CPU doesn't necessarily scale well, but it goes back to MfA's point WRT how the GPU isn't necessarily going to scale well just because there's a massive interconnect.

Regards,

SB

Deleted member 11852

Guest

It's not always the software that dictates its tolerance for parallelization; it's often the nature of the problem itself. Some problems are very linear and can be broken down, much like you might break down differential equations, reconstitute the individual elements and get the correct result. But many problems, particularly graphics and especially ray tracing, where the whole scene is interacting with almost everything else, are not easy to break apart without an impact on efficiency.
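
One common way to put a rough number on this is Amdahl's law, which nobody in the thread invoked by name, so treat it as my own framing. It is a minimal sketch that assumes a workload splits cleanly into a serial part and a perfectly parallel part, and it ignores interconnect latency and synchronisation cost entirely. The fractions used below are made-up inputs, not measurements of any M1 workload.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Ideal speedup when only `parallel_fraction` of the work can be spread
    across `workers` units (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical parallel fractions, chosen only to illustrate the trend
    # when doubling the hardware (for example one die versus two).
    for fraction in (1.0, 0.9, 0.5):
        print(f"parallel fraction {fraction:.1f}: "
              f"{amdahl_speedup(fraction, 2):.2f}x on 2 units")
```

Under these assumptions, doubling the hardware only doubles performance when essentially all of the work can be split, and the return falls off quickly as the serial share grows.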

Flappy Pannus

Veteran

The compute results weren't impressive, but I was impressed with how well the M1 Ultra scaled in SOTT at 4k - almost doubling performance over the Max. The fact that it's basically in 3080 territory at 1440p is also noteworthy imo.

From the Verge's Studio review.

Remember that is running through Rosetta 2.

Remember that no settings were specified in this review from The Verge. What they did mention was that the game was kinda unplayable due to microstutter at every resolution they tried. Also, why didn't they test the 3090 at 4K?

Techpowerup will put this into more context:

https://www.techpowerup.com/293019/...aims-proven-exaggerated-by-mac-studio-reviews

Another thing to remember is that a 3080 system is in quite a different price range than a range-topping 64-core Mac Studio; we're literally in different universes here. I don't know if even being in 3090 class (the GPU Apple compared it to, at low power levels) would be impressive considering the price differences.

Considering the fact that the M1 Ultra has a way bigger GPU (when counting both chips) than anything on desktop, is it really that noteworthy?

I mean, M1 Pro > Max adds almost a full Navi 21's worth of transistors solely for the bigger GPU, and the Ultra has 2 Maxes; it has more transistors for its GPUs than a damn MI250X, which is 2 GPUs too.

When talking MSRP (where we're going again), you could build a system with 2x 3090 (or 2x 3080 Ti if you're not in PAL regions) and a 5950X/128 GB DDR4 for the price of a 64-core Studio. You get like what, 10 times the performance in Blender, probably Arnold, Octane and Renderman as well. And you can game on it..... which some still seem very interested in comparing. NV/AMD GPUs do encoding as well, a wider range at that, just as fast in their native apps (H.265/AV1/XAVC/RAW). A Tiger Lake CPU would probably be faster than the M1 at AV1 work too, and there's CUDA if you wish. Oh and, you're not bound to 5000 MB/s speeds, and you can upgrade, which is kinda important for workstations.

Edit:

Seems someone left out some graphs from that review:

Lmao, what are you smoking. There’s no way, with current prices, to build 2 * 3090, 5950X, and 128GB of RAM (plus storage and cooling) for the price of a Studio.

You’re back to your old tricks again: using Blender and gaming. Why are we not surprised.

And, again, as documented by Andrei F, Geekbench sucks for M1 benchmarks. It doesn’t even scale properly going from M1 to M1 Pro.

EDIT: Trying to even compare M1 Ultra’s media encoding capabilities to anything from Nvidia/AMD is a sad cope. M1 wipes the floor in actual content creation.
