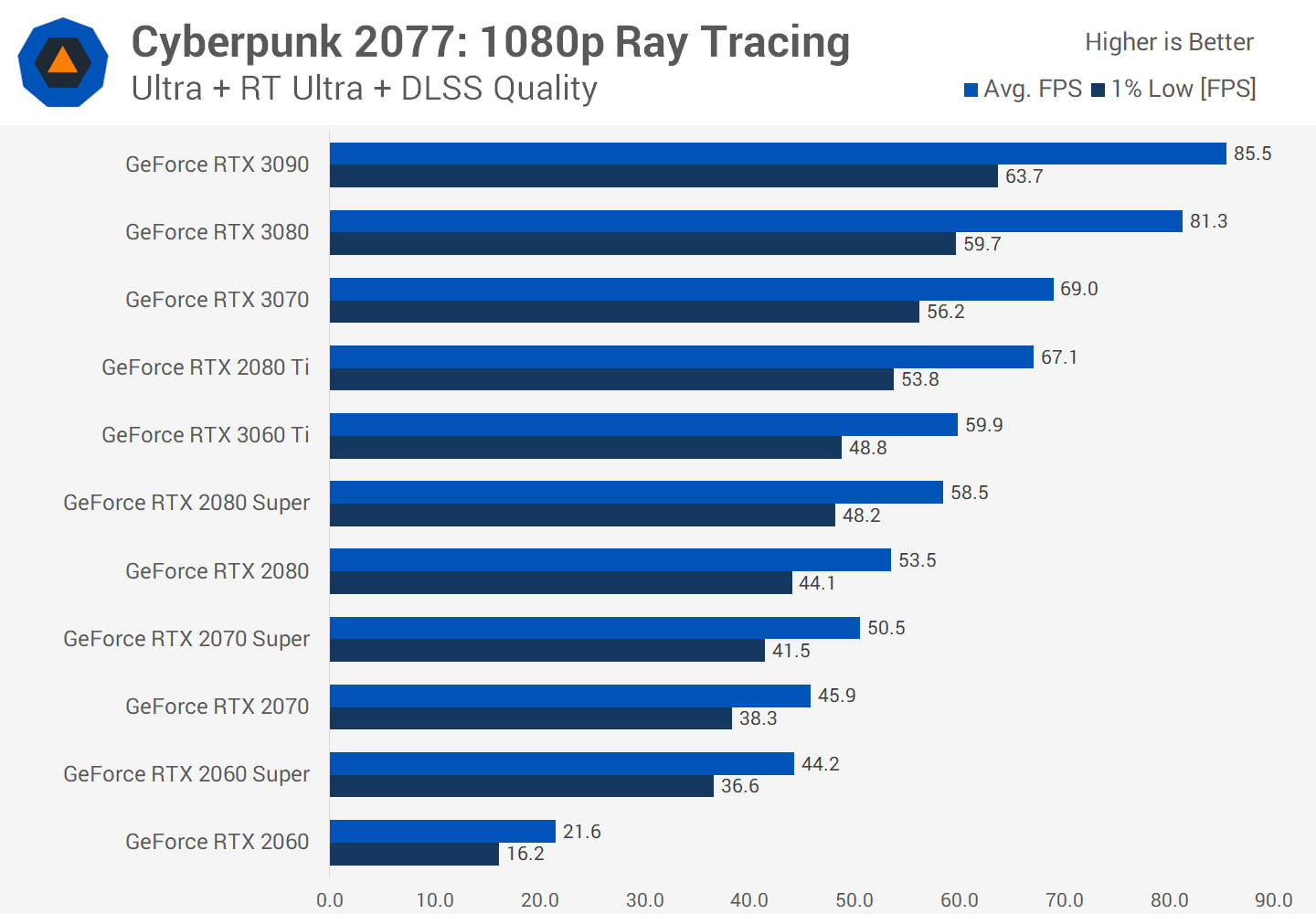

Console and mobile gaming are the common denominator for low-value gaming, and you do not need a PC to game at 30 or 40 FPS. We understand why Angry Birds doesn't need to run at 60 FPS for you to enjoy it, and even on PC certain genres don't need high frame rates; think DOTA versus Cyberpunk. Multiplayer games typically need more FPS, but in a game like DOTA, with its isometric top-down view, aiming is not time-critical. The same goes for single-player games like Cyberpuke, where nothing is critical or matters. The game is saved anyway.

But games with vistas and a first-person view need to be as fluid and unfettered as possible; therefore your in-game movement should never be hindered by hardware. 60 Hz plus 60 FPS is the bare minimum for PC; settle for anything less on a Windows PC and you are just lying to yourself. Get a console or a retro Pi.
