Do you think the PS4's APU will have a unified address space and some kind of L3 cache, or will the GPU have to fetch CPU data from the CPU's L2, and the CPU from the GPU's L2?

Does this sound like Unified Address Space?

This is from a poster on Ars Technica, written a day after the PlayStation Meeting and before VGLeaks posted the info about the GPU (compute, queues and pipelines):

http://arstechnica.com/civis/viewtopic.php?p=23922967#p23922967

Blacken00100 said (posted Thu Feb 21, 2013 1:38 am):

So, a couple of random things I've learned:

-It's not stock x86; there are eight very wide vector engines and some other changes. It's not going to be completely trivial to retarget to it, but it should shut up the morons who were hyperventilating at "OMG! 1.6 JIGGAHURTZ!".

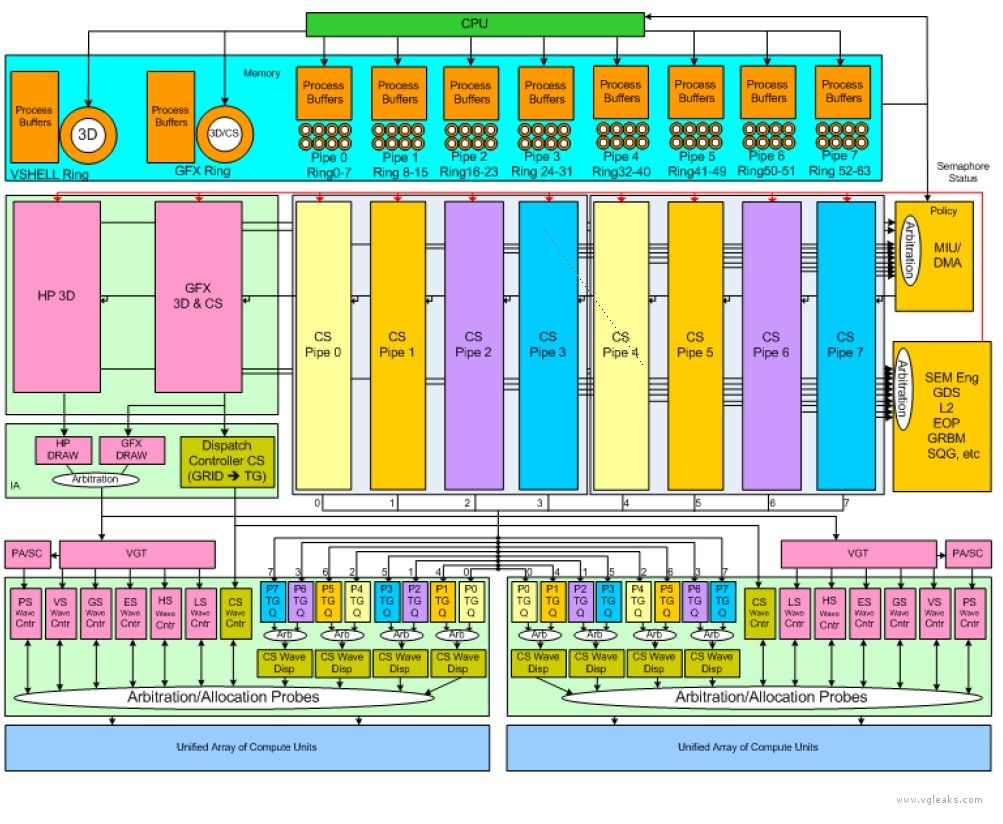

-The memory structure is unified, but weird; it's not like the GPU can just grab arbitrary memory like some people were thinking (rather, it can, but it's slow). They're incorporating another type of shader that can basically read from a ring buffer (supplied in a streaming fashion by the CPU) and write to an output buffer. I don't have all the details, but it seems interesting.

-As near as I'm aware, there's no OpenGL or GLES support on it at all; it's a lower-level library at present. I expect (no proof) this will change because I expect that they'll be trying to make a play for indie games, much as I'm pretty sure Microsoft will be, and trying to get indie developers to go turbo-nerd on low-level GPU programming does not strike me as a winner.
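
To make the ring-buffer point in the quote a bit more concrete, here is a rough Python sketch of the general pattern: a producer (standing in for the CPU) streams items into a fixed-size ring buffer, and a consumer (standing in for that shader type) reads them and appends results to an output buffer. This is only an illustration of the idea. `RingBuffer`, `stream_through` and `kernel` are made-up names, not anything from Sony's library, and the poster himself says he doesn't have all the details.

```python
class RingBuffer:
    """Fixed-capacity FIFO that wraps around (hypothetical, for illustration)."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.capacity = capacity
        self.head = 0   # index of the next slot to read
        self.tail = 0   # index of the next slot to write
        self.count = 0  # number of items currently stored

    def push(self, item):
        # Refuse the write when full; the producer has to wait.
        if self.count == self.capacity:
            return False
        self.slots[self.tail] = item
        self.tail = (self.tail + 1) % self.capacity
        self.count += 1
        return True

    def pop(self):
        # Caller must check count first; popping an empty buffer is an error.
        if self.count == 0:
            raise IndexError("ring buffer is empty")
        item = self.slots[self.head]
        self.head = (self.head + 1) % self.capacity
        self.count -= 1
        return item


def stream_through(data, capacity, kernel):
    """Feed data through a ring buffer and collect kernel(item) in order."""
    ring = RingBuffer(capacity)
    output = []  # stands in for the output buffer
    i = 0
    while i < len(data) or ring.count:
        # "CPU" side: fill the ring until it is full or the data runs out.
        while i < len(data) and ring.push(data[i]):
            i += 1
        # "Shader" side: drain the ring and write results to the output.
        while ring.count:
            output.append(kernel(ring.pop()))
    return output
```

For example, `stream_through([1, 2, 3, 4, 5], 2, lambda x: x * 2)` pushes five items through a two-slot ring and keeps them in order. On real hardware the two sides would run concurrently and need synchronization, which this single-threaded sketch leaves out.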