It depends on the particular ASIC configuration. The understanding that CUs need to be in groups of 4, though, is a misperception. I understand from this that CU counts not divisible by 4 are possible.


AMD: Southern Islands (7*** series) Speculation/ Rumour Thread

- Thread starter UniversalTruth


upnorthsox

Veteran

It depends on the particular ASIC configuration. The understanding that CUs need to be in groups of 4, though, is a misperception.

So then you will have CU's that are not in groups of 4?

Interesting. So does that mean that some ASICs have smaller CU groups (2 or 3 maybe)? Would such chips also potentially scale back the L1I and the shared scalar data cache? Or could you indeed have chips with different CU group sizes even within the same chip (by disabling some CUs)? Or maybe disable one CU within each group?

In any case I'll have to take it back then that those "leaked" specs are impossible (I won't take back though that I think they are completely fake).

Is it possible for the AF improvements to make it to older models, or is it strictly 7 series and up?

The AF improvements are very much a hardware change in how the AF logic works, so unfortunately this is a GCN-specific change.

Obviously I won't yet comment on the particulars of other implementations of GCN in Southern Islands, but it is wise to take the Lensfire stuff with a large grain of...

Understandable, very happy this was addressed, though.

It's just that the GCN presentations seemed to imply that 4 CUs are always grouped together, so the possibility of smaller groups raises some questions. So it's not actually an ASIC-specific question.

So exactly like we knew it was gonna be. The 7990 is the interesting one; oh, and the 780GTX.

It's five days later and I've realised why I have almost no interest in the 7970:

Almost every response when someone comments on the price is: "but it's 20% faster than a 580 for only 10% more". But the problem is it's only 40% faster than a 6970 for 90% more. "Only 10%" when you're already at $500 is a LOT of money.
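The argument here is really about relative performance per dollar. A minimal Python sketch, using only the percentages quoted in the post (no absolute prices or frame rates are assumed; `relative_value` is a hypothetical helper name), shows how the two framings compare:

```python
def relative_value(perf_ratio: float, price_ratio: float) -> float:
    """Performance per dollar of card A relative to card B.

    perf_ratio:  A's performance divided by B's (1.20 means 20% faster).
    price_ratio: A's price divided by B's (1.10 means 10% more expensive).
    A result above 1.0 means A gives more performance per dollar than B.
    """
    return perf_ratio / price_ratio


# Ratios as quoted in the post: 7970 vs GTX 580, and 7970 vs 6970.
vs_580 = relative_value(1.20, 1.10)
vs_6970 = relative_value(1.40, 1.90)
print(f"7970 vs 580:  {vs_580:.3f}")
print(f"7970 vs 6970: {vs_6970:.3f}")
```

With these figures the 7970 comes out roughly 9% ahead of the 580 in performance per dollar, but roughly 26% behind the 6970, which is the poster's point.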


It's 20% faster at 1920×1080. I don't believe that anybody would buy a ~$500 GPU for a ~$150 LCD. At 2560×1600 with 4× AA, the HD 7970 outperforms the GTX 580 by 33% according to ComputerBase.de and by 30% according to Hardware.fr.

Additionally, the HD 7970 3GB isn't more expensive than the GTX 580 3GB. Looking at NewEgg, the cheapest 3GB model of the GTX 580 costs $549. In fact, the HD 7970 offers a 1/3 better price/performance ratio in the enthusiast segment. I can't remember any Nvidia GPU that has offered anything similar since 2006…
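The "1/3 better" figure follows if the two 3GB cards sell at roughly the same price. The post only gives $549 for the cheapest 3GB GTX 580, so the equal HD 7970 price below is an assumption, as is the helper name `price_performance_gain`. A sketch using the 2560×1600 4× AA leads quoted above:

```python
def price_performance_gain(perf_lead: float, price_a: float, price_b: float) -> float:
    """Fractional perf-per-dollar advantage of card A over card B.

    perf_lead: A's performance lead over B (0.33 means 33% faster).
    price_a, price_b: street prices of A and B in the same currency.
    """
    return (1.0 + perf_lead) * price_b / price_a - 1.0


GTX580_3GB_PRICE = 549.0  # cheapest 3GB GTX 580 on NewEgg, per the post
HD7970_PRICE = 549.0      # ASSUMPTION: same price as the 3GB GTX 580

for source, lead in [("ComputerBase.de", 0.33), ("Hardware.fr", 0.30)]:
    gain = price_performance_gain(lead, HD7970_PRICE, GTX580_3GB_PRICE)
    print(f"{source}: {gain:.0%} better price/performance")
```

At equal prices the performance lead carries over unchanged into price/performance; if the 7970 sold for less than the 3GB 580, the gain would grow in proportion to the price ratio.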


Additionally, HD 7970 3GB isn't more expensive than GTX 580 3GB.

From all the reviews I've seen, there is no difference between 1.5GB and 3GB 580s at any resolution. Only a crazy person would buy one, and I refuse therefore to include them in my pricing figures. 1.5GB is the base 580, 3GB is the base 7970.


I honestly don't get the logic when it's really that simple. It's like they want to compare the 7970 vs the 580 1.5GB price-wise, then compare the 7970 to the 580 3GB performance-wise.

I've seen this "reference" made more than once in a few other threads. It's like no one is supposed to catch on to that.

As for the 7970 vs 6970 in price, it's clear that the 6970 is now a mid-range card. You simply can no longer make price comparisons, as the performance gap is too great.


That's because you're looking at 1920x1080 again. At higher resolutions the 3GB does make a difference, especially in multi-monitor setups. And while multi-monitor setups are not common, I bet they are a lot more prevalent among people willing to drop $500+ on a video card.

I honestly don't get the logic when it's really that simple.

Simple? Yet you've gone and missed the point completely...

It's like they want to compare the 7970 vs 580 1.5gig price wise then compare the 7970 to the 580 3gig performance wise.

See? There is no perf difference between a 1.5GB and a 3GB 580. Paying the extra gets you no extra performance; it's simply bragging rights. Anyone who tries to stack a 3GB 580 against a 7970 is just weighting the deck in AMD's favour more than is necessary.


Sorry, but a 30-40% difference is not "too great". I expect that from a refresh, not a brand new card.


Given that I run multiple 30" monitors, I hardly ever look at 1920x1080 (eugh, don't get me started on that console-itis resolution). I'm *ALL* about the 2560x1600.

Game (2560x1600 AND 8x FSAA):

Benchmark (2560x1600 AND 4xFSAA and Tess):

EDIT: Don't let me stop at just one site's review:

Zero difference.

Congratulations for cherry picking two graphs where 3GB doesn't beat overclocking.

http://images.hardwarecanucks.com/image//skymtl/GPU/HD7970/HD7970-55.jpg

yawn


As someone who owns a 580 Lightning XE (the 3GB version) and coincidentally knows a thing or two about the cards, I can assure you that if you want to run Heaven on "multiple 30" monitors", you don't want to do that on 1.5GB cards.

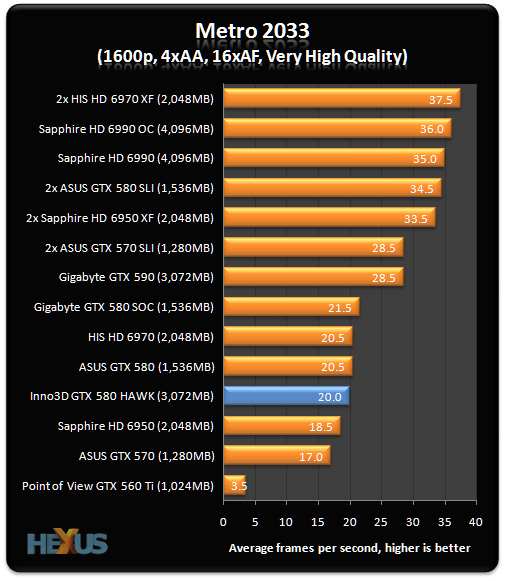

since you were so nice to show the Metro benches from Hexus, why don't you show the Metro benches from HardwareCanucks?

Yes, score 0 for the 1.5GB version. Unigine with any multi-monitor setup will give you ~30% higher FPS on a 3GB card versus a 1.5GB one.

Edit: here is the more detailed graph for Shogun 2. If the average FPS with 1.5GB equals the minimum FPS with 3GB, give me the 3GB card, please.

I didn't cherry pick, I went to google and found the top two or three reviews from a "GTX 580 3GB" search.

http://www.hardwarecanucks.com/foru...ws/44390-evga-geforce-gtx-580-3gb-review.html

Not one result there shows an increase. And talking of cherry picking, of the whole review you linked a graph from, only Shogun and BF3 show more than a 1fps or so increase.


Cool, 10fps, that's playable...

Can't we just agree that the 3GB on a 580 makes *NO* games that weren't playable, playable. There is no magic 40fps -> 60fps. The difference is either <1%, or pointless. Either way, the price of the 3GB isn't justified (as in: to compare with a 7970).


Can't we just agree that you're wrong?

The advantage of 3GB will become more apparent when the 1.5 GB SI cards start shipping and there's some eyefinity comparison testing.
