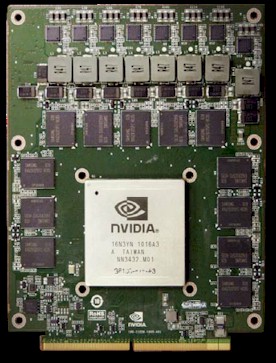

I don't think so. The X2090 is a card that come without any fan and so on, but it still has a slot and is obviously screwed to the mainboard.I think the GPU is soldered onto the motherboard in this case.

That's a Tesla X2090:

And that's a XK6 board with 4 of them at the right side:

I see slots there.