
Xbox One: DirectX 11.1+ AMD GPU, PS4: DirectX 11.2+ AMD GPU. What's the difference?

- Thread starter onQ


They are both DX 11.2+ capable.

Yeah, I saw it reported that the AMD HD7000 cards were getting a driver update to make them DX11.2 capable, but I was wondering whether there was a difference between DX11.1 and DX11.2 hardware.

No difference in GPU abilities, aside from the PS4 being 50% more powerful.

AMD stated that GCN is capable of DX11.2 and that it would be available via a Windows driver update.

I'm pretty sure Sony can update its Unix drivers and tools to feature parity if needed.

Um. You must have missed an upclock as it is now 39% more

Or One being 29% less, depending on how you want to look at it.

Actually, it's 40.679953107% more powerful:

768 x 2 x 853 = 1310208 (1310.2 GFLOPS)

1152 x 2 x 800 = 1843200 ( 1843.2 GFLOPS)

1310208 + 40.679953107% = 1843200

but who's counting?
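The same arithmetic as a small Python check. It assumes the usual peak-FLOPS convention of one fused multiply-add (2 FLOPs) per shader ALU per clock; 768 and 1152 are the ALU counts used above (12 and 18 GCN compute units of 64 lanes each), and the clocks are in MHz. It also shows why "40.7% more" and "29% less" describe the same gap.

```python
def peak_gflops(shader_alus: int, clock_mhz: float) -> float:
    """Peak single-precision GFLOPS, counting one FMA (2 FLOPs) per ALU per clock."""
    return shader_alus * 2 * clock_mhz / 1000.0

xb1 = peak_gflops(768, 853)    # 12 CUs x 64 ALUs at 853 MHz
ps4 = peak_gflops(1152, 800)   # 18 CUs x 64 ALUs at 800 MHz

more = (ps4 / xb1 - 1) * 100   # PS4 measured against XB1
less = (1 - xb1 / ps4) * 100   # XB1 measured against PS4
print(f"XB1 {xb1:.1f} GFLOPS, PS4 {ps4:.1f} GFLOPS")
print(f"PS4 is {more:.2f}% more; XB1 is {less:.2f}% less")
```

This prints `XB1 1310.2 GFLOPS, PS4 1843.2 GFLOPS` and `PS4 is 40.68% more; XB1 is 28.92% less`: same two numbers, different baseline.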

Bigus Dickus

Regular

Or more importantly, "powerful" encompasses a lot more than compute unit count. There may also be other areas where PS4 has an advantage, and still other areas where XB1 has an advantage. Even if "all other things were equal", 50% more CUs wouldn't mean 50% (now 40%) more powerful in many or most typical real-world uses. But other things aren't even equal, so making such a sweeping simplification simply isn't warranted.

Everyone abhors car analogies, so here's an extreme one... you somehow shove a V12 engine into a 1970s estate wagon that weighs 3 tons and race it against a V8 Ferrari. What could you say about more power? Are all other things equal? And say that, for the engines at least, they were... 800 bhp vs 600 bhp. What could you say about real-world performance?

And no, I'm not implying the XB1 is a Ferrari or the PS4 is a station wagon. Just making what should already be an obvious point about using one spec to compare. PS4 could turn out to have a GPU greater than 40% more powerful. Or less.
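One standard way to make the "more CUs is not proportionally more powerful" point concrete is the roofline model: a workload's attainable throughput is the lesser of peak compute and memory bandwidth times its arithmetic intensity (FLOPs per byte fetched). The sketch below uses made-up numbers for two hypothetical GPUs, not XB1 or PS4 figures, where B has 50% more compute than A but the same bandwidth. It deliberately ignores everything else (triangle setup, ROPs, caches, latency), which only widens the gap between spec sheet and real-world results.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic, whichever binds first."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)

# Hypothetical GPUs: B has 50% more compute than A but identical memory bandwidth.
gpu_a = dict(peak_gflops=1000.0, bandwidth_gbs=100.0)
gpu_b = dict(peak_gflops=1500.0, bandwidth_gbs=100.0)

for intensity in (4.0, 12.0, 20.0):  # FLOPs performed per byte fetched
    a = attainable_gflops(flops_per_byte=intensity, **gpu_a)
    b = attainable_gflops(flops_per_byte=intensity, **gpu_b)
    print(f"{intensity:>4} FLOP/byte: B/A = {b / a:.2f}x")
```

With these numbers B is 1.00x, 1.20x and 1.50x as fast as A: only a purely compute-bound workload sees the full 50%.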


Aside from a 10 gigaflop audio chip, there is not a single known specification in which the XBone exceeds the PS4. Is this correct? And no, "transistor count" or "# of Kinects included in the BOM" don't count as specifications.

They are both DX 11.2+ capable.

By that logic, a GeForce2 MX is DX9 capable.

Dominik D

Regular

X1 GPU specs have nothing to do with the HD7000. X1 was initially reported as 11.1+ because 11.2 hadn't been announced back then. It's 11.2+, just like PS4's GPU. As for the feature set exposed in 11.2 that wasn't there in 11.1: mostly fast presents (cutting present latency) and tiled resources (resources only partially resident in GPU memory, useful for things like streaming in general or megatextures specifically).
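To illustrate what "partially resident" means, here is a toy Python model of a tiled texture: its address range is split into 64 KB tiles (the tile size Direct3D 11.2 tiled resources use), and only tiles that have been mapped to memory are actually backed. The class and its methods are hypothetical and are not the Direct3D API; the sketch assumes 4-byte texels, so each 64 KB tile covers 128 x 128 texels.

```python
TILE_W = TILE_H = 128  # 64 KB tile of 4-byte texels: 128 * 128 * 4 = 65536 bytes

class TiledTexture:
    """Toy model of a partially resident texture; not the Direct3D API."""

    def __init__(self, width: int, height: int):
        self.tiles_x = -(-width // TILE_W)   # ceiling division
        self.tiles_y = -(-height // TILE_H)
        self.resident = set()                # (tx, ty) tiles currently backed by memory

    def map_tile(self, tx: int, ty: int) -> None:
        self.resident.add((tx, ty))          # e.g. tile streamed in from disk

    def unmap_tile(self, tx: int, ty: int) -> None:
        self.resident.discard((tx, ty))      # memory returned to the pool

    def is_resident(self, x: int, y: int) -> bool:
        return (x // TILE_W, y // TILE_H) in self.resident

    def resident_bytes(self) -> int:
        return len(self.resident) * TILE_W * TILE_H * 4

tex = TiledTexture(16384, 16384)  # 1 GiB if it were fully resident
tex.map_tile(0, 0)
tex.map_tile(1, 0)
print(tex.tiles_x * tex.tiles_y, tex.resident_bytes(), tex.is_resident(200, 5), tex.is_resident(300, 5))
```

This prints `16384 131072 True False`: the texture spans 16384 tiles, but only two (128 KiB) are backed. A streaming system would map tiles as they come into view and unmap them as they leave, which is the megatexture use case.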

Dominik D

Regular

You're obviously new here, so here's a hint: don't try to be snarky if your arguments are about numbers and not deeper knowledge of the hardware. Especially if your generalizations are incorrect from the get-go: there is one "X1 specification" that exceeds PS4 - embedded RAM. Perhaps others, perhaps not. But all this is irrelevant: hardware is not a set of ticks on a box and values that you compare 1:1. There are significant architectural wins in both PS4 and X1 designs that will be beneficial in certain scenarios. It's always like that.

Except that 11.2 features, compared to 11.1, have little to do with HW and a lot to do with how you access it / what you expose.

By that logic, a GeForce2 MX is DX9 capable. I mean, I did install compatible DX9 drivers when they came out.

Look, don't be a jerk. It won't get you anywhere on these forums except onto the list of users who have no posting rights.

As explained, the hardware is 11.2+. There is no gimmick with driver levels as you would hope to imply.

...there is one "X1 specification" that exceeds PS4 - embedded RAM....

I don't want to sound snarky, but that is like saying a person who only has one leg exceeds a healthy person just because the disabled person has one titanium leg, whereas the healthy person just has regular bone.

While titanium is much stronger than bone, it takes more time to learn how to walk on it, it takes special care as well and, in general, it's just a big hassle compared to just having two real legs. Also, the one-titanium-leg person will produce fewer erythrocytes, though he will have a tactical advantage when flying a fighter jet under extreme g-forces, but that is probably thinking about it in too much detail.

The ESRAM is merely a patch, nothing more. And most certainly not an advantage, sorry.


OK, sorry for my mistake. To be honest, I didn't check the 11.2+ specification; I merely expected it to be in line with other MS PR specifications.

I understand now that both Windows 8.1 and the new Xbox have hardware support for 11.2+ functions.

Aside from a 10 gigaflop audio chip, there is not a single known specification in which the XBone exceeds the PS4. Is this correct? And no, "transistor count" or "# of Kinects included in the BOM" don't count as specifications.

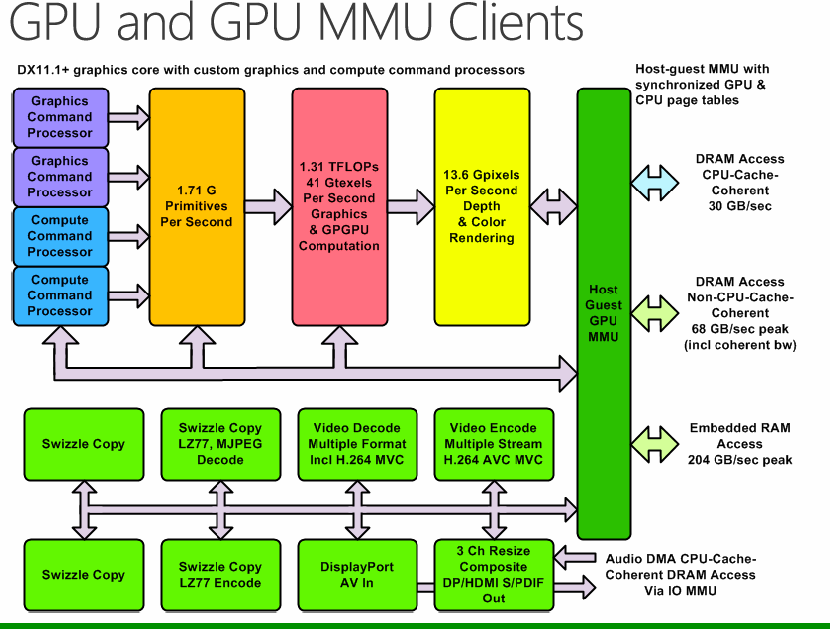

Peak BW. Peak bandwidth per FLOP is over double on the Xbone: ((204 + 68)/1.31) >> (176/1.84).

Also a minor ~7% edge in triangle setup rate due to the 853 MHz GPU clock.
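The arithmetic behind that post as a short Python check, with bandwidth in GB/s and compute in TFLOPS. It sums the ESRAM and DDR3 peaks exactly as the post does, which gives an upper bound since the two pools are not interchangeable, and it assumes both GPUs set up the same number of triangles per clock, so the setup edge is simply the clock ratio.

```python
# Peak figures as quoted in the post.
xb1_bw = 204 + 68                  # GB/s: ESRAM peak + DDR3, summed as in the post
ps4_bw = 176                       # GB/s: GDDR5
xb1_tflops, ps4_tflops = 1.31, 1.84

xb1_ratio = xb1_bw / xb1_tflops    # GB/s per TFLOPS
ps4_ratio = ps4_bw / ps4_tflops
print(f"XB1 {xb1_ratio:.0f}, PS4 {ps4_ratio:.0f}, ratio {xb1_ratio / ps4_ratio:.2f}x")

# Assumes equal primitives per clock, so setup rate scales with GPU clock alone.
setup_edge = (853 / 800 - 1) * 100
print(f"setup edge: {setup_edge:.1f}%")
```

The first line prints `XB1 208, PS4 96, ratio 2.17x`, i.e. "over double"; the setup edge comes out at roughly 6.6%, which the post rounds to ~7%.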

Dominik D

Regular

It is an obvious advantage in certain scenarios. Anyone who's programmed graphics understands that. Most renderers these days are deferred in some way, and they will benefit from ESRAM. And since it's behind the MMU, there isn't much one has to do to use it: you map it where you need it in the GPU's virtual address space and you reap the benefits of faster G-buffer access.
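A toy model of the "behind the MMU" point: the renderer keeps using the same GPU virtual addresses, and only the page table decides whether a page is backed by ESRAM or DRAM. Everything here (the class, the 64 KB page size, the addresses) is hypothetical and is not the Xbox One's actual memory API.

```python
PAGE = 64 * 1024  # hypothetical page size for this sketch

class GpuAddressSpace:
    """Toy GPU page table: each virtual page points into either ESRAM or DRAM."""

    def __init__(self):
        self.pages = {}  # virtual page number -> (pool name, physical page number)

    def map(self, va: int, size: int, pool: str, phys_page: int) -> None:
        first = va // PAGE
        for i in range(-(-size // PAGE)):           # ceiling division: pages covered
            self.pages[first + i] = (pool, phys_page + i)

    def translate(self, va: int) -> tuple:
        pool, phys_page = self.pages[va // PAGE]    # KeyError stands in for a page fault
        return pool, phys_page * PAGE + va % PAGE

gpu = GpuAddressSpace()
GBUFFER_VA, SHADOW_VA = 0x1000_0000, 0x2000_0000    # hypothetical virtual addresses
gpu.map(GBUFFER_VA, 8 * 1024 * 1024, "esram", 0)     # hot G-buffer target placed in ESRAM
gpu.map(SHADOW_VA, 16 * 1024 * 1024, "dram", 4096)   # everything else stays in DRAM
print(gpu.translate(GBUFFER_VA + 100))               # ('esram', 100)
print(gpu.translate(SHADOW_VA + PAGE + 8))           # ('dram', 268501000)
```

In this model, moving a render target between ESRAM and DRAM is just a remap; the shaders that read the G-buffer keep using the same addresses.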

How is DirectX 11.2 a PR specification, exactly?

...Anyone who's programmed graphics understands that. ...

How is DirectX 11.2 a PR specification exactly?

In this case, if you are to be believed, 11.2+ is not a PR specification; the rest of the specifications, like "40 times the power in the cloud!", "let's add up all the individual bandwidths and present them as actual bandwidth!" and "whoops, turned out we invented memory that is capable of reading and writing at the same time!", are PR specifications. They won't explain (read very carefully) come November 2013 why Watch Dogs runs at a much lower resolution and without bokeh. (Hint: bookmark this post ;-) )

Anyone who's programmed graphics understands that

You are probably thinking of ESRAM added to complement the main graphics memory.

In this case the main graphics memory is severely underpowered, so the ESRAM is there to offset the disadvantage of not using fast graphics memory.

But I would love to see an example supporting your claim, considering that the ESRAM-less solution has about 300% of the memory bandwidth in comparison.


This is not GAF or some similar place. Either you start contributing something more worthwhile than console cheerleading / porridge pissing, or be on your way (for the latter case we will help with the trip arrangements). Whilst I am aware that the console forums here are something of a quagmire to begin with, even that pit has a bottom.

Dominik D

Regular

Look, the computing cores are probably almost identical, if not identical, in both X1 and PS4, and the general feature set is definitely a close match in both cases (multitasking, 3D and compute, tessellation and everything one would expect from a modern GPU). Both are 11.2 for sure. Those pluses usually mean some extra stuff that's not exposed through regular 11.2 interfaces. Stuff like mappable SRAM would be that. Some new texture compression format would be in the "+" space. Custom filtering for, say, shadows' PCF would be in the "+" space. It's not PR, it's "these are 11.2 GPUs with some additional/custom stuff we can't talk too much about". Some changes that happened recently were well grounded in math: if you bump up the core speed, you get extra power. The memory controller case is curious but not impossible - driver code* tends to abuse HW design in many cases. You do this whenever you can to gain some advantage. Programmers have done this forever - take the C64 and sprites on the screen border. This has nothing to do with PR, but I'm pretty sure that you wouldn't have a clue how HW is being exploited even if you got all the technical details on a silver platter. If it's PR for you - fine. But being ignorant in the "Console Technology" section is something that won't get you far around here.

* consoles have no drivers; they have a thin code layer that mostly hides HW issues from the game developers and exposes nice and familiar interfaces to them

