Is Nvidia collecting such data as what web pages you browse, documents you view etc?

It's insane to me that people expect drivers and devices to work properly in their PCs... but they aren't willing to allow the manufacturers to collect information about how they are used and what went wrong when things stopped working...

Stop bitching about issues if you're not willing to send the information that would allow them to figure out how to fix them!

I understand not wanting to have to log in to an account... But when it comes to solving issues in games and other programs... as well as understanding which components are potentially conflicting... you've got to be willing to provide some information... otherwise they're searching for a needle in a haystack.

The Intel Execution in [2023]

- Thread starter Wesker

- Status

- Not open for further replies.

A person with time and know-how could mod it in, from my understanding. FSR is included now, but DLSS is not. XeSS does not seem to be included in it at this point. I saw that Portal RTX needs to have a launch flag set initially to make it work well. I thought there might be things like that, or just settings that were recommended. I don't have the time I used to now, so I thought I'd try and rely on others.

Does it support XeSS or can it be modded in?

Probably. They all do one way or another.

Is Nvidia collecting such data as what web pages you browse, documents you view etc?

Did you get it for experimental purposes or as your main GPU? I am quite happy with it, the performance with RT on is quite good overall, and while I have a 4K TV, I usually prefer to play on my good ol' 1080p TV from 2013.

Well I just got an A770 16GB installed. It will be fun to see how it works. Anyone know (like Cyan) if there is anything specific I should do to get Quake 2 RTX to work better? I installed it and it works at least. When I searched the web it pulled up a thread in the closed architecture forum talking about how badly it worked. It was getting about 39fps though. Not great but playable.

Are you using FSR2 to play Quake 2 RTX? I have the game and I got a perfectly locked 60fps framerate on a 4K display, 'cos the game also has a feature to upscale the game to run at a certain framerate regardless of how low it must go resolution-wise.

The resolution isn't that great on a modern 4K TV with all the bells and whistles of current TVs, but on my 1080p TV it looks fine and, more than anything, the image quality is like real life when it comes to lighting.
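For anyone curious how a "hold this framerate, drop resolution as needed" feature can work in principle, here is a minimal sketch of a dynamic resolution controller. It is not taken from Quake 2 RTX's source; the function name `next_render_scale`, the `damping` parameter, and the assumption that GPU frame time scales with pixel count are all mine, purely for illustration.

```python
def next_render_scale(scale, frame_ms, target_fps=60.0,
                      min_scale=0.25, max_scale=1.0, damping=0.5):
    """Pick the render scale (fraction of output width/height) for the next frame.

    Hypothetical helper: assumes frame time is proportional to the number
    of rendered pixels, i.e. to scale squared.
    """
    target_ms = 1000.0 / target_fps
    # If cost ~ scale^2, the scale that would hit the target changes with
    # the square root of (target time / measured time).
    ideal = scale * (target_ms / frame_ms) ** 0.5
    # Move only part of the way towards the ideal to avoid oscillating.
    new_scale = scale + damping * (ideal - scale)
    # Never go below the floor or above native resolution.
    return max(min_scale, min(max_scale, new_scale))


if __name__ == "__main__":
    # Toy loop: pretend a native-resolution frame costs 40 ms (25 fps).
    scale = 1.0
    for _ in range(10):
        frame_ms = 40.0 * scale * scale  # assumed cost model
        scale = next_render_scale(scale, frame_ms)
    print(f"render scale settles near {scale:.2f}")
```

The upscaler (FSR, XeSS, or a plain bilinear stretch) then takes the reduced-resolution frame up to the display resolution, which is why the result can look soft on a 4K panel while holding the target framerate.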

As for FSR2, maybe you could try the Intel XeSS Unlocker and even add the new XeSS 1.2 DLL file.

Intel XeSS Unlocker for all GPUs including AMD Radeon

Unlock Intel XeSS Super Sampling for Marvel's Spider-Man Remastered.

Thanks for your help Cyan. I'll give it a shot. I was trying to install Stable Diffusion today and the BAT files included with the openvinotoolkit/stable-diffusion-webui repo (github.com) seem to be messed up. I figured some stuff out and then rewrote them and maybe they worked, but then I was out of time to mess with them. I might have done better just only doing command line stuff, but it seemed people liked the webgui thing.

Installation on Intel Silicon

Stable Diffusion web UI. Contribute to openvinotoolkit/stable-diffusion-webui development by creating an account on GitHub.

Okay. In any case welcome to the Intel resistance.

Intel cancels $5.4bn Tower Semiconductor acquisition

Intel will pay a $353m (£277m) termination fee to the Israeli chip manufacturer after the merger failed to obtain regulatory approval from China.

Just a rumor, but if true, it sounds like Intel 4 is still quite immature and Intel hasn't built much scale around it yet. Them being so late to acquire EUV machines might be hurting.

I'm quite convinced that there's basically no chance that 20A/Arrow Lake releases next year. Intel desktop parts are likely going to face something of a 'Skylake++++' problem until they can actually ramp stuff up properly.

Also means Intel GPUs are almost definitely gonna be TSMC for the foreseeable future.

https://wccftech.com/intel-disclose...sed-on-risc-architecture-66-threads-per-core/

During Hot Chips 2023, Intel showed off a brand new CPU design featuring 8 cores but a massive 528 threads based on RISC.

Intel's 8 Core & 528 Thread CPU Can Provide Insane Parallelism & Multi-Threaded Capabilities

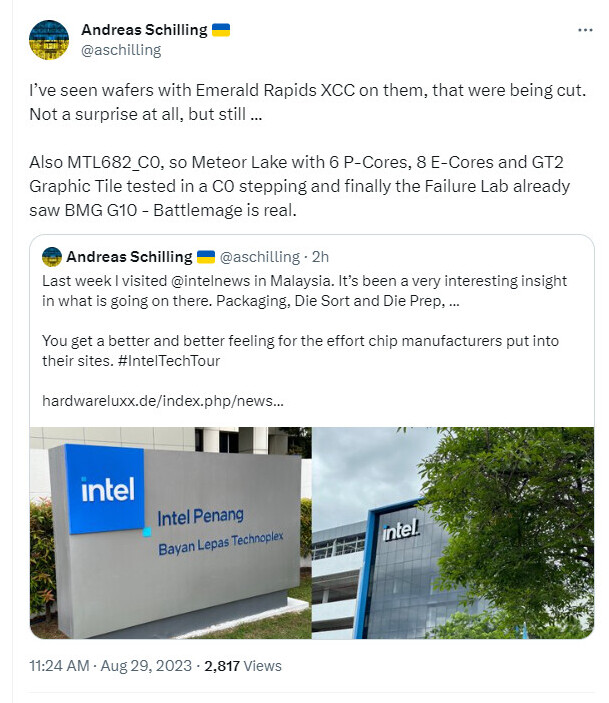

Battlemage BMG-10 rumours:

Deleted member 2197

Guest

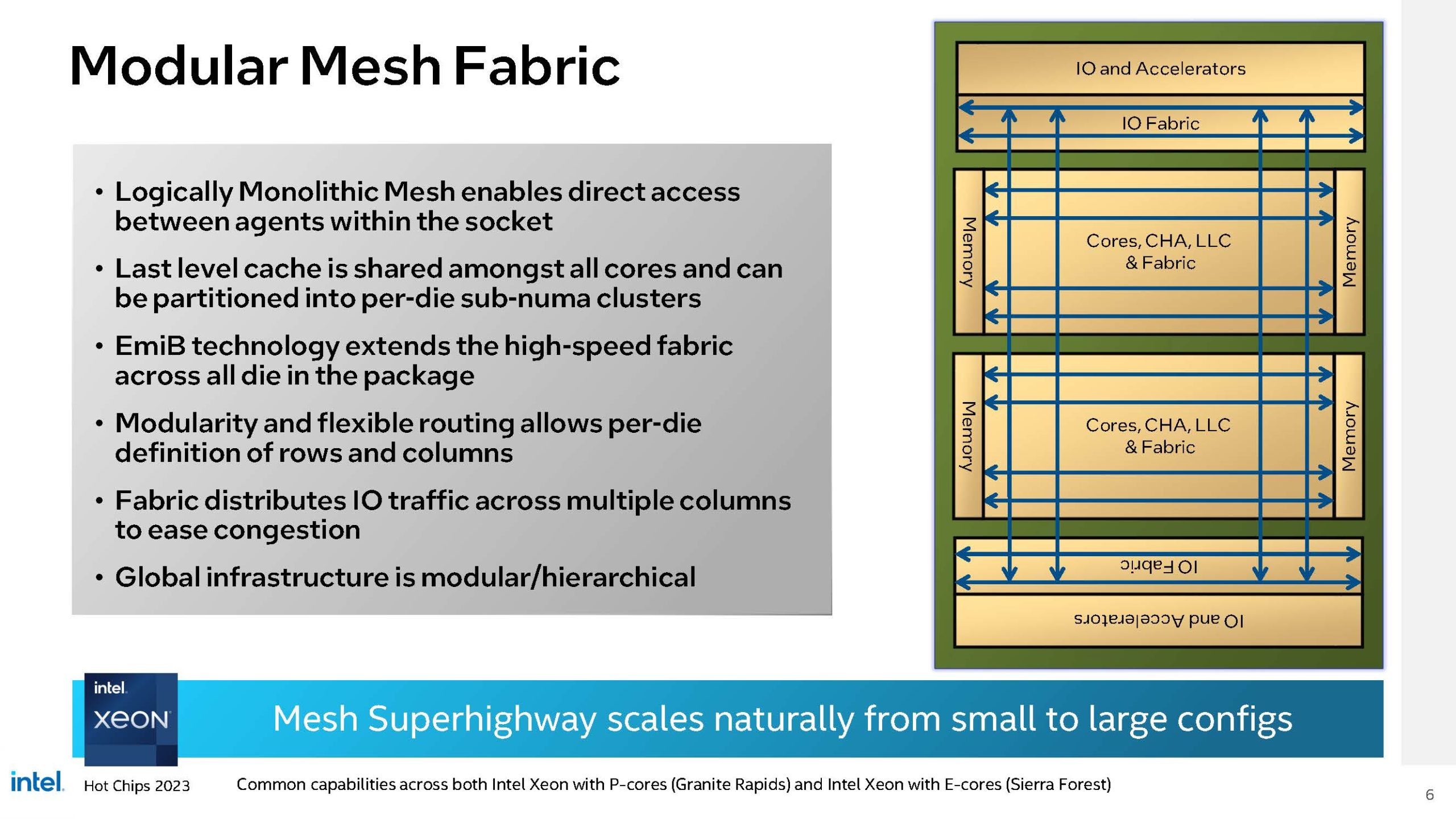

Intel on Changing its Xeon CPU Architecture at Hot Chips 2023

Intel detailed how it is completely changing its Xeon CPU architecture at Hot Chips 2023 in designs for Granite Rapids and Sierra Forest CPUs

Intel Arc Alive & Well for Next-gen - Battlemage GPU Spotted During Malaysia Lab Tour

HardwareLuxx's editor, Andreas Schilling, was invited by Intel Tech Tour to attend a recent press event at the company's manufacturing facility and test labs in Malaysia. Invited media representatives were allowed to observe ongoing work on next generation client and data center-oriented...

I wonder how much of that is because Intel is (intending to) ramp so many different processes in close succession, and if the production lines are sufficiently different, it might just not make financial sense to ramp Intel 4 very much if they can't amortise it over enough years/units.

As far as I can tell, Intel 4 and Intel 20A are the "full nodes" while Intel 3 and Intel 18A are the "half nodes". So, I would expect Intel 4 lines to be convertible (at a cost) to Intel 3, but the changes in 20A are so big I doubt that would be an easy/cheap upgrade. At this point, "Intel 4" is just Meteor Lake and some networking chips, while "Intel 3" is just Sierra Forest and Granite Rapids (both server chips). Now that Meteor Lake is notebook-only, I suspect the latter are meant to be much higher wafer volume; server is typically lower unit volume, but they are very big chips, and together I think they are basically replacing Intel's entire server lineup without any update for Granite Rapids until 2025. But the peak volume for Intel 4 will happen after Intel 3 has already significantly ramped up which is an unusual situation for Intel.

I agree this means consumer Intel GPUs are going to stay at TSMC for a long time though. I really can't see them switching to their own fabs until 18A, and I suspect that would be for "Celestial", which is 2026, so far behind other Intel products. That includes the 2025 Falcon Shores datacenter GPU, which is probably 18A and a very important product for Intel, as it is also meant to replace Gaudi 3 on TSMC N5 for AI datacenter acceleration. I personally think Gaudi is a very decent architecture, but it's not as programmable or flexible as a GPU, so I can see why they'd want to unify everything to Falcon Shores rather than keep iterating on it.

Alternatively, maybe things really are bad with their yields/ramp/etc... it's always hard to tell with Intel, but I feel like this time there's a reasonable alternative explanation at least.

That's a weird/interesting focus given the really exciting part, from my perspective, is the photonics mesh network. Intel has been on the bleeding edge of photonics for a long time (although they are using a TSMC process for the silicon part here, the photonics tech is presumably theirs) but they have done a hilariously bad job of productising it. The processors here are interesting as well, specifically the part where they can switch from the very slow barrel-threaded threads/cores (IPC of 1 instruction every 8 clocks?) to the fast single-threaded threads/cores dynamically, and optimising for 8B DDR5 fetches is interesting if they've managed to make it efficient enough, but the photonics is the really important part.

I think this kind of tech could have an incredible impact on datacenters. So much of the silicon and complexity even in large H100 installations is the networking/interconnects (I was originally skeptical of NVIDIA's Mellanox acquisition but in retrospect it was genius) and this basically provides distance-independent communication latency across a large number of chips, which is really exciting. In the Hot Chips Q&A, they were saying the vast majority of the latency is the conversion from/to optical to/from electrical and the on-chip latency, so it is practically distance independent at up to (at least) multiple meters. There are a lot of missing details but it feels very promising.

It would be incredible if they were able to make use of this for Falcon Shores but realistically this is Intel so I'm not holding my breath...

Yeah, a massively threaded CPU was tried quite some time ago by Sun. It's an interesting idea but there were two main limiting factors: (lack of) memory bandwidth and, well, single-threaded performance is still important. In a way, GPGPU is also massively threaded, but GPUs are of course less flexible.

I guess this new CPU is either just a tech demo for showcasing the photonics (which should be able to provide a good interconnect for such a design), or has some specific design target (e.g. network processors).
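To make the barrel-threading trade-off above a bit more concrete, here is a toy model. It is not Intel's actual design: the function `simulate_barrel_core`, the strict round-robin issue policy, and the 8-cycle `load_latency` (borrowed from the "1 instruction every 8 clocks?" guess earlier in the thread) are assumptions for illustration only. The only figures taken from the article are 8 cores and 528 threads.

```python
def simulate_barrel_core(num_threads, cycles, load_latency):
    """Count instructions issued by a toy strict round-robin barrel core.

    Assumed model: each cycle belongs to one hardware thread in turn, every
    instruction is a load, and a thread cannot issue again until its
    previous load has completed (load_latency cycles later).
    """
    ready_at = [0] * num_threads  # cycle at which each thread may issue again
    issued = 0
    for cycle in range(cycles):
        thread = cycle % num_threads  # whose slot is it this cycle?
        if ready_at[thread] <= cycle:
            issued += 1
            ready_at[thread] = cycle + load_latency
        # otherwise the slot is wasted (a pipeline bubble)
    return issued


if __name__ == "__main__":
    # Figures quoted in the article: 528 threads across 8 cores.
    print("threads per core:", 528 // 8)

    for n in (1, 4, 8):
        total = simulate_barrel_core(num_threads=n, cycles=800, load_latency=8)
        print(f"{n} thread(s): {total} instructions in 800 cycles, "
              f"{total / n:.0f} per thread")
```

In this model each individual thread never gets faster than one instruction per 8 cycles, which is the poor single-threaded performance mentioned above, but with enough threads the core as a whole stops wasting slots while loads are in flight. That only pays off if the memory system can actually keep that many outstanding requests fed, which is the bandwidth limit Sun ran into.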

https://wccftech.com/intel-core-ult...ormance-on-par-core-i9-13900h/

https://wccftech.com/intel-1st-gen-...u-specs-leak-core-ultra-9-185h-up-to-5-1-ghz/

Intel Shows Close-Ups of Next-Gen Granite Rapids Xeon & Meteor Lake Client CPUs Using Advanced Packaging Tech

The Intel Core Ultra 7 155H "Meteor Lake" CPU came out just as fast as the Core i9-13900H and even outperformed the AMD Ryzen 9 7940HS. The CPU also ended up almost on par with AMD's Ryzen 7 7745HX CPU, which is a 45-75W TDP CPU.

Intel 1st Gen Core Ultra “Meteor Lake” CPU Specs Leak: Core Ultra 9 185H Up To 5.1 GHz

I'm gonna use this soapbox to do a quick rant about why I hate Intel's chiplet approach:

They are gonna need SO MANY different dies. They are disaggregating so much, and clearly the intent is to try and have some sort of mix-and-match thing going on, but this is never really gonna work, cuz of the constantly varying needs of every separate part. Not to mention that they're gonna need to have varying packaging sizes, including the critical and probably not cheap base interposer (or whatever Intel is calling it) die, anytime they want to provide some larger range of products.

And they're gonna struggle with this each and every generation. It would probably be less of an issue if most of these dies were being homegrown, but the fact that they have to produce so many on TSMC means very high design and manufacturing costs for each and every die.

Intel is racing ahead towards 'process leadership' like a lead-footed drunk behind the wheel. Maybe they get where they're going, but in what shape will they be when they get there? And it seems equally likely they crash somewhere along the way cuz they're betting the farm on everything working out, so I doubt they really have much in the way of contingency plans.

Just scares me. I know there are counterarguments for how this might well work out great, and I definitely want to believe so, but man.

DegustatoR

Legend

Intel Xe2 "Gen20" GPU architecture appears in Linux Mesa drivers patches - VideoCardz.com

First Xe2 architecture patches for open-source Mesa. After initial inclusion of the Xe2 architecture reference in Intel GPU driver support last month, Intel engineers have now commenced their work on Mesa support. The Xe2 architecture, also known as Battlemage, is the forthcoming architecture...

https://www.hardwaretimes.com/hyper...ore-efficient-than-smt-on-amd-ryzen-cpus-but/

Hyper-Threading on Intel is More Efficient than SMT on AMD Ryzen CPUs: But Ryzen chips see more performance uplift with SMT enabled
