A CRT would show the same. But yeah, 60fps/Hz is where it's at. The most noticeable for me is 60fps 2D games like Rayman/Child of Light, games which are constantly panning high contrast images across the screen.

(...which creates ghost images in your vision for the same reason that sample-and-hold creates motion blur)

The Great Framerate Non-Debate

- Thread starter brunogm

- Start date

Deleted member 86764

Guest

This is crazy! The best thing about TR was the nice feeling when navigating the environments. Also, what do color levels have to do with the frame rate?

I agree completely. Suggesting that the framerate was off-putting is bonkers. The game looked great.

L. Scofield

Veteran

I was running some game trailers with motion interpolation and one game that stood out is FFXV. The combination of PBR and a (pretty much) perfect implementation of HDR lighting (best of any game I've seen so far) really makes the scenery look real (and the characters sometimes). At 60fps it looks like real-life footage. Fast character and camera motions help in hiding any possible flaws.

To be honest: Tomb Raider 2013 (or should that be.. 2014? lol), did look rather strange. The high framerate actually did make the game look worse;

the fire effects look strange at ~ 60fps, same for the motion and animation. Lara running just feels off.

They should have patched the game to run at 30fps with a good object based motion blur implementation, as well as patch the color levels, and we would have had an excellent running next-level game. Instead... we have a sharper looking, sped up version of the Xbox game..

The same thing applies to the Dead Space PC version: firing certain rifles it's obvious that the gun animations/muzzle flashes/ firing rates were optimised for 30 fps. Isaac walking (especially when viewed on a 5:4 monitor) is weird as well.

In conclusion: if the game is not built to be 60 fps, then it could most certainly look worse.

If it bothers you that much, just use vsync + frame rate limiter. For me, the higher the frame rate the better, but often, a solid lower frame rate can be better than an uneven higher framerate. Definitely depends on the game though.

Huh? Poll the mouse at the same frequency of the display - no stutter at any speed (beyond display framerate stutter).

Silent_Buddha

Legend

Huh? Poll the mouse at the same frequency of the display - no stutter at any speed (beyond display framerate stutter).

Perhaps he was trying to show the correlation between that and games running at 30 hz while input is polled at 60 hz. Not sure though.

Regards,

SB

You wouldn't render 125 fps if your mouse is polling at 120. If your mouse is polling much faster than the refresh, you won't get judder. 1000 fps displays is a ludicrous notion, at least for anything other than a hardware sprite mouse overlaid.

Would that be any good?

I'm thinking about utilities like ps2 rate that would poll your mouse at 1000 Hz (and many gaming mice poll at 1000 Hz). Why would they be useful if polling at framerate is the solution?

Silent_Buddha

Legend

You wouldn't render 125 fps if your mouse is polling at 120. If your mouse is polling much faster than the refresh, you won't get judder. 1000 fps displays is a ludicrous notion, at least for anything other than a hardware sprite mouse overlaid.

Just because a game is being rendered at 60 frames per second, it doesn't mean the game is limited to registering player input or movement 60 times per second. Or in fact the OS, that is presenting the game with your input data.

In other words. Say you poll the mouse 120 times every second. Meanwhile you are playing a game that is rendering 60 frames per second. For each rendered frame you have a greater chance of grabbing an input from the mouse that is closest to when the frame is rendered.

This becomes even more important if you require precise input combined with very quick movements hence the popularity of mice that can poll 500 times per second or even more. For example, I often cite the example from my competitive FPS days of doing 180 spins mid jump that take a fraction of a second (out of 60 frames in a second, I could do it in 1-2 frames) and still being able to pick people off that are following me or coming at me from the side.

There's also a similar keyboard thing for professional gamers in N-key rollover keyboards: the ability for a keyboard to register not only multiple simultaneous key presses but also the order in which those key presses happen, even when the buttons seem to be pressed simultaneously (to humans it may appear simultaneous, but with n-key rollover it can very much be determined which key was pressed first). It's very key in competitive RTS play, for example.

Both of those things are levels of precision that your average gamer will not be able to notice. But for professional or semi-professional players (if they are good) it is extremely noticeable.

Here's a nice wiki example for an arcade emulator that explains why you need very high polling rates for mice with regards to emulated arcade games.

http://wiki.arcadecontrols.com/wiki/Info_on_Serial/PS2/USB_Mouse_'Polling'

For the same 60Hz game, let's say we now use 500Hz OS polling. So now during 20 of the game polls, the OS supplies 9 polls. For the other 40 game polls, the OS supplies 8. From that you can see that the higher the OS poll rate, the closer the OS polling will match the original game's polling.

It's not exactly the same, but similar in how you want the input the game is using for calculating the frame to be the one that most closely lines up with when the frame is rendering.
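The wiki arithmetic quoted above can be checked with a few lines of Python. This is only a sketch under simple assumptions: both clocks start together at t = 0 and tick at perfectly regular intervals, and the function name `polls_per_game_poll` is made up for illustration.

```python
from collections import Counter

def polls_per_game_poll(os_hz, game_hz):
    """Return {OS polls seen during one game poll: number of game polls with that count}
    over one second, assuming both clocks start together at t = 0."""
    per_game_poll = Counter()
    for k in range(os_hz):
        # OS poll k happens at k / os_hz seconds; integer division finds the
        # game-poll interval it lands in without floating-point rounding.
        per_game_poll[(k * game_hz) // os_hz] += 1
    # Game polls that received no OS poll at all are counted as zero.
    histogram = Counter(per_game_poll.get(j, 0) for j in range(game_hz))
    return dict(histogram)

print(polls_per_game_poll(500, 60))  # {9: 20, 8: 40}
```

With 500 Hz against 60 Hz the counts repeat in a 9, 8, 8 pattern, which gives the 20 and 40 split from the wiki. Trying other rates (for example 125 Hz against 60 Hz) shows how uneven the split becomes when the OS rate is only slightly above a multiple of the game rate.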

Regards,

SB

You've lost me. Display tech is limited to 120 Hz, 144 or 240 at a push in the most niche situation. This makes IO polling at screen refresh impossible. And if the displays are capped at 120 Hz, there's no point IO polling at 125 Hz if you don't want judder. Just poll at 120Hz. Or poll a lot higher.

It's not exactly the same, but similar in how you want the input the game is using for calculating the frame to be the one that most closely lines up with when the frame is rendering.

I don't see the relevance of the post on 1000 Hz IO polling and screen refresh rates. The suggested frequencies are daft, the screen refresh rates unrealistic (125 Hz monitor refresh when typically high refresh is 120 or 144 Hz), and the technology not relevant to anything outside of a laboratory.

Silent_Buddha

Legend

You've lost me. Display tech is limited to 120 Hz, 144 or 240 at a push in the most niche situation. This makes IO polling at screen refresh impossible. And if the displays are capped at 120 Hz, there's no point IO polling at 125 Hz if you don't want judder. Just poll at 120Hz. Or poll a lot higher.

I don't see the relevance of the post on 1000 Hz IO polling and screen refresh rates. The suggested frequencies are daft, the screen refresh rates unrealistic (125 Hz monitor refresh when typically high refresh is 120 or 144 Hz), and the technology not relevant to anything outside of a laboratory.

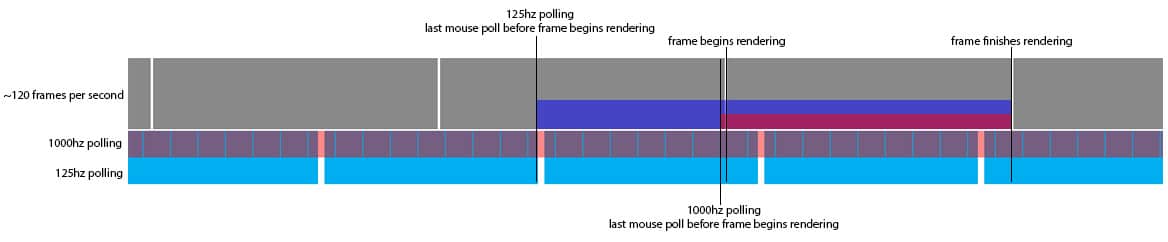

Except there is no guarantee that the input device was polled at the same time the frame started rendering. It's similar to how, even if you are rendering at a locked 60 fps on a 60 Hz monitor, you can still have screen tearing if you don't have vsync enabled. Nvidia's G-Sync and the VESA-standard Adaptive-Sync will deal with that. But that is much simpler than trying to synchronize device input with a game's rendered frame.

With PC's especially you have a fixed mouse polling rate. Yet frame render times for games can vary greatly. Even more so when you have budget/midrange graphics cards or a budget/midrange CPU and frame render times will vary on a frame by frame basis.

Where with a locked 60 fps game your input might be offset from frame render by as much as a full frame (if by bad luck your game received the last input the OS received which just happens to be 2 ms after your frame started rendering for example), it will at least be consistent, so not bad on average but still noticeable to professional gamers.

Throw in a game that might be rendering 45 frames per second and suddenly a locked 60 hz mouse polling rate is going to have erratic differences between input and output. Again nothing your average gamer will notice but would be noticeable to some.

Now if a game is varying between 30 and 60 frames per second, then even your average gamer might start to notice.

Having a high polling rate (say 500 hz for example) means that no matter when a frame starts rendering, you will have an input point very near to the start of the frame rendering.
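To put rough numbers on that, here is a small Python sketch of how stale the newest mouse sample is at the start of each frame. It assumes an idealised setup that the post does not spell out: frames start at perfectly regular intervals, the mouse is polled at perfectly regular intervals, and both start at t = 0. The helper name `input_ages_ms` is hypothetical.

```python
from fractions import Fraction
import math

def input_ages_ms(frame_hz, poll_hz, frames):
    """Age in ms of the newest mouse sample at the start of each frame.

    Assumes (for illustration) that frame j starts at j / frame_hz seconds and
    the mouse is polled at k / poll_hz seconds, both starting at t = 0.
    """
    ages = []
    for j in range(frames):
        t = Fraction(j, frame_hz)
        # Time of the last poll at or before the frame start (exact arithmetic).
        last_poll = Fraction(math.floor(t * poll_hz), poll_hz)
        ages.append(float((t - last_poll) * 1000))
    return ages

for poll_hz in (60, 500):
    ages = input_ages_ms(45, poll_hz, frames=45)
    print(poll_hz, "Hz polling: min", round(min(ages), 2), "ms, max", round(max(ages), 2), "ms")
```

Under these assumptions a 45 fps game with 60 Hz polling cycles through sample ages of roughly 0, 5.6 and 11.1 ms from one frame to the next, while 500 Hz polling keeps every frame under 2 ms.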

Perhaps it's just not something a non-competitive (professional) gamer would understand/comprehend/appreciate. Just like wondering why would professional gamers lower image quality in an FPS such that they can get 200+ FPS when they only have a 60 hz display.

Everything is done to get input delay and display delay as small as possible. I've known some professionals that could actually reliably distinguish between a wired mouse and a wireless mouse due to the minuscule input lag induced by wireless. In this world single digit millisecond differences in response can be noticed.

Again, not something your average gamer is going to notice, ever. So, irrelevant to the majority of gamers. Even those that think they are elite gamers probably wouldn't notice even though they pay higher prices for those types of devices.

And on consoles it is less relevant, usually. Although even in console land you rarely have games that are locked 100% of the time to 30/60 Hz during gameplay segments.

Regards,

SB

You don't need to synchronise IO. If you poll the mouse at 60 Hz and display at 60 Hz, and they're not in sync, then you'll have between 0 and 17 ms of offset between input and game reading that. That offset will be uniform and so you won't see any judder.

Except there is no guarantee that the input device was polled at the same time the frame started rendering. It's similar to how even if you are rendering at a locked 60 fps on a 60 hz monitor, if you don't have vsync enabled, you can still have screen tearing. Nvidia's Gsync and the VESA standard Adaptive sync will deal with it on that. But that is much simpler than trying to synchronize device input with a game's rendered frame.
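The uniform-offset point (equal polling and display rates, not in sync) can be sketched in Python. The assumptions here are idealised: both clocks run at exactly the same rate with no drift, and `phase_ms` is a hypothetical fixed delay between a display refresh and the next mouse poll. One frame at 60 Hz lasts 1000 / 60 ≈ 16.7 ms, which is where the "0 to 17 ms" range comes from.

```python
from fractions import Fraction
import math

def offsets_ms(rate_hz, phase_ms, frames=120):
    """Distinct input-to-frame offsets when polling and display share rate_hz.

    phase_ms is a hypothetical fixed delay between a display refresh and the
    next mouse poll (0 <= phase_ms < 1000 / rate_hz).
    """
    period = Fraction(1000, rate_hz)  # ms per frame and per poll
    phase = Fraction(phase_ms)
    seen = set()
    for j in range(frames):
        frame_start = j * period
        # Newest poll at or before this frame start (polling also ran before t = 0).
        k = math.floor((frame_start - phase) / period)
        seen.add(frame_start - (phase + k * period))
    return sorted(float(o) for o in seen)

print(offsets_ms(60, phase_ms=5))
```

With matching rates the list contains a single value (about 11.7 ms for a 5 ms phase), so every frame sees input of the same age. The sketch says nothing about real hardware, where the two clocks drift relative to each other.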

Yes, that's why I said you'd use a higher IO polling rate if you don't want mouse stutter.

Having a high polling rate (say 500 hz for example) means that no matter when a frame starts rendering, you will have an input point within 1 ms or less of the frame rendering.

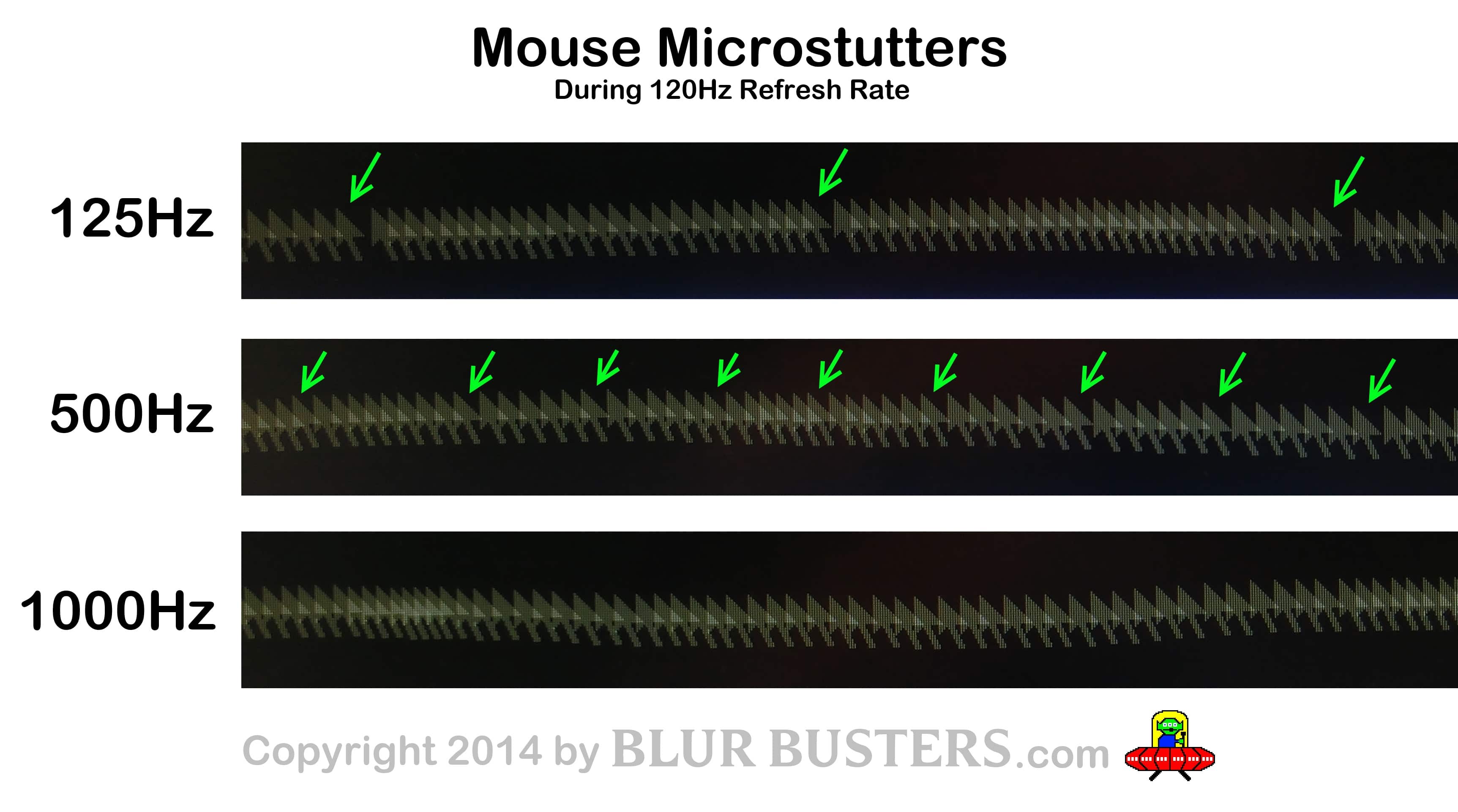

Hang on. You're talking about wanting a fast IO. I agree with that. I was talking about mouse stutter on displays with stupidly high refreshes (that don't exist). In the examples, the mouse is polling at 1000 Hz, and the result on 125, 500 and 1000 Hz displays...

Perhaps it's just not something a non-competitive (professional) gamer would understand/comprehend. Just like wondering why would professional gamers lower image quality in an FPS such that they can get 200+ FPS when they only have a 60 hz display.

Pbbbbt, sorry I was reading that chart all wrong!

upnorthsox

Veteran

You've lost me. Display tech is limited to 120 Hz, 144 or 240 at a push in the most niche situation. This makes IO polling at screen refresh impossible. And if the displays are capped at 120 Hz, there's no point IO polling at 125 Hz if you don't want judder. Just poll at 120Hz. Or poll a lot higher.

I don't see the relevance of the post on 1000 Hz IO polling and screen refresh rates. The suggested frequencies are daft, the screen refresh rates unrealistic (125 Hz monitor refresh when typically high refresh is 120 or 144 Hz), and the technology not relevant to anything outside of a laboratory.

And I don't see the relevance of posts discussing mouse I/O polling in the console forums.

I know lately we've been playing Houston to the PC forum's New Orleans, but do we really need to listen to the Saints Come Marchin' In for every game at the Astrodome?

Deleted member 11852

Guest

For a digital controller there's no real advantage to polling the device at a higher frequency than the display, but for an analogue device like a mouse, where people are crazy inconsistent in how they move it, polling at a higher frequency lets you normalise (smooth out) the inputs so you can diminish or almost completely filter out unintentional twitches. Alternatively, you may want to capture really nuanced input, so more data is definitely good.

I learned this while working on a trackball interface in an avionics module in the late 1990s. Trackballs and mice tend to have the same problems so I'm sure the same issues would affect mouse input capture.
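As a rough illustration of the smoothing idea, here is a minimal Python sketch. The post does not say which filter was used, so the moving average below is an assumption, and the sample values are made up.

```python
def smooth(samples, window=4):
    """Moving average over the last `window` samples (fewer at the start)."""
    out = []
    for i in range(len(samples)):
        recent = samples[max(0, i - window + 1): i + 1]
        out.append(sum(recent) / len(recent))
    return out

# Hypothetical per-poll x deltas (counts): steady motion with one accidental twitch.
raw = [2, 2, 2, 12, 2, 2, 2, 2]
print(smooth(raw))  # [2.0, 2.0, 2.0, 4.5, 4.5, 4.5, 4.5, 2.0]
```

The twitch of 12 is spread out to a peak of 4.5. The more samples there are per displayed frame, the wider the window can be without adding a noticeable delay, which is one reason a higher polling rate helps here.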

A decade?

I had one development machine for work driving three 24inch Sony Trinitron CRTs up until about 2009.

There were over 150 million CRTs sold in 2004.

Worldwide LCD shipments didn't exceed CRT shipments until 2008.

High-def progressive scan TVs in the US were predominantly DLP or plasma; then unfortunately the inferior LCD technology won out over DLP and plasma. I don't recall any hi-def CRTs.

CRT monitors are basically "hidef CRTs," and are capable of progressively scanning very high resolutions in some cases.

High-def progressive scan TVs in the US were predominantly DLP or plasma; then unfortunately the inferior LCD technology won out over DLP and plasma. I don't recall any hi-def CRTs.

I'm not sure what their actual scan options tended to look like (I've heard that they usually didn't clock scanlines fast enough for 720p60, so 720p sources were displayed with 1080i, for instance), but the last few years of CRTs saw numerous HD CRT TVs, such as the extremely highly-regarded Sony KD-34XBR960.

Sorry, if it looked random.

The high fps debate also encompasses the sync between rendering and input. The extreme example of micro-stuttering shows that it is not only a feeling but is detectable in the rendered output. To detail a little:

Even 30 Hz games have drops. 60 Hz input helps, but maybe more complex management could reduce input polling in difficult moments to ease the CPU and, when ramping back up, use prediction to put objects at the correct positions. Then both rendering and input benefit.

Or get a plasma...60fps on a screen which supports black frame insertion is my new gaming nirvana. It's like being back on CRT again.