When I loaded it up the menu was running at about 5fps, presumably pre-caching, then it returned to 90. I then played the game and went further than I had before (new environment). I had a few tiny stutters throughout the bits I had previously played and the new bits, but there was no discernible difference between the two.

The Callisto Protocol [PC Stutter Fest]

- Thread starter BRiT

- Start date

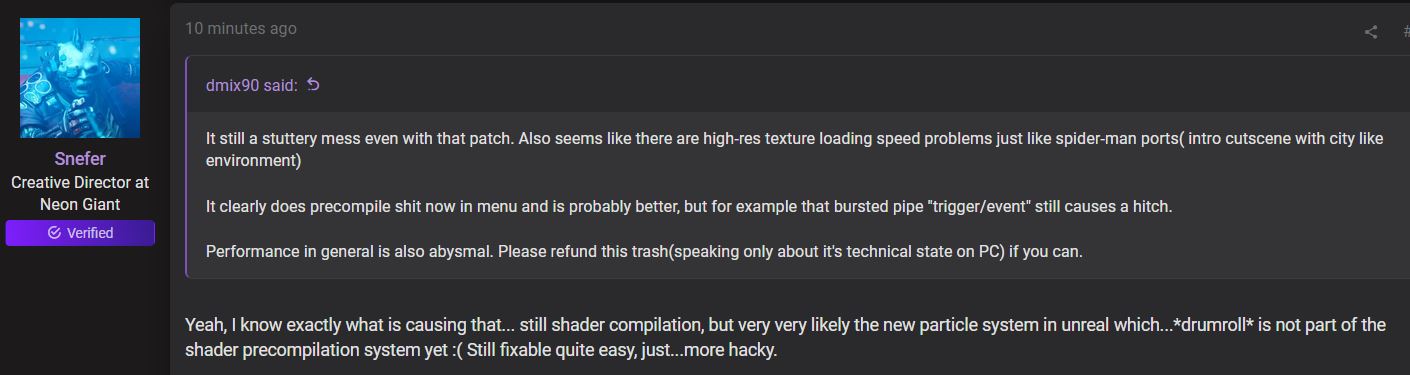

> It's better, but stutters still remain. The Ascent dev says this:

Yea, basically for those shaders that they can't catch, they have to essentially load a little level in the background upon initial load to draw them all so they can be cached that way.

> Remember when games used to work? Good times.

I remember those days.

digitalwanderer

Legend

At least they're taking it seriously. This seems like a pretty clear case of the engineers being pushed by the management to release the product before it's ready. I'll bet they're gutted by the negative response given how great a technical achievement the game is outside of these issues.

I just hope they take the time and effort to perfect the PC and Xbox versions and don't just throw out a quick patch or two to gloss over the worst 90% of issues, then call it a day.

Nah we don't, others on Twitter are also claiming the game suffers from loading stutters as well as shader ones. All you need to do is look at disk and I/O use during stutters.

The game is also a memory hog consuming 19GB total at 1440p.

It wouldn't surprise me if the game struggles to stay in Series-X's 10GB of 'GPU RAM'

It might all be one and the same. Shader compilation happens on the CPU, so the result has to be shuttled over to the GPU. If the game is super shader heavy and it’s creating a bunch of PSOs, it may be putting pressure on the IO and VRAM. AMD recommends efficient PSO use, as higher volumes of PSOs can eat up VRAM fairly quickly.

MS, Nvidia, AMD and Khronos are all recommending that devs avoid JIT shader compilation. But devs are doing it anyway, so maybe shader compilation during loading on modern titles is embarrassingly long, or the other option of building uber shaders first and more specialized shaders as runtime permits is currently problematic.

Maybe the devs need to stick with DirectX 11 for release and migrate games to DirectX 12 at a later date, once they can comfortably release stutter-free DirectX 12 titles.
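To make the "uber shaders first, specialized shaders as runtime permits" option concrete, here is a minimal sketch in Python. It is not how any particular engine does it: `PipelineCache`, `compile_specialized`, `UBER` and the 50 ms compile time are all made-up names and numbers for illustration. The idea is only that the render thread never waits on a compile; it draws with the generic pipeline until the specialized one has finished on a worker thread.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

UBER = "uber"  # hypothetical generic pipeline that is always available


def compile_specialized(key):
    """Stand-in for an expensive driver compile (hypothetical, takes ~50 ms)."""
    time.sleep(0.05)
    return f"specialized:{key}"


class PipelineCache:
    """Serve the uber pipeline until the specialized variant is ready."""

    def __init__(self, workers=2):
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._lock = threading.Lock()
        self._jobs = {}  # key -> Future for the specialized compile

    def get(self, key):
        """Called from the render thread; never waits on a compile."""
        with self._lock:
            job = self._jobs.get(key)
            if job is None:
                # First request for this state: start compiling in the background.
                job = self._pool.submit(compile_specialized, key)
                self._jobs[key] = job
            if job.done():
                return job.result()
        return UBER

    def wait_idle(self):
        """Block until every queued compile has finished (e.g. on a loading screen)."""
        with self._lock:
            jobs = list(self._jobs.values())
        for job in jobs:
            job.result()
```

The trade-off is the one mentioned above: the first frames that use a new material run on the slower generic shader instead of hitching, and the uber shader itself still has to be compiled up front.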

A shame DX12 is running slower than DX11 in this game. DX12 allows so many new optimization features like Mesh Shaders, Sampler Feedback and VRS, yet nobody uses them (except for some mediocre VRS implementations). When will games finally get optimized for next generation hardware? It's so tiring.

Bare bones DX12 conversions are likely to be slower than DX11 on the GPU. PC is usually an afterthought, mainly because all the different configurations make testing optimizations more time consuming.

Async compute overlap is one good example: what works well on consoles isn't necessarily a gain on most PC configs, so a lot of engines disable most of it by default (especially on Nvidia).

I don't know what UE4 does but I expect consoles to be more optimized than PC, even if you can brute force better performance. There are always exceptions though, some teams put more effort on PC than others.

DavidGraham

Veteran

> A shame DX12 is running slower than DX11 in this game.

The advent of DX12 on PC did nothing but cause major problems all over the place: stutters, lower fps than DX11, longer loading times, higher VRAM usage, and bugs of all sorts and types. All without even a single ounce of visual improvement over DX11! The only saving grace of DX12 has been DXR! That's it!

The rest of the DX12 Ultimate package has yet to see a good application.

That's not always true; Shadow of the Tomb Raider was pretty good, with a major CPU perf uplift and good GPU performance. But yeah, most DX12 titles are rough, and so are almost all UE4 games.

I just can't understand how you can miss.. the performance of your shipped code running like shit.

interesting

That is... unless you don't test the finished product on a completely sanitary PC.

Taking time to figure out the solution to the issue.... is normal. Taking time to figure out IF there is an issue in the first place, is what my problem is... because it seems developers don't.

> That's not always true, Shadow of the Tomb Raider was pretty good. Major CPU perf uplift and good GPU performance. But yeah most Dx12 titles are rough, and almost all UE4 games.

Yeah, there's also the case of the many released games that only use DX12, so we have no DX11 reference point. Chances are, these would run slower on DX11.

FH5 is a great example of DX12 done right. The title runs amazingly well, has zero stutters and fantastic graphics.

Silent_Buddha

Legend

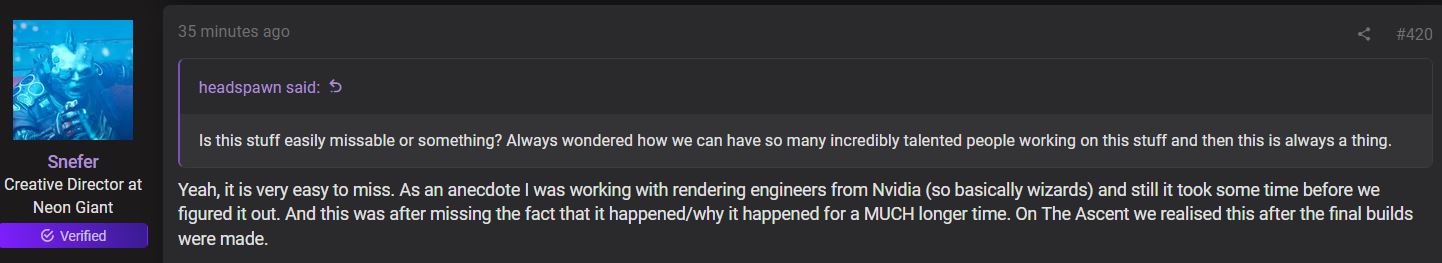

Keep in mind that even NVidia's engineers didn't notice it somehow.

Regards,

SB

> Keep in mind that even NVidia's engineers didn't notice it somehow.

What is "it" though? Story needs more context.

Obviously no developer is going to come out and admit they didn't do their due diligence... but I mean come on.. When I first played The Ascent, I knew as soon as the game started up and I started moving around... and ESPECIALLY when shooting the exploding barrels... that there was a shader compilation issue.

I figured that out by simply running the game once.

He states they realized it "after the final builds were made", which lends credence to what I've been saying: they build the "final" shipping build... but don't realize that they need to play that very final build again to collect the PSO data for all the final shaders that are in the game, and then rebuild it AGAIN after that.

So essentially they have the "final build" which is the entire content complete and final version of everything... and then they have to play the hell out of that final build to generate the full PSO data cache, and then rebuild it again to integrate it into the ACTUAL final build. lol

Here's my thing though... We know they announced the "automated PSO caching" system planned for Unreal Engine 5.1, and the way it works is that when assets are loaded into memory, the engine automatically begins gathering all required PSO combos for that asset on background threads, which gives it more time to hopefully have everything compiled by the time it's needed. And now we know that you can use either method, or both the new method and the old PSO caching method together, depending on what works best. But what I thought the "automated PSO gathering" was going to be was that as the developers and testers played the game, it would generate the PSO data as normal, and the automated part would be how it was reintegrated back into the new build. Right now you have to do a bunch of convoluted things to generate the files: grab the .scl.csv files from your project, put them in a different directory, play the game to log the PSOs, extract the .upipelinecache files, run another .bat to create a new .csv file with the information from the pipeline cache files, then copy that back over to a certain directory and rebuild the project....

Basically it's convoluted as hell...

Why can't Unreal Engine just have a specific "build" type which does all that shit automatically?? You already have the development build, debug build, and shipping build... but why not add another new type of build.. let's call it "PipelineCaching Build"... which is specifically for generating those PSO caches. Devs would know, ok we're ready for shipping, but first now we have to create the "PipelineCaching build", have our team play the game furiously, and the engine automatically generates the files and gets them ready to integrate into the "final shipping build".

It should all be automatic so that the developers don't have to think to do any of that. Right now it doesn't even seem like some developers are aware that you can, or have to, do any of that.
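As a rough illustration of the record, merge and rebuild loop described above, here is a toy Python version. Nothing in it is Unreal's actual tooling or file format: `PsoRecorder`, `merge_logs`, `precompile` and the JSON files are hypothetical stand-ins for the .scl.csv / .upipelinecache steps, and a "PSO" is reduced to a (shader, blend, vertex layout) tuple.

```python
import json
from pathlib import Path


class PsoRecorder:
    """Logs every pipeline state the game requests during one play session."""

    def __init__(self):
        self.seen = set()

    def on_draw(self, shader, blend, vertex_layout):
        # Only unique combinations matter, so a set is enough.
        self.seen.add((shader, blend, vertex_layout))

    def save(self, path):
        Path(path).write_text(json.dumps(sorted(self.seen)))


def merge_logs(log_paths, bundle_path):
    """Combine the logs from many testers into one list to ship with the build."""
    merged = set()
    for path in log_paths:
        entries = json.loads(Path(path).read_text())
        merged.update(tuple(entry) for entry in entries)
    Path(bundle_path).write_text(json.dumps(sorted(merged)))
    return len(merged)


def precompile(bundle_path, compile_fn):
    """At startup, compile everything in the bundle before gameplay begins."""
    entries = json.loads(Path(bundle_path).read_text())
    return {tuple(entry): compile_fn(tuple(entry)) for entry in entries}
```

In this sketch the proposed "PipelineCaching build" would just be a build with `PsoRecorder` switched on, followed by an automatic `merge_logs` step before the shipping build is made. Anything the testers never drew (say, an exploding barrel nobody shot) is missing from the bundle and would still compile at first use, which is why coverage during those play sessions matters so much.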

Unreal Engine creators have some work to do. If they don't fix that to ease development, many developers could lose sales and reputation even though they may not be the culprits.

interesting

(from this post) https://forum.beyond3d.com/threads/the-callisto-protocol-pc-stutter-fest.63075/page-2#post-2277167

UE5.1 does have features to address this.

Silent_Buddha

Legend

It was about shader stutter. And even when they identified it, even with the help of NV engineers, it took quite a while for them to track it down and fix it. Of course, now that they know what was causing the stutters and how to fix it, you see them reach out to other indie developers to help them identify it and point them in the right direction. Not only that, you see them piping up from time to time to let others know that shader stutter is a problem, and not necessarily an easy one to identify and fix when you're deep in development (i.e. lots of bugs, lots of performance issues, lots of optimization to do, etc.). So you may not know until the final build of the game that it's not a bug and is instead due to JIT shader compilation.

Even though there are developers that keep an eye on DF videos, not all developers do and when you're an indie developer working on an ambitious project like The Ascent, you may not have a lot of time as the engine and rendering architect to pay attention to other games during development of your title.

Now, if it slips through again on their next title, I'll definitely blast them about how they couldn't miss it and fix it before release now that they know the root cause and how to fix it, but this was their first studio title which came out before there was so much attention being paid to shader stutter. So, I give them kudos for taking it seriously after their game was released and before shader stutter got as much publicity as it has.

I fully expect we'll continue to see this for a bit and I'll generally give Indie devs (like Neon Giant) more slack, especially if it's their first major title release (The Ascent).

Now someone like Epic releasing the latest Fortnite with shader stutter? They should absolutely know better by this point. The Callisto Protocol? Tough call. They obviously ran out of development time for any version that wasn't the PS5 version. So, who knows if shader stutter would or would not have been fixed if they actually had enough time to put into the PC version.

Regards,

SB

> I remember those days.

Remember to blow and spit into the cartridge a little and hope for the best.
