Well, it should do a lot better against the 5070/Ti thanks to FSR4 and the improved RT - it is obviously not on the same level as Nvidia's, but no one sane ever expected that.

The pricing for the XT seems solid at first glance. I'll reserve final judgement until I've looked through all the interesting benchmark results.

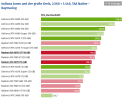

The non-XT is a weird one though. It is seemingly just a tad faster than the 5070 - but in both non-RT and "lite" RT workloads. I'm not sure that it's going to sell well against a similarly priced 5070 - or against the XT model as a +$50 upsell (MSRPs of course, we'll see how they sit in retail). To me it almost seems like they couldn't make it any cheaper and thus made it unattractive so as not to lose much on it.

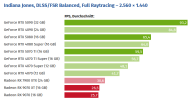

It's doing fine unless you switch to heavy RT (PT, Full RT). CP2077 isn't an exception here.

View attachment 13261

Edit: I should add that "doing fine" here means the card is now less limited by its RT h/w throughput, which means the bottleneck has shifted back to shading. So in games where this is the case, the "RT comparisons" are likely showing us shading comparisons, not RT comparisons. The PT/Full RT/whatever results are the ones that showcase the relative capabilities of the RT h/w.