So now we've added "PS5 Pro doesn't do hardware RDNA3 dual issue" to the FUD pile.

There is no FUD pile. No-one is trying to spread fear, doubt, or uncertainty. There are only theories and discussion, and bits and pieces of evidence here and there. It doesn't matter which theory is right or wrong. We speculate, debate, and then see who's right when we finally know. This is your last warning. If you don't like people expressing viewpoints you don't agree with, you don't belong in B3D.

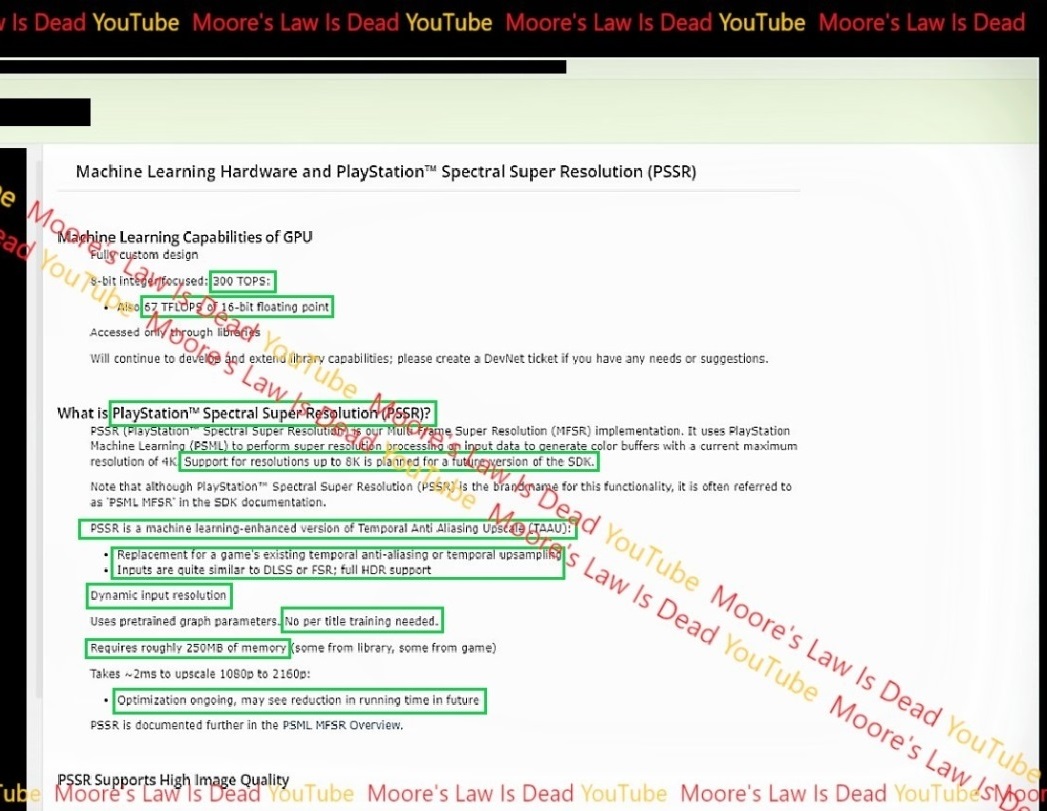

It is 67 TFLOPS of FP16 according to the leaked docs, and we have developers confirming it. This is some special conspiratorial stuff I am reading in this thread.

But we have different information from different sources! If they all said the same thing, it'd be very easy to understand what reality is. Where they differ, we need to understand why to arrive at a correct conclusion. The only people who don't embrace that process of collating more evidence and evaluating it are those who already have a preferred outcome, select evidence to support their preferred understanding, and deride those who challenge it.

Here, we like the process of discovery. We like the process of getting evidence, then getting different evidence, and not quite knowing. Then comes the challenge of forming a hypothesis that can reconcile the disparate evidence and arrive at the truth. Platforms don't come into that process.

One question raised by your source: it claims a 2 ms upscaling time and says this might be reduced. If PSSR is running on a discrete upscaling unit, why is there interest in reducing that time? If those 2 ms aren't being taken away from the GPU, who cares whether it's 2 ms, 5 ms or 1 ms? The worst it'll do is add that much input latency after the frame has been rendered, and 2 ms is neither here nor there, so reducing it won't make any difference.
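To make that reasoning concrete, here's a toy model in Python. It assumes a simple two-stage pipeline and made-up timings (a hypothetical 15 ms render, the 2 ms upscale from the source, and a 60 fps target); nothing here reflects how PSSR is actually scheduled, which isn't known. If upscaling shares the GPU, its cost adds straight onto every frame; if it runs on a dedicated unit overlapping the next frame's render, it only adds latency.

```python
# Toy frame-time model (illustrative assumptions only, not PS5 Pro data).
# Compares upscaling on shared GPU shader cores (serial, eats frame budget)
# with upscaling on a hypothetical dedicated unit (pipelined, adds latency only).

BUDGET_60FPS_MS = 1000.0 / 60.0  # ~16.67 ms per frame at 60 fps


def frame_cost_ms(render_ms: float, upscale_ms: float, dedicated_unit: bool) -> dict:
    """Return the interval between displayed frames and the added latency."""
    if dedicated_unit:
        # Upscaling frame N overlaps with rendering frame N+1, so throughput
        # is limited only by the slower of the two stages.
        frame_interval = max(render_ms, upscale_ms)
    else:
        # Upscaling competes for the same GPU, so it adds to every frame.
        frame_interval = render_ms + upscale_ms
    # Either way, a finished frame waits upscale_ms before it can be shown.
    return {"frame_interval_ms": frame_interval, "added_latency_ms": upscale_ms}


if __name__ == "__main__":
    for dedicated in (False, True):
        cost = frame_cost_ms(15.0, 2.0, dedicated_unit=dedicated)
        fits = cost["frame_interval_ms"] <= BUDGET_60FPS_MS
        print("dedicated" if dedicated else "shared", cost, "fits 60 fps:", fits)
```

Under these assumptions the shared case needs 17 ms per frame and misses a 60 fps budget, while the dedicated case stays at 15 ms; both add the same 2 ms of latency. That's why trimming the 2 ms only really matters if it comes out of the GPU's frame time.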

When all the evidence points to one possibility, yay. Until then, we ponder. Yay!