Which is why Khronos mentioned that Vulkan, in its current state, limits the dynamism of games and game engines. They also clearly articulate that it keeps several configurations from reaching their maximum hardware potential (pretty sure these configurations include some NVIDIA GPUs). So now you have software limitations and hardware limitations.

It's disappearing because most developers are switching to DX12 to implement ray tracing, or some of the features in the DX12U package (mesh shaders, variable rate shading, etc.). The pace of DX12 adoption has greatly accelerated since DXR.

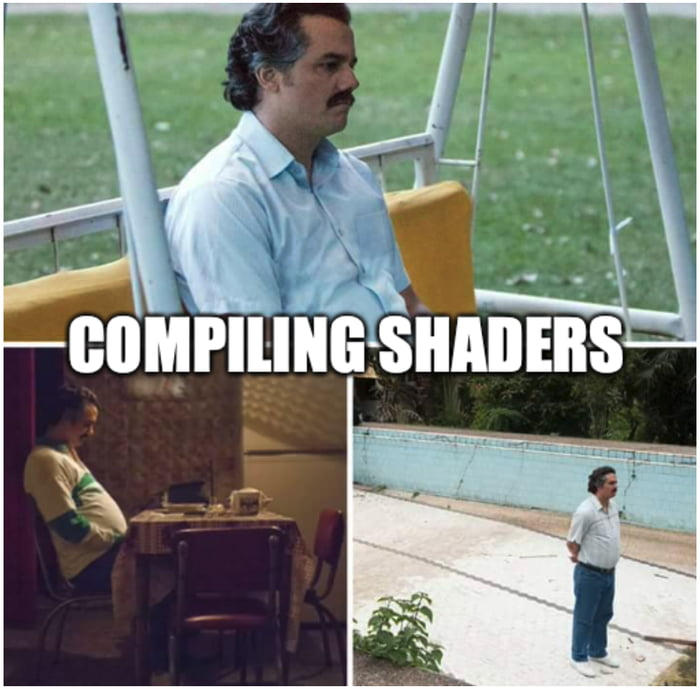

This is the kind of thinking that got us here in the first place. We are only starting to fully unravel the big picture years after the inception of DX12/Vulkan. We were fed a bunch of misleading points about the advantages of going lower level, while the major disadvantages were carefully hidden, with most developers afraid to talk about them or criticize them. Almost a decade later, things are clear, and voices are loudly demanding change. This is wrong. This discussion should've happened many years ago, instead of forcing the entire spectrum of developers down an undesirable path, only to backpedal on it so late in the game.

Very weird take. User experience is the reason developers spend so much time writing code. It's the product in the end that matters, not the journey per se. This kind of attitude is why we have so many arrogant developers who don't care about stuttering on PC at all.

I mean, who stands to gain from all of this? Software and hardware limitations, bugs, bad memory management, horrendous stuttering. Which developer? Which IHV? What percentage of market share do they have, that they can force the entire industry and the userbase along with them into this suboptimal position?