DiGuru said: Jaws said: DiGuru said: ERP said: I remember the first time I saw that PiXAR demo with the jumping desk lamps in the 80s (can't remember their names). It had fantastic animation and lighting but no millions of polygons, 50 texture layers or whatnot, and to this day I've yet to see an interactive game that matches its 'realism'. Or maybe I still have my rose-tinted goggles on.

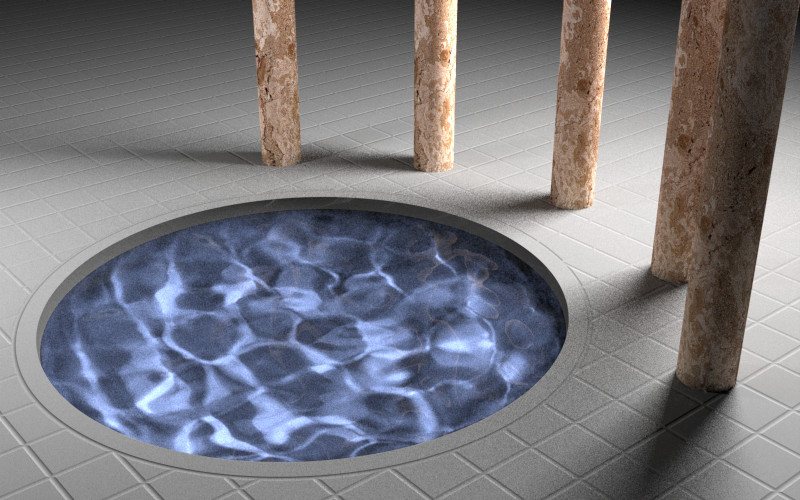

And I guess it's my turn to point out that very few (Pixar) Renderman renders use significant amounts of ray tracing.

Renderman in a lot of ways is just a collection of hacks, and as such it's a really good example of how far the hacks can get you.

You need very clever and creative people to create brilliant things with a series of good hacks. With real-time ray-tracing hardware and good tools, most artists can create really nice things. Choose.

Well, you could argue that anything simulated on a computer is a series of hacks! I suppose I was using the term 'hack' in a relative sense, with Renderman as the reference point that mainstream 3D aims to match in rendering quality through a series of its own hacks. I assume PiXAR is selective about what it ray traces because of cost rather than quality? If it's cost, then there's a bigger incentive for faster ray tracing hardware and algorithms, no?

DiGuru, I'm not sure what you're asking me to choose here. 3D can be 'artistically' pretty or 'realistically' pretty... I'm referring to realistically pretty here, as it's less subjective.

Yeah, but 'realistic' is split between the realism of Hollywood (which looks much better) and "real" realism (more like Half-Life 2, very gritty).

To produce brilliant art, you need brilliant artists. But you can create very nice art by giving regular artists great tools. So, we can have a few brilliant pieces, created by a team of outstanding people, or we can have those same pieces AND a lot of very nice ones.

Those teams produce only one or two masterpieces, and it doesn't happen every year that one is produced. Especially with computers, we can create hardware and software that does the hacks and other hard stuff, freeing the artists from all those constraints. For computer graphics as I see it, that would require clever GPUs that use ray-tracing.

On the other hand, the method that uses the brute force approach is known to most people. It is not very artist-friendly, has lots of dead-ends and requires a major in graphics programming, but it is how it has to be done, right?

IMO, a few brilliant titles created by a few outstanding individuals is a scenario that will always be present, whatever tech is being used. True talent is always scarce, and the industry needs it to inspire others. The Doom, Half-Life and Unreal guys would be an example: they have written cutting-edge 3D engines that inspire others, and have even been financially astute enough to license these engines to 'lesser' mortals, thereby raising industry standards. IMO, some new kid on the block needs to do the same and create a 'ray tracing' game engine that inspires others.

Yeah, 3D originated from geeks in R&D and not artists. As the tools matured over the years, artists have grasped the tech but still need a degree in programming like you say. Due to the competitive nature of this 'graphics whore' industry, people will always try to tinker with the low-level metal to extract every ounce of performance.

IMO, the true culprit is DirectX. The movers and shakers of the mainstream PC graphics industry will cater to whatever the 'next' DirectX dictates and, to a lesser extent, OpenGL. The question is: if the next iterations of these APIs promote a 'ray tracing' API, will the industry suddenly clamour for their cards to support it, à la SM3 etc.?