Nvidia's 3000 Series RTX GPU [3090s with different memory capacity]

- Thread starter: Shortbread

- Tags: nvidia

Do you want to risk being "stuck" with 10GB until a worthwhile better card launches in 2 years' time? What happens to second-hand prices of 10GB cards if 20GB cards launch in November? Or even 12GB?

Remember texture quality is what's really going to eat VRAM and that isn't improved by running at 1440p.

Sampler feedback is the one technique that will really make a difference in the lifetime of a 10GB card. In 2 years' time it will probably be a widely-used technique, with games being worked on right now taking the time to use the technique. But it does require a serious re-work of existing game engines.

One of the things that I'm still struggling to understand is the VRAM impact of ray tracing. It's likely to become more common in games over the next couple of years but I have no idea whether it's going to be significant.

I might even be willing to take slightly slower performance on an AMD card with 16GB of RAM versus the 10GB on the 3080. The Vega 56 is going into my wife's computer, so it will get another 3 years or so of life. The 3080 or AMD card I purchase next will eventually go into her machine too, so RAM could make a much bigger difference at that point.

If the 2080 Ti was ridiculously overpriced, that doesn't mean Ampere is good; it just shows how bad Turing was. Of course, we could not see it because AMD did not offer any point of comparison.

Overpriced, yes. 2080ti and 2080 were quite literally the best performing gpus you could buy. To characterize turing as "bad" because nvidia abused their market position with high prices is unfair. It's not a bad architecture or bad performance.

I also don't understand why people are so happy with 5-10% better efficiency from a new arch, compared against a 2+ year old one, plus a new node. We should see more gains than that from the node alone.

It looks like it's much more efficient than that. When computerbase normalized the power to 270W with the 2080ti, the architecture looks to be about 25% more efficient. They're basically shipping the card well beyond the best point in its efficiency curve which makes it look less efficient than it is.

https://www.computerbase.de/2020-09...gramm-control-effizienzvergleich-in-3840-2160

And this, kids, is why you should never pre-order a product based on a paid review that says it will be 80% faster.

Literally no one has pre-ordered because pre-orders are not available.

Jawed

Legend

All of what you've said seems pretty solid to me. I'm upgrading from a PC built in 2012 that's had a CPU and GPU upgrade, so my plans are very similar, though I think ray tracing is less exciting as a feature now than it was before the 2000 series launched (hype effect). Crysis Remastered's ray tracing shows that judicious usage is vastly more important than the checkbox feature, so I expect the performance/visual aspect of ray tracing to be less compelling than ultra-high-res game worlds with massive draw distances and no pop-in.

In case you are curious: I built my last computer in 2013 and I'm still using it. The only upgrades I did were a better SSD and a better GPU. I see PC technology development getting slower and slower... Not counting the GPU, my next planned PC build is slightly over $2000 in parts at the moment. I might get a 3080 or 3090. I'm not expecting something in 2 years' time that would obsolete that build. When I look at the Turing to Ampere upgrade, that would not provoke me to update my GPU. At the earliest, maybe 4 years from now there will be something good enough to make me consider upgrading. Thinking about how good DLSS 2.0 already is compared to native 4K, it might be a 6-year upgrade cycle for the GPU. DLSS is only going to get better over time, and I can sneak by for a few years with DLSS on to avoid upgrading.

I'm firmly in the boat to buy something so good that I rarely need to upgrade. I'll wait until winter though. I want something zen3 based and perhaps by that time the availability and understanding what is good high end buy is more clear to me. Also by that time cyberpunk2077 has had enough patching that I dare to start to play it.

I was forced to upgrade to the 1080 Ti because my old GPU just wasn't good enough for VR. Now I want to upgrade from the 1080 Ti to get ray tracing for new games like Cyberpunk 2077, Bloodlines 2 and so on. But I suspect my old 4-core CPU is just not going to cut it anymore for those games with ray tracing on. If there was no ray tracing I would still be happy with the 1080 Ti.

Edit: I wouldn't be surprised if developers started to optimize 4K for 10GB of memory now that there is a 4K-capable card with that spec. In the past that was not the case, and 4K was a 2080 Ti or better only affair. The 3080 and 3070 are going to sell a ton of units and can in theory run optimized 4K well.

It sounds like you're planning to play at 4K, like me (I'm upgrading from 1080p). It seems likely, though, that with 10GB you'll be turning down textures and geometry detail even when using DLSS. Ray tracing may also have to be scaled back if it really does use a lot of memory.

Anyway, you're playing the waiting game. Let's see if AMD pokes NVidia/AIBs into larger memory setups for the cards below 3090...

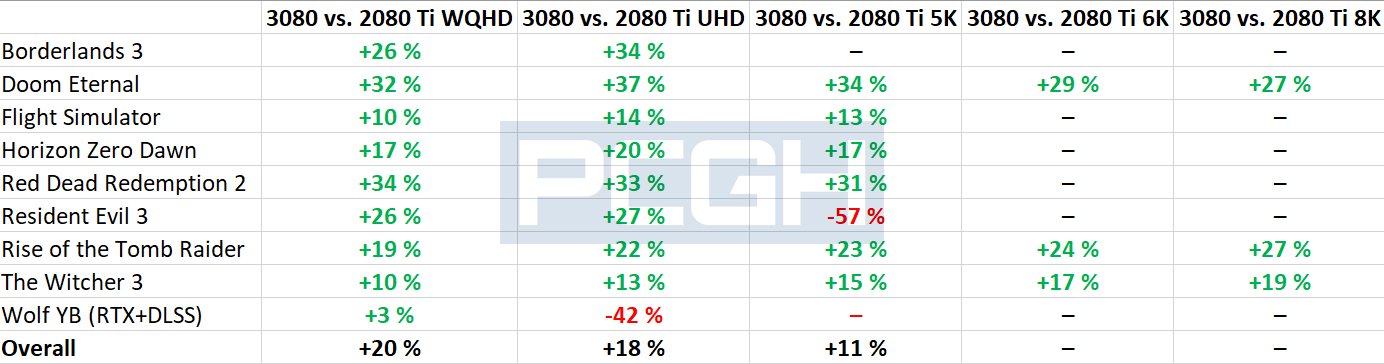

A 40% increase compared to a card that is 2+ years old and whose performance was a disappointment? I cannot call it a victory. Surely the better competition we expect from AMD has saved buyers hundreds of dollars, but still... If a product is bad, any comparison with it will look good.

Overpriced, yes. 2080ti and 2080 were quite literally the best performing gpus you could buy. To characterize turing as "bad" because nvidia abused their market position with high prices is unfair. It's not a bad architecture or bad performance.

It looks like it's much more efficient than that. When computerbase normalized the power to 270W with the 2080ti, the architecture looks to be about 25% more efficient. They're basically shipping the card well beyond the best point in its efficiency curve which makes it look less efficient than it is.

https://www.computerbase.de/2020-09...gramm-control-effizienzvergleich-in-3840-2160

Literally no one has pre-ordered because pre-orders are not available.

Well, being the "best performing gpus you could buy" does not mean that you can sell them at any price and it will be good. People paid an extreme premium for a feature that was for the most part unusable, just to fund Nvidia's development. What was the difference between the performance increase and the price increase? Something like 30%/60%?

About the efficiency: you can do the same with literally any GPU or CPU on the market. Playing with the power/voltage curve will give you different efficiency gains or losses on any arch or node. The comparison needs to be stock vs stock. And even then, 25% for 2+ years of arch development plus a new node doesn't seem like something to be surprised by. AMD's claimed 50% (not counting the rumors of 60%) on a refined arch and refined node, now THAT is an efficiency gain that we should praise, if it's true.

Should I pull the trigger? Still kinda concerned about 10GB.

Or maybe I buy that rowing machine...

Wait for the 3080 Ti if you can.

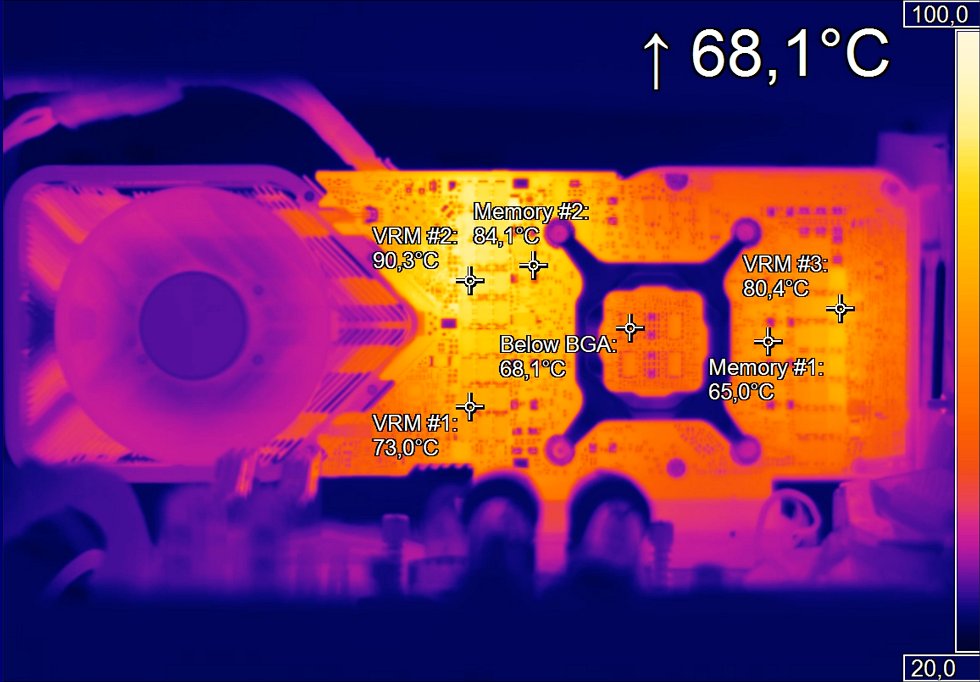

VRM #2 is like, fu** you VRM #1 and #3...

Given Ampere got a full-node shrink advantage, it got a standard gain.

A 40% increase compared to a card that is 2+ years old and whose performance was a disappointment? I cannot call it a victory. Surely the better competition we expect from AMD has saved buyers hundreds of dollars, but still... If a product is bad, any comparison with it will look good.

100/60 - 100% = 67% increase.
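To spell out the arithmetic in the line above: if the older card scores 60% of the newer card's result, the newer card is 100/60 times as fast, which is roughly a 67% uplift rather than 40%. A minimal sketch, where the function name `uplift_percent` is just an illustrative choice and the 60% figure is taken from the post as given:

```python
def uplift_percent(baseline_relative: float) -> float:
    """Return how much faster the reference card is, in percent.

    baseline_relative is the older card's score expressed as a
    percentage of the reference card's score (reference = 100).
    """
    if baseline_relative <= 0:
        raise ValueError("relative score must be positive")
    return (100.0 / baseline_relative - 1.0) * 100.0


# The older card at 60% of the newer card's score, as in the post.
print(f"{uplift_percent(60):.0f}% increase")
```

The point is that "card A is 40% slower than card B" and "card B is 40% faster than card A" are not the same statement, because the two percentages use different baselines.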

Deleted member 2197

Guest

Yeah, it would be an embarrassment if none of that panned out and the performance uplift was less than an RTX 3080.

AMD's claimed 50% (not counting the rumors of 60%) on a refined arch and refined node, now THAT is an efficiency gain that we should praise, if it's true.

That's the most interesting metric to me! @jayco posted something similar, gotta check Eurogamer.

Overpriced, yes. 2080ti and 2080 were quite literally the best performing gpus you could buy. To characterize turing as "bad" because nvidia abused their market position with high prices is unfair. It's not a bad architecture or bad performance.

It looks like it's much more efficient than that. When computerbase normalized the power to 270W with the 2080ti, the architecture looks to be about 25% more efficient. They're basically shipping the card well beyond the best point in its efficiency curve which makes it look less efficient than it is.

https://www.computerbase.de/2020-09...gramm-control-effizienzvergleich-in-3840-2160

Literally no one has pre-ordered because pre-orders are not available.

Talking of efficiency: in cost per frame, the 3080 obliterates everything.

Jawed

Legend

Even if it's true, it would seem to be because Navi's efficiency was terrible at launch.

AMD's claimed 50% (not counting the rumors of 60%) on a refined arch and refined node, now THAT is an efficiency gain that we should praise, if it's true.

Well, being the "best performing gpus you could buy" does not mean that you can sell them at any price and it will be good. People paid an extreme premium for a feature that was for the most part unusable, just to fund Nvidia's development. What was the difference between the performance increase and the price increase? Something like 30%/60%?

About the efficiency: you can do the same with literally any GPU or CPU on the market. Playing with the power/voltage curve will give you different efficiency gains or losses on any arch or node. The comparison needs to be stock vs stock. And even then, 25% for 2+ years of arch development plus a new node doesn't seem like something to be surprised by. AMD's claimed 50% (not counting the rumors of 60%) on a refined arch and refined node, now THAT is an efficiency gain that we should praise, if it's true.

Maybe adjust your expectations? Node shrinks will get harder, and architectures that are already efficient will not receive easy gains. 25% gain over the top end card at the same TGP and for something like 60 or 70% of the price looks really good to me.

I hope the AMD card is a monster, but this is an Nvidia thread.

Deleted member 2197

Guest

PCI EXPRESS 4.0 TESTING

https://pcper.com/2020/09/nvidia-geforce-rtx-3080-founders-edition-review/

The Gen3 vs. Gen4 question becomes much ado about nothing once the benchmarks are run. I didn’t see any difference outside of a normal run-to-run variance in my testing, and yes, I verified that the card was running in Gen3 mode after manually adjusting the settings in the motherboard setup.

...

Of course there can be a measurable difference (I did see about 2 FPS higher using Gen4 with Metro Exodus at 1440/Ultra), but it’s very small. Until you break out the PCI Express Feature Test in 3DMark, that is!

Results of the PCI Express Feature Test were 26.24 GB/s bandwidth using PCI Express 4.0, dropping to just 13.05 GB/s using PCI Express 3.0. I’m sure there will be applications that can leverage more bandwidth than the games and synthetics benchmarks I ran, but for gamers on current Gen3 Intel platforms there shouldn’t be a noticeable impact.
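For context on those two numbers, here is a rough sketch comparing them with the theoretical per-direction bandwidth of an x16 link. It assumes the standard PCIe 3.0/4.0 signalling rates (8 and 16 GT/s per lane) and 128b/130b encoding, which come from the PCIe specs and not from the quoted review; the function name is illustrative.

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int = 16) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s.

    Assumes 128b/130b line encoding (used by PCIe 3.0 and 4.0)
    and ignores packet/protocol overhead.
    """
    encoding_efficiency = 128 / 130
    return gt_per_s * lanes * encoding_efficiency / 8  # bits -> bytes


# Measured 3DMark results quoted from the review above, in GB/s.
measured = {"PCIe 3.0": (8.0, 13.05), "PCIe 4.0": (16.0, 26.24)}

for name, (rate, result) in measured.items():
    peak = pcie_bandwidth_gbs(rate)
    print(f"{name}: {result:.2f} of {peak:.2f} GB/s ({result / peak:.0%})")

print(f"Gen4 / Gen3 measured ratio: {26.24 / 13.05:.2f}x")
```

Both results land at a similar fraction of the theoretical peak, so the feature test scales almost exactly 2x with the link speed, even though the games tested barely move.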

For those concerned about VRAM use, KitGuru has this:

https://www.kitguru.net/components/...s/nvidia-rtx-3080-founders-edition-review/24/

Some games get close to the limit at 4K max settings, but very few, and none pass it. It's also possible the games are requesting more memory than they need simply because it's available. That said, I'd personally feel more comfortable with 16GB+.

Are they going to use eye tracking? Otherwise how do they know what part of the screen to render in high res?

As far as I can tell, the console platforms are intending to use VRS.

Another thing to consider with regard to RAM size is that at the start of the current generation, the fastest GPUs available were sporting only 2GB and 3GB versus the consoles' 8GB. 10GB versus the new consoles' 16GB is far better.
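Putting that comparison into numbers, as a quick sketch using only the capacities mentioned above (the helper name is illustrative, and keep in mind that console memory is shared between CPU and GPU work, so this is a loose comparison at best):

```python
def vram_share(gpu_gb: float, console_gb: float) -> float:
    """GPU VRAM as a fraction of total console memory."""
    return gpu_gb / console_gb


# (GPU VRAM, console memory) pairs in GB, taken from the post above.
cases = {
    "start of current gen, 2GB card": (2, 8),
    "start of current gen, 3GB card": (3, 8),
    "RTX 3080 vs new consoles": (10, 16),
}

for label, (gpu_gb, console_gb) in cases.items():
    print(f"{label}: {vram_share(gpu_gb, console_gb):.1%}")
```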

Man from Atlantis

Veteran

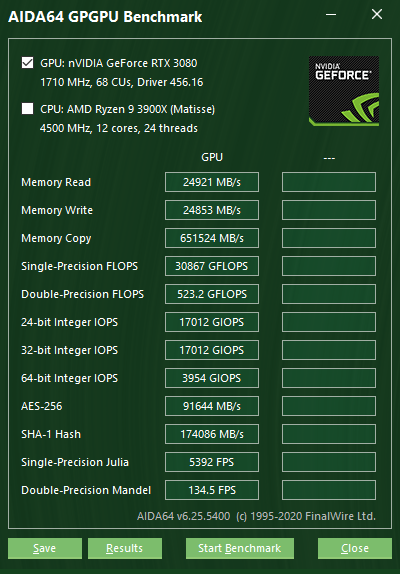

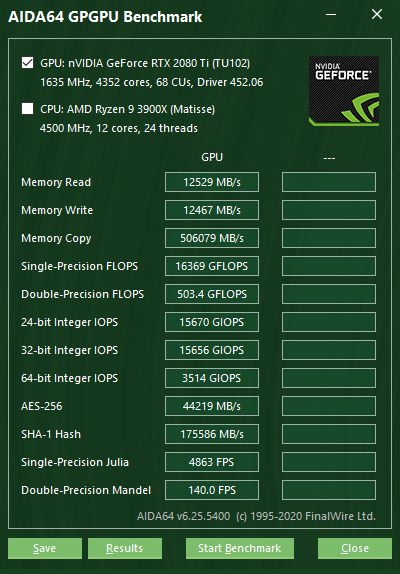

IPC benchmark

Founders Edition RTX 3080@2000MHz/19.8GT/s (792GB/s) [380W] vs MSI RTX 2080 Ti Gaming Z@2000MHz/18GT/s (792GB/s) [340W]

https://www.pcgameshardware.de/Gefo...Tests/Raytracing-Vergleich-DLSS-Test-1357418/
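The matching 792GB/s figures in the caption above follow from per-pin data rate times bus width. A minimal sketch, assuming a 320-bit bus for the RTX 3080 and a 352-bit bus for the RTX 2080 Ti (the published specs for those cards, not stated in the post); the function name is illustrative:

```python
def memory_bandwidth_gbs(data_rate_gtps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s from per-pin rate and bus width."""
    return data_rate_gtps * bus_width_bits / 8  # bits -> bytes


# Data rates are from the benchmark caption; bus widths are assumed specs.
cards = {
    "RTX 3080 @ 19.8 GT/s, 320-bit": (19.8, 320),
    "RTX 2080 Ti @ 18 GT/s, 352-bit": (18.0, 352),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(rate, width):.0f} GB/s")
```

So the test equalizes both core clock and memory bandwidth, which is what makes it a per-clock comparison rather than a stock vs stock one.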
