Nvidia GeForce RTX 50-series Blackwell reviews

- Thread starter Scott_Arm


DavidGraham

Veteran

The 5070Ti is the same level as a 4080 Super or 7900XTX.

www.techpowerup.com


MSI GeForce RTX 5070 Ti Ventus 3X OC Review - Beating RX 7900 XTX

NVIDIA has launched the GeForce RTX 5070 Ti today, lifting the review embargo. The MSI RTX 5070 Ti Ventus 3X comes at NVIDIA MSRP of $750, yet includes a small factory overclock. In terms of performance, the Ventus is able to compete with RTX 4080 Super and RX 7900 XTX.

DegustatoR

Legend

More reviews.

Seems like a solid if uneventful perf/price gain on this one.


The 5070Ti is the same level as a 4080 Super or 7900XTX.

If the prices come back down to earth 50 series may well be remembered as a decent refresh lineup. Not very exciting overall though.

DegustatoR

Legend

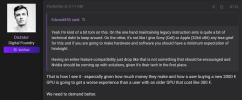

I guess you could call the results "interesting" in the sense that pretty much all of the benchmarks show where the card is math or bandwidth limited in comparison to the 4080/S.

In the first case it ends up being slower as you'd expect from TFs.

In the second - on par or even faster, again, as you'd expect from GB/s.

HUB managed to find a few games where the 5070 Ti was slower than the 4070 Ti Super. TPU had very different results. With the margins being so thin these discrepancies can significantly influence people’s perception depending on which reviews you consume.

Have they reported clocks on their tests? There could be variations which throw the results off by a few percent. I think the smallest gap between the 4070 Ti Super and 5070 Ti we got was 9%.

Edit: we got 8% in Assetto Corsa and STALKER 2, both at 4k.

At 1080p it was just 3-4%

One thing I always wonder is in these days of upscaling, should I be paying attention to the 4K numbers which usually show the biggest performance increase or the 1080p number which is the actual internal resolution (with DLSS performance) I'll likely be running most of the most demanding games at with a GPU like the 5070Ti.

Regarding the PhysX being deprecated on 50-series.. it sucks, but they've got to move on at some point, right? So long as all of these games can be played without GPU PhysX acceleration.. then I don't see any big issue. We actually need IHVs and APIs to start being a bit more aggressive in deprecating some of this old stuff that's either not worth supporting anymore or is actively holding back progress in other areas. There's lots of tech demos from the past which no longer work on modern hardware.. it's the price we pay to move on.

Hardware accelerated PhysX was in a small amount of games to begin with, and yes they are cool.. and yes it's sad to see support fade away for some of this stuff... but 40 series GPUs still have many years of support left. There'll be GPUs out there which can play this stuff for a long time yet. If the extra effects are THAT important.. just keep one of these GPUs around lol.


Flappy Pannus

Veteran

Hardware accelerated PhysX was in a small amount of games to begin with, and yes they are cool.. and yes it's sad to see support fade away for some of this stuff... but 40 series GPUs still have many years of support left. There'll be GPUs out there which can play this stuff for a long time yet.

The problem is if you're looking to upgrade. A 40 series is not an option right now as Nvidia stopped making them, so any Nvidia upgrade path for my 3060 means losing these effects. I was actually looking forward to a 4k 60+ playthrough of Arkham Knight with all Nvidia effects enabled when I get a new card, welp.

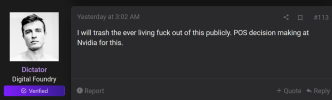

Secondly, while I don't think Nvidia exactly needed to call a press conference for this or anything, the way it wasn't mentioned until people started filing bug reports is really not the best way they could have handled this. I gotta think some kind of better compromise was possible when Nvidia saw the writing on the wall for 32 bit CUDA years ago instead of this sudden stealth deprecation.

Chances are this is not the last you'll hear of it.

I was actually looking forward to a 4k 60+ playthrough of Arkham Knight with all Nvidia effects enabled

Me too. I saw somewhere that Arkham Knight is on 64-bit PhysX libraries so should be safe. Glad I finished the earlier Batman games already.

Secondly, while I don't think Nvidia exactly needed to call a press conference for this or anything, the way it wasn't mentioned until people started filing bug reports is really not the best way they could have handled this. I gotta think some kind of better compromise was possible when Nvidia saw the writing on the wall for 32 bit CUDA instead of this stealth deprecation.

Yeah all they had to do is drop a sad face emoji and say they had no choice but to move on from legacy 32-bit CUDA. The silent sabotage is just asking for pitchforks.

Cynicalking

Regular

The problem is if you're looking to upgrade. A 40 series is not an option right now as Nvidia stopped making them, so any Nvidia upgrade path for my 3060 means losing these effects. I was actually looking forward to a 4k 60+ playthrough of Arkham Knight with all Nvidia effects enabled when I get a new card, welp.

I think Arkham Knight works, it's older PhysX titles that don't. From the 360/PS3 era.

Flappy Pannus

Veteran

I think Arkham Knight works, it's older PhysX titles that don't. From the 360/PS3 era.

Ah yeah that tracks, it's not 32 bit. Arkham City however.

One thing I always wonder is in these days of upscaling, should I be paying attention to the 4K numbers which usually show the biggest performance increase or the 1080p number which is the actual internal resolution (with DLSS performance) I'll likely be running most of the most demanding games at with a GPU like the 5070Ti.

1080p would be closer to the performance scaling you will get. As long as ray reconstruction isn’t used anyway.
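For reference, the internal-resolution point can be put into numbers. This is only a rough sketch: the per-axis scale factors below are the commonly cited DLSS preset values and are an assumption here (games can override them), and `internal_resolution` is a made-up helper name, not part of any Nvidia API.

```python
# Commonly cited per-axis render scales for DLSS presets (assumed values;
# individual games may use different ratios).
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(width, height, mode):
    """Return the approximate render resolution before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


# 4K output with DLSS Performance renders internally at 1080p.
print(internal_resolution(3840, 2160, "performance"))  # (1920, 1080)
```

So for a 4K display with DLSS Performance, the 1080p charts are probably the closer match for GPU load, with the upscaling pass itself adding some fixed cost on top.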


Regarding the PhysX being deprecated on 50-series.. it sucks, but they've got to move on at some point, right

…why? What is the actual reason we need to break backwards compatibility?

DegustatoR

Legend

…why? What is the actual reason we need to break backwards compatibility?

32 bit Windows are deprecated. 32 bit CUDA is deprecated. The API which is being used isn't available anymore.

32 bit Windows are deprecated. 32 bit CUDA is deprecated. The API which is being used isn't available anymore.

Except that it still works on every other NVIDIA card. 32-bit CUDA and PhysX didn't disappear anywhere, only RTX 50 support for them did.

32 bit Windows are deprecated. 32 bit CUDA is deprecated. The API which is being used isn't available anymore.

Last I checked 32bit applications still run on Windows.

DavidGraham

Veteran

Ah yeah that tracks, it's not 32 bit. Arkham City however.

Yeah, Arkham Knight works, any 64 bit game will still work fine. However 75% of PhysX games are 32 bit, so they are locked out.

I also remembered a very funny thing, there were two games that straight up relied on CUDA (not PhysX) to do some visual features .. Just Cause 2 used CUDA to render more realistic water and better depth of field, and NASCAR 14 used CUDA to render more realistic smoke and particles.

Both of these games are 32 bit, which means they won't be able to use these effects (they can't be run on the CPU), which is hilarious and disgusting at the same time!
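If you want to check whether a particular game falls on the 32 bit side of that split, one way is to read the machine type out of the executable's PE header. The sketch below is a minimal illustration, not an official tool: `pe_machine` and `exe_bitness` are hypothetical helper names, and it assumes the PE header sits within the first 4096 bytes of the file, which is typical but not guaranteed.

```python
import struct

# Machine field values from the PE/COFF file header.
MACHINE_NAMES = {0x014C: "32-bit (x86)", 0x8664: "64-bit (x64)"}


def pe_machine(data: bytes) -> str:
    """Classify a Windows executable from its leading header bytes."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ header)")
    # Offset 0x3C of the DOS header holds the file offset of the PE signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    # The 16-bit Machine field follows the 4-byte signature.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_NAMES.get(machine, f"unknown (0x{machine:04x})")


def exe_bitness(path):
    """Read the start of an .exe (assumed to contain the PE header) and classify it."""
    with open(path, "rb") as f:
        return pe_machine(f.read(4096))
```

Calling `exe_bitness` on a game's main .exe (the path is whatever your install uses) would report "32-bit (x86)" for the titles that lose GPU PhysX or CUDA effects on the 50 series, going by the discussion above.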

