RTX 3060 now holds the number one spot in the Steam Survey (desktop + laptop variant).

That's quite impressive; the 3060 is no slouch at all, especially considering that it sports a 12GB framebuffer.

Wot? It's not one of their uber high end models?!?

NVIDIA "unlaunches" RTX 4080 12 GB due to the name

If it was due to the name, they would rename it, not cancel it?

It takes time to rename something when you've made tons of cards with old names. If it was just stickers it would be quick, but they need to reflash every card too.

So what could be the real reason?

Nvidia's phrasing is "tens of thousands NVIDIA GPUs including A100 and upcoming H100 accelerators". So unless Tom's Hardware has some further information on this, it's not necessarily tens of thousands of A100 and H100, as some of the hardware could be something lower end.

NVIDIA, Oracle CEOs in Fireside Chat Light Pathways to Enterprise AI

In a fireside chat, Safra Catz and Jensen Huang discussed their expanding collaboration to speed adoption of enterprise AI. (blogs.nvidia.com)

If it truly is tens of thousands of those highest end accelerators it would be a huge amount. For context:

The partnership, which builds on earlier deployments, sets up Nvidia with the kind of infrastructure it requires to expand on a long-term goal to become a software powerhouse. It also gives Oracle’s cloud service the plug-and-play hardware capacity and software framework to easily deploy AI software.

...

The companies are “looking at the full stack so not just the GPUs and infrastructure but getting into the software layer, getting into the service layer,” Leung said.

Nvidia is known as a graphics chip company, but is betting its future on generating more revenue from software and services. The company is looking at a Netflix style subscription business model and charging customers when its software and hardware are used to create products.

...

The AI Enterprise offerings from Nvidia have so far been limited to a handful of virtual machine interfaces on Google Cloud, Microsoft Azure and Amazon Web Services, which have their own AI software offerings that are largely based on open-source tools. But Nvidia has found a full-stack partner in Oracle, which is willing to take on the graphics chip maker’s proprietary software stack for its cloud service.

...

Oracle customers can currently get clusters of 512 GPUs, and is adding tens of thousands of GPU capacity, Leung said. The GPUs and AI Enterprise software stack will sit on top of the core Oracle Cloud infrastructure, which includes bare metal compute, storage and networking hardware.

...

Nvidia’s aiming to provide software services as a subscription model, and the chipmaker declined to comment on whether it’ll get a cut from GPU instances on the Oracle Cloud.

Tom's article is just plain wrong. Both NVIDIA and Oracle say "tens of thousands GPUs including A100 and H100", not "tens of thousands of A100 and H100 GPUs"

They are not that wrong, the only AI data center GPU missing from that statement is the A30, so the correct statement should be A30, A100 and H100. What a massive difference!

It's not just for AI, it's "Accelerated computing and AI". Cloud services usually offer a wide variety of different hardware configurations for their clients to serve different needs efficiently (even in this day of GPUs supporting several clients at once), so why would Oracle be any different?

The other data center GPUs, the A40, A10, A16 and A2, are based on the GA102 and GA107 dies, and they are for visual computing and video processing, not AI.

Their main press release focused on AI: they integrated the whole NVIDIA AI software stack, AI hardware platforms, the whole shebang. I don't see any mention of visual computing and video processing in this release, and even if there were, these products are low volume anyway. The bulk of the GPUs is most definitely going to be the A30, A100 and H100, you know, the products directly aimed at serving AI. They even stated they are using A100 for data processing.

With the full NVIDIA AI platforms available on OCI instances, the extended partnership is designed to accelerate AI-powered innovation for a broad range of industries to better serve customers

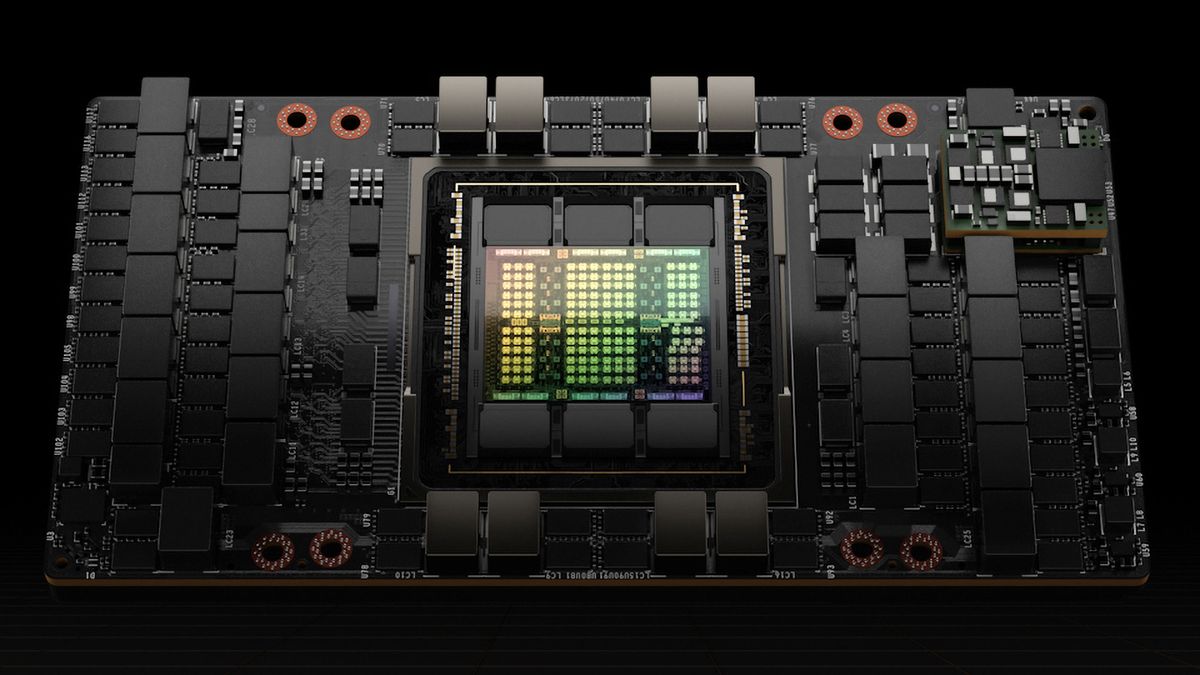

Data processing is one of the top cloud computing workloads. To support this demand, OCI Data Science plans to offer support for OCI bare metal shapes, including BM.GPU.GM4.8 with NVIDIA A100 Tensor Core GPUs across managed notebook sessions, jobs, and model deployment.

www.nextplatform.com

Oracle is adding tens of thousands of Nvidia “Ampere” A100 and “Hopper” H100 GPU accelerators to its infrastructure and is also licensing the complete Nvidia AI Enterprise stack so its database and application customers – and there are a lot of them as you see above – can seamlessly access AI training and inference if they move their applications to OCI. At the moment OCI GPU clusters still top out at 512 GPUs, according to Leo Leung, who is vice president of products and strategy for OCI and who has very long experience in cloud stuff with Oracle, Oxygen Cloud, Scality, and EMC.

To us, it looks like Oracle is adding capacity, meaning more GPU clusters, not scaling out its GPU clusters to have thousands or tens of thousands of GPUs in a single instance for running absurdly large workloads with hundreds of billions of parameters. Leung says that the typical OCI customers are still only wrangling tens of billions of parameters, so the scale OCI is offering is probably sufficient.
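For a rough sense of scale, here is a back-of-the-envelope sketch of how many 512-GPU clusters a given GPU count works out to. The 512 ceiling and the 10,000 figure come from the article; the 20,000 and 50,000 totals are purely hypothetical stand-ins for "tens of thousands", and the function name is made up for illustration.

```python
CLUSTER_SIZE = 512  # current OCI cluster ceiling, per the article


def clusters_needed(total_gpus: int, cluster_size: int = CLUSTER_SIZE) -> tuple[int, int]:
    """Return (full clusters, leftover GPUs) for a given GPU count."""
    return divmod(total_gpus, cluster_size)


# 10,000 is the article's figure; 20,000 and 50,000 are hypothetical totals,
# since the source only says "tens of thousands".
for total in (10_000, 20_000, 50_000):
    full, leftover = clusters_needed(total)
    print(f"{total:>6} GPUs = {full} full clusters of {CLUSTER_SIZE}, {leftover} left over")
```

So even at the low end, "tens of thousands" would mean dozens of clusters at today's 512-GPU size, which fits the reading that Oracle is adding capacity rather than building one giant cluster.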

Leung was mum about when – or if – the NeMo LLM large language model service that Nvidia just announced at the fall GTC 2022 conference last month might be integrated into OCI, but we reckon that Oracle would rather not have a service running on AWS or Google Cloud or Microsoft Azure (where presumably these cloud LLMs run) linked to services running on OCI. And that means Oracle will eventually have to have enough GPU cluster scale to run the NeMo Megatron 530B model internally. That right there is 10,000 or more GPUs. So Oracle saying it is adding “tens of thousands” more GPUs is, well, a good start.
