Megadrive1988

Veteran

Biggest question on my mind:

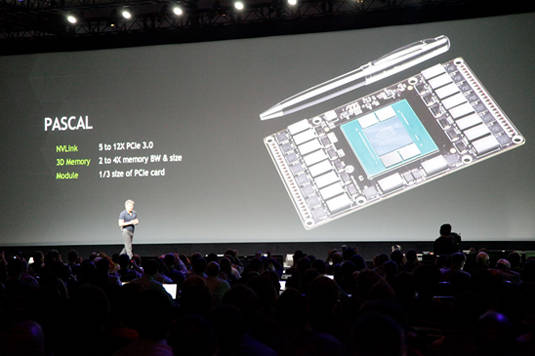

With Pascal probably becoming a reality in 2017 for Nvidia's GeForce gaming products, will AMD's equivalent Radeon GPUs (and APUs) with stacked DRAM, ( be it 2.5D or 3D stacking) likely to be also implemented in the semi custom APUs for the future next generation consoles in 2018/2019, assuming AMD gets those console design wins?

With Pascal probably becoming a reality in 2017 for Nvidia's GeForce gaming products, will AMD's equivalent Radeon GPUs (and APUs) with stacked DRAM, ( be it 2.5D or 3D stacking) likely to be also implemented in the semi custom APUs for the future next generation consoles in 2018/2019, assuming AMD gets those console design wins?