Both. According to a few reports from around the world, the number of cards they're getting is quite limited.

Nominal voltage is 0.8V, for crying out loud!

You can hear the tires of those electrons screeching as they slam on the brakes, trying to take a corner without slamming into a wall of gate oxide. (That's been my mental image since I learned about electromigration a good 25 years ago. Not sure if it's totally accurate.)

High demand?

I doubt that demand is so high that "it surpassed our expectations and we ran out of stock." Also, there are only a few models on sale right now... around 10 on Amazon and 7 on Newegg.

One thing I don't understand is why sellers think they will sell a 980 Ti for 750 dollars or a Titan X for 1200...

It looks like people are trying to find the point, past the end of AMD's fmax/power graph, where FinFET was starting to go horizontal.

Armchair scaling speculation: higher voltage tends to improve transistor switching frequency simply by providing more drive current to swing past thresholds faster (at the expense of extra wattage). If higher voltage does not raise the upper stable clock of a design, the next most likely bottleneck may be an interconnect signal-speed limitation. TSMC's 16nm process changed the transistors to FinFET, but the interconnect (BEOL) is still mostly carried over from TSMC's 20nm node. TSMC's 10nm node (already in test production) is something of a process tick-tock: it updates the interconnect (finer metal lithography, a local interconnect layer, and possibly air gaps) with only minor tweaks to the transistor devices. So if interconnect is holding back GPU clock rates now, the 10nm node may lift that limit.

Intel's 14nm process has, by far, the most advanced interconnect, including literal air gaps within key layers of metal specifically to reduce capacitance and boost signal frequencies.
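To make the voltage-versus-clock speculation above a bit more concrete, here is a toy model (not TSMC, AMD or Intel data). It assumes gate delay follows the alpha-power law (drive current roughly proportional to (V - Vt)^alpha), lumps the interconnect into a fixed, voltage-independent wire delay, and takes dynamic power as C * V^2 * f. Every constant and function name is a made-up illustrative value. Raising the voltage keeps shrinking the transistor term, but once the wire term dominates, fmax flattens while power keeps climbing.

```python
# Toy model: all constants below are hypothetical, chosen only for illustration.
V_T = 0.35      # threshold voltage (V)
ALPHA = 1.3     # alpha-power-law exponent, usually somewhere between 1 and 2
K_GATE = 0.1    # gate-delay scale factor (arbitrary units, yields ns)
WIRE_NS = 0.4   # voltage-independent interconnect delay on the critical path (ns)
C_EFF = 1.0     # effective switched capacitance (arbitrary units)


def gate_delay_ns(v: float) -> float:
    """Alpha-power law: delay ~ C*V / I_on, with I_on ~ (V - Vt)**alpha."""
    return K_GATE * v / (v - V_T) ** ALPHA


def fmax_ghz(v: float) -> float:
    """Critical path = transistor delay + wire delay; fmax is its inverse."""
    return 1.0 / (gate_delay_ns(v) + WIRE_NS)


def dynamic_power(v: float) -> float:
    """Dynamic power ~ C * V^2 * f, in arbitrary units."""
    return C_EFF * v * v * fmax_ghz(v)


for volts in (0.8, 0.9, 1.0, 1.1, 1.2):
    print(f"{volts:.1f} V: fmax {fmax_ghz(volts):.2f} GHz, power {dynamic_power(volts):.2f}")
```

In this model fmax can never exceed 1 / WIRE_NS no matter how much voltage is applied, which is the "graph going horizontal" shape; a node that cuts the wire delay (as speculated for 10nm) raises that ceiling instead.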

Interconnect delay is a definite factor, since the higher resistance of thinner wires cancels out the benefit of shorter lengths, but it is also one that has been a problem for many nodes now.
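A first-order sketch of the wire-resistance point, assuming a simple wire of length L, width W, thickness T and resistivity ρ, with capacitance proportional to its length and to the dielectric constant ε (g is a dimensionless geometry factor; fringing details are ignored):

```latex
R = \frac{\rho L}{W T}, \qquad C \approx \varepsilon\, g\, L
\;\Rightarrow\;
\tau_{\text{wire}} \propto RC = \frac{\rho\, \varepsilon\, g\, L^{2}}{W T}

L \to sL,\; W \to sW,\; T \to sT \quad (s < 1)
\;\Rightarrow\;
\frac{\rho\, \varepsilon\, g\, s^{2} L^{2}}{(sW)(sT)} = \frac{\rho\, \varepsilon\, g\, L^{2}}{W T}
```

So a local wire that shrinks along with everything else gets no faster, a wire whose length does not shrink gets slower as 1/s², and in practice ρ itself rises in very thin copper lines. Lowering ε (low-k dielectrics, or air gaps like those in Intel's 14nm metal layers) attacks the capacitance term directly.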

That has partly enabled the apparent magnitude of the improvement in the transition to FinFET, since FinFETs actually have drawbacks versus planar at the higher voltages and switching speeds, which aren't particularly practical anyway due to power and interconnect delay.

They have superior control over the channel, but at the same time there is simply more gate that needs to switch and multiple other considerations that are far beyond my education in the matter.

Some articles on the transition even mentioned that the theoretical drive strength of the new FinFET foundry nodes could exceed what their contacts can handle, and that the long-term degradation of the interconnect and of transistor behavior under stress has worsened.

Overclockers are complaining that they aren't being allowed to push potential suicide run voltages.

At the finer geometries, an increasing amount of tuning circuitry, more complex cells, and lifespan management are being put into SRAM due to variation and a heightened rate of device degradation, which might explain some of the higher transistor count versus prior-generation chips with more resources on paper. That's probably not unique to FinFET, though.

This is interesting. Will we eventually see lifespan ratings on our GPUs and CPUs like we have on SSDs? "This GPU is rated for 1,000,000 billion cycles."

The devices do have design lifespans; they just have to work harder to maintain them.

I thought maybe a decade or more was the goal.

Even if not for electromigration or transistor degradation due to use, there's degradation of the packaging, solder failures, and other gradual processes of chemical and physical aging.

The silicon-level sources of degradation have sped up enough that it is no longer taken for granted that outside factors would kill the chip first.
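For a rough feel of why current density and temperature matter for the electromigration part of this, Black's equation models median time to failure of a wire as MTTF = A * J^(-n) * exp(Ea / (k * T)). The sketch below only compares two operating points, so the unknown prefactor A cancels. The exponent n = 2, the activation energy Ea = 0.9 eV, the helper name `black_mttf_ratio`, and the "20% more current, 15 °C hotter" overclock are all assumed illustrative values, not data for any real GPU.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def black_mttf_ratio(j_ratio: float, t_base_c: float, t_new_c: float,
                     n: float = 2.0, ea_ev: float = 0.9) -> float:
    """Return MTTF_new / MTTF_base from Black's equation.

    j_ratio is J_new / J_base (current density ratio); temperatures are in
    degrees Celsius. n and ea_ev are assumed, not measured, parameters.
    """
    t_base = t_base_c + 273.15
    t_new = t_new_c + 273.15
    current_term = j_ratio ** (-n)  # more current density -> shorter life
    thermal_term = math.exp(ea_ev / BOLTZMANN_EV * (1.0 / t_new - 1.0 / t_base))
    return current_term * thermal_term


# Hypothetical overclock: 20% more current density and a die 15 C hotter.
ratio = black_mttf_ratio(1.2, 70.0, 85.0)
print(f"{ratio:.2f}x the stock electromigration lifetime")
```

Both terms compound, which is one way to read why vendors are cautious about letting overclockers push voltage (and therefore current and heat) much past stock.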

Sometimes actually being available to purchase is the most important thing. I doubt they're moving significant GM200 volumes, but someone is still buying, or else the price would have dropped already.

Yes, actually... I don't know who is buying the 980 Ti for 750 when you can get the 1080 for 700 once it's available... Really hard to know without actual information from their sales departments.

Deleted member 2197

Guest

This card is in a weird spot right now. It's probably the equivalent of the 480 in terms of performance, but I'm sure Nvidia doesn't want to price it anywhere near $199, as that would create a huge gap between the 1070 and whatever they end up calling this. I'm guessing $249 is the lowest this thing will go for, but I may be wrong.

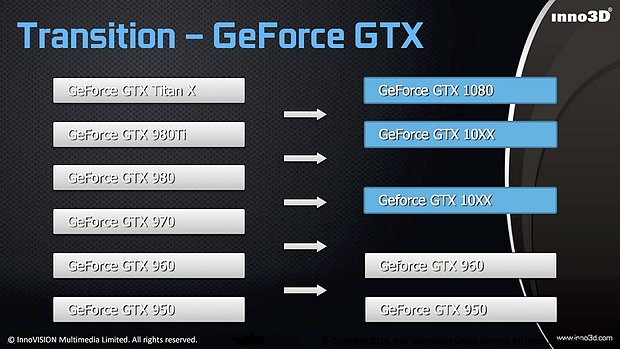

GM204/200 have apparently been discontinued for 2 months already, and retailers are just clearing inventory.

http://techfrag.com/2016/04/18/nvid...-geforce-gtx-980ti-980-970-series-production/

Even though this is coming up in the AMD 480 thread, I feel it is also very relevant here.

It seems AoTS is using an fp16 pipe as part of its calculations, and they feel this is where the current rendering anomaly comes from.

Now, with the changes to the 1080, is it possible AoTS is not playing nice with the mixed-precision fp32 CUDA core, or is there maybe some issue with how Nvidia reduced fp16...

Also, I am surprised no publication has picked up on the fp16 computation pipe in AoTS before.

https://www.reddit.com/r/pcgaming/c...ng_the_aots_image_quality_controversy/d3t9ml4

We (Stardock/Oxide) are looking into whether someone has reduced the precision on the new FP16 pipe.

Both AMD and NV have access to the Ashes source base. Once we obtain the new cards, we can evaluate whether someone is trying to modify the game behavior (i.e. reduce visual quality) to get a higher score.

In the meantime, taking side by side screenshots and videos will help ensure GPU developers are dissuaded from trying to boost numbers at the cost of visuals.

I think Stardock is wrong to say, without any evidence, that this is just about getting a higher score. I'm surprised they are coming out right now and saying that, and of course they would imply this is Nvidia with the 1080.

The issue does not happen with the 980 Ti (I already asked someone to try it), so if it were a deliberate attempt to get a higher score, we would also see it with Maxwell 2 cards.

Kind of a disappointing statement (and I feel there is still animosity between Stardock and Nvidia), but I am digressing from the point of the 1080/Pascal and how fp16 is now handled.

Cheers

Ext3h

Regular

It's not necessarily an implementation detail that is flawed here, but something else.

A problem with fp16 is that you have very little precision available to begin with. When working with any type of floating-point numbers, you have to be aware that you can't just apply the rules of basic algebra and hope to get the same results: the order of operations, how you fold sums, etc. decide how much precision you actually lose. Multiplication and division are comparatively safe in this respect, since each one only introduces a small relative rounding error, but for simple additions, especially when the exponents differ, minimizing the error isn't trivial.
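A small illustration of the summation-order point, emulated on the CPU: Python's struct module can round a value to IEEE 754 binary16, so rounding after every add mimics fp16 accumulation (this says nothing about how any particular GPU implements it, and the helper names and values are hypothetical). At 2048 the spacing between adjacent fp16 values is 2, so adding 1.0 lands exactly halfway and round-to-nearest-even sends it back to 2048.

```python
import struct


def to_half(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 binary16 (fp16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_sum(values):
    """Sum left to right, rounding the running total to fp16 after every add."""
    acc = 0.0
    for v in values:
        acc = to_half(acc + to_half(v))
    return acc


big_first = [2048.0] + [1.0] * 8    # large term first: each +1 is rounded away
small_first = [1.0] * 8 + [2048.0]  # small terms combine before meeting the large one

print(fp16_sum(big_first), fp16_sum(small_first), sum(big_first))  # 2048.0 2056.0 2056.0
```

Same numbers, same operation, different fold order: one ordering loses all eight small terms, the other gets the exact answer.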

So even an overeager compiler optimization (or the lack of one!) can result in a huge error. It is much less noticeable with fp32, since the mantissa is long enough that rounding errors don't show up so easily. With fp16, the compiler has to stick to the algorithm dictated by the programmer and must not attempt to reorder operations arbitrarily.
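The same kind of CPU emulation shows why reassociation matters. Algebraically, (a - c) + b and (a + b) - c are identical, which is exactly the kind of rewrite an aggressive compiler might make, but in fp16 they can differ. The helpers `h_add`/`h_sub` and the input values are hypothetical, picked to sit at the 2048 boundary where fp16 spacing becomes 2.

```python
import struct


def to_half(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 binary16 (fp16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def h_add(x: float, y: float) -> float:
    """fp16 add: the double-precision sum of two fp16 values is exact, then rounded once."""
    return to_half(to_half(x) + to_half(y))


def h_sub(x: float, y: float) -> float:
    """fp16 subtract, rounded the same way."""
    return to_half(to_half(x) - to_half(y))


a, b, c = 2048.0, 1.0, 2048.0  # hypothetical shader values

as_written = h_add(h_sub(a, c), b)  # (a - c) + b: cancellation first, then the small term
reordered = h_sub(h_add(a, b), c)   # (a + b) - c: b is rounded away before the cancellation

print(as_written, reordered)  # 1.0 0.0
```

With fp32 the same reordering would be harmless for these values, because 2049 is exactly representable there.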

Also worth bearing in mind: it is working fine on Maxwell 2. This was checked for me the other day by someone with their 980 Ti.

Shame we have no details regarding how FP16 operates on the 1080.

Cheers

Ext3h

Regular

That could possibly indicate that the shader code actually compiles to FP32 math on Maxwell 2, with conversions only on load/store. If the 1080 does support fp16 natively (even if 2xfp16 isn't running at full rate), that could expose a precision loss caused by instruction reordering in the compiler.
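A sketch of that hypothesis under stated assumptions: one path widens fp16 inputs, does the arithmetic in fp32 and rounds to fp16 only on store (the suspected Maxwell 2 behavior); the other rounds to fp16 after every operation (what native fp16 math would do). This is CPU emulation via Python's struct module, not a model of what either GPU's compiler actually emits, and the function names and input values are hypothetical.

```python
import struct


def to_half(x: float) -> float:
    """Round to the nearest IEEE 754 binary16 value (fp16 load/store)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_single(x: float) -> float:
    """Round to the nearest IEEE 754 binary32 value (fp32 register math)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def fp32_math_fp16_storage(values):
    """Load fp16, accumulate in fp32, round to fp16 once on store."""
    acc = 0.0
    for v in values:
        acc = to_single(acc + to_half(v))
    return to_half(acc)


def native_fp16_math(values):
    """Round the running total to fp16 after every addition."""
    acc = 0.0
    for v in values:
        acc = to_half(acc + to_half(v))
    return acc


inputs = [2048.0] + [1.0] * 8  # hypothetical: one large term followed by small ones

print(fp32_math_fp16_storage(inputs), native_fp16_math(inputs))  # 2056.0 2048.0
```

The same shader arithmetic gives the "correct" result when only storage is fp16 and a visibly different one when every intermediate is fp16, which is the kind of divergence that could show up on one architecture and not the other.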

Agreed, and it's just one possibility, which is why I raised it the way I did. There could also be implications in how they implemented this on the 1080 compared to Tesla P100.

Fingers crossed this AoTS issue will force more details about the way the 1080 operates to be released, although unfortunately it does seem Nvidia goes out of its way to ignore Stardock/AoTS, so we may just be stuck with hypotheticals.

Cheers

Note that NVIDIA just updated Quadro M6000 and Tesla M40 to 24GB barely two months ago. They may be winding down GeForce production, but NVIDIA is going to be minting GM200 for some time to come.
