Latency comparisons between the same game using the DLSS 2 path on Ampere and the DLSS 3 path on Ada will be interesting, especially on similarly powerful GPUs like the 4080 12GB vs the 3090 Ti.


Nvidia DLSS 3 antialiasing discussion

- Thread starter TopSpoiler


hurleybird

Newcomer

Where did you find this out?

I'm willing to give Nvidia the benefit of the doubt that DLSS 3 frame insertion latency penalty is negligible, but I'm very curious about behavior.

Even assuming the latency penalty is nil, you aren't running the game's input loop on an inserted frame. So what happens when you hit a frame cap? Does DLSS 3 still insert frames, optimizing efficiency at the expense of input latency, or does it stop inserting frames, optimizing input latency at the expense of efficiency?

Worst-case scenario: it always inserts frames when up against a cap, and frame insertion can't be disabled on a 40-series card while running a DLSS 3 title. Best-case scenario: the user has explicit control over whether to insert frames when running up against a framerate cap.

It can't be better, but I also doubt it is significantly worse. All it should need is a single frame rendered ahead, plus the two previous frames for velocity and acceleration respectively.
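The "two previous frames for velocity and acceleration" idea can be illustrated with a quadratic fit through three evenly spaced samples of one screen coordinate. This is a minimal sketch of that reasoning only, not a description of how DLSS 3 actually generates frames; the function name `extrapolate` and the half-frame default are hypothetical. The last call shows the kind of misprediction that causes artifacts when motion changes abruptly.

```python
def extrapolate(p_prev2: float, p_prev1: float, p_curr: float, t: float = 0.5) -> float:
    """Predict a coordinate t frame intervals past the current frame.

    Hypothetical illustration: fits the quadratic through samples at frames
    -2, -1 and 0, so constant-velocity and constant-acceleration motion are
    predicted exactly. Not NVIDIA's algorithm.
    """
    # Second-order backward difference: velocity at frame 0.
    velocity = (3 * p_curr - 4 * p_prev1 + p_prev2) / 2
    # Second difference: constant acceleration over the three frames.
    accel = p_curr - 2 * p_prev1 + p_prev2
    return p_curr + velocity * t + 0.5 * accel * t * t


print(extrapolate(-20.0, -10.0, 0.0))  # 5.0 (constant velocity)
print(extrapolate(4.0, 1.0, 0.0))      # 0.25 (constant acceleration)
print(extrapolate(0.0, 10.0, 10.0))    # 6.25: the object stopped, but the prediction swings backwards
```

The third case is the "dramatic change" problem: the fit has no way to know the object stopped, so falling back to the latest real frame would be the safer choice there.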

Of course, 1 frame will be more than 0, but in my experience 0 has never been worth it due to the performance hit and unstable framerate.

The only downside I can see is the possibility of some visual artifacts with dramatic changes, but it should be able to learn to just go with the latest frame in that case.


More confirmation that the optical flow accelerator has been upgraded and is necessary for DLSS 3.

Frenetic Pony

Veteran

Not sold on this until we see input lag tests. The tech is obviously impressive, though; I'm just not sure how beneficial it's going to be for games.

There's no input improvement for animations, and possibly not for camera movement either, though I don't know about that. It just takes the current motion vectors and "moves all the pixels to what it expects the next frame to look like", hoping that the way it moves things forward is actually correct, so expect more of those weird TAA-upscaler visual artifacts as well. Technically you can sample the next controller input for the camera, and hopefully they've figured that out at least, but it doesn't necessarily work well, so expect more artifacts if they added that.
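"Moving all the pixels" along motion vectors can be sketched as a naive forward warp of a single row of pixels. This is a toy under stated assumptions (1D row, nearest-pixel splatting, no depth test; `forward_warp_row` is a hypothetical name), not what DLSS 3 does, but it shows where the artifacts come from: holes where nothing lands, and foreground pixels overwritten when two pixels collide.

```python
import math
from typing import List, Optional


def forward_warp_row(row: List[float], motion: List[float], t: float = 0.5) -> List[Optional[float]]:
    """Move each pixel t of the way along its per-frame motion vector.

    Hypothetical toy: pixels are splatted to the nearest integer position,
    later pixels overwrite earlier ones on collision (no depth test), and
    positions nothing lands on stay None (disocclusion holes).
    """
    out: List[Optional[float]] = [None] * len(row)
    for i, (value, mv) in enumerate(zip(row, motion)):
        target = math.floor(i + mv * t + 0.5)  # round half up to nearest pixel
        if 0 <= target < len(row):
            out[target] = value
    return out


# Pixels 2 and 3 move right by 2 px per frame; the rest are static background.
print(forward_warp_row([1, 2, 3, 4, 5], [0, 0, 2, 2, 0]))
# [1, 2, None, 3, 5]: a hole at index 2, and the moving pixel "4" was overwritten
```

A real implementation would need depth ordering and hole filling, which is exactly where guessed motion tends to produce visible errors.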

Either way, I expect AMD and Intel to follow quickly to keep up with the Joneses, whether it works well or not. What's disappointing is Nvidia locking it to the new generation of cards. There's nothing special there, so why are you screwing over your own customers?


So they can use DLSS 2.0 vs DLSS 3.0 in their graphs and claim a 4x improvement to justify the stupid prices, of course.

Although it's possible the new frame generation requires some hardware acceleration that isn't available on Ampere/Turing. The way they obfuscate the performance data doesn't look good at all, though, but that's typical Nvidia.

DegustatoR

Legend

I suspect that Reflex became a part of the DLSS3 SDK for a reason.


On the other hand, look at the new iPhone prices Apple has set, especially in Europe. Not much of a performance increase, or much of anything really.

DavidGraham

Veteran

I wonder when Nvidia will start requiring developers to only support DLSS3 instead of both.

Any DLSS3 game is a DLSS2 game by default: Ada just enables Frame Generation on top of DLSS2, which NVIDIA calls DLSS3.

The new HW optical flow accelerator is needed for DLSS3.


Right, Turing and Ampere tensor hardware is not going to be 'unused' in the future.

DavidGraham

Veteran

They clearly stated it needs the more capable Optical Flow hardware in Ada.

If you look at the Optical Flow SDK, you will see that the pixel granularity of Ampere is finer than Turing's, and Ada's should be finer still. Also, high fps at 4K resolution demands higher clocks; the combination of these and other factors makes DLSS3 feasible only on Ada, for now at least.
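To put the granularity point in numbers: with an N×N output grid, each flow vector covers N×N pixels, so finer grids multiply the work per frame. A minimal arithmetic sketch; the grid sizes 4, 2 and 1 are illustrative assumptions here, not a claim about which GPU supports which mode, and `flow_vectors_per_second` is a hypothetical helper.

```python
def flow_vectors_per_second(width: int, height: int, grid: int, fps: float) -> float:
    """Flow vectors produced per second for a grid x grid output granularity.

    Hypothetical helper: one vector per grid*grid block of pixels.
    """
    vectors_per_frame = (width // grid) * (height // grid)
    return vectors_per_frame * fps


# Per-frame vector counts at 4K (3840x2160) for a few illustrative grid sizes.
for grid in (4, 2, 1):
    print(grid, flow_vectors_per_second(3840, 2160, grid, fps=1))
# 4 518400
# 2 2073600
# 1 8294400
```

Going from a 4×4 grid to per-pixel flow is a 16× jump in output, and that has to be repeated for every rendered frame at high frame rates.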

Optical Flow SDK - Developer Program

It is entirely separate from the motion vectors needed for DLSS2; the link mentions that optical flow is better for effects, while motion vectors are better for geometry. It might not be as good without them, though, like the TAA implemented in one of the ReShade shaders, which tried to work without motion vectors but ended up just blurring the image in most cases.
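The difference is that optical flow is estimated from the images alone, while engine motion vectors come straight from the game. Exhaustive block matching is one classic way to estimate flow; the 1D sketch below is that textbook technique, not the algorithm in NVIDIA's hardware, and `block_match_1d` is a hypothetical name. Note that flat, textureless blocks give no real information, which is one reason image-only approaches can fall back to blurring or wrong motion.

```python
from typing import List


def block_match_1d(prev: List[float], curr: List[float], block: int, search: int) -> List[int]:
    """Estimate per-block displacement from prev to curr by exhaustive SAD search.

    Returns one integer shift per block: the d in [-search, search] that
    minimizes sum |prev[x] - curr[x + d]| over the block. Candidates are
    tried in order of increasing |d|, so ties (e.g. flat regions) resolve
    to the smallest shift. Out-of-frame candidates are skipped.
    """
    candidates = sorted(range(-search, search + 1), key=abs)
    flows = []
    for start in range(0, len(prev) - block + 1, block):
        best_d, best_cost = 0, float("inf")
        for d in candidates:
            if start + d < 0 or start + d + block > len(curr):
                continue
            cost = sum(abs(prev[start + k] - curr[start + d + k]) for k in range(block))
            if cost < best_cost:
                best_d, best_cost = d, cost
        flows.append(best_d)
    return flows


prev = [0, 0, 0, 0, 9, 8, 0, 0, 0, 0, 0, 0]
curr = [0, 0, 0, 0, 0, 0, 9, 8, 0, 0, 0, 0]  # the feature moved right by 2 px
print(block_match_1d(prev, curr, block=4, search=3))  # [0, 2, 0]
```

Only the textured block recovers the true motion; the flat blocks report 0 simply because nothing distinguishes one shift from another.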

edit: from the Ada thread, it should be quite good even as a standalone.

Uh... when will VLC, Plex, etc. incorporate optical flow? Or will it work as an Nvidia Control Panel setting override?

Goodbye trashy TV motion interpolation, goodbye heavy interpolation via SVP

Indeed, why haven't all the high-quality offline frame generation models been ported to games yet, if it's so easy and costs no performance (judging by some comments)? Anybody can do this!

Look at it this way: it's on average a little over twice as bad as turning on vsync.

DegustatoR

Legend

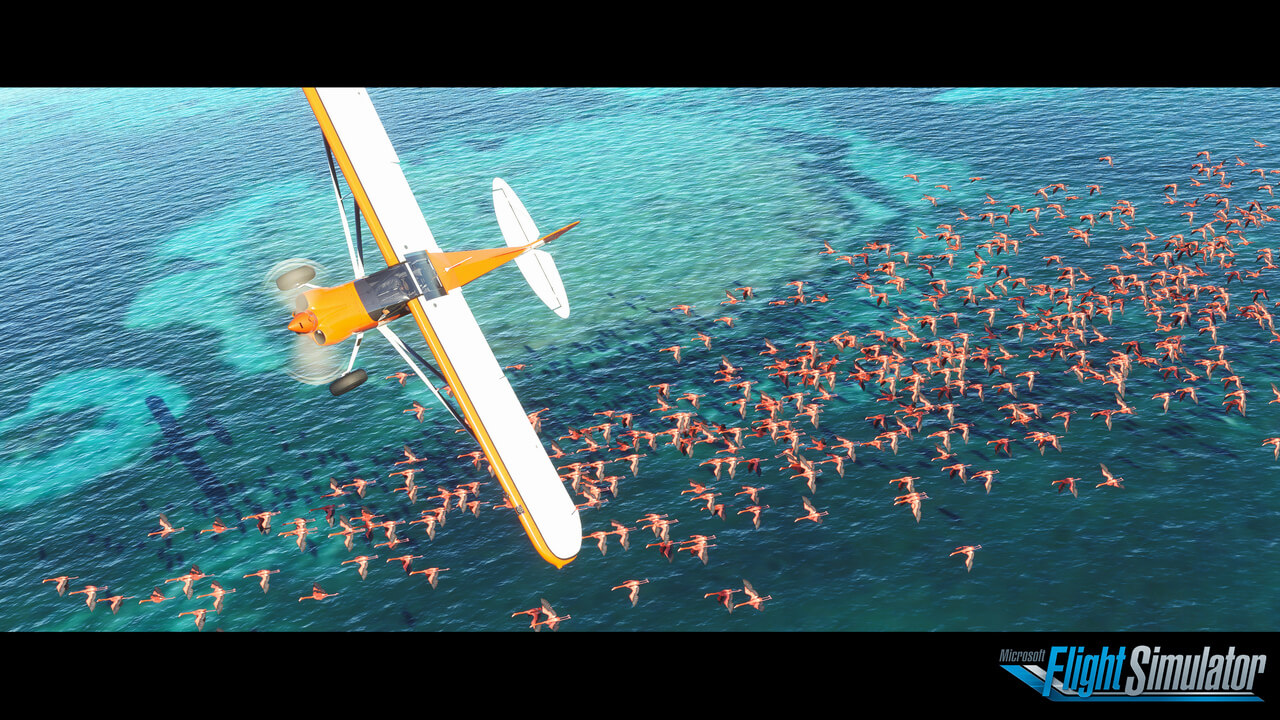

Microsoft Flight Simulator Update 10 released, adds DLSS support, improves DX12, full patch notes

Microsoft Flight Simulator's Update 10 adds support for NVIDIA's DLSS tech and brings a number of DX12 improvements.

www.dsogaming.com


Nah, you needed the buffering with vsync anyway. The real issue is that the additional frames will make the most difference when the frame rate is fairly low, which is exactly when they won't do anything to help responsiveness. It's shady as hell to offer benchmarks like that, too. If AMD can offer good RT performance, they get my business.

My personal take is that a number of different factors make DLSS3 very interesting:

- CPU + memory scaling
- motion improvement/judder reduction
- motion blur reduction
- input latency
- image quality

DLSS3 basically addresses the first three at the expense of the last two. I'll use COD Warzone as the example, because it's popular and incredibly demanding. To get 200+ fps consistently at 1080p, you need something like an overclocked 12900K with the ring bus and DDR4 (Samsung B-die only) pushed to the absolute limit, and probably an overclocked 3090. On top of that, you need pretty much every in-game setting on low. The cost of that computer is well out of reach for a lot of people, and all that overclocking takes skill, time, and patience. On top of that, DDR5 is the next memory spec for gaming, and so far it's very expensive and actually not as good for gaming overall because of its higher latency.

There is a push toward 1000Hz over time, because at that refresh rate motion blur on sample-and-hold displays will effectively be eliminated. On top of that, the reduction of judder when panning will create an incredibly lifelike image. There is no practical way to get there by drawing "native" frames, given how hardware scales over time, especially as rendering requirements increase with ray tracing etc. What will get us there is some form of frame construction that doesn't require rendering complete frames, or rendering every frame.
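The 1000Hz argument comes from the Blur Busters Law linked in this thread: on a sample-and-hold display, 1 ms of persistence gives roughly 1 pixel of motion blur per 1000 pixels/second of motion. A minimal sketch, assuming full persistence (no strobing) and a unique frame on every refresh, which is the part frame generation is meant to supply; `motion_blur_px` is a hypothetical helper.

```python
def motion_blur_px(refresh_hz: float, speed_px_per_s: float) -> float:
    """Approximate eye-tracking motion blur in pixels (Blur Busters Law).

    Assumes full persistence: each unique frame is held for the whole
    refresh interval, and every refresh shows a new frame.
    """
    persistence_s = 1.0 / refresh_hz
    return persistence_s * speed_px_per_s


# Blur for an object panning at 1000 px/s.
for hz in (60, 120, 240, 1000):
    print(hz, round(motion_blur_px(hz, 1000), 2))
# 60 16.67
# 120 8.33
# 240 4.17
# 1000 1.0
```

If the GPU can't render a unique frame for every refresh, repeated frames hold the image longer and the blur comes back, which is why generated frames matter for this goal.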

The compromise is image quality and input latency. We'll have to see how bad the compromises are, and there clearly are some, but overall this is the direction gaming hardware has to take. RTX 40 series may not be the best example, and there will probably be good reasons to turn DLSS3 off in particular games, or even in all of them. My guess is that the intent is 240 fps with 120 fps input lag, or 480 fps with 240 fps input lag, to match the current top-of-the-line displays. 60 to 120 could be pretty good, but I think 30 to 60 is probably going to be trash. I also wonder whether games built to support DLSS3.0 from the ground up will have higher quality than the ones that get support added after release. I really don't know how involved or complex integrating the new optical frame generation component is.
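The "240 fps with 120 fps input lag" guess is simple arithmetic if you assume one generated frame per rendered frame and input sampled only on rendered frames. A minimal sketch under those assumptions; it ignores the extra delay of holding back a rendered frame for interpolation, so real latency would be somewhat worse. `frame_generation_rates` is a hypothetical helper.

```python
def frame_generation_rates(native_fps: float, generated_per_native: int = 1) -> dict:
    """Displayed frame rate vs input-sampling interval with frame generation.

    Assumes each rendered frame is followed by `generated_per_native`
    generated frames and that game input is sampled only on rendered frames.
    Ignores the hold-back latency needed to interpolate.
    """
    displayed_fps = native_fps * (1 + generated_per_native)
    return {
        "displayed_fps": displayed_fps,
        "displayed_frame_ms": 1000.0 / displayed_fps,
        "input_interval_ms": 1000.0 / native_fps,
    }


for native in (30, 60, 120, 240):
    r = frame_generation_rates(native)
    print(native, r["displayed_fps"], round(r["input_interval_ms"], 1))
# 30 60 33.3
# 60 120 16.7
# 120 240 8.3
# 240 480 4.2
```

At a 30 fps base the game still only reacts every ~33 ms even though the display shows 60 fps, which is why 30 to 60 looks like the weakest case.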

They have to start somewhere. You need D3D2 to get to D3D7. You need D3D7 to get to D3D12. You need TAA to get to DLSS/FSR2. DXR1 will lead to DXR2. DLSS3 is a first step to get to a better version of the same thing.

blurbusters.com


Blur Busters Law: The Amazing Journey To Future 1000Hz Displays - Blur Busters

A Blur Busters Holiday 2017 Special Feature by Mark D. Rejhon 2020 Update: NVIDIA and ASUS now has a long-term road map to 1000 Hz displays by the 2030s! Contacts at NVIDIA and vendors has confirmed this article is scientifically accurate. Before I Explain Blur Busters Law for Display Motion...
