Man from Atlantis

Veteran

At first I was gonna post this in the Ada Lovelace thread, but it's locked.

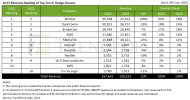

If accurate, then about 1/3 of each TPC is allocated to something besides the SMs. The PolyMorph Engine, perhaps? Together with the GPC-level rasterizers and ROPs, that’s a lot of silicon dedicated to 3D fixed-function hardware.

This should probably be in the architecture thread though.