Silent_Buddha

I'd argue this isn't an opinion, it's just plain wrong.

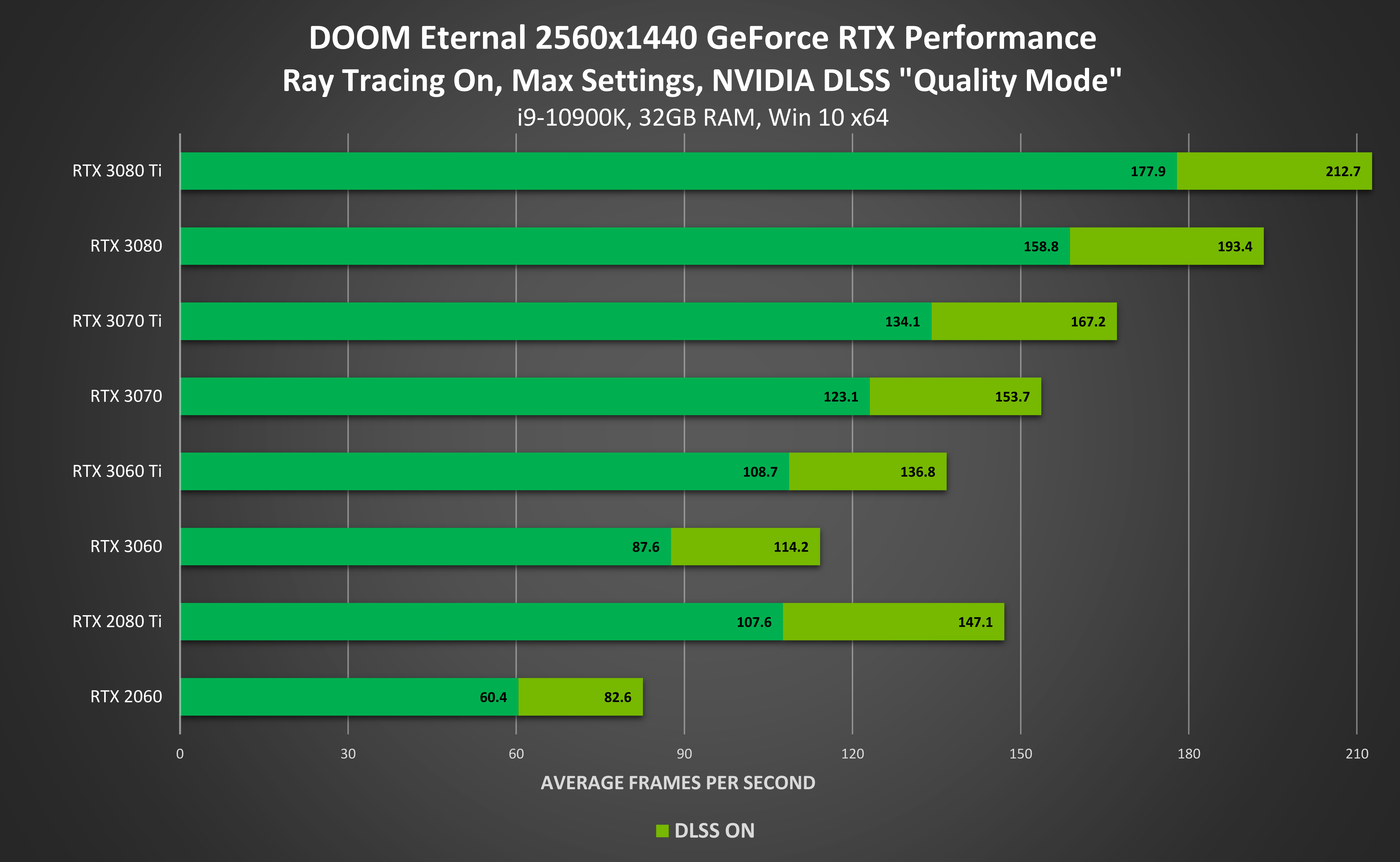

Not wrong for me. I wouldn't use the 2060S for RT other than to see it and then immediately disable it. Even with DLSS, the performance hit just isn't worth it in the vast majority of current "RT" enabled games. Metro: Exodus and maybe Control are the only two where I might consider it, but if I can't run the game at a locked 60 FPS at 3200x1800 (my current standard gaming resolution on a 55" display) with, at worst, the DLSS Quality setting, then it's just not something I would enable.

I prize fluid gameplay in a window at IQ settings acceptable to me over pushing the graphics settings so high that it either hurts my gameplay experience or forces me to play in a smaller window. Non-standard and pretty specific, yes. But I want to play games how I want to play them and not how someone else tells me I should play them.

Of course, it's a moot point at the moment since I'm not willing to pay out the wazoo for a 2060S.

I'm excited for the future of RT, but it's currently not quite there yet for me. It's similar to how I immediately disabled shadows in all games up until about 2 or 3 years ago, when I started leaving shadows on in some games where they weren't so distractingly "wrong." Having no shadows was also distractingly wrong, but turning them off freed up a lot of performance I could spend on other IQ settings that weren't so wonky to me.

Regards,

SB