
No DX12 Software is Suitable for Benchmarking *spawn*

- Thread starter trinibwoy


I didn't do anything WRT Forza myself, and with that out of the way: there's a rumor that the UWP DRM is running on that same, already hammered core, decrypting files on the fly for the streaming engine. Maybe that's a W10 exclusive? IIRC, DRM is handled quite differently on consoles, and maybe it does not burden single cores there as much (or is it even locked to one core exclusively?).

Well, XBO dedicates a core to the OS (or two, not sure) that isn't accessible to applications, so the process doesn't interfere with the game. I guess that's why the CPU affinity hack does the job for most users: it works around the poor task scheduling in the PC port.
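For anyone unfamiliar with the affinity hack: an affinity mask is a bitmask with one bit per logical CPU, and clearing bit 0 keeps the process off core 0 (the core the thread reports as overloaded). The sketch below only computes such a mask; the helper names are hypothetical. It uses the same bit-per-processor layout as the mask taken by the Win32 `SetProcessAffinityMask` function, though most users simply untick CPU 0 in Task Manager's "Set affinity" dialog.

```python
from __future__ import annotations

import os


def affinity_mask(n_cpus: int, excluded: set[int]) -> int:
    """Bitmask with bit i set if the process may run on logical CPU i."""
    mask = 0
    for cpu in range(n_cpus):
        if cpu not in excluded:
            mask |= 1 << cpu
    return mask


def cpus_from_mask(mask: int) -> set[int]:
    """Convert a bitmask back into the set of allowed CPU indices."""
    return {i for i in range(mask.bit_length()) if (mask >> i) & 1}


if __name__ == "__main__":
    n = os.cpu_count() or 1
    # Assumption: core 0 is the contended core, as reported in the thread.
    mask = affinity_mask(n, excluded={0})
    print(f"{n} logical CPUs; mask without core 0: {mask:#x}")
    print("allowed CPUs:", sorted(cpus_from_mask(mask)))
```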

DavidGraham

Veteran

A new patch has been released with the following line in the changelog:

http://www.dsogaming.com/news/forza...mises-to-fix-stuttering-releases-later-today/

- Fixed a performance issue on Windows 10 that caused stuttering with high or unlocked framerates on certain hardware configurations


Deleted member 13524

Guest

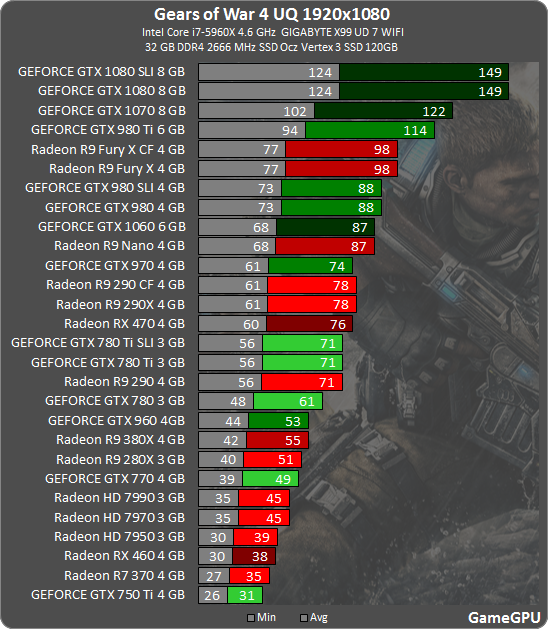

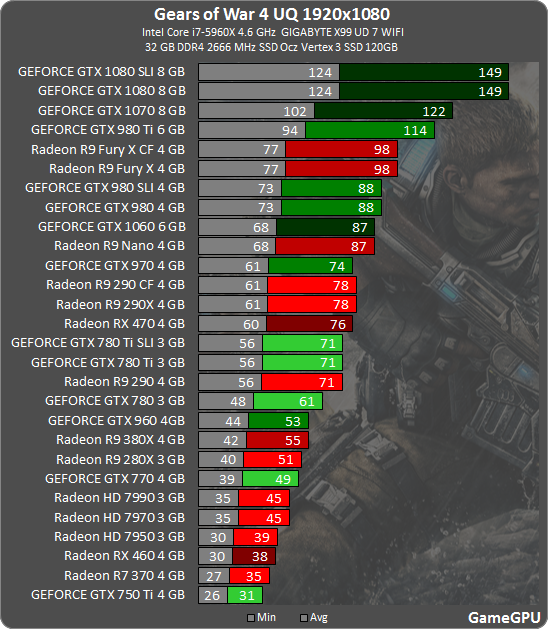

There's a Gears of War 4 performance comparison at wccftech and PCWorld, but they didn't use the latest AMD drivers with support for GoW4.

They both used the (currently) press-exclusive 373.02 drivers with official GoW4 support for Nvidia cards, but for AMD cards they used the older 16.9.2, which has since been replaced by 16.10.1 with official support for GoW4.

That said, we may get a small performance bump with the currently available drivers on AMD cards:

It seems the game runs pretty well, and PCWorld claims the memory management is top-notch, with 4GB cards not suffering from a lack of memory even at 4K:


One thing is clear, however: the game handles memory management superbly, presumably due to its use of Tiled Resources. No graphics card overshot its available dedicated memory, regardless of resolution or texture quality, and each made damn near full use of its RAM pool. For example, running the benchmark with Ultra graphics settings at 4K resolution ate 5.6GB of memory with the spacious 8GB GTX 1080, and just 3.52GB for the space-constrained 4GB Radeon Fury X, with no glaring loss of visual quality in the latter.
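To put PCWorld's numbers in proportion, here is a quick calculation of how much of each card's dedicated pool was in use (the helper name is just for illustration; the figures are the ones quoted above):

```python
def pool_utilization(used_gb: float, total_gb: float) -> float:
    """Fraction of a card's dedicated memory pool in use."""
    return used_gb / total_gb


# Figures quoted by PCWorld for Ultra settings at 4K.
for card, used, total in [("GTX 1080", 5.6, 8.0), ("Fury X", 3.52, 4.0)]:
    print(f"{card}: {used} / {total} GB = {pool_utilization(used, total):.0%}")
```

That works out to roughly 70% of the pool for the GTX 1080 and 88% for the Fury X.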

DavidGraham

Veteran

Gears of War 4 DX12 test (internal benchmark), with the latest drivers:

Nvidia GeForce Driver Release 372.90

AMD Radeon Crimson Edition 16.10.1

http://gamegpu.com/action-/-fps-/-tps/gears-of-war-4-test-gpu

http://www.gamersnexus.net/game-bench/2628-gears-of-war-4-gpu-benchmark-on-pc

It's interesting to note that the game has settings along the lines of Doom's "Nightmare" preset. Here it's called "Insane", and it raises the quality of DoF and reflections to a so-called "next gen level". So far most sites have steered away from testing at those settings, as they are incredibly taxing: according to NV, Insane reflections can cost you 73% of your frames, and Insane DoF can set you back 50%!!! There's some useful info at that link dissecting the game. The port also appears solid, with a huge number of tweakable settings and impressive new rendering techniques for shadows and lighting. The Async Compute toggle is forcibly switched off on Maxwell hardware, though its performance impact appears limited even on AMD and Pascal hardware.
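Reading NV's figures as a fractional drop in frame rate (an interpretation, since "cost you 73% of your frames" is informal wording), the arithmetic looks like this. The 60 fps baseline is hypothetical, and chaining the two costs assumes they are independent, which nobody here has measured.

```python
def fps_after_cost(base_fps: float, cost: float) -> float:
    """Frame rate left after a setting that removes `cost` (0..1) of the frames."""
    return base_fps * (1.0 - cost)


base = 60.0  # hypothetical starting frame rate, not a measured figure
print("Insane reflections:", fps_after_cost(base, 0.73))
print("Insane DoF:", fps_after_cost(base, 0.50))
# Assumption: the two costs compound multiplicatively (unverified).
print("Both:", fps_after_cost(fps_after_cost(base, 0.73), 0.50))
```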



Unfortunately this patch made things worse for me, not better.


DavidGraham

Veteran

It appears pcgameshardware has found the source of the problem: when running a UWP game at anything other than the native screen resolution, considerable latency is introduced, which manifests as stutters, frame drops, and high Core 0 CPU load as well! The site concluded that for proper 1080p testing you would need a native 1080p screen, and likewise for 1440p testing; you would need a new screen for each resolution! Also, if you activate DSR or VSR, the highest resolution available becomes your native resolution, and running at anything below that will reintroduce the issue!

http://www.pcgameshardware.de/Gears-of-War-4-Spiel-55621/Specials/Performance-Test-Review-1209651/

Interesting. I do run a native 1080p monitor with G-Sync, and running at 1080p I still get stutters. They either become less frequent the longer the game is running, or my eyes are just adjusting to ignore them. I have been crashing more frequently with the patch, which is actually the killer for me. I'll wait for yet another patch; the game runs perfectly fine on XBO for now.


DavidGraham

Veteran

The Ultra preset uses TAA. The game has a resolution scaler for downsampling as well.

Thanks.

I just read an interview where they say they are using Epic Games' latest temporal AA (which fits with what you say).

(Edit: OK, it looks like it is not using MSAA, even though MSAA is mentioned in my next sentence.)

UE4 documentation says: "Other techniques: UE4 currently supports MSAA only for a few editor 3d primitives (e.g. 3D Gizmo) and Temporal AA".

I wonder what level of supersampling it would amount to. Looking at Nvidia's site, I could not tell the difference between the Ultra and Low anti-aliasing settings, but that could be down to the static scene they used.

Just rechecked: with the 1080p comparison I could tell the difference, but only by looking very carefully at specific points such as a window or the occasional leaf, and even then the difference is incredibly small in each case.

http://images.nvidia.com/geforce-co...active-comparison-19x10-001-ultra-vs-low.html

Also, looking at Nvidia's own chart, the AA setting has no effect on performance: Low and Ultra are within 0.3 fps of each other at 1440p with a GTX 1080.

Cheers


Just for closure, here is Epic Games' Temporal Supersampling presentation from 2014, which is applicable to UE4 and also to Gears of War.

https://de45xmedrsdbp.cloudfront.net/Resources/files/TemporalAA_small-59732822.pdf
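For anyone who doesn't want to dig through the PDF, here is a minimal 1-D sketch of the general idea behind temporal AA: blend each new sample into an accumulated history with an exponential moving average, and clamp the history to the current frame's local neighborhood to limit ghosting. It is an illustration under simplifying assumptions (grayscale pixels, history already reprojected, no sub-pixel jitter or motion vectors), not Epic's shader; the function name and the `alpha` value are hypothetical.

```python
from __future__ import annotations


def taa_resolve(history: list[float], current: list[float], alpha: float = 0.1) -> list[float]:
    """One temporal AA step over a row of grayscale pixels.

    Assumes `history` has already been reprojected into the current frame.
    """
    out = []
    n = len(current)
    for i in range(n):
        # 3-pixel neighborhood of the current frame around pixel i.
        neighborhood = current[max(i - 1, 0):min(i + 2, n)]
        lo, hi = min(neighborhood), max(neighborhood)
        # Clamp stale history into the neighborhood's range to limit ghosting.
        h = min(max(history[i], lo), hi)
        # Exponential moving average: mostly history, a little of the new sample.
        out.append(alpha * current[i] + (1.0 - alpha) * h)
    return out
```

Without the clamp, a bright history pixel over a now-dark scene would fade out over many frames; with it, the history is pulled into the current frame's local range immediately.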

Cheers



Deleted member 2197

Guest

The Gears of War 4 performance comparison at PCGamesHardware has been updated to include the latest AMD driver (16.10.1). The Nvidia analysis was performed using the same 373.02; the latest optimized Gears of War driver is WHQL 373.06.

http://www.pcgameshardware.de/Gears-of-War-4-Spiel-55621/Specials/Performance-Test-Review-1209651/


I think one has to be nuts to play any game under UWP, or a big enough fan of the game to ignore the crap UWP causes in terms of native vs. non-native resolution, VSync vs. unlocked framerate, windowed, borderless, and exclusive fullscreen modes, etc.

Makes you wonder who actually developed and supports UWP at Microsoft.

Anyway, it makes benchmarking a nightmare, and not all sites will have valid data when a game is reviewed or benchmarked under UWP.

Cheers


Deleted member 13524

Guest

Computerbase.de did 1440p tests. Their 1080p and 4K results are pretty much equivalent to pcgameshardware's:

And it appears the performance on an FX 8370 is... spectacular?

Only the GTX 1080 is facing a CPU bottleneck at 1440p.


Deleted member 13524

Guest

DX12 is now out of beta in Deus Ex: Mankind Divided. DX12 mGPU is supported in beta form.

https://steamcommunity.com/app/337000/discussions/0/343788552537105000

One tidbit from the developer:


Nixxes said: We are using Explicit Linked Display Adapter (LDA) instead of Multi Display Adapter (MDA) for our Multi-GPU implementation.

We are not using Memory Pooling.

Alternate Frame Rendering (AFR) allows us to better balance the load and potentially create the highest performance gain when using 2 identical GPUs, which will be the case for most players.

Split Frame Rendering (SFR) is indeed interesting when using MDA, allowing you to better balance the load across 2 different GPUs.

However we would like to note that SFR and Memory Pooling are not actually limited to MDA, but can be implemented in all cases.

Memory Pooling in LDA? Was this public info? Would SFR benefit from it?
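The AFR/SFR trade-off Nixxes describes can be sketched as two scheduling rules. This is a simplified model, not Nixxes' implementation: the function names are hypothetical, and the per-GPU throughput weights are assumed inputs, whereas a real engine would have to measure or estimate them.

```python
from __future__ import annotations


def afr_assign(frame_index: int, n_gpus: int) -> int:
    """Alternate Frame Rendering: whole frames go to GPUs in round-robin order."""
    return frame_index % n_gpus


def sfr_split(height: int, throughputs: list[float]) -> list[tuple[int, int]]:
    """Split Frame Rendering: give each GPU a horizontal band of rows sized in
    proportion to its (assumed) relative throughput. Returns [start, end) ranges."""
    total = sum(throughputs)
    bands, start, acc = [], 0, 0.0
    for i, t in enumerate(throughputs):
        acc += t
        # The last band always ends at the bottom row, absorbing rounding error.
        end = height if i == len(throughputs) - 1 else round(height * acc / total)
        bands.append((start, end))
        start = end
    return bands
```

With two identical GPUs, AFR's round-robin already splits the work evenly. With mismatched GPUs, AFR still hands the slower card every other frame, while SFR can give it a smaller band: `sfr_split(1080, [2.0, 1.0])` assigns rows 0 to 720 to the faster GPU and 720 to 1080 to the slower one.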

Why would I be crazy to play the free version of the game on PC, by the only means that's possible? I bought the Xbox One game, and for free I can play it on a UWP Win10 PC. That seems great to me. You're stuck in Extreme Hyperbole mode.

Because every game so far from UWP has suffered from at least one of those issues I mentioned, and it can mean ruining one's enjoyment.

It will be interesting to compare Quantum Break sales on Steam with UWP, because they have already sold 34k in 6 days on Steam, and last I heard, sales on the UWP Windows Store were disastrous.

But yeah, if it's free, sure; that does not apply to most people buying PC games specifically on the UWP Windows Store page, though.

Cheers


Silent_Buddha

Legend


Is it the platform or the developer?

Non-UWP games have plenty of problems of their own. The recent Mafia 3 release, for example. Or Batman: Arkham Knight at release, if you want a rather extreme example. Assassin's Creed Unity didn't have UWP to blame either.

Some of it was obviously UWP, for something like Tomb Raider when UWP was just starting to be used for AAA games, or Quantum Break at launch, when they were releasing their first DX12 game. It's much better now, and many of the problems some people experience with the recent releases could also be because these are the first full titles by these developers on PC. It's entirely possible that their non-UWP releases would have had similar issues.

Heck, depending on your criteria (frame time consistency or FPS) and your hardware, the UWP version of Quantum Break runs better than the non-UWP version of Quantum Break.
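Since frame time consistency and average FPS can rank the same game differently, a small sketch may make the distinction concrete. The traces below are made up for illustration (they are not measurements of either Quantum Break version), and nearest-rank is just one of several common percentile conventions.

```python
from __future__ import annotations

import math


def average_fps(frame_times_ms: list[float]) -> float:
    """Frames delivered divided by total elapsed time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def percentile_frame_time(frame_times_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile of frame times (higher means worse stutter)."""
    ordered = sorted(frame_times_ms)
    rank = math.ceil(pct / 100.0 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Made-up traces for illustration only.
steady = [20.0] * 10          # every frame takes 20 ms
spiky = [14.0] * 9 + [60.0]   # mostly fast, one 60 ms hitch

for name, trace in [("steady", steady), ("spiky", spiky)]:
    print(f"{name}: {average_fps(trace):.1f} fps avg, "
          f"99th percentile frame time {percentile_frame_time(trace, 99):.0f} ms")
```

The spiky trace wins on average FPS but loses badly on the 99th percentile frame time, which is exactly the kind of split where "runs better" depends on your criteria.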

Regards,

SB
