So I ran several DirectStorage benchmarks: decompression on the CPU and on the GPU, with the data on a SATA SSD, a SATA HDD, and NVMe drives hooked up either directly to the CPU or through the B550 chipset.

Ryzen 9 5950X, Curve Optimizer -30 on all cores; 4x8GB DDR4-3600 CL14

Palit RTX 4090 GameRock OC @ 2745 MHz core / 24000 MHz memory, PCIe 4.0 x16

Windows 11 Home 22H2, NVIDIA driver 528.02

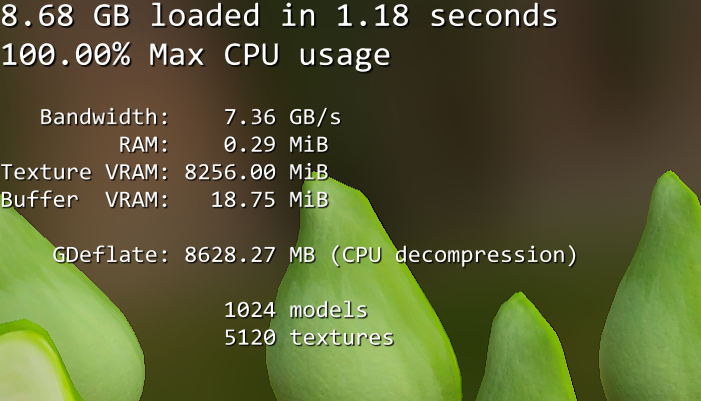

DS @CPU: Samsung 970 PRO 512GB, PCIe 3.0 x4, attached directly to the CPU

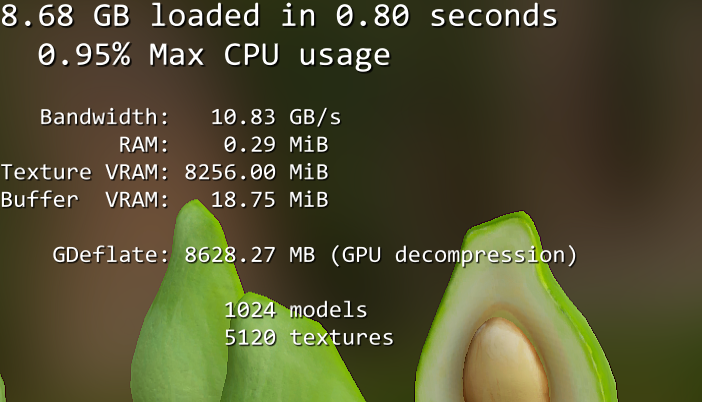

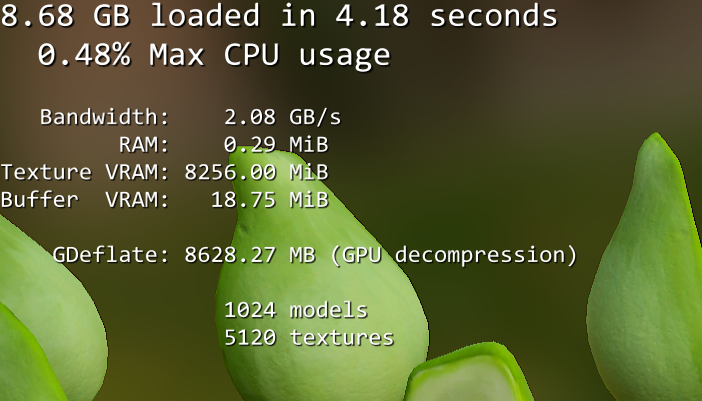

DS @GPU: Samsung 970 PRO 512GB, PCIe 3.0 x4, attached directly to the CPU

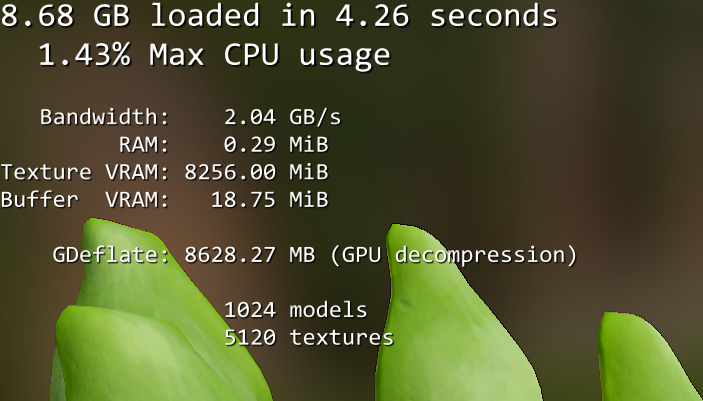

DS @GPU: HikVision 2TB, PCIe 3.0 x4, through the B550 chipset

DS @GPU: Samsung 850 EVO 256GB, SATA 3.0, through the B550 chipset

DS @GPU: Western Digital Blue 4TB, SATA 3.0, through the B550 chipset
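To put the scores in context, here is a rough calculation of the theoretical payload bandwidth of each link used above: spec transfer rate times line-encoding efficiency, nothing more. It's only a sketch; protocol overhead means real drives land below these figures, and `link_payload_mb_s` is a hypothetical helper, not part of any SDK. Also keep in mind that everything behind the B550 chipset shares the chipset's own uplink to the CPU, which is itself PCIe 3.0 x4.

```python
# Theoretical payload bandwidth of the links used in these runs.
# Only line-encoding overhead is removed; AHCI/NVMe framing and PCIe packet
# headers are ignored, so real-world throughput is lower.


def link_payload_mb_s(gt_per_s: float, lanes: int, enc_payload: int, enc_total: int) -> float:
    """Return payload bandwidth in MB/s (10^6 bytes/s) for a serial link.

    gt_per_s    -- transfer rate per lane, in gigatransfers per second
    lanes       -- number of lanes
    enc_payload -- payload bits per encoded block (8 for 8b/10b, 128 for 128b/130b)
    enc_total   -- total bits per encoded block (10 for 8b/10b, 130 for 128b/130b)
    """
    bits_per_s = gt_per_s * 1e9 * lanes * enc_payload / enc_total
    return bits_per_s / 8 / 1e6


LINKS = {
    "SATA 3.0 (6 Gb/s, 8b/10b)": link_payload_mb_s(6.0, 1, 8, 10),
    "PCIe 3.0 x4 (8 GT/s, 128b/130b)": link_payload_mb_s(8.0, 4, 128, 130),
    "PCIe 4.0 x16 (16 GT/s, 128b/130b)": link_payload_mb_s(16.0, 16, 128, 130),
}

if __name__ == "__main__":
    for name, mb_s in LINKS.items():
        print(f"{name}: {mb_s:,.0f} MB/s")
```

The SATA line works out to 600 MB/s and PCIe 3.0 x4 to just under 4 GB/s, which is why the SATA and NVMe results should be read against very different ceilings.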

The HDD score doesn't make much sense: it's way too fast for a spinning disk. I wonder if it's hitting a cache somewhere?
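One way to make "way too fast" concrete is to compare the score against the slowest stage on the drive's path. The sketch below does that with hypothetical helpers (not part of the DirectStorage SDK or its benchmark). The 250 MB/s HDD figure is an assumed placeholder, not a WD Blue spec, and whether the benchmark reports compressed or decompressed bytes per second is also an assumption; if it reports decompressed bytes, the ceiling has to be scaled by the compression ratio.

```python
# Sanity check for a storage benchmark result (hypothetical helpers).


def path_ceiling_mb_s(device_mb_s: float, link_mb_s: float, compression_ratio: float = 1.0) -> float:
    """Upper bound on reported throughput for one device.

    The slower of the device and its link bounds the bytes read from disk.
    If the benchmark reports *decompressed* bytes per second, those can
    legitimately exceed the read rate by the compression ratio, so scale by it.
    """
    return min(device_mb_s, link_mb_s) * compression_ratio


def looks_cached(measured_mb_s: float, ceiling_mb_s: float, slack: float = 0.10) -> bool:
    """True if the result beats the physical ceiling by more than `slack`
    (10% by default), which suggests reads were served from a cache."""
    return measured_mb_s > ceiling_mb_s * (1.0 + slack)


if __name__ == "__main__":
    # Placeholder numbers for illustration only: 250 MB/s is an assumed
    # sequential rate for a 3.5" HDD, 600 MB/s is the SATA 3.0 payload rate.
    hdd_ceiling = path_ceiling_mb_s(device_mb_s=250.0, link_mb_s=600.0)
    print(looks_cached(measured_mb_s=2000.0, ceiling_mb_s=hdd_ceiling))  # True
    print(looks_cached(measured_mb_s=240.0, ceiling_mb_s=hdd_ceiling))   # False
```

Plugging in the actual HDD score and the test asset's compression ratio would show whether the drive alone could plausibly explain it.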

A SATA SSD run with CPU decompression might be interesting, although I suspect your CPU will already saturate the SATA link.
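The "CPU will max out SATA" intuition can be written as a simple bottleneck model: compressed data arrives at most at the link rate, expands by the compression ratio, and the decompressor caps the output. This is a back-of-the-envelope sketch; the compression ratio and CPU decompression rate below are hypothetical values, not measurements from these runs.

```python
# Bottleneck model for CPU decompression fed by a SATA SSD.
# All inputs are assumptions to be replaced with measured values.


def output_mb_s(link_mb_s: float, compression_ratio: float, decompress_mb_s: float) -> float:
    """Decompressed bytes per second delivered to the application.

    Compressed data arrives at most at `link_mb_s`, which expands to
    `link_mb_s * compression_ratio` of output; the decompressor caps output
    at `decompress_mb_s`. Whichever is smaller wins.
    """
    return min(link_mb_s * compression_ratio, decompress_mb_s)


def bottleneck(link_mb_s: float, compression_ratio: float, decompress_mb_s: float) -> str:
    """Name the limiting stage: 'link' if storage saturates first, else 'decompressor'."""
    return "link" if link_mb_s * compression_ratio <= decompress_mb_s else "decompressor"


if __name__ == "__main__":
    sata_mb_s = 600.0  # SATA 3.0 payload ceiling; real SSDs read somewhat less
    ratio = 2.0        # hypothetical compression ratio of the test asset
    cpu_mb_s = 5000.0  # hypothetical multi-core CPU decompression rate
    print(bottleneck(sata_mb_s, ratio, cpu_mb_s))                 # link
    print(f"{output_mb_s(sata_mb_s, ratio, cpu_mb_s):.0f} MB/s")  # 1200 MB/s
```

If the CPU's decompression rate is well above the SATA rate times the compression ratio, the SATA SSD result with CPU decompression should end up close to its GPU counterpart, since the link is the limit in both cases.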