Microsoft has been one of the biggest beneficiaries of the excitement around AI.

In any event, the 4/2/24 Vergecast has some more details about what MS might be doing with AI. First, apparently they tried Bing branding and most people assumed Bing was just a rebranded ChatGPT. But in fact MS has hired some people from lesser-known AI companies, including Inflection, for their in-house AI unit. Probably hedging their bets.

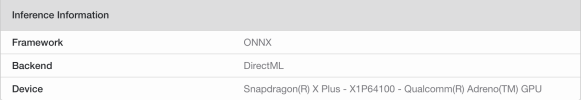

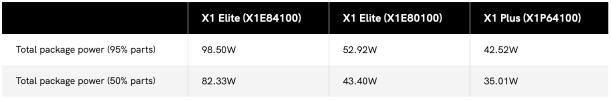

But they're going to have a big Surface event in May, where they expect to unveil devices with OLED displays. It will also be the unveiling of the new Qualcomm silicon, probably developed out of Qualcomm's acquisition of Nuvia, a startup founded by former Apple engineers.

Apparently MS has expressed confidence to The Verge's reporters that this new Qualcomm chip will not only get them near Apple Silicon, but that this first chip will outperform the M3.

It will also have some kind of AI chip, which isn't new, but they will tout it for Windows AI PCs, which have to have top Intel chips plus this other AI processor, which would do things like noise cancellation.

The new Surface and AI PCs will have a Copilot button, and the "killer" feature will be something that lets you navigate the timeline of your usage. For instance, say you were researching a trip to Europe a week or two ago. You'd tell the AI to take you back to where you were, and it would reopen all the tabs you had open while you were doing that trip research.

Makes sense to strike while the AI iron is hot. Among the tech giants, MS is probably the one benefitting the most from AI. Well, now that Nvidia's stock has risen so fast that it's worth over $2 trillion, maybe MS has benefitted the second most.

If MS could announce by the end of the year that they have x million users regularly using Copilot and that they've shipped x million AI PCs, it would push MSFT even higher.

At least until the AI bubble bursts.
