MS: "Xbox 360 More Powerful than PS3"

- Thread starter Alpha_Spartan

He describes it above.

He declares an MSAA buffer, renders to it in his new format, and does the final downsample manually. I would guess he reserves enough free bits at the top of the luminance LSB to deal with carry out of the bilinear filter lerps. He then resolves the carry in the shader, does his tone mapping, and writes the final result out in RGB.
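To make the carry remark concrete, here is a minimal sketch. It assumes the luminance is a 16-bit value split across two 8-bit channels, which is a guess and not a layout confirmed in this thread. Because a lerp is linear, filtering the two bytes independently and recombining them afterwards gives the same result as filtering the full 16-bit value, provided the filter keeps its fractional bits. The names `split16` and `filter_split` are made up for illustration.

```python
def split16(value):
    """Split a 16-bit integer into (high byte, low byte)."""
    return value >> 8, value & 0xFF

def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t

def filter_split(v0, v1, t):
    """Filter each byte channel on its own (as a fixed-function filter
    would), then recombine the two results (as the shader would)."""
    hi0, lo0 = split16(v0)
    hi1, lo1 = split16(v1)
    hi = lerp(hi0, hi1, t)   # may be fractional, e.g. 18.5
    lo = lerp(lo0, lo1, t)
    return hi * 256.0 + lo   # the fractional part of hi carries into lo's range

# Two neighbouring samples whose high bytes differ (0x12 vs 0x13).
a, b, t = 0x12F0, 0x1310, 0.5
direct = lerp(a, b, t)
recombined = filter_split(a, b, t)
```

If the filtered high byte were rounded back to an integer before the shader saw it, the recombined value would be off by up to half a high-byte step, which is presumably why spare bits would be needed.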

Jawed

Legend

Ah, so his comment:

http://www.beyond3d.com/forum/showpost.php?p=648691&postcount=204

was referring to his Luv technique, too, not just FP16-non-blendable rendering.

Sigh, shoulda worked that out. Too stuck in the idea that MSAA means AA-resolve is performed by ROPs.

Jawed

Crazyace said: Brings to mind the Jaguar's 16-bit CRY mode for graphics. It gave '24-bit' colour in 16 bits, but Gouraud shading was a bit strange at times.

I get reminded of a technique that was popular awhile back for software renderers called S-buffering. Instead of using a depth value for each individual pixel, each fragment would be split along each scanline, and handled as a whole. It greatly sped up calculations in software (compared to a Z-buffer in software). It never took off when hardware accelerators became available, I think mostly because s-buffering is more of a complex logic test, where z-buffering is simple and can be parallelized in hardware, making performance more stable and predictable.
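For readers who haven't seen the idea, here is a rough sketch of span-based visibility on a single scanline. It makes a big simplifying assumption: every span has one constant depth, so spans never intersect partway through. Real S-buffers interpolate depth along the span and have to handle crossings. The function names and the tuple layout `(x0, x1, depth, label)` are invented for this example.

```python
def subtract(intervals, cut):
    """Remove the half-open interval `cut` from a list of half-open intervals."""
    c0, c1 = cut
    out = []
    for a, b in intervals:
        if b <= c0 or a >= c1:       # no overlap, keep as is
            out.append((a, b))
            continue
        if a < c0:                   # piece left of the cut survives
            out.append((a, c0))
        if c1 < b:                   # piece right of the cut survives
            out.append((c1, b))
    return out

def insert_span(spans, new):
    """Insert new = (x0, x1, depth, label) into one scanline's span list.
    Smaller depth is nearer. Returns a sorted, non-overlapping list."""
    x0, x1, z, label = new
    visible = [(x0, x1)]             # parts of the new span not yet hidden
    result = []
    for a, b, za, la in spans:
        o0, o1 = max(a, x0), min(b, x1)
        if o0 >= o1:                 # spans do not overlap
            result.append((a, b, za, la))
        elif z < za:                 # new span is nearer: clip the old one
            if a < o0:
                result.append((a, o0, za, la))
            if o1 < b:
                result.append((o1, b, za, la))
        else:                        # old span is nearer: hide that part of new
            result.append((a, b, za, la))
            visible = subtract(visible, (o0, o1))
    result.extend((a, b, z, label) for a, b in visible)
    return sorted(result)

line = insert_span([], (0, 10, 5.0, "wall"))
line = insert_span(line, (3, 6, 2.0, "crate"))
line = insert_span(line, (5, 12, 3.0, "door"))
```

Each insert is a chain of comparisons and list edits rather than one fixed per-pixel test, which fits the point about the logic being harder to parallelize in hardware.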

Still, this has gotten me to thinking about how one might be able to conceive of a pipeline (this includes a different graphics card design) geared towards a better colorspace for the actual work pre-display. Not sure if it will actually turn up anything interesting, but it would be a nifty research project nonetheless.

ERP said: He describes it above. He declares an MSAA buffer, renders to it in his new format, and does the final downsample manually. I would guess he reserves enough free bits at the top of the luminance LSB to deal with carry out of the bilinear filter lerps. He then resolves the carry in the shader, does his tone mapping, and writes the final result out in RGB.

Bingo. btw I believe this is just the beginning, there's a lot more work to do.

At the moment I'm not exploiting the properties of this new color space at all, so I think there's room for some neat stuff.

Time will tell..

just to add something along the lines of what Marco and other smart blokes have been saying in this thread re alternative color space approaches (and because other guys in this thread seem to be thinking of that original approach as some 'hack' or something).

RGB space is not the One True(tm) color sampling space *waits for the 'aahs!', 'oohhs!' and 'blasphemy!' to fade out* and that is even without taking into account the subjectivity of the human visual system. even purely mathematically (i.e. from a signal sampling perspective) the "imperfections" of RGB space have been known for ages - they've been proven to come essentially as a result of wavelength undersampling (see Hall83). that is, in the case of RGB we get three sample points in the spectrum - red, green and blue wavelengths - which, lo and behold, do not quite adequately represent what we ideally want to do with it (but the issues would be the same with any model that tries to evaluate spectral intensity as a function of a scarce number of wavelengths - see Watt&Watt's classic text, chapter '2.3.5 Wavelength dependencies in the Phong model'.)

bottom line being, RGB HDR is just as much of a 'hack' as your next favourite color space (and often even worse) and it's all just __reasonable-looking approximations__. so please, drop the agendas, relax, and let the game devs worry about what is correct, what looks good and what is neither.

ps: good job Marco and Deano - so when do we get to kick the shit out of your title? : )

Jaws said: I guess tiling the backbuffer to the SPUs' LS would be feasible with the reduced bandwidth of INT8? Not to mention that SPUs should rip through that datatype!

AFAIK SPUs only support 32 bit float data, I recall DeanoC or Faf posting about this...

Laa-Yosh said: AFAIK SPUs only support 32 bit float data, I recall DeanoC or Faf posting about this...

Yep, but one could work on the int8 format as it is, or convert it to a full precision floating point representation (in Luv color space) with a little snippet of code.
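As an illustration of what such a snippet might look like, here is a sketch of a round trip between linear RGB and four 8-bit channels. The thread does not give the actual bit layout, so everything specific here is an assumption: u' and v' (CIE 1976) in one byte each, log2 luminance in 16 bits across the other two bytes, the `UV_MAX` and `LOG_MIN`/`LOG_MAX` ranges, and Rec.709/sRGB primaries for the RGB to XYZ matrix. It also assumes a non-black input; black pixels would need special-casing.

```python
import math

UV_MAX = 0.62                      # assumed upper bound for u' and v'
LOG_MIN, LOG_MAX = -16.0, 16.0     # assumed log2 luminance range

def rgb_to_xyz(r, g, b):
    """Linear RGB (Rec.709/sRGB primaries, D65) to CIE XYZ."""
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    return x, y, z

def xyz_to_rgb(x, y, z):
    """CIE XYZ back to linear RGB (inverse of the matrix above)."""
    r = 3.2406 * x - 1.5372 * y - 0.4986 * z
    g = -0.9689 * x + 1.8758 * y + 0.0415 * z
    b = 0.0557 * x - 0.2040 * y + 1.0570 * z
    return r, g, b

def encode(r, g, b):
    """Linear RGB -> four bytes (u8, v8, L_hi, L_lo). Assumes luminance > 0."""
    x, y, z = rgb_to_xyz(r, g, b)
    d = x + 15.0 * y + 3.0 * z
    u, v = 4.0 * x / d, 9.0 * y / d            # CIE 1976 u', v'
    log_y = min(max(math.log2(y), LOG_MIN), LOG_MAX)
    l16 = round((log_y - LOG_MIN) / (LOG_MAX - LOG_MIN) * 65535)
    u8 = round(min(u / UV_MAX, 1.0) * 255)
    v8 = round(min(v / UV_MAX, 1.0) * 255)
    return u8, v8, l16 >> 8, l16 & 0xFF

def decode(u8, v8, l_hi, l_lo):
    """Four bytes -> full precision linear RGB floats."""
    u = u8 / 255.0 * UV_MAX
    v = v8 / 255.0 * UV_MAX
    l16 = (l_hi << 8) | l_lo
    y = 2.0 ** (LOG_MIN + l16 / 65535.0 * (LOG_MAX - LOG_MIN))
    x = y * 9.0 * u / (4.0 * v)                # invert the u', v' definitions
    z = y * (12.0 - 3.0 * u - 20.0 * v) / (4.0 * v)
    return xyz_to_rgb(x, y, z)

packed = encode(2.5, 1.0, 0.25)    # an HDR colour with red above 1.0
restored = decode(*packed)
```

The round trip is lossy. In this particular sketch the 8-bit chroma quantization dominates the error, while the 16-bit log luminance is comparatively fine-grained.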

Laa-Yosh said: AFAIK SPUs only support 32 bit float data, I recall DeanoC or Faf posting about this...

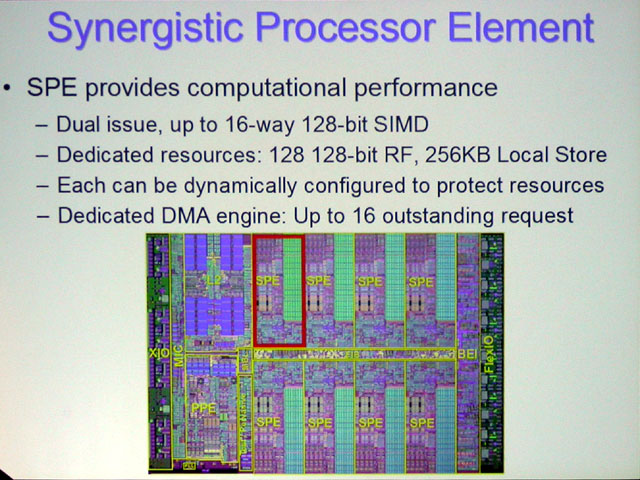

Each SPU can work on 16-way 8bit integers...

Jaws said: Each SPU can work on 16-way 8bit integers...

Looking at the ISA, the SPU can natively add/subtract 16/32-bit integers and multiply 16-bit ints. Everything else needs to be broken up into multiple operations. That ain't that important though, I think the big problem is that divide is missing...

Jawed said: What would you do with framebuffer data in Cell that RSX wouldn't do far more effectively?

Jawed

I would've thought post-processing effects, generally things that wouldn't need texture lookups...

Npl said: Looking at the ISA, the SPU can natively add/subtract 16/32-bit integers and multiply 16-bit ints. Everything else needs to be broken up into multiple operations. That ain't that important though, I think the big problem is that divide is missing...

Thanks, this reminds me to finish reading those ISA docs!

heliosphere

Newcomer

Cyander said: Still, I agree with them, the RGB colorspace is the WORST for lighting math, as RGB has no direct connection to a light source. We have made a lot of math to approximate how a light would work in the RGB space, as RGB is faster to display on a computer, even though something like HSV or YUV is a better choice when you are attempting to map something to reality. As long as the calculations themselves are done with reasonable accuracy by their shaders, does it matter what the storage format is in the framebuffer?

RGB isn't a perfect colourspace but it's actually not a bad choice for rendering. The most 'natural' colourspace for a framebuffer (assuming you want to accumulate contributions from multiple lights over multiple passes) is some number of channels, each representing the number of photons accumulated within some wavelength band. FP16 RGB can be treated as a rough approximation of this for three wavelength bands. Some offline renderers are now moving to using larger numbers of channels and integrating at the end against curves approximating the response of the cones in the human eye.

Some other colour spaces (YUV, LuV) are good for efficiently storing a representation of a final image in a format that preserves information important to the human visual system but they aren't as suited to accumulating lighting contributions because they have no simple correlation to anything physical like 'number of photons accumulated'. If you think of a framebuffer as like the CCD in a digital camera and each pixel as accumulating photons in a particular band you can see why FP16 RGB makes decent sense. HSV is a pretty poor choice for a framebuffer - it was designed as a convenient representation for humans to specify a colour, it's a pretty terrible representation for a simulation of the physics of light. YUV and LuV separate luminance from colour information which makes for efficient storage of a final image because it makes better use of the available precision for what is important when viewing but as has been pointed out elsewhere they don't blend correctly. If you want to correctly accumulate lighting over multiple passes they make life difficult. With programmable blending you could compensate for the errors by converting them back into another colour space but you would still be losing precision. It's not obvious to me how important that would be to the final image but it could lead to visual errors.
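A toy example of the blending problem, assuming luminance is stored as a log2 value (an assumption for illustration; the thread does not spell out the encoding). Adding two light contributions in linear space gives their sum, while naively adding the encoded values and decoding gives their product. The function names are hypothetical.

```python
import math

def add_linear(a, b):
    """Additive blend of two linear light contributions."""
    return a + b

def add_log_encoded(a, b):
    """Naively add the log2-encoded values, then decode the result."""
    return 2.0 ** (math.log2(a) + math.log2(b))

light_a, light_b = 4.0, 8.0
correct = add_linear(light_a, light_b)       # 12.0, the physical sum
naive = add_log_encoded(light_a, light_b)    # 32.0, the product instead
```

So a fixed-function additive blend on the encoded buffer would not accumulate light correctly. You would have to decode, add, and re-encode, which is where the programmable blending and the precision loss mentioned above come in.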

The technique nAo describes is a clever one and is useful for current hardware with its limited FP support (and / or lack of memory bandwidth) but in terms of what colour space is best / most natural for lighting it's not better than FP16 RGB. Maybe one day we'll have the bandwidth to have 16 bands across the visual spectrum each with 32 bit precision...

heliosphere said: but in terms of what colour space is best / most natural for lighting it's not better than FP16 RGB.

Lighting is performed neither in FP16 nor nAo's color space - it's done in shader with normal FP32. The final results are downconverted with a loss of precision in BOTH cases - it just happens to be that the FP16 downsample is perceptually 'more' lossy.

So blending issues aside - nAo's choice of colorspace would give better results for lighting.

One could argue that blending should not even be mentioned in the same breath as physical correctness given that it's hardly any kind of physical phenomenon - but unfortunately it's far too important a tool in CG for us to live without it.

heliosphere

Newcomer

Fafalada said: Lighting is performed neither in FP16 nor nAo's color space - it's done in shader with normal FP32. The final results are downconverted with a loss of precision in BOTH cases - it just happens to be that the FP16 downsample is perceptually 'more' lossy. So blending issues aside - nAo's choice of colorspace would give better results for lighting. One could argue that blending should not even be mentioned in the same breath as physical correctness given that it's hardly any kind of physical phenomenon - but unfortunately it's far too important a tool in CG for us to live without it.

As soon as you want to accumulate lighting over multiple passes additive blending is very important (and also physically correct as long as you are working in a linear RGB space). If you can do all your lighting in a single pass then blending is less important but one-pass-per-light is quite a common technique these days and then you want your frame buffer to do the right thing when you do an additive blend. Deferred renderers that do a pass per light also want a linear RGB frame buffer for correct lighting. Even for engines that aren't doing a pass per light it's useful to be able to accumulate lighting across more than one pass in many situations.

heliosphere said: If you can do all your lighting in a single pass then blending is less important but one-pass-per-light is quite a common technique these days and then you want your frame buffer to do the right thing when you do an additive blend.

Graphics trends change - we do what works best with target platforms, not what is considered "common".

From where I'm standing, PS3 is the diametric opposite of PS2 - while on PS2 FB accumulation was our bread and butter, on PS3 you want to avoid it at all costs - regardless of what FB format you use.

On 360 excessive accumulation is a bad idea also - not performance-wise but because you're stuck in FP10.

And even if you look cross-platform - the biggest next-gen middleware supplier also discourages using more than 2 shadowcasting lights per object.

Don't get me wrong btw, I'd still love to have blending that 'just works' with a nice compact HDR representation. But I know there's some things I'd want to avoid using it for, regardless.

heliosphere

Newcomer

Fafalada said: Graphics trends change - we do what works best with target platforms, not what is considered "common". From where I'm standing, PS3 is the diametric opposite of PS2 - while on PS2 FB accumulation was our bread and butter, on PS3 you want to avoid it at all costs - regardless of what FB format you use. On 360 excessive accumulation is a bad idea also - not performance-wise but because you're stuck in FP10.

I wasn't disputing that this is a useful technique for next-gen platforms (when do we get to start calling them current-gen btw?) - it's a clever method for optimizing for the limited framebuffer bandwidth and / or blending capabilities available. What I was taking issue with was the idea that FP16 RGB is "the WORST for lighting math" - if you have the bandwidth and the blending support it is a good choice for a framebuffer because it allows you to accumulate lighting over multiple passes correctly (at least as correctly as is possible without moving to more spectral bands). I think FP16 RGB will be the framebuffer format of choice on the PC once DX10 hardware arrives because it makes it a lot easier to "do the right thing" and keep your lighting in a linear space that behaves properly under additive blends, where other alternatives require you to move back and forth from one colour space to another (which is not straightforward without fully programmable blending) if you want correct behaviour when accumulating lighting across multiple passes.

nAo (and others) : Has your work on PS2 and its lack of hardware features had a positive contribution on developing alternative software solutions? The need to solve in 'software' what PS2 lacked in hardware has thrown up a lot of research, including a lot of alternative data models for colourspaces. Or is this research into different data models for GPUs ongoing research across all platforms? Presumably not, as the IHVs have tended to keep developing standard 'symmetric' data formats.

I'm curious how much non-standard colour modelling is going to be appearing on PCs and consoles and who's going to be pushing the envelope.

Shifty Geezer said: nAo (and others) : Has your work on PS2 and its lack of hardware features had a positive contribution on developing alternative software solutions?

No doubts about that, it helped and still helps..

Shifty Geezer said: The need to solve in 'software' what PS2 lacked in hardware has thrown up a lot of research, including a lot of alternative data models for colourspaces.

To be fair the answer in this case is negative, when I was working on PS2 I wasn't caring about color spaces at all

Shifty Geezer said: Or is this research into different data models for GPUs ongoing research across all platforms? Presumably not, as the IHVs have tended to keep developing standard 'symmetric' data formats.

I don't know the answer here..

My 'research' (real scientific research is another thing, I'm an amateur) started because we wanted to achieve some goals and I knew we had to find better solutions to some problems.

Doing high quality HDR rendering using a 32-bit buffer is nice but it's only one small step, that's why I often say that limits and constraints can be a good thing, because they force you (if you care enough

Shifty Geezer said: I'm curious how much non-standard colour modelling is going to be appearing on PCs and consoles and who's going to be pushing the envelope.

I really don't know, but as long as we need more and more frame buffer bandwidth I believe that using a different color space could be useful even some years from now.

I wouldn't mind having a TBDR to render everything at full precision without worrying about external memory bandwidth though
