version said:if compute 4 vector at same time, then dotproduct is 1 cycle, what is your problem?

Err, yeah that was the point, IF the dot product is not a 1 cycle operation, then it's a total waste.

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

version said:if compute 4 vector at same time, then dotproduct is 1 cycle, what is your problem?

MfA said:Version means that if you work on 4 dot products at a time 1 dot product will always effectively take 1 cycle ... this is a given, because you just need the multiply-adds. It's just a bit of a pain having to do so.

DeanoC said:Fafalada said:Well - having to spend the same amount of instructions&time on (rotational)matrix*vector transform as a 2-vector dotproduct is what greatly upsets software ppl.

Absolutely, no dot-product instruction sucks. Its even more relevant for non-graphics ops, where is often impossible to do more than 1 dot-product at a time (for example AI angle or distance calcs etc). So effectively you divide you theoritical flops by 3 or 4....

Grrr.... Just after I'd got used to being able to issue a dot product every cycle.

nAo said:128 MB gives just 12.8 GB/s for the GPU. Is it enough for a eDram-less GPU? I don't think solondon-boy said:How about 256MB for the BE and either 128 or 256 for the GPU?

DeanoC said:VMX doesn't have a single instruction dot-product, it just has a horizontal add but then VMX itself is a bit old and crusty. It has been significantly improved in some CPUs...Gubbi said:...snip...

Towards a 4 Teraflop microprocessor

I'm a microprocessor designer, computer architect, and manager with

40 years experience who has been developing for the last 3 years a

single device vector uni-microprocessor initially targeting 4

TERA-FLOPS (DP). It is not targeted at supercomputer end; it is

targeted for desktop. It's a vector co-processor that would be

inserted into a workstation or PC and is intended to crunch numbers for

applications that currently run for a fairly long time.

What I've done is to look at vertical integration to see how it could

be leveraged for a computation intensive application. Obviously one

could place a cluster of micros together, but there still remain many

problems in getting clusters to evenly distribute their workload as

well as being difficult to code for. "Massively parallel machines

can be dramatically faster and tend to possess much greater memory than

vector machines, but they tax the programmer, who must figure out how

to distribute the workload evenly among the many processors."

(National Center for Supercomputing Applications)

My thinking on making use of vertical integration was to instead define

a superscalar vector processor. The instructions have a general format

of <opcode>, <source reg3>, <source reg2>, <source reg1>, <destination

reg 0> where the opcode specifies two operations: (s1 op1 s2) op2 s3

--> d0. The arch splits into 2 operand spaces: one for scalars and

one for vectors. The scalars are processed with their own register

file and scalar instructions and are intended to handle address

generation and housekeeping such as loop counts, etc. Scalars are

currently restricted to integer only. Vectors are (for initial model)

128 fields of 64-bit data and are processed with their own register

file and vector instructions and are intended to do heavy number

crunching. The vector unit is sliced and vertically stacked with 8Kb

data bus(es) connecting slices. Level 0 vector operand cache is

distributed over slices as is the vector register file. Vector

instructions are primarily SIMD on the fields, but there are some VLIW

MIMD instructions as well (patent pending on method). The Level 1

cache is unified on different die and interfaces with the vertical data

bus. My estimate for in device cache size is a minimum of 2 GB.

A matrix multiply requires 0.01 * N**3 instructions while up to 128

sorts can be done in parallel.

The ISA is mostly complete and I have notes that I need to sift through

describing a unique method of handling virtual memory addressing, cache

handling, etc. One patent application has been published: Pub. No.:

US 2002/0144091 A1; Pub. Date: Oct. 3, 2002. This IP allows efficient

procedure activations without saving/restoring a lot of register data.

Currently I am mining this ISA for patents. I really need to find a

couple more people who feel like working on this. At the barest

minimum:

A compiler/debugger/applications person, with good experience in

technical computing, to assess the user-level ISA as a compiler target

and to provide input on applications. This may take 2+ persons or one

persone who's been doing FORTRAN compilers and scientific libraries

for 20+ years on vector machines and knows all of the pitfalls from

firsthand knowledge.

An OS person to help spec privileged operations, virtual MMU, cache

management, and support for host processor to vector processor

interactions. This has to be the kind of OS whos is used to dealing

with not yet completely specified ISAs and new hardware, and who has

some reasonable hardware knowledge.

Between them they would have to design the host software stack which

means they would need to know enough about Linux/Windows or Unix

(depending upon host environment) to do that.

A hardware systems designer familiar with high speed interfaces able

to make sure that it is possible to build plausible boards with the

proposed product.

Those with an initial interest who wish to learn more and who believe

thay meet the qualifications are invited to respond. Being financially

independent would also be a plus. Goal of effort is twofold: generate

IP content and strive to refine the architecture with an intent to

obtain funding for a startup.

Interested and qualified parties will be asked to sign an NDA for

further detailed information. Bringing an architecture to market is

extremely difficult and costly. Serious inquiries only please.

Larry Widigen

A good system interface for your processor could be the HyperTransport

HTX Slot. It will be used for low latency InfiniBand cards. Iwill will

release the DK8-HTXâ„¢ motherboard with this slot.

http://www.hypertransport.org/tech/tech_specs_conn.cfm

http://www.hypertransport.org/consortium/cons_pressrelease.cfm?Record...

http://www.pathscale.com/pr_110904.html

Look at the IBM's "Cell Processor", it will be a serious competitor for

your processor:

http://www.blachford.info/computer/Cells/Cell0.html

Patents:

US 2002/0156993 - Computer architecture and software cells for broadband

networks

US 6,826,662 - System and method for data synchronization for a computer

architecture for broadband networks

US 2002/0156993 - Processing modules for computer architecture for

broadband networks

US 6,809,734 - Resource dedication system and method for a computer

architecture for broadband networks

US 6,526,491 - Memory protection system and method for computer

architecture for broadband networks

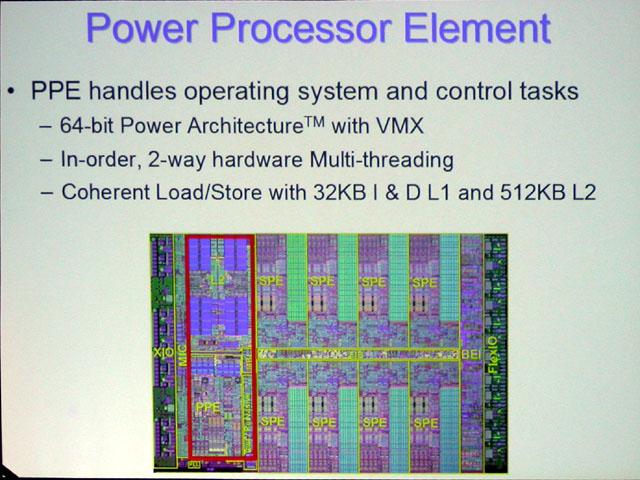

I think the demand for dot product calculations will slightly outstrip the PPE's capability.Panajev2001a said:Well, for those calculations use the VMX unit in the PPE, it might be better suited.

VMX doesn't have a single instruction dot-product, it just has a horizontal add but then VMX itself is a bit old and crusty. It has been significantly improved in some CPUs...

archie4oz said:And the horizontal summing can't be done with floats either... As for "improving" unless you're speaking of other SIMD architectures, AltiVec hasn't changed a bit ISA wise. The only differences have been with the various quirks with regards to physical implimentations...

DeanoC said:archie4oz said:And the horizontal summing can't be done with floats either... As for "improving" unless you're speaking of other SIMD architectures, AltiVec hasn't changed a bit ISA wise. The only differences have been with the various quirks with regards to physical implimentations...

There is at least one new version of VMX around, don't know if its 'official' though but it has VMX in its name.

Original VMX is pretty good , the new version just adds a few more features and lots of registers.

That's nothing but the classical "People are jumping the gun, and they should not, so somebody has to clarify the situation, even if it means writing a paper bordering condescension, and this somebody is me" kind of article.DaveBaumann said:Not a technical article, but Jon Peddie attempts to ground things a little:

http://www.jonpeddie.com/index.shtml

DaveBaumann said:Not a technical article, but Jon Peddie attempts to ground things a little:

http://www.jonpeddie.com/index.shtml

If it stepped into a 3D role specifically, possibly, but considering that hasn't been established (and likely won't for a while)--and B3D typically doesn't do console and other specialized 3D parts, instead focusing on PC solutions, I don't suppose we'll be seeing much.pc999 said:BTW will "B3D", make their own article about CELL