Posted on Tue, Jun. 22, 2004

Computer pioneer Goldstine dies at 90

BY GAYLE RONAN SIMS

Knight Ridder Newspapers

(KRT) - Herman Heine Goldstine, 90, the scientist who persuaded the U.S. military to back the development of the first computer, ENIAC, died Wednesday of Parkinson's disease at home in Bryn Mawr.

Dr. Goldstine's part in ENIAC began in 1942, when he enlisted in the Army. The Army sent the accomplished mathematician to the Ballistic Research Laboratory at Aberdeen Proving Ground in Maryland, where he worked on ordnance projects.

In 1943, he came across a memo from University of Pennsylvania scientists J. Presper Eckert and John Mauchly proposing that a calculating machine could be used to determine "firing tables" used to aim artillery. Those tables - the settings used for directing artillery under varied conditions and taking into account such variables as rounds, weather and atmospheric conditions, and distance to target - took hours to calculate. Dr. Goldstine persuaded the Army brass to fund the two young scientists' project, and the computer age was launched.

Dr. Goldstine understood the sophisticated ideas and principles that Mauchly and Eckert employed in developing digital computers, which operate on numerical values expressed as discrete digits rather than as analog quantities. Intrigued by their proposal, Dr. Goldstine lobbied the brass at Aberdeen Proving Ground in April 1943, requesting $500,000 to pay for the research. He believed the Army, which was already shipping guns overseas without firing tables, was in a big enough jam to put money on a long shot.

Dr. Goldstine made his case to a committee headed by the mathematician Oswald Veblen. Veblen brought his chair forward with a crash, got up, and said to Col. Leslie E. Simon, director of the Army's Ballistics Laboratory: "Simon, give Goldstine the money."

Dr. Goldstine ran the show for the Army, and a team of scientists and engineers was assembled at Penn's Moore School to build the computer, with Mauchly and Eckert supervising. The project was kept secret as a matter of national security.

ENIAC, an electronic computer that could compute a trajectory in one second, was born in 1945. It was enormous: 80 feet long, with an 8-foot-high collection of circuits and 18,000 vacuum tubes. ENIAC operated at 100,000 pulses per second.

It took so long to build that the war ended before it could be used for its original purpose - churning out firing tables used to aim artillery.

ENIAC was publicly announced in 1946 on the first floor of the Moore School. In 1947, most of ENIAC was moved to Aberdeen Proving Ground, where it continued to operate until 1955.

Some original parts remain at Penn, in the basement where it was built.

Dr. Goldstine left the military in 1946, and a year later he was appointed associate director for the electronic computer project at the Institute for Advanced Study in Princeton, N.J., where he collaborated with John von Neumann in designing the second generation of computers, EDVAC (Electronic Discrete Variable Automatic Computer). This computer and its successors were put to scientific and industrial uses.

IBM, realizing the computer's potential, hired Dr. Goldstine away from Princeton in 1958. Within two years, the company dominated the computer business. Dr. Goldstine stayed with IBM for 26 years, serving as director of mathematical sciences in research, director of scientific development for data processing, and director of research. He retired in 1984.

IBM established a Herman Goldstine Fellowship in mathematical sciences. In 1985, he was awarded the National Medal of Science for his work in the invention of the computer.

The author of five books, Dr. Goldstine wrote the widely read "The Computer from Pascal to Von Neumann."

In retirement, Dr. Goldstine became executive officer of the American Philosophical Society. During his tenure, he oversaw the construction of Benjamin Franklin Hall on South Fifth Street in Philadelphia.

Arlin M. Adams, a retired judge on the U.S. Court of Appeals for the Third Circuit and a longtime friend of Dr. Goldstine's, said: "Although Herman was known as a mathematician, he was very active in attracting foreign visitors to Philadelphia and the Philosophical Society."

Dr. Goldstine was born in Chicago and earned a bachelor's degree in 1933, a master's degree in 1934 and a doctorate in 1936, all in mathematics, from the University of Chicago. He taught at his alma mater and at the University of Michigan before enlisting in the Army. Always a thin man, he stuffed himself with bananas and milk shakes so he could gain enough weight to pass the military medical entrance exam for World War II.

Dr. Goldstine is survived by his wife of 38 years, Ellen Watson; a son, Jonathan; a daughter, Madlen Goldstine Simon; and four grandchildren. His first wife, Adele Katz, a mathematician who wrote a manual explaining how to program ENIAC, died in 1964 after 23 years of marriage.

A ceremony celebrating Dr. Goldstine's life will be held at the American Philosophical Society in the fall.

Memorial donations may be made to the Herman H. Goldstine Memorial Fund, American Philosophical Society, 104 S. Fifth St., Philadelphia 19106.

Source

Firstly, deepest condolences...

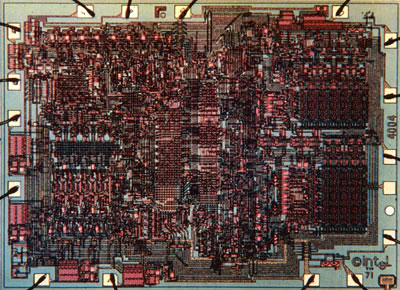

From 18k valves to 1 billion transistors...

From 100 kHz to 4 GHz...

From 80 ft by 8 ft to the size of a console...

The last 60 years sure have changed things, from ENIAC to CELL... What can we realistically expect in the next 60 years for CPU/GPU/RAM/media in consoles?