DavidGraham

Veteran

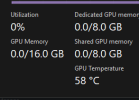

Congratulations on your 4070Ti, curious about your enhanced experiences over your previous 3060Ti.

No more than 7GB use on my 4070ti on ultra RT settings


Which game are you referring to when you're talking about 7 GB? Cyber?

Nope, no problems here.


I guess 1080p?

Yep.


Sigh!

Forspoken doesn't work on RX 400 and RX 500 GPUs, or on any earlier AMD GPUs (RX 200, RX 300), as they lack DirectX 12 feature level 12_1 support, mainly Conservative Rasterization and Raster Order Views! This also locks all NVIDIA Kepler and Fermi GPUs out of running the game, while GTX 900 and GTX 1000 cards can run it, as they support these features through feature level 12_1!
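To make the gate concrete, here is a minimal sketch of the check as I understand it, not the game's actual code. The capability table is hypothetical and just restates the claims in this post. On real hardware these two capabilities are reported through `ID3D12Device::CheckFeatureSupport` (the `ConservativeRasterizationTier` and `ROVsSupported` fields of the D3D12 options struct). The names `GpuCaps`, `CLAIMED_CAPS` and `meets_feature_level_12_1` are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuCaps:
    """Hypothetical capability record for a GPU family."""
    conservative_raster: bool   # Conservative Rasterization supported
    raster_order_views: bool    # Raster Order Views (ROVs) supported


# Assumption: values restate the claims made in the post above;
# nothing here was queried from real hardware or drivers.
CLAIMED_CAPS = {
    "AMD RX 200/300": GpuCaps(False, False),
    "AMD RX 400/500": GpuCaps(False, False),
    "NVIDIA Fermi": GpuCaps(False, False),
    "NVIDIA Kepler": GpuCaps(False, False),
    "NVIDIA GTX 900": GpuCaps(True, True),
    "NVIDIA GTX 1000": GpuCaps(True, True),
}


def meets_feature_level_12_1(caps: GpuCaps) -> bool:
    """Feature level 12_1 needs both features, so one missing one fails the check."""
    return caps.conservative_raster and caps.raster_order_views


def families_that_can_run() -> list[str]:
    """Return the families from the table that pass the 12_1 gate, sorted by name."""
    return sorted(
        name for name, caps in CLAIMED_CAPS.items()
        if meets_feature_level_12_1(caps)
    )


if __name__ == "__main__":
    for name, caps in CLAIMED_CAPS.items():
        verdict = "runs" if meets_feature_level_12_1(caps) else "blocked"
        print(f"{name}: {verdict}")
```

If the game really does hard-require 12_1 at device creation, no driver update will get Polaris past it. Only a patch that lowers the requirement would.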

AMD Radeon RX 400/500 GPUs reportedly can't run Forspoken - VideoCardz.com

Forspoken Polaris support is broken

Gamers report that they are unable to play Forspoken with some Radeon GPUs due to incompatibility with DirectX12 API. Redditor xCuri0 reports that AMD Polaris architecture is incompatible with the game Forspoken, as the game requires a higher version of the...

videocardz.com

Forspoken with DirectStorage in the tech test

With Forspoken, Square Enix ventures into something new. ComputerBase tests the technology that brings DirectStorage to PCs for the first time.

www.computerbase.de


This is my pet peeve. The worst offender is GTA V. The game opens at the bank job with Trevor, as Michael you take two f***ing steps and BAM! The game removes control from you and fires off tutorial boxes to read through.

The first 2-3 hours of "gameplay" look absolutely horrible with how often it takes control away from you and how little you're actually doing anything.

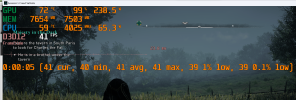

Are you aware that your textures do not load? Those capes have low-resolution textures. Same goes for other elements.

In the game, the modded one didn't look that inky black. It became like that after I converted the JXR HDR screenshot into SDR.

Yep. It never loads in the full game. In the demo everything was peachy before the bridge.

The funnier thing is, some people on another platform were convinced that it was caused by my 16 GB of RAM and low-end CPU (2700). They claimed the game would load textures fine with 32 GB and a modern Zen 3 CPU, since that's what it recommends.

Maybe there's a bug with the memory management indeed.

I can go to 16GB used out of 6.7GB available on an RTX 3070 LHR 8GB.

That's assuming the memory usage status means "currently in use / available".