http://www.theinquirer.net/default.aspx?article=37548

Hint #1 - Those chips pictured in the slides look REALLY familiar.

Hint #2 - x86 is an Intel strong point.

Rather than dither around about what you think Larrabee may or may not be, read the link.

-Charlie

I don't see any mention of how Larrabee handles coherence or caching in that story, but I did forget about the ring bus.

It sounds plausible that the large shared cache might exist as banks hanging off the bus at each ring stop, each bank being more closely tied to one processor than to the others.

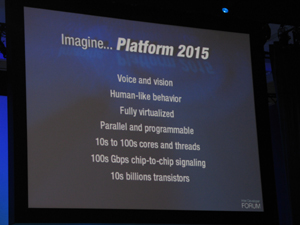

To tie this back to the slides: that arrangement would be consistent with the notion of non-uniform access.
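To make the non-uniform access idea concrete, here is a toy Python model of a banked shared cache on a ring. It assumes (hypothetically) one cache bank per ring stop, cache lines interleaved across banks by address, and a fixed cost per ring hop. None of the constants (`NUM_STOPS`, `HOP_LATENCY`, etc.) come from Intel; they are placeholders to show the shape of the effect, not Larrabee's real numbers.

```python
# Toy model of a banked shared cache on a bidirectional ring.
# All constants are illustrative assumptions, NOT Larrabee specifications.

LINE_SIZE = 64      # bytes per cache line (assumed)
NUM_STOPS = 8       # one core plus one cache bank per ring stop (assumed)
BASE_LATENCY = 10   # cycles for a hit in the local bank (assumed)
HOP_LATENCY = 2     # extra cycles per ring hop (assumed)


def home_bank(address: int) -> int:
    """Interleave cache lines across banks by line address."""
    return (address // LINE_SIZE) % NUM_STOPS


def ring_hops(src: int, dst: int) -> int:
    """Shortest distance between two stops on a bidirectional ring."""
    d = abs(src - dst) % NUM_STOPS
    return min(d, NUM_STOPS - d)


def access_latency(core: int, address: int) -> int:
    """Shared-cache hit latency, growing with ring distance to the line's home bank."""
    return BASE_LATENCY + HOP_LATENCY * ring_hops(core, home_bank(address))


if __name__ == "__main__":
    # Core 0 reading lines that live in different banks.
    for addr in (0x000, 0x040, 0x100, 0x1C0):
        print(hex(addr), "bank", home_bank(addr), "latency", access_latency(0, addr))
```

With these made-up numbers, core 0 sees 10 cycles for a line in its own bank but 18 cycles for one on the far side of the ring; that spread is what "non-uniform" would mean in practice.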

Also, the mention of arbitrary data duplication (new instructions/cache line states?) could be part of a solution for excessive coherence traffic.

Since cache hits do not generate coherency traffic, Intel would do well to give software some control over memory coherence, and to cut down on common-case coherency broadcasts by providing a fast way to copy chunks of data into multiple places in the shared cache.
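A toy directory model shows why hits are "free" while misses and writes to shared lines are not. This is a simplified MSI-style sketch, not Intel's protocol; `broadcast_fill` is a hypothetical stand-in for the kind of bulk duplication the slides hint at, where one operation places copies for several cores at once.

```python
from collections import defaultdict


class ToyDirectory:
    """Simplified MSI-style directory that counts coherence messages.

    Hypothetical illustration only: hits cost nothing, misses and
    writes to shared lines generate traffic.
    """

    def __init__(self) -> None:
        self.sharers = defaultdict(set)  # line -> cores holding a copy
        self.owner = {}                  # line -> core holding it Modified
        self.messages = 0

    def read(self, core: int, line: int) -> None:
        if core in self.sharers[line]:
            return                       # hit: no coherence traffic
        self.messages += 1               # read request to the directory
        if self.owner.pop(line, None) is not None:
            self.messages += 1           # downgrade current owner (M -> S)
        self.sharers[line].add(core)

    def write(self, core: int, line: int) -> None:
        if self.owner.get(line) == core:
            return                       # hit in Modified state: no traffic
        self.messages += 1               # ownership/upgrade request
        others = self.sharers[line] - {core}
        self.messages += len(others)     # one invalidation per other sharer
        self.sharers[line] = {core}
        self.owner[line] = core

    def broadcast_fill(self, cores, line: int) -> None:
        """Hypothetical bulk op: one message places copies for many cores."""
        self.messages += 1
        if self.owner.pop(line, None) is not None:
            self.messages += 1           # downgrade current owner first
        self.sharers[line].update(cores)


if __name__ == "__main__":
    per_core = ToyDirectory()
    for c in range(4):
        per_core.read(c, 9)              # four separate misses
    bulk = ToyDirectory()
    bulk.broadcast_fill(range(4), 9)     # one bulk placement
    for c in range(4):
        bulk.read(c, 9)                  # all hits afterwards
    print(per_core.messages, bulk.messages)
```

In this model four cores reading the same line cost four messages one at a time, versus one message with the bulk fill, after which every read is a hit. Whatever traffic remains is what the wide bus would have to absorb.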

The remaining coherence traffic would likely be swallowed up by the huge bus width.

It sounds like an attempt to get closer to Cell's scalability, with an ad hoc "local store" that is coherent when needed, as opposed to the SPEs' local stores, which lack coherency entirely.

The persistence of private caches suggests there is a latency penalty for accessing the large shared cache, and the multithreaded design of the mini cores points the same way, since switching between threads is a classic way to hide long memory latency.
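As a back-of-the-envelope check on the threading point, a common simple model of fine-grained multithreading says: if each thread computes for `work` cycles and then stalls for `latency` cycles, N threads keep the core busy a fraction min(1, N·work / (work + latency)) of the time. The sketch below uses that model with made-up numbers; it is not a description of Larrabee's actual thread scheduling.

```python
import math


def utilization(threads: int, work: int, latency: int) -> float:
    """Fraction of cycles the core does useful work (simple round-robin model)."""
    return min(1.0, threads * work / (work + latency))


def threads_needed(work: int, latency: int) -> int:
    """Smallest thread count that fully hides the stall in this model."""
    return math.ceil((work + latency) / work)


if __name__ == "__main__":
    # Assumed example: 10 cycles of work per 30-cycle shared-cache stall.
    for n in (1, 2, 4):
        print(n, utilization(n, 10, 30))
    print("threads needed:", threads_needed(10, 30))
```

The longer the assumed shared-cache latency relative to the work between accesses, the more threads per core the model demands, which is consistent with reading the mini cores' threading as a hint about that latency.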

That aside, as much as x86 is a strength in areas where it already predominates, I do not think Intel would do all that badly if it didn't go fully x86 for Larrabee. (Look how well x86 has done in embedded devices, cell phones, controllers, etc.)

On the other hand, if Intel wants to reuse the mini cores as helpers in a future Core 4 or something similar, keeping them x86 might make that less painful.

As a GPGPU, I see Larrabee as being very competitive.

As a GPU, not so much, at least not in the near future. Perhaps Larrabee 2 or 3, if Nvidia and ATI decide to lie down and die.