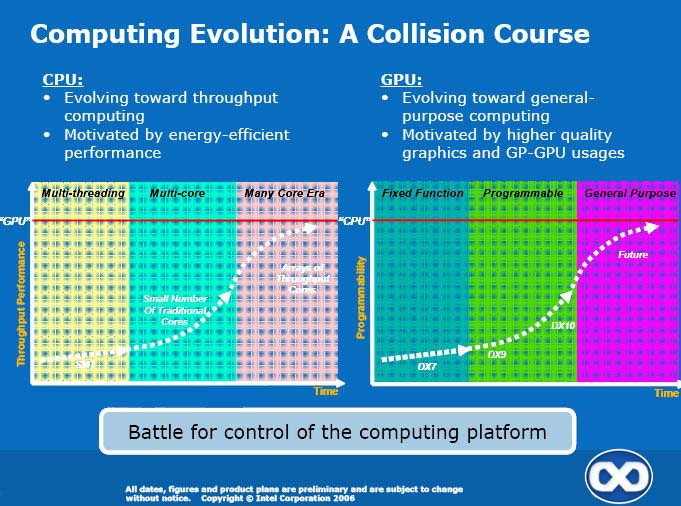

The war is on. The question is, are you ready?

Read on for the rest of the presentation, as well as our short analysis of Intel's proposed architecture.

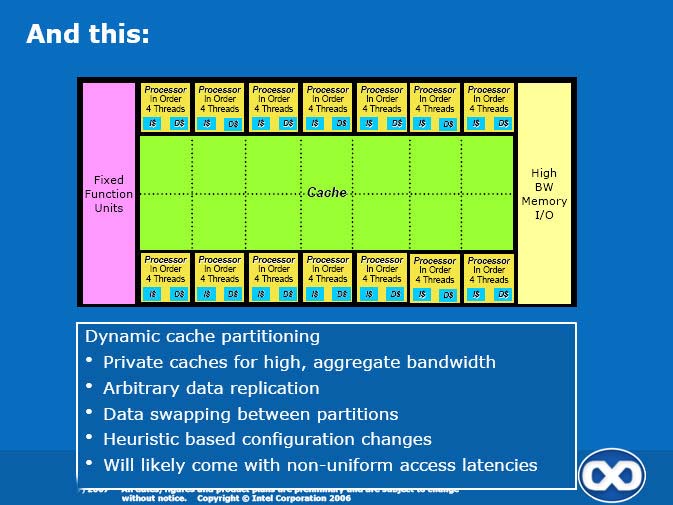

Back in February we reported that Intel's Douglas Carmean, the new Chief Architect of Intel's Visual Computing Group (VCG), which is in charge of GPU development, had been touring universities giving a presentation called "Future CPU Architectures -- The Shift from Traditional Models". Since then he has added a few more major university stops, and now the feared B3D ninjas have caught up with him. Our shadow warriors have scored a copy of Carmean's presentation, and we've selected the juicy bits for your enjoyment and edification. They concern the showdown that Intel sees as already underway between CPU and GPU makers.