Of course, we wouldn't expect you to.

But how's about just leaking the technical documents and specs?

Shifty Geezer

uber-Troll!

Kepler and GCN are pretty much tied in ALU efficiency.

I don't know why we keep calling them something else. Call them what everyone else but Microsoft would: DMA. That's what it is, DMA with fixed function compression/decompression stuck on the end of it.

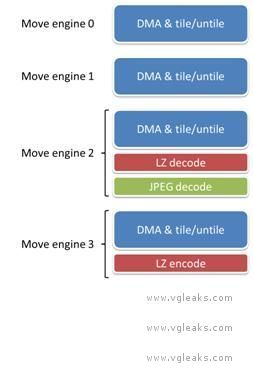

Here's a good graph explaining it from vgleaks.

As you can see, the top two 'DME's contain a DMA unit and a tile/untile unit; these two are what is present in ALL GCN cards (and probably older ones as well).

The other two below contain the previous two's functionality but also add the FF decompression/compression.

Probably more informative just to ask him which version of the game he'd buy....

Now back on the efficiency thing and how Teraflops can be irrelevant in some cases, this is what I've found.

...

Bonaire 7790 HD has truly amazing efficiency without crippling compute. xD

...

For instance, I LOVE how the 7790 HD Bonaire is about as powerful as the AMD 6870, and even more powerful sometimes, but consumes 60% less power. :smile2:

So what do we have here?

Yes, the Xbox One connection. :smile2:

The conclusion is that a more expensive, (theoretically) more powerful graphics card, the 6870, doesn't perform quite as well in games as the Bonaire 7790 HD.

What's the mystery then? I guess it's all in the efficiency of the new design, and that the 7790 HD is most probably based upon a console chip.

You might wonder what's the special sauce then. Well, being the basis of the Xbox One GPU, the secret sauce is that it combines the greater geometry processing power (2 primitives per clock) of the 7900 -Tahiti- and 7800 -Pitcairn- cores with a reduced 128-bit memory bus.

It was the first time ever someone had tried something like this.

This is the theory, but here we have the results, the fruits of AMD's labour on this, and how efficiency and smart console-like design defeats a power hungry beast.

In real terms this means that the paper specs say nothing if we don't back them up with actual results, and that, imho, teraflops alone aren't the only indication of how capable the hardware is.

Actually paper specs will tell you pretty much everything you need to know about a system's performance as long as you account for ALL aspects of the system and know what workload you're using to compare.

As I said, if I understood it correctly, the console has 4 move engines, and this allows for fast direct memory access to take place.

Considering that 2 DMA units are par for the course on GCN, I really wouldn't put the move engines down as a 'performance' advantage; they are just strange Microsoft speak for the usual DMA + compression hardware.

The four move engines all have a common baseline ability to move memory in any combination of the following ways:

(....)

- From main RAM or from ESRAM

- To main RAM or to ESRAM

- From linear or tiled memory format

- To linear or tiled memory format

- From a sub-rectangle of a texture

- To a sub-rectangle of a texture

- From a sub-box of a 3D texture

- To a sub-box of a 3D texture

All of the engines share a single memory path, so the maximum combined throughput of all four engines is the same as that of a single engine.

(....)

The great thing about the move engines is they can operate at the same time as computation is taking place. When the GPU is doing computations, the engines' operations are still available. When the GPU is bandwidth bound, move engine operations can still be available so long as they use different pathways.
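To put rough numbers on the two points above (one shared memory path, and copies running alongside GPU work), here is a minimal back-of-the-envelope sketch. The bandwidth, copy size and compute time are made-up values for illustration, not figures from the leak, and the function names are hypothetical.

```python
def copy_time_ms(bytes_to_move, path_gb_per_s):
    """Time to move the given bytes over one memory path, in milliseconds."""
    return bytes_to_move / (path_gb_per_s * 1e9) * 1e3


def split_copy_time_ms(bytes_to_move, path_gb_per_s, engines):
    """Split one copy evenly across several engines that share one path.

    Each engine moves a fraction of the data but also only gets a fraction
    of the shared bandwidth, so the total time does not improve.
    """
    per_engine_bytes = bytes_to_move / engines
    per_engine_bandwidth = path_gb_per_s / engines
    return copy_time_ms(per_engine_bytes, per_engine_bandwidth)


def frame_time_ms(compute_ms, copy_ms, overlapped):
    """Serial: copy, then compute. Overlapped: the longer of the two dominates."""
    return max(compute_ms, copy_ms) if overlapped else compute_ms + copy_ms


# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
PATH_GB_PER_S = 25.0   # assumed shared path bandwidth
COPY_BYTES = 50e6      # assumed amount of data to move per frame
COMPUTE_MS = 10.0      # assumed GPU compute time per frame

one_engine = copy_time_ms(COPY_BYTES, PATH_GB_PER_S)
four_engines = split_copy_time_ms(COPY_BYTES, PATH_GB_PER_S, 4)
print(f"1 engine: {one_engine:.2f} ms, 4 engines: {four_engines:.2f} ms")
print(f"serial frame:     {frame_time_ms(COMPUTE_MS, one_engine, False):.2f} ms")
print(f"overlapped frame: {frame_time_ms(COMPUTE_MS, one_engine, True):.2f} ms")
```

Under these assumptions the gain comes from hiding the copy behind compute, not from having four engines instead of one.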

Pc....

Yup, some questions that could likely work with him would be something like, for instance:

Since there's a nextgen Trials in development, I'd say there's a good chance sebbbi knows a lot more than he's allowed to say at this time

Of course, we wouldn't expect you to.

But how's about just leaking the technical documents and specs?

Shifty just spoiled my secret question, he has been a visionary. :smile: As with his work as a moderator, I wondered if he would be either too strict or too lenient, but I guess he would be too straightforward. You need to be more tactful with such a sensitive developer like sebbbi. heh I'd deem Shifty's approach too progressive.

Nicely said. I wonder if it would work. Your idea is a conundrum itself.

Probably more informative just to ask him which version of the game he'd buy....

he he

As I said, if I understood it correctly, the console has 4 move engines, and this allows for fast direct memory access to take place.

The true purpose of the move engines -excuse me if I am wrong- is to take workloads off of the rest of the architecture while still yielding positive results at a very low cost. Perhaps the so called *secret sauce*

From VGLeaks:

http://www.vgleaks.com/world-exclusive-durangos-move-engines/

From the leaked specs it would be my best guess that PS4 in most GPU limited situations would have an advantage performance wise, and certainly it has an advantage from a development standpoint.

I think AMD's technology is better at efficiency nowadays. I was SO happy when first rumours said MS would go AMD this gen...

Well, maybe this is a "you know something I don't" thing, but I've done some figuring, and Nvidia does seem to maintain perhaps a 10-20% advantage per flop with the latest GPUs. Of similarly performing GPUs with similar bandwidth, Nvidia's usually has somewhat fewer raw flops.

But it has of course shrunken incredibly since AMD went away from VLIW, as you'd expect. It's now more a footnote.

This is a completely bizarre comparison. You're comparing a previous generation GPU built on a 40nm process to a current generation GPU built on a 28nm process. And you're surprised the newer one is more efficient?

This has nothing to do with "console efficiency" and everything to do with GPU's getting more efficient between generations and obviously more power efficient between node transfers.

No you don't. You have the connection of 1 AMD architecture progressing to a newer, more efficient AMD architecture with the help of a node change. This is the same thing that happens every new GPU generation whether or not there's a console based on the new architecture.

As one could reasonably expect when comparing an older generation GPU with a newer one.

Oh geez, you've got to be kidding? So your theory is that the 7790 - and by extension the rest of the GCN family, which shares almost identical efficiency - is only as efficient as it is because "it's based on a console chip?"

Never mind the fact that this architecture was on the market near enough 2 years before it comes to market in console form?

Tell me, how did Nvidia achieve similar efficiency with Kepler without the benefit of it being based on a console chip?

No, this isn't special sauce. It's simply another configuration of a highly modular architecture. So what if the 7790 is the first implementation of 2 setup engines plus a 128bit bus? Tahiti was the first to do that with a 384bit bus, Pitcairn was the first to do it with a 256bit bus. Are these equally secret sauce endowed or does only the 128bit bus qualify as the magic ingredient?

Again, no. GCN is not a "smart console-like design". It is AMD's current generation (currently around 1.5 years old) PC architecture that has been leveraged for use in consoles (partly) because of its high efficiency.

It only defeats a "power hungry beast" that is built on a larger node and a much older design. Compared to a "power hungry beast" on the same node and a modern design like Tahiti or Kepler it's clearly much slower.

Actually paper specs will tell you pretty much everything you need to know about a system's performance as long as you account for ALL aspects of the system and know what workload you're using to compare.

TFLOPS alone obviously aren't a great metric, but when we're comparing across the same architecture, as you would be when comparing X1 with PS4 or indeed a GCN powered PC, then 50% higher TFLOPS (not to mention texture processing) means exactly 50% higher throughput in shader and texture limited scenarios. You obviously have to compare other aspects of the system like memory configuration, fill rate, geometry throughput and CPU performance to get a more accurate picture of the systems' overall comparative capabilities. And even once you know all that, without knowing which elements of the system the workload is going to stress most you're still going to struggle to understand which will perform better.

All you can really say is that one has x% more potential in that type of workload provided no other bottlenecks are encountered first. Then wait for the games to come out and see if they take advantage of that potential or not.
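For reference, the "50% higher" figure falls straight out of the peak FLOPS arithmetic for a GCN part. The sketch below assumes the compute unit counts and clock from the leaked specs circulating at the time (12 and 18 CUs, both at 800 MHz); those inputs are assumptions, not confirmed hardware figures. The 64 lanes per CU and 2 FLOPs per lane per clock (one fused multiply-add) are standard for GCN.

```python
def gcn_peak_tflops(compute_units, clock_mhz, lanes_per_cu=64, flops_per_lane=2):
    """Theoretical peak single-precision TFLOPS for a GCN-style GPU.

    Each CU has 64 ALU lanes, and each lane can retire one fused
    multiply-add (counted as 2 FLOPs) per clock.
    """
    flops_per_second = compute_units * lanes_per_cu * flops_per_lane * clock_mhz * 1e6
    return flops_per_second / 1e12


# Assumed inputs taken from the leaked specs, not official numbers.
x1 = gcn_peak_tflops(compute_units=12, clock_mhz=800)
ps4 = gcn_peak_tflops(compute_units=18, clock_mhz=800)

print(f"X1:  {x1:.4f} TFLOPS")
print(f"PS4: {ps4:.4f} TFLOPS")
print(f"ratio: {ps4 / x1:.2f}x")
```

That ratio is only an upper bound on the real difference: it applies when the workload is purely shader limited and nothing else in the system gets in the way first.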

Why do you say that?

Do you know your tools are better or more complete than MS's or are you referring to being unable to talk directly to the hardware on XB1?

Yes, Trials Fusion was announced at E3. It will be released for XB1, PS4, PC and Xbox 360

Well, mine would be a very innocent question, actually, as I rather prefer to be as tactful as possible with you, because of NDA and stuff.

It goes without saying that I will not comment on any unofficial rumors/leaks/speculation about either one of the new consoles.

I was just talking about the pure ALU efficiency, not the efficiency of the full architecture. In general, when running well behaving code (no long complex data dependency chains), the ALUs should be very close in efficiency (assuming all data is in registers or L1). Of course when we add the differences in memory architecture and caches (sizes/associativities/latencies) there will be bigger real world differences. And when we add the fixed function graphics hardware (triangle setup, ROPs, TMUs, etc) there will be even more potential bottlenecks that might affect the game frame rate.

Actual GPU performance is hard to estimate from the theoretical numbers alone. These are very complex devices, and the performance is often tied to bottlenecks. One piece of hardware might for example be much faster in shadow map rendering (fill rate, triangle setup), but be bottlenecked by ALU in the deferred lighting pass. Still, the frame rate could be comparable to other hardware with completely different bottlenecks. But in another game performance might be completely different, since the other game might have completely different rendering methods (that are bottlenecked by different parts of the GPUs).

Good balance is important when designing a PC GPU. PC GPUs must both be able to run current games fast, and be future proof for new emerging rendering techniques.

Nvidia does seem to maintain perhaps a 10-20% advantage per flop with latest GPU's.
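The bottleneck argument above (different passes limited by different parts of the GPU) can be illustrated with a toy model: each pass takes as long as its slowest resource, and the frame is the sum of its passes. Everything here is hypothetical, including the two GPUs, the resource names, and the work and rate numbers. It is a sketch of the reasoning, not a model of any real hardware.

```python
def pass_time_ms(work, rates):
    """A pass takes as long as its most contended resource: max(work / rate)."""
    return max(work[r] / rates[r] for r in work)


def bottleneck(work, rates):
    """Name of the resource that limits this pass on this GPU."""
    return max(work, key=lambda r: work[r] / rates[r])


def frame_time_ms(passes, rates):
    """Total frame time, assuming the passes run one after another."""
    return sum(pass_time_ms(work, rates) for work in passes.values())


# Hypothetical GPUs: work units each resource can process per millisecond.
gpu_a = {"alu": 1.0, "fill": 2.0}   # strong fill rate, weaker ALU
gpu_b = {"alu": 2.0, "fill": 1.0}   # strong ALU, weaker fill rate

# Hypothetical games: work units each pass demands from each resource.
game_1 = {
    "shadow_maps": {"alu": 1.0, "fill": 4.0},
    "deferred_lighting": {"alu": 4.0, "fill": 1.0},
}
game_2 = {
    "deferred_lighting": {"alu": 8.0, "fill": 1.0},
}

for name, game in (("game 1", game_1), ("game 2", game_2)):
    a = frame_time_ms(game, gpu_a)
    b = frame_time_ms(game, gpu_b)
    print(f"{name}: GPU A {a:.1f} ms, GPU B {b:.1f} ms")
```

With these made-up numbers the two GPUs tie in the first game, because each loses time in a different pass, while the ALU-heavy second game clearly favours GPU B.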

I expected next generation games to look very good. That has always happened (PS1->PS2 / PS2->PS3 / XBox->x360). Why would it be any different this time?

Out of pure curiosity: Do you think Xbox One games looked as one should expect from the console? Or are you too surprised about how good games looked? Why or why not?