Driver optimizations aren't as important as you might believe in this day and age. Applications are now expected to be designed to hit the "fast paths" in a driver/hardware, and if they don't, well, there's not a whole lot a 'thin' driver implementation can do to save them. Great power (pipeline states/persistent mapping/bindless/GPU-driven rendering) comes with great responsibility (barriers/PSOs/explicit memory management), since these features frequently shrink the 'black box' contained in the driver, which ties the hands of IHVs ...

Even with those "low level" APIs, driver optimizations are still important. IHVs need to know which use cases are most common and what the major bottlenecks are likely to be. For example, if a commonly used operation is slow in some way, applications relying on it won't reach the full potential of the hardware. This is not necessarily a hardware limitation; knowing which operations are most important to optimize is critical in both hardware and software design.

An IHV today might frequently do some shader replacement to bypass the driver compiler, or set an application to use an alternate API implementation path in the driver, but the days of IHVs outright fixing or working around buggy graphics code, or replacing major sections of a game's renderer, are well behind us ...

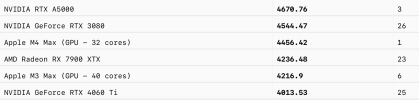

If specific modern API usage patterns are found to be slow, then it's either a case of genuinely mediocre hardware (the HW functionality itself is slow) or of complex API emulation in the driver (the HW has no native functionality for the API feature). If Apple wants to be more competitive in high-end graphics technology, then they have to design their future graphics architectures to be closer to AMD/NV (where the HW does MOST of the API work for them) or end up like the others (Intel/QCOM). No PC/console developer will write an "Apple-specific renderer" that requires content changes just to avoid pitfalls that rival vendors don't have, since those rivals already ship fast API/HW implementations that developers mostly stick to using! What *worked* for Apple in mobile land isn't going to work in console/desktop land ...