DavidGraham

Veteran

The M3 Ultra runs Assassin’s Creed Shadows at 45fps using 4K medium settings with upscaling set to Performance (meaning a 1080p internal resolution).
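Here is the "(meaning 1080p)" arithmetic spelled out. Performance mode renders each axis at half the output resolution, so a 4K frame starts life as 1920x1080, which is a quarter of the pixels. This is a minimal Python sketch. `PRESET_SCALE` and `internal_resolution` are hypothetical names, and the 0.5 per-axis factor is inferred from the post's own 4K-to-1080p figure, not from any game or upscaler documentation.

```python
# Assumed per-axis render scale for the upscaler preset (inferred from 4K -> 1080p).
PRESET_SCALE = {"Performance": 0.5}


def internal_resolution(out_w: int, out_h: int, preset: str) -> tuple:
    """Resolution the game renders at before the upscaler reconstructs the output."""
    scale = PRESET_SCALE[preset]
    return round(out_w * scale), round(out_h * scale)


w, h = internal_resolution(3840, 2160, "Performance")
print(w, h)                      # 1920 1080
print(w * h / (3840 * 2160))     # 0.25 (a quarter of the 4K pixel count)
```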


Is there some bigger compilation of Mx GPU performance in games to see how it compares to AMD/Intel/NVIDIA?


I only posted this score because Assassin's Creed Shadows is a native Apple port.

I know the M-series silicon is highly performant on native workloads.

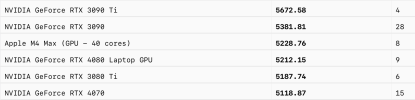

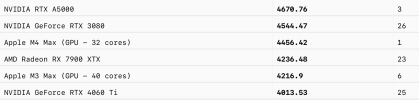

This is beyond bad. The M4 Max scores worse than the M3 Ultra, by the way. This is how other GPUs perform at max settings.

Is that even good? That’s a $4000 computer before any upgrades?

Unfortunately there is none. However, you can do a little digging and find out. There are basically three other AAA games with native Apple silicon implementations: Resident Evil 8, Resident Evil 4 Remake and Death Stranding.

In the era of 'explicit' graphics APIs, per-app driver optimizations wouldn't truly be a sufficient explanation for a performance gap of more than 2x. The gap is most likely down to their hardware design being suboptimal for AAA PC/console games. Their graphics division prioritizes its resources towards enhancing mobile games or productivity applications rather than optimizing their hardware around PC/console graphics technology ...

I guess it's common to underestimate the value of driver optimizations. The huge efforts made by both NVIDIA and AMD over the years are not trivial and can't be easily replicated. Intel's Arc is a good example (and they are already doing pretty well).

When running simpler workloads such as an LLM, an M3 Ultra is roughly equivalent to a 3090 or 3090 Ti (the much larger memory is, of course, invaluable). So I guess it's not unreasonable to expect an M3 Max (which is half of an M3 Ultra) to land somewhere in the range of a 3060 Ti or 3070.
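One way to see why an LLM is a comparatively simple yardstick: single-stream token generation is largely memory-bandwidth bound. A crude ceiling is therefore peak bandwidth divided by model size.

This is a sketch under stated assumptions:
- The bandwidth-bound rule of thumb is an assumption, not a claim made in the post.
- The figures are vendor peak specs.
- `MODEL_GB` and `max_tokens_per_second` are hypothetical.

```python
# Crude ceiling for single-stream LLM decoding, ASSUMING (rule of thumb, not from
# the post) that each generated token streams every weight from memory once,
# so speed is bounded by bandwidth / model size.

# Vendor peak memory bandwidth in GB/s.
PEAK_BANDWIDTH_GBPS = {
    "RTX 3090 Ti": 1008,
    "RTX 3090": 936,
    "M3 Ultra": 819,
    "RTX 3070": 448,
    "RTX 3060 Ti": 448,
    "M3 Max (40-core GPU)": 400,
}

MODEL_GB = 6.0  # hypothetical quantized model small enough for every GPU listed


def max_tokens_per_second(bandwidth_gbps: float, model_gb: float) -> float:
    """Upper bound on tokens/s if each token reads all weights exactly once."""
    return bandwidth_gbps / model_gb


for name, bw in PEAK_BANDWIDTH_GBPS.items():
    print(f"{name:<22} <= {max_tokens_per_second(bw, MODEL_GB):6.1f} tokens/s")
```

Under this assumption, the M3 Ultra lands just below the 3090. The M3 Max, with roughly half the Ultra's bandwidth, sits just below the 3060 Ti/3070 tier. Real throughput stays below these ceilings.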


Driver optimizations aren't as important as you would believe in this day and age. Applications are now expected to be designed to reach the "fast paths" in the driver/hardware. If they don't, there's not a whole lot a 'thin' driver implementation can do to save them. Great power (pipeline states/persistent mapping/bindless/GPU-driven rendering) comes with great responsibility (barriers/PSOs/explicit memory management). These features frequently reduce the amount of 'black box' logic contained in the driver, which ties the hands of IHVs ...

Even with those "low-level" APIs, driver optimizations are still important. Vendors need to know which use cases are most common and what the major bottlenecks could be. For example, if a commonly used operation is slow in some way, the hardware won't reach its full potential. This is not necessarily a hardware limitation, but knowing which operations matter most is critical in both hardware and software design.
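Here is a toy illustration of the "great responsibility" side of explicit APIs. The application, not the driver, is expected to know its pipeline permutations and compile them up front. Everything here (`PipelineKey`, `PipelineCache`, the string standing in for a compiled PSO) is hypothetical and does not model any real API. It only shows where the fast and slow paths sit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineKey:
    """Hypothetical subset of the state a pipeline state object (PSO) bakes in."""
    vertex_shader: str
    fragment_shader: str
    blend_enabled: bool
    color_format: str


class PipelineCache:
    """Toy model: compiling is the slow path, a dictionary lookup is the fast path."""

    def __init__(self) -> None:
        self._pipelines: dict = {}
        self.compiles_during_frame = 0

    def _compile(self, key: PipelineKey) -> str:
        # Stand-in for an expensive shader/PSO compile inside the driver.
        return f"pso<{key.vertex_shader}+{key.fragment_shader}>"

    def prewarm(self, keys) -> None:
        """Compile every known permutation ahead of time (e.g. on a loading screen)."""
        for key in keys:
            if key not in self._pipelines:
                self._pipelines[key] = self._compile(key)

    def get(self, key: PipelineKey) -> str:
        """Draw-time lookup; a miss forces a compile mid-frame, i.e. a hitch."""
        if key not in self._pipelines:
            self.compiles_during_frame += 1
            self._pipelines[key] = self._compile(key)
        return self._pipelines[key]


opaque = PipelineKey("mesh_vs", "gbuffer_fs", False, "rgba8")
glass = PipelineKey("mesh_vs", "glass_fs", True, "rgba16f")

cache = PipelineCache()
cache.prewarm([opaque])
cache.get(opaque)  # fast path: compiled during loading
cache.get(glass)   # slow path: the app never declared this permutation
print(cache.compiles_during_frame)  # 1
```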

Shader replacement can't really explain the major gap. If a game is "poorly optimized" on their hardware, that's almost certainly because Apple's GPU designs aren't built around PC/console graphics workloads. They don't see many releases of graphically high-end games, so they have little incentive to optimize their hardware for them. Those workloads use deferred renderers, async compute, GPU-driven rendering, bindless resources, virtual texturing/geometry and all sorts of other state-of-the-art graphics techniques that mobile graphics hasn't made the leap to yet ...

I don't really see which part of what you said contradicts what I said. The term "driver optimization" means many things, including what you said, such as shader replacements (which are probably the major part of today's driver optimizations).

We don't really know what the problem (or problems) behind Apple's poor gaming performance is, but it's certainly not because the hardware is too weak. Therefore, the most likely problems are poorly optimized games, poorly optimized drivers (which includes the API layer), or some hardware inefficiencies. If I had to guess, I'd say it's probably all of them. Years of experience writing drivers for those games also helps when you need to diagnose problems in a game's rendering pipeline. I doubt that Apple is able to provide the same level of support and insight to game developers as NVIDIA and AMD can.


Their shader architecture might look fine on the surface, but what about their fixed-function hardware, about which they disclose very little? Tile-based renderers (Apple's designs belong to this family) aren't known to scale well with very high geometry density, and quite a few implementations don't even feature hardware blending units! Apple's hardware goes so far as to NOT implement industry-standard-compliant (D3D12/VK) texture units. So we have to wonder whether games had to use "performance-degrading workarounds", since the API itself is deficient (and, by extension, so is the hardware design) ...
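To make the geometry-density point concrete: a tile-based renderer first bins every transformed triangle into a list for each screen tile it touches, and only then shades tile by tile. That binning data grows with triangle count, so dense geometry means more intermediate memory traffic before a single pixel is shaded.

This is a simplified Python sketch. The 32-pixel tile size, the conservative bounding-box binning and the helper names (`bin_triangles`, `binned_entries`, `grid_mesh`) are illustrative assumptions, not Apple's undisclosed implementation.

```python
from collections import defaultdict

TILE = 32  # assumed tile size in pixels


def bin_triangles(triangles, width, height, tile=TILE):
    """Conservative binning: add each triangle's index to every tile its bounding box overlaps."""
    bins = defaultdict(list)
    tiles_x = (width + tile - 1) // tile
    tiles_y = (height + tile - 1) // tile
    for i, tri in enumerate(triangles):
        xs = [p[0] for p in tri]
        ys = [p[1] for p in tri]
        x0 = max(int(min(xs)) // tile, 0)
        x1 = min(int(max(xs)) // tile, tiles_x - 1)
        y0 = max(int(min(ys)) // tile, 0)
        y1 = min(int(max(ys)) // tile, tiles_y - 1)
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(i)
    return bins


def binned_entries(bins):
    """Total per-tile list entries, a rough proxy for binning memory traffic."""
    return sum(len(v) for v in bins.values())


def grid_mesh(width, height, cell):
    """Two triangles per grid cell; a smaller cell means denser geometry."""
    tris = []
    for y in range(0, height, cell):
        for x in range(0, width, cell):
            a, b, c, d = (x, y), (x + cell, y), (x, y + cell), (x + cell, y + cell)
            tris += [(a, b, c), (b, d, c)]
    return tris


for cell in (64, 8):
    mesh = grid_mesh(256, 256, cell)
    print(cell, len(mesh), binned_entries(bin_triangles(mesh, 256, 256)))
```

The same screen area produces far more binning work at the finer tessellation, even though the number of shaded pixels does not change.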

In the past, Metal had to support multiple vendors since Apple didn't offer in-house GPU solutions in the desktop space. That eventually changed too, so now Metal, and especially its newer iterations, should be a MUCH CLOSER reflection of their fundamental hardware design!

GPU-driven rendering is still a pain point for mobile GPUs in general, and many PC/console ports don't make good use of renderpass APIs either (because they're not required in D3D12!). So I believe we DO actually have a good idea of the source of the bad performance, and I think Apple's own engineers know as well, since it's their job to profile these applications too ...

You talked about a lot of possible reasons why Apple's hardware performs badly in those games, but you don't know which ones (if any) are actually responsible. That's exactly my point: both NVIDIA and AMD have years of experience supporting games, so they know. Apple doesn't, so they probably don't know. If Apple had similar experience, they'd have more suitable hardware designs, or they'd know how to advise game developers to work around these issues.
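Why the renderpass point matters on a tile-based GPU: if a deferred renderer's G-buffer attachments stay in on-chip tile memory for the whole pass, they never have to be written out to DRAM and read back. In Metal terms, that is roughly memoryless attachments with a `dontCare` store action.

Here is a back-of-the-envelope Python sketch. The assumptions are:
- a G-buffer of four attachments at 4 bytes per pixel,
- native 4K at 60 fps,
- a single store-plus-load round trip.

These numbers and the helper name are illustrative assumptions, not measurements from any game.

```python
def gbuffer_dram_traffic_gb_per_s(width, height, attachments, bytes_per_pixel,
                                  fps, kept_on_chip):
    """DRAM traffic for storing the G-buffer and loading it back once for lighting.

    kept_on_chip=True models a render pass whose attachments live only in
    tile memory, so that round trip never reaches DRAM.
    """
    if kept_on_chip:
        return 0.0
    bytes_per_frame = width * height * attachments * bytes_per_pixel
    round_trip = 2 * bytes_per_frame  # one store + one load
    return round_trip * fps / 1e9


spilled = gbuffer_dram_traffic_gb_per_s(3840, 2160, 4, 4, 60, kept_on_chip=False)
on_chip = gbuffer_dram_traffic_gb_per_s(3840, 2160, 4, 4, 60, kept_on_chip=True)
print(f"spilled to DRAM: {spilled:.1f} GB/s, kept in tile memory: {on_chip:.1f} GB/s")
# spilled to DRAM: 15.9 GB/s, kept in tile memory: 0.0 GB/s
```

A port that splits the work into separate passes forces the spilled case on every frame. Real renderers read the G-buffer more than once, so this understates the cost.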

I also don't think the games are very well optimized for Apple's hardware. Some games were ported to mobile phones and they didn't run very well either, so they didn't sell very well. Unfortunately, I don't think many game developers are willing to spend a lot of resources on optimizing for Macs.


Sure, it helps that AMD and Nvidia have more experience, but first and foremost they're proactive in how they approach high-end graphics. That contrasts with Apple's proscriptive stance of preferring to do nearly NOTHING about their problems, because they don't want to spend resources on making TWO separate architectures (one for desktop and one for mobile) with different drivers. They're well AWARE that what they're doing for mobile graphics isn't translating well to desktop graphics ...

How can they not make separate architectures at this point? Mobile graphics lives in an alternate reality where forward rendering is still the default lighting technique and compute shader performance is questionable. The low geometry density of mobile content and carefully minimized renderpasses (which go out the window with desktop-style deferred rendering) also work well for tile-based renderers ...

I think if they wanted to, and if they knew how, they wouldn't even have to make two separate architectures. It's entirely possible to make something workable for both settings. But Apple never really liked games (despite the fact that the majority of App Store apps are games), and they've only recently started actively pushing games on Macs. It's just not that easy to overcome these years of neglect.

I think what Apple should do (if they're truly serious about high-end graphics) is establish an independent graphics division with its own management, able to create D3D12/Windows-compatible graphics cards. That way they'd have more freedom to compete directly against AMD/NV, because right now Apple refuses to open itself up to competitive pressure in that segment ...

Personally, I'm not bullish on Mac games because Macs are expensive and many people buy them as work machines. The market share is just not big enough to justify the development cost for many game studios (unless Apple is willing to subsidize them, which I believe is probably the case for many projects). Today's game developers already face huge hurdles optimizing their games well for PC, and it just doesn't make much sense to do more work for a much smaller market.

The business practices of individual game companies are not really Apple's "business" as long as they play by the rules (set by society and elected officials). But yes, Apple does make more money in the gaming segment than Microsoft, Nintendo and Sony combined. A lot of the games with dubious practices also happen to be available on consoles and Android, and app/game stores are filled with gacha/gambling mechanics.

Apple makes the most money on games from iOS, that is, mobile games with things like loot boxes and other distasteful business models.

In fact, I believe Apple makes more money from games, if you can call mobile games that try to get addicts to spend a lot on microtransactions real games.

It's doubtful that they are interested in catering to the gaming market. Sure, they will have demos at some keynotes, but they know they're not selling a lot of M4 Max and M3 Ultra Macs to gamers.