Not even close.

Link to the tests?

https://www.spec.org/gwpg/gpc.data/vp12.1/summary.html

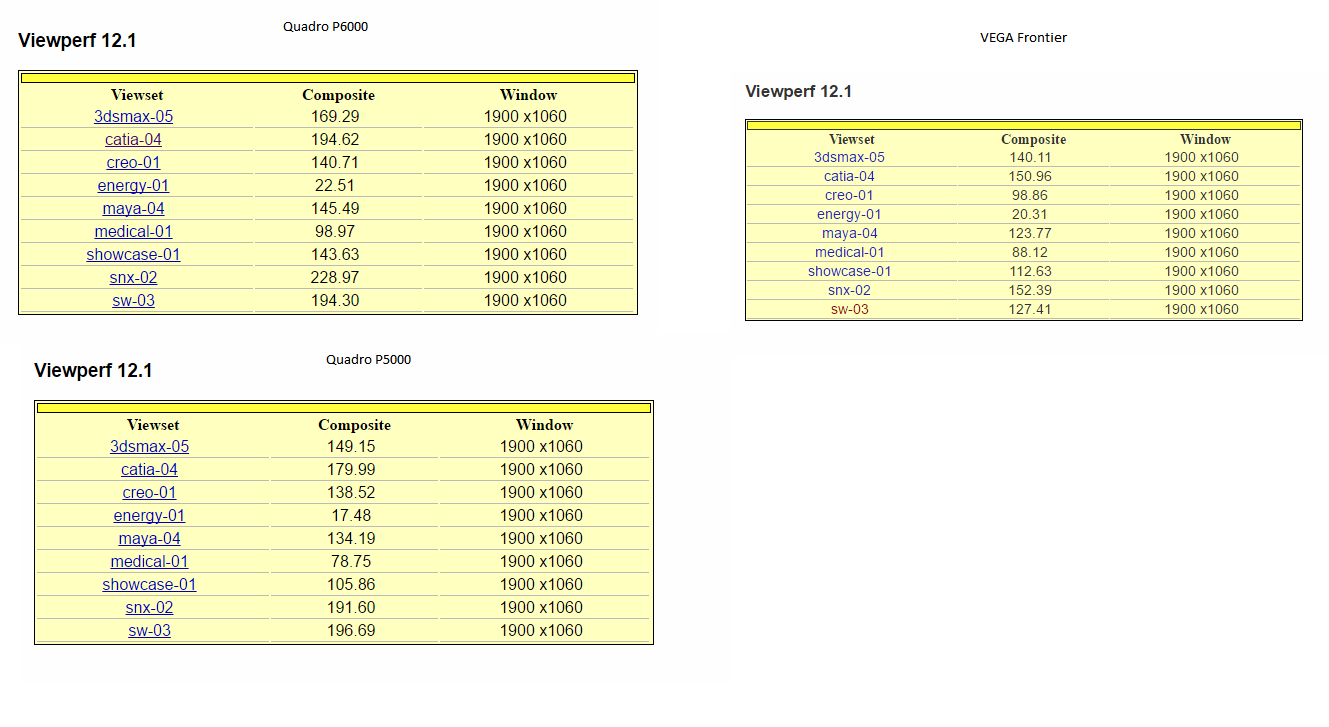

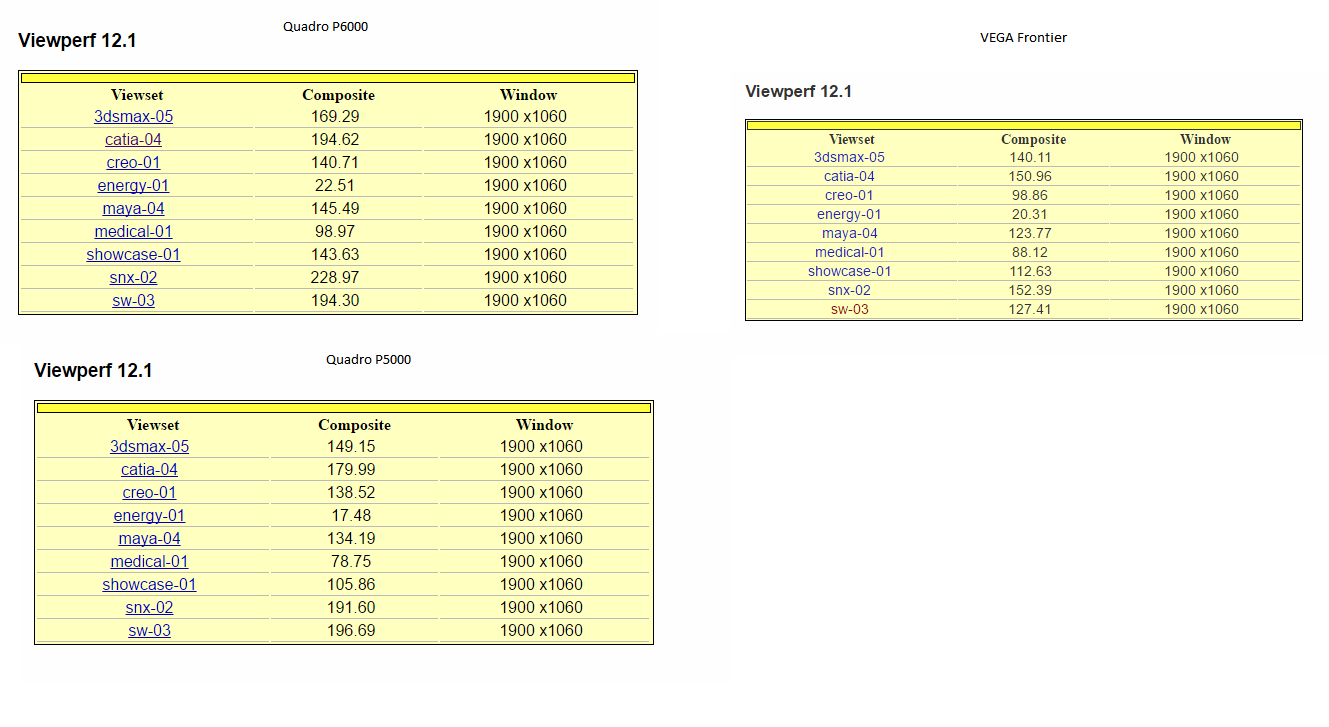

There seem to be 2 kinds of P6000 results (Filter): 2 are like the one you have there, and 2 are lower, quite close to Vega. The same goes for the P5000.


I don't think the issues raised can be so readily dismissed.

Lul... Is Pascal more bandwidth efficient than Polaris? Sure, no question about it.

Does this mean that the Pascal color compression is "better"? Not necessarily (it's even difficult to define what "better" means)...

If AMD's Polaris had implemented the exact same compression scheme as Pascal, it would likely be slower and would consume more bandwidth than it does now.

*cough* Bullshit. *cough*

...which makes it difficult to impossible to compare.

Oh, I haven't had any problem comparing the two... YMMV.

Delta compression is by its nature less efficient than whole color compression, topping out at just 2:1 compared to 8:1 for the latter.
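A toy model makes the 2:1 versus 8:1 ceiling concrete. This is a hypothetical sketch, not AMD's or NVIDIA's hardware format: it assumes an 8-pixel block of single-channel 8-bit values, a delta mode that stores the block minimum plus a 3-bit offset per pixel, and a constant-color mode that stores a single sample. The block size, bit widths, and base-plus-offset encoding are all assumptions picked so the arithmetic lands on the quoted ratios.

```python
BLOCK_PIXELS = 8                      # hypothetical block size
PIXEL_BITS = 8                        # one 8-bit channel per pixel keeps the numbers small
RAW_BITS = BLOCK_PIXELS * PIXEL_BITS  # 64 bits uncompressed


def delta_fits(block, offset_bits):
    """True if every pixel can be stored as (block minimum + an offset_bits-wide offset)."""
    return max(block) - min(block) < (1 << offset_bits)


def compression_ratio(block):
    """Return 8 for constant color, 2 for delta, 1 for uncompressed (toy model)."""
    if all(p == block[0] for p in block):
        # Constant (whole) color: one 8-bit sample stands in for all 64 bits.
        return RAW_BITS // PIXEL_BITS
    # Delta: an 8-bit base plus a 3-bit offset per pixel = 8 + 8 * 3 = 32 bits,
    # exactly half the raw size, so this mode tops out at 2:1.
    if delta_fits(block, 3):
        return 2
    return 1


print(compression_ratio([200] * 8))                               # 8
print(compression_ratio([100, 101, 99, 102, 100, 98, 103, 100]))  # 2
print(compression_ratio([0, 255, 0, 0, 0, 0, 0, 0]))              # 1
```

In this model the delta mode always pays for a base plus one offset per pixel, while a constant block collapses to a single value, which is why the two modes hit different ceilings.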

...

The impact of 3rd generation delta color compression is enough to reduce NVIDIA’s bandwidth requirements by 25% over Kepler, and again this comes just from having more delta patterns to choose from. In fact color compression is so important that NVIDIA will actually spend multiple cycles trying different compression ratios, simply because the memory bandwidth is more important than the computational time.

Meanwhile, new to delta color compression with Pascal are 4:1 and 8:1 compression modes, joining the aforementioned 2:1 mode. Unlike 2:1 mode, the higher compression modes are a little less straightforward, as there’s a bit more involved than simply the pattern of the pixels. 4:1 compression is in essence a special case of 2:1 compression, where NVIDIA can achieve better compression when the deltas between pixels are very small, allowing those differences to be described in fewer bits. 8:1 is more radical still; rather than operating on individual pixels, it operates on multiple 2x2 blocks. Specifically, after NVIDIA’s constant color compressor does its job – finding 2x2 blocks of identical pixels and compressing them to a single sample – the 8:1 delta mode then applies 2:1 delta compression to the already compressed blocks, achieving the titular 8:1 effective compression ratio.

In addition to a larger L2 cache that allows more data to remain on the chip, Polaris has improved delta color compression (or DCC) capabilities that allow it to compress color data at 2:1, 4:1, or 8:1 ratios.

There seem to be 2 kinds of P6000 results (Filter): 2 are like the one you have there, and 2 are lower, quite close to Vega. The same goes for the P5000.

The lower P6000 results are from CPUs clocked @2.2GHz; are you really going to take those and compare them to a Vega with a CPU running @4GHz?

3dilettante said: The threshold where the multiple attempts exceed the savings due to recalculating too many deltas may also be crossed more readily.

I don't dispute that such conditions are possible. I do have a problem with asserting that they are "likely", which is the basis of the entire argument. I mean, the guy apparently can't even see the irony of contesting an unfounded claim (which I did not make, btw) with another, even more unfounded claim. He is essentially arguing with himself, so I have no idea why I need to be involved in the conversation at this point at all...

Why the strawman? I do not know why you have turned hostile toward me, but my perspective remains the same as it ever has.

Obviously. It's not a strawman. I explained why your claim regarding the color compression can't be made by looking just at the overall bandwidth efficiency. You somewhat agree, and at the same time you called that explanation bullshit (I was not hostile, btw).

Again, I never said otherwise, so why the strawman? I don't get it.

As for what I am interested in

You stated quite clearly that you are interested in the overall bandwidth efficiency; I got that. But you took offense at my remark that one can't readily judge the quality of the color compression from the overall bandwidth efficiency (as you did!), especially if there are pretty significant architectural differences between the compared GPUs. You could have just accepted that, or you could have engaged in a meaningful discussion to explore the nitty-gritty details or to refute my remark. You did neither.

Gipsel said: I explained why your claim regarding the color compression can't be made by looking just at the overall bandwidth efficiency.

No, you misinterpreted my claim (strawman), and continue to do so, but I digress...

Atomics exist that could bypass those lines. If paging registers, it makes sense. Framebuffer less so, as there won't be gaps. So yeah, it won't work everywhere, but it's significant. If they did some variable SIMD design, those cache sizes could change as well.

GCN's L2 atomics have hardware that is tied to cache lines, and the DRAM devices themselves work at a burst granularity.
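Burst and cache-line granularity matter because compressed data still moves in whole transfers. A minimal sketch, assuming a 32-byte transfer granule (a GDDR5 device with a 32-bit interface and burst length 8 moves 32 bytes per burst), a 64-byte granule standing in for a cache line, and a hypothetical 256-byte tile: the compressed size is rounded up to whole granules before computing the ratio that memory actually sees.

```python
def bytes_transferred(compressed_bytes, granule_bytes=32):
    """Round a compressed payload up to whole transfers (DRAM bursts or cache lines)."""
    # Ceiling division: -(-a // b) rounds up for positive integers.
    return -(-compressed_bytes // granule_bytes) * granule_bytes


def effective_ratio(raw_bytes, compressed_bytes, granule_bytes=32):
    """Compression ratio as seen by memory after rounding to whole granules."""
    return raw_bytes / bytes_transferred(compressed_bytes, granule_bytes)


TILE_BYTES = 256  # hypothetical uncompressed tile size

print(effective_ratio(TILE_BYTES, 128))     # 2.0: a clean 2:1 fills exactly 4 bursts
print(effective_ratio(TILE_BYTES, 100))     # 2.0: 100 bytes still costs 4 bursts (128 bytes)
print(effective_ratio(TILE_BYTES, 20))      # 8.0: fits in a single 32-byte burst
print(effective_ratio(TILE_BYTES, 20, 64))  # 4.0: a 64-byte granule caps the same payload at 4:1
```

In this model, any compression gain that does not free up a whole granule is lost, and a coarser granule eats into the higher compression modes first.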

So by Pascal being replaced "soon" you mean "6-8 months from now"?

For Vega, it's the upper range of Pascal, which is starting to be phased out at the highest end right now. Vega isn't fully out yet, and the announcement/hype process for the next chips can start earlier than the full deployment.

He posted pictures from The Witcher 3 running at maximum settings ("HairWorks off") @1080p; the game was running at 100fps or so, which is right in the ballpark of a GTX 1080.

Here's the direct link:

http://www.guru3d.com/articles-pages/nvidia-geforce-gtx-1080-review,23.html


These are P6000/P5000 results on a 3.6GHz CPU:

https://www.spec.org/gwpg/gpc.data/...ults_20170210T1401_r1_HIGHEST/resultHTML.html

https://www.spec.org/gwpg/gpc.data/...ults_20170207T1002_r1_HIGHEST/resultHTML.html

Lots of people are complaining that the guy is testing Vega FE on a PC with a 550W PSU and therefore everything he does is invalid.

Why? He is even forcing the clocks to stay @1600MHz all the time, so the card is already operating at full power.

No, you misinterpreted my claim (strawman), and continue to do so

If you don't recall it anymore, that was what I answered to:

AMD does have color compression

Yes, it just is not as good as Nvidia's.

You did make that claim. I just argued that and why it is hard to judge just from overall bandwidth efficiency. Make of that whatever you want. I made my point.

I don't dispute that such conditions are possible. I do have a problem with asserting that they are "likely", which is the basis of the entire argument.

The assumed pattern for ROP behavior is that tiles of pixels are imported in a pipelined fashion to the ROP caches, modified, and then moved back out in favor of the lines that belong to the next export.
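The streaming pattern described here, where tiles come into the ROP cache, get modified, and are evicted to make room for the next export, can be approximated with a small LRU cache. A rough sketch, assuming every export is a read-modify-write (for example blending), so a miss costs a full tile read and every cached tile is dirty; the LRU policy, tile size, and cache capacity are hypothetical parameters, not GCN's actual ROP cache design.

```python
from collections import OrderedDict


def rop_traffic(exports, cache_tiles, tile_bytes):
    """Toy ROP cache: return (bytes read, bytes written) for a sequence of tile exports.

    Assumes every export is a read-modify-write, so a miss reads the whole tile
    and every tile in the cache is dirty; eviction is least-recently-used.
    """
    cache = OrderedDict()  # tile id -> None, ordered from least to most recently used
    read = written = 0
    for tile in exports:
        if tile in cache:
            cache.move_to_end(tile)          # hit: the tile stays on chip
        else:
            if len(cache) == cache_tiles:
                cache.popitem(last=False)    # evict the least recently used tile...
                written += tile_bytes        # ...and write it back to DRAM
            read += tile_bytes               # miss: fetch the tile from DRAM
            cache[tile] = None
    written += len(cache) * tile_bytes       # final flush of the remaining dirty tiles
    return read, written


exports = [0, 1, 2, 3, 0, 1, 2, 3]
print(rop_traffic(exports, cache_tiles=4, tile_bytes=256))  # (1024, 1024)
print(rop_traffic(exports, cache_tiles=2, tile_bytes=256))  # (2048, 2048)
```

When the working set fits (4 tiles), each tile is read and written once; with room for only 2, every one of the 8 exports costs a full round trip. That per-tile read and write traffic is the part that color compression can shrink.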

Why? He is even forcing the clocks to stay @1600MHz all the time, so the card is already operating at full power.

I don't think his results are invalid. I just thought it was worth mentioning, since many people (mostly AMD fans) refuse to accept the guy's results.



When did NV really get their big perf per watt advantage? Was it when NV released their first product with colour compression (Fermi, as far as I'm aware), or was it when they released their first product with the ROP caches being L2 backed (Maxwell)?

Unless colour compression just sucked in Fermi/Kepler, then magically became super awesome in Maxwell because reasons.

I think Kepler did okay, which compression may have helped with. Fermi had some other things going on, which just goes to show that the analysis is complicated.

Performance per Watt has even more confounding factors than bandwidth efficiency.

Gipsel said: I just argued that and why it is hard to judge just from overall bandwidth efficiency.

Did you ever stop to think that maybe... just maybe I had already taken into account everything you have written here before I made my original post? I'll guess no.

Gipsel said: I made my point.

Congrats on winning an argument with yourself, I guess?

itsmydamnation said: Unless colour compression just sucked in Fermi/Kepler, then magically became super awesome in Maxwell because reasons.

I don't recall giving a value for the amount color compression contributes to the overall bandwidth savings. I simply said Nvidia's was better than AMD's and gave a greatest lower bound of 25% for the contribution of the total savings (from all sources) to perf/watt. Thus the range for what I said is 0 < X < 25, where X is the color compression contribution. Of fucking course, I don't think that color compression is responsible for all of that... but when has interpreting other people's words accurately ever been an issue here before...
