> Except that in neither the DX12 nor the Vulkan case is a shader responsible for submitting draw calls. Shaders can prepare data for the draw calls, and that data doesn't have to be shipped back to the CPU side, but the actual draw is still dispatched by the CPU.

ExecuteIndirect and MultiDraw (OpenGL) support an indirect draw count, meaning that the CPU doesn't even know how many draw calls are being made. The draw arguments and the draw count are written by the GPU to a buffer that only the GPU accesses. The CPU does send the GPU a command to start ExecuteIndirect/MultiDraw, but it doesn't know the draw count or the draw parameters.
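To make the data flow concrete, here is a CPU-side simulation in C++. It is a minimal sketch, not real GPU code. `DrawArgs` uses the same field order as `D3D12_DRAW_ARGUMENTS` and `VkDrawIndirectCommand`; `Object`, `IndirectBuffers`, `cullingPass` and `executeIndirectCount` are hypothetical names. A culling pass appends the visible draws through an atomic counter, and the consumer clamps the GPU-written count to the CPU-recorded maximum, mirroring the `MaxCommandCount` parameter of `ExecuteIndirect` and `maxDrawCount` of `vkCmdDrawIndirectCount`.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstdint>
#include <vector>

// Same field order as D3D12_DRAW_ARGUMENTS / VkDrawIndirectCommand.
struct DrawArgs {
    uint32_t vertexCountPerInstance;
    uint32_t instanceCount;
    uint32_t startVertexLocation;
    uint32_t startInstanceLocation;
};

// Hypothetical per-object data read by the simulated culling pass.
struct Object {
    uint32_t vertexCount;
    uint32_t firstVertex;
    bool visible;
};

// Stand-in for GPU-only memory: the argument buffer and the count buffer.
struct IndirectBuffers {
    std::vector<DrawArgs> args;
    std::atomic<uint32_t> count{0};
};

// Simulated culling compute pass. Each loop iteration stands in for one GPU
// thread; visible objects append a draw via an atomic counter (on a real GPU
// the resulting order is not deterministic, here it is sequential).
void cullingPass(const std::vector<Object>& objects, IndirectBuffers& buf) {
    buf.count.store(0);
    buf.args.assign(objects.size(), DrawArgs{});
    for (const Object& o : objects) {
        if (!o.visible) continue;
        uint32_t slot = buf.count.fetch_add(1);
        buf.args[slot] = {o.vertexCount, 1, o.firstVertex, 0};
    }
}

// Simulated consumer. The CPU only recorded maxCount; the number of draws
// actually executed comes from the GPU-written count buffer.
template <typename DrawFn>
uint32_t executeIndirectCount(const IndirectBuffers& buf, uint32_t maxCount, DrawFn draw) {
    uint32_t n = std::min(buf.count.load(), maxCount);
    assert(n <= buf.args.size());
    for (uint32_t i = 0; i < n; ++i) draw(buf.args[i]);
    return n;
}
```

The only value the CPU contributes is the upper bound; how many draws run, and with which arguments, is decided entirely by data the GPU wrote.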

These draws are issued either by the GPU command processor (a CP loop) or by a compute shader writing directly to the GPU command buffer. Intel, for example, implements ExecuteIndirect with an indirect draw count as a compute dispatch that writes to the command buffer. Nvidia's Vulkan device generated commands extension (https://developer.nvidia.com/device-generated-commands-vulkan) does the same, and it can even change render state and shaders. AMD implements ExecuteIndirect in its command processor (no extra dispatch is needed to set up the draws).
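The two implementation strategies can be contrasted in a small C++ simulation. The packet format (`Op`, `Packet`) and all function names are hypothetical; real command processor packets are vendor specific, and this sketch models only draws, not the state or shader changes that Nvidia's extension allows. Both paths produce the same draws; they differ in where the translation work happens.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct DrawArgs { uint32_t vertexCount, instanceCount, firstVertex, firstInstance; };

// Hypothetical command packet format (not any vendor's real encoding).
enum class Op : uint32_t { Draw, End };
struct Packet { Op op; DrawArgs args; };

// Strategy A (CP loop): the command processor firmware reads the count and
// the arguments itself and issues each draw. No extra dispatch is needed.
template <typename DrawFn>
void cpLoop(const std::vector<DrawArgs>& args, uint32_t count, uint32_t maxCount, DrawFn draw) {
    uint32_t n = count < maxCount ? count : maxCount;
    assert(n <= args.size());
    for (uint32_t i = 0; i < n; ++i) draw(args[i]);
}

// Strategy B (setup dispatch): a compute pass translates the arguments into
// ordinary draw packets; each iteration stands in for one GPU thread.
std::vector<Packet> translateToPackets(const std::vector<DrawArgs>& args, uint32_t count, uint32_t maxCount) {
    uint32_t n = count < maxCount ? count : maxCount;
    assert(n <= args.size());
    std::vector<Packet> cmd(n + 1);
    for (uint32_t i = 0; i < n; ++i) cmd[i] = {Op::Draw, args[i]};
    cmd[n] = {Op::End, {}};
    return cmd;
}

// The command processor then executes the generated packets linearly.
template <typename DrawFn>
void executePackets(const std::vector<Packet>& cmd, DrawFn draw) {
    for (const Packet& p : cmd) {
        if (p.op == Op::End) break;
        draw(p.args);
    }
}
```

Strategy B costs an extra dispatch but lets simple command processor firmware execute a plain linear command stream; strategy A keeps everything inside the CP.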

CUDA (dynamic parallelism) and OpenCL 2.0 (device-side enqueue) can spawn shaders directly from compute kernels. You can write a lambda inside the shader and spawn N instances of it. The shader can then wait for completion and continue its work from there, without any CPU intervention.
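The fork-join shape of this can be illustrated with a CPU analogy in C++. This is not CUDA or OpenCL code: `std::async` stands in for the device-side launch, and `spawnAndWait` and `parentKernel` are hypothetical names. The parent spawns N instances of a lambda, waits for all of them, and then continues with their results without a round trip to a "host".

```cpp
#include <cassert>
#include <cstddef>
#include <future>
#include <vector>

// Analogy of a device-side launch: start n instances of `child`, each
// receiving its instance index, then block until every instance finished.
template <typename Child>
void spawnAndWait(std::size_t n, Child child) {
    std::vector<std::future<void>> children;
    children.reserve(n);
    for (std::size_t i = 0; i < n; ++i)
        children.push_back(std::async(std::launch::async, child, i));
    for (auto& c : children) c.get();  // wait for completion
}

// Analogy of a parent kernel: phase 1 spawns children that each write one
// element (disjoint indices, so no data race); phase 2 continues from their
// results in place.
std::vector<int> parentKernel(std::size_t n) {
    std::vector<int> data(n);
    spawnAndWait(n, [&data](std::size_t i) { data[i] = static_cast<int>(i * i); });
    for (std::size_t i = 1; i < n; ++i) data[i] += data[i - 1];  // prefix sum
    return data;
}
```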

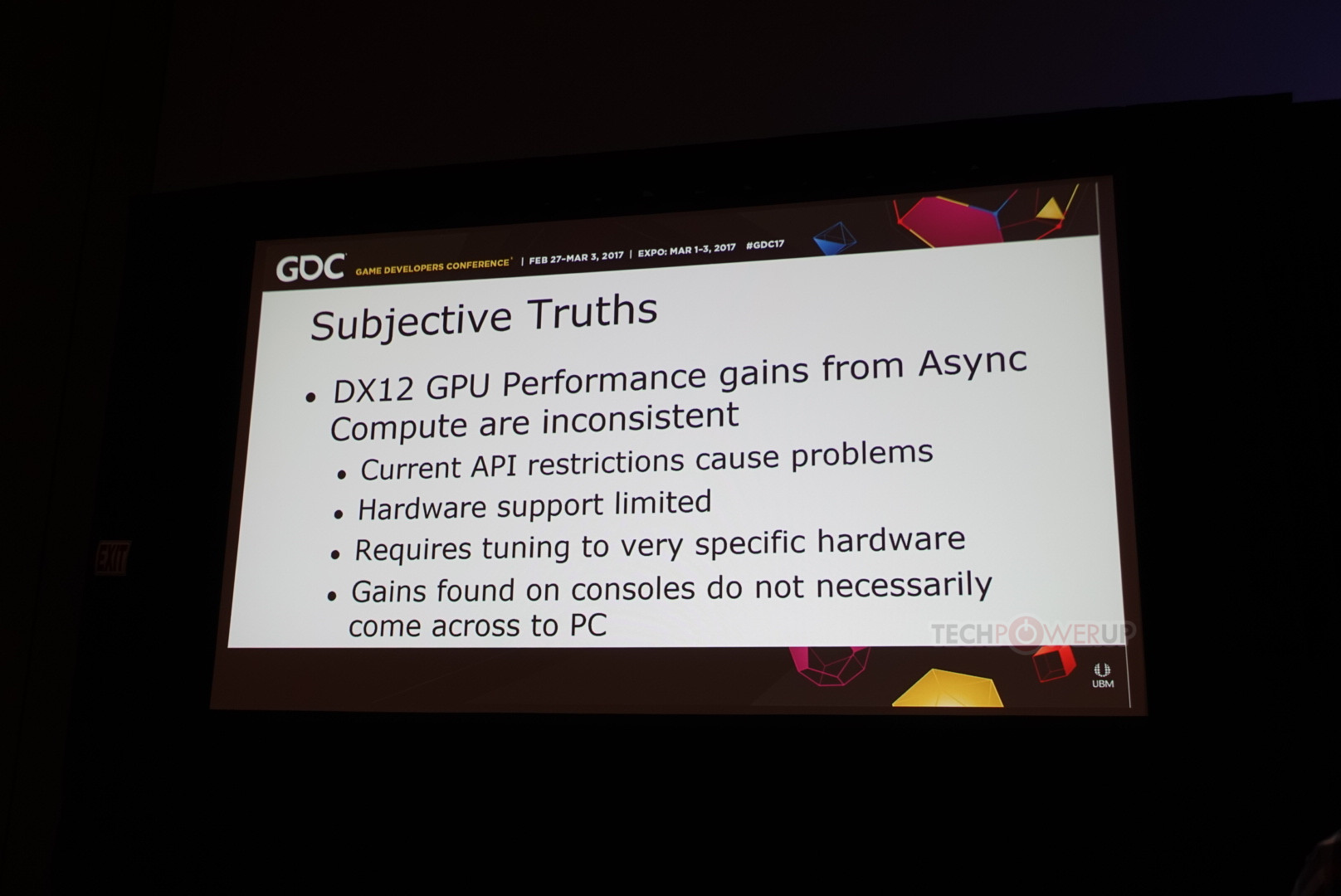

But the driver is still a big deal. The driver spawns the extra setup dispatches and decides how and when to run them (possibly concurrently with other tasks) to ensure that the data is ready in time without the data generation blocking other tasks. DX12 barriers are still a fairly high-level construct. GPU caches tend not to be fully coherent, so a barrier might require flushing some caches. Render targets might be stored in a compressed format that needs to be decompressed before they can be sampled. Temporary resource allocations and other preparation might be needed before tessellation, geometry shader and tiled rasterizer work can begin. All of these extra tasks can be executed in parallel with other work, and it is the driver's responsibility to make this happen in the fastest safe way possible. The driver can also combine cache flushes of the same type, and increase parallelism in cases where it knows that concurrent execution of some shaders is safe.

Async compute opens up a whole new can of worms. Console developers are used to hard-coding CU masks, wave limits, etc. to fine-tune the performance of concurrent work (so that neither queue starves). PC drivers need to do this without application-level knowledge, and this isn't an easy problem to solve.

And most importantly, the driver isn't just code that runs on the CPU. The driver also sends the microcode for all the GPU's fixed-function processors. Thus the driver is fully responsible for how the GPU command processor executes the commands, and for how indirect multidraw and spawning new shaders from kernels are implemented on the GPU side.
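Combining cache flushes of the same type can be sketched as follows. The flush bits, states and function names are hypothetical (real hardware operations are vendor specific). The idea is that a batch of transitions is reduced to one set of cache flushes issued once, transitions to the same state are skipped, and per-resource work such as decompression is still tracked individually.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical cache/flush operations a barrier might need.
enum FlushBits : uint32_t {
    kNone            = 0,
    kFlushColorCache = 1u << 0,
    kFlushDepthCache = 1u << 1,
    kInvalidateTexL1 = 1u << 2,
    kDecompressColor = 1u << 3,
};

// Hypothetical resource usage states.
enum class State { RenderTarget, DepthWrite, ShaderRead, UnorderedAccess };

struct Transition { uint32_t resource; State before; State after; bool compressed; };

// Very simplified mapping from one transition to the work it requires.
uint32_t workFor(const Transition& t) {
    if (t.before == t.after) return kNone;  // redundant transition, skip
    uint32_t bits = kNone;
    if (t.before == State::RenderTarget) bits |= kFlushColorCache;
    if (t.before == State::DepthWrite) bits |= kFlushDepthCache;
    if (t.after == State::ShaderRead) bits |= kInvalidateTexL1;
    if (t.before == State::RenderTarget && t.after == State::ShaderRead && t.compressed)
        bits |= kDecompressColor;
    return bits;
}

struct Batch { uint32_t flushBits = kNone; std::vector<uint32_t> decompress; };

// Merge a whole barrier batch: identical flushes are ORed together so each
// is issued once, while decompression stays a per-resource operation.
Batch combine(const std::vector<Transition>& ts) {
    Batch b;
    for (const Transition& t : ts) {
        uint32_t bits = workFor(t);
        if (bits & kDecompressColor) b.decompress.push_back(t.resource);
        b.flushBits |= bits & ~kDecompressColor;
    }
    return b;
}
```

Three transitions that each need a texture cache invalidation end up with a single invalidation in the merged batch, which is the kind of saving a driver can only make when it sees the whole barrier batch at once.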

During the last year I rewrote the resource transitions of two Unreal Engine console backends. There are so many small things you can do differently to reduce stalls between draws and dispatches and to allow the best possible overlap. But there are also so many corner cases that you need to take into account to prevent the data race that only occurs in some obscenely rare case. You would need to know what the shader does in order to write the best possible transition code. Priority #1 for any driver is to guarantee correctness. Unfortunately, this means that the default code path will likely be slightly slower than game-specific code paths.