They clearly saw non-ML upscaling as the best fit for the hardware they had at the time. And if RDNA4 offers improved ML performance then ML upscaling will be the best fit there. That doesn't mean it will retroactively become the best fit on earliear hardware on which ML upscaling is more expensive. Performance isn't optional for upscaling to make sense.Instead of doing a non ML upscaling FSR 2 they should have gone just the right way and should have communicated that ML upscaling is the future and may be not as fast as on other hardware.

Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

AMD RDNA4 potential product value

- Thread starter Potato Head

- Start date

Subtlesnake

Regular

So it's using the FSR 3.1 API, allowing RDNA 2/3 cards to fall back to FSR 3.1.

Sega_Model_4

Newcomer

Who told you this?

WMMA is a shader instruction. It most certainly does not contain any kind of matrix math core.

WMMA means Wave Matrix Multiply Accumulate.

Tensor Cores are programmable matrix multiply and accumulate units.

It's the same thing.

Sega_Model_4

Newcomer

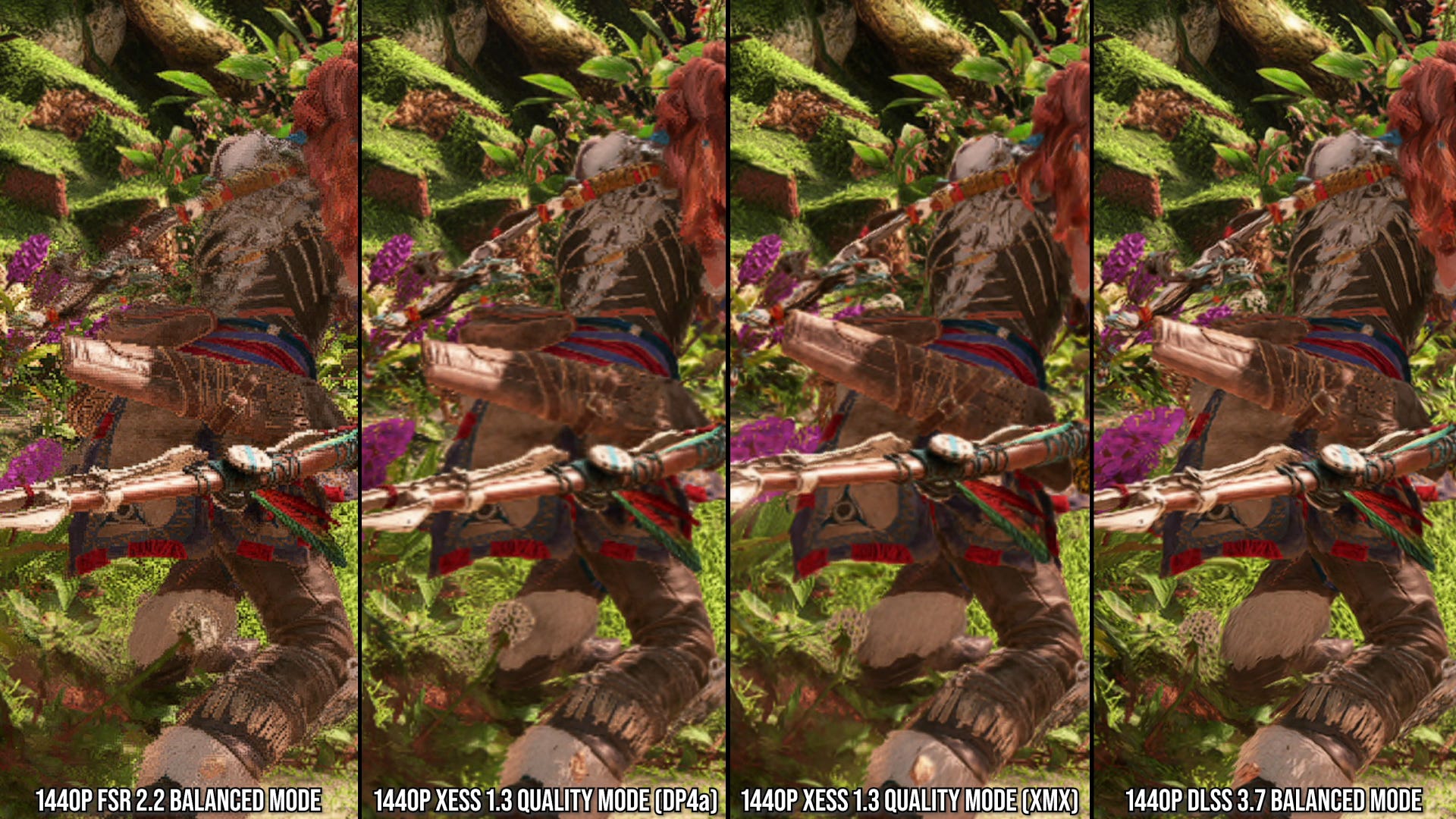

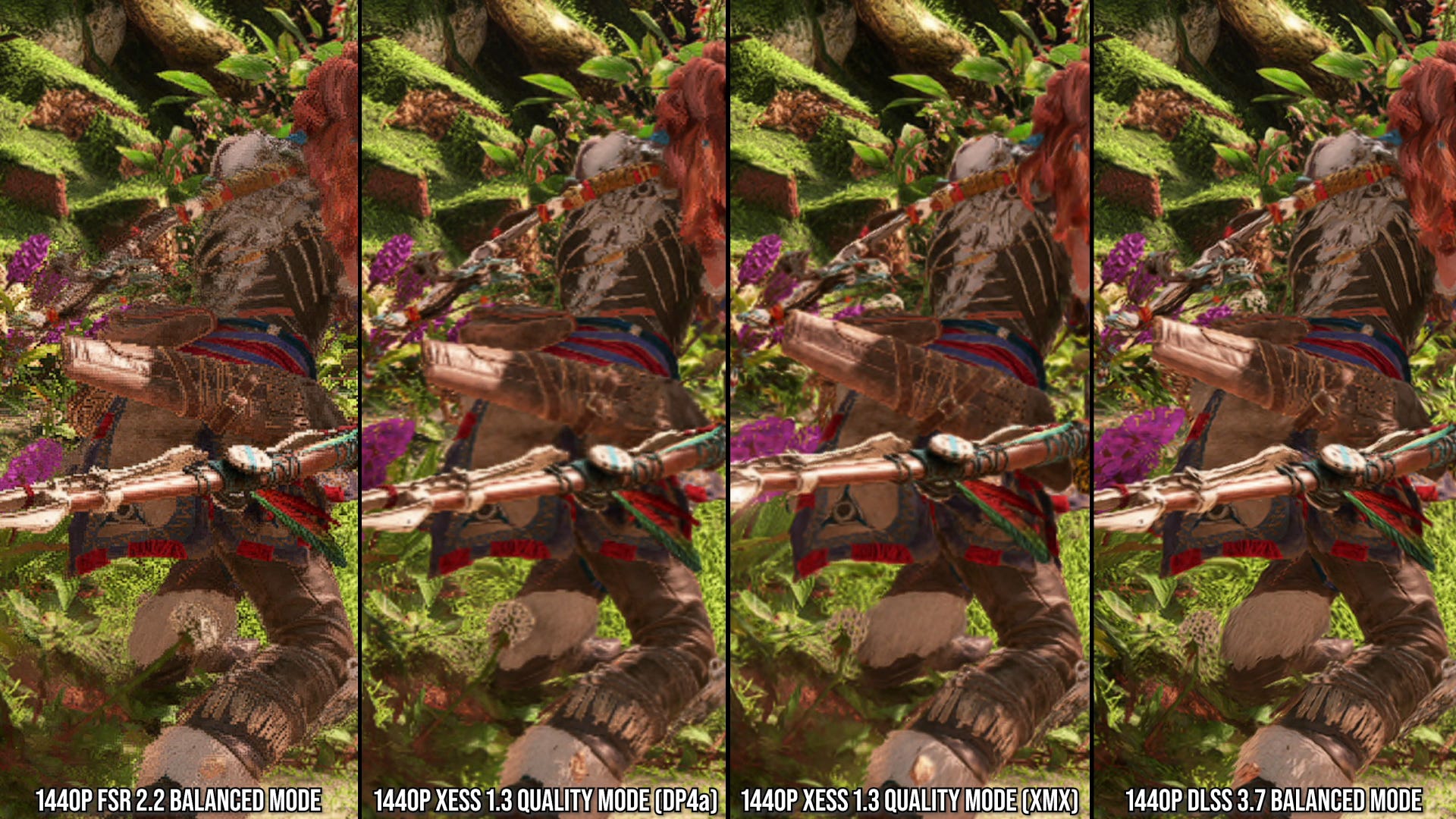

There is a possibility that FSR4 is something like Intel's XESS, which has two versions, a generalist version (DP4a) that works on any hardware and a higher quality version (XMX), which is exclusive to Intel GPUs.

..

I dont see how "non-ML upscaling" would be a better fit for a 7900XTX with a 300mm^2 5nm die when DLSS runs just fine on a cut down 300mm^2 16nm chip with 10.8 billion transistors (or 1/5 of RDNA3).They clearly saw non-ML upscaling as the best fit for the hardware they had at the time. And if RDNA4 offers improved ML performance then ML upscaling will be the best fit there. That doesn't mean it will retroactively become the best fit on earliear hardware on which ML upscaling is more expensive. Performance isn't optional for upscaling to make sense.

..

I dont see how "non-ML upscaling" would be a better fit for a 7900XTX with a 300mm^2 5nm die when DLSS runs just fine on a cut down 300mm^2 16nm chip with 10.8 billion transistors (or 1/5 of RDNA3).

Should AMD have gone back in time and redesigned RDNA 3 after they saw DLSS? Or are you just saying AMD missed the boat on ML upscaling and are playing catch up? We already know that.

Yes, they should have delayed RDNA3 for a year to make it better. Now they are selling even "new" APUs with RDNA3 without having access to FSR4.

But this dicussion was not about hardware. It was about their marketing stunt with FSR 2 to deflect from their outdated RDNA <=3 hardware. FSR 4 is a 180° turn.

But this dicussion was not about hardware. It was about their marketing stunt with FSR 2 to deflect from their outdated RDNA <=3 hardware. FSR 4 is a 180° turn.

Last edited:

The operation they perform is the same, but the fact that Tensor Cores are extra units in addition to the basic shader ALUs, with much higher throughput, is very significant. That isn't the case for WMMA, which just maximises utilisation of the shader ALUs.WMMA means Wave Matrix Multiply Accumulate.

Tensor Cores are programmable matrix multiply and accumulate units.

It's the same thing.

The ability to use inference to reconstruct frames has very little to do with die size and everything to do with what hardware is included on said die. RDNA3 doesn’t really have a tensor core equivalent.I dont see how "non-ML upscaling" would be a better fit for a 7900XTX with a 300mm^2 5nm die when DLSS runs just fine on a cut down 300mm^2 16nm chip with 10.8 billion transistors (or 1/5 of RDNA3).

Also, FSR2 predates RDNA3 and was really made for consoles I’d imagine.

what exactly would you have them do?But this dicussion was not about hardware. It was about their marketing stunt with FSR 2 to deflect from their outdated RDNA <=3 hardware. FSR 4 is a 180° turn.

Suffer their crimes for all of eternity, apparently. lolwhat exactly would you have them do?

Few people will argue that FSR4 isn't coming dang late, but in no way should something they said in marketing years ago hold them back from making progress eventually anyways. It's not like people bought RDNA3 specifically because they really wanted to invest in open source reconstruction like FSR super badly. It was a known compromise people were making, or just ignored entirely from the crowd of purists who believed(or still believe) it's sacrilegious to use anything but native resolution.

At the same time, it's also not wrong to want a good open solution to exist. And I'm still hoping that perhaps FSR4 wont be super closed. Like, I get it, if previous RDNA architectures straight up cant run it, or run it performantly, fine, but should they close it off to Nvidia and Intel users if those GPUs are capable of running it ok? I kinda feel this would be a mistake, just in terms of getting developer adoption. Perhaps if we were closer to a new generation of consoles that could utilize it, they could get away with keeping it fully proprietary, but otherwise, I'm not sure they're in a position to be pushing yet another reconstruction method specifically for the small subset of people who not only have an AMD GPU, but the even smaller subset with the specific type of AMD GPU required.

I know these techniques aren't super hard to implement anymore, but doing a decent implementation can still require a fair bit of testing and some tweaking of certain game aspects in order to make sure it works optimally in their title. And I'm not sure AMD cannot afford for devs to be pushing out careless, flawed FSR4 implementations because it was an afterthought.

I agree, but open source doesn't just mean 'runs on everything'. I think FSR4 being open sourced but has higher requirements to achieve a better resolve would be excellent and tbh I would probably choose to run it over DLSS simply to align with my own principles (in fact I'm almost ready to accept the lower quality of FSR2 just for the fact that I believe open computing is superior to proprietary computing, at least when it comes to software).At the same time, it's also not wrong to want a good open solution to exist.

Even if FSR4 is totally open I don't see either Intel or Nvidia implementing support and that's basically what it would take.

Direct ML isn't suitable otherwise that may have been a way to make it cross vendor.

All Intel and AMD can really push is for direct sr if their not getting direct support.

Don't think seen any game release with it yet though, which all things considered isn't a huge vote of confidence in the API. Not saying it's dead in the water or anything like that.

Direct ML isn't suitable otherwise that may have been a way to make it cross vendor.

All Intel and AMD can really push is for direct sr if their not getting direct support.

Don't think seen any game release with it yet though, which all things considered isn't a huge vote of confidence in the API. Not saying it's dead in the water or anything like that.

DegustatoR

Legend

It's not released yet.Don't think seen any game release with it yet though

Well that would explain it.It's not released yet.

It's taking its time to get released.

Thought it had released in 'preview' and was meant to fully release soon after.

Is there something inherent to ML-based upscaling that means any model it uses can only ever be used on a specific vendor's GPU architecture? Obviously we've really only got two examples to go by, DLSS and XeSS XMX(I guess technically three with PS5 Pro PSSR) which both do require vendor-specific hardware, but is this gonna be a hard rule going forward?Even if FSR4 is totally open I don't see either Intel or Nvidia implementing support and that's basically what it would take.

Is the idea of an 'open' ML upscaler usable by other vendors without explicit driver support and optimization impossible?

DegustatoR

Legend

It is in preview but nobody ships games with preview SDKs and there were no timeframe given for a possible release. IIRC they've said something about couple of years.Thought it had released in 'preview' and was meant to fully release soon after.

It's possible that the release will happen alongside RDNA4/Blackwell of course.

No real generic coding standard that suitable for it that would work on tensor and xmx etc. Based on what Tom Peterson said regarding xess fg ml running on other arch.Is the idea of an 'open' ML upscaler usable by other vendors without explicit driver support and optimization impossible?

If FSR4 was light enough to run via dp4a I fully expect they wouldn't have limit it to RDNA4.

Couple years, yikes.IIRC they've said something about couple of years.

It's possible that the release will happen alongside RDNA4/Blackwell of course.

Little reason to align with hardware launch. If that happened I'd hope it just happened to line up.

Last edited:

arandomguy

Veteran

Doesn't XeSS fallbacks (via DP4a and Compute) as a ML upscaler already work on other IHV hardware without explicit driver support and optimizations on the part of those vendors?

The thing is I'm not exactly clear why this should be an issue. What we should have is just to standardized a set of inputs and outputs, then let each IHV handle the inbetween how they choose. This is how currently graphics are done in general. If some IHVs develop a competitive advantage then so be it?

I know what people might really be afraid of is this changes the paradigm that the output is not identical among IHVs (putting us back to the days of analog outputs) and ruins the easy numbers based "apples to apples" comparisons. Again that's okay. If IHV 1 has better output but lower numbers that's okay, maybe people might need to put more thought into what's the better product than just "that product shoots out better numbers."

I also have this general issue in that I feel "open source" is more and more just a marketing term by companies and for some the public some sort of ideal concept rather than something practical. For example let's be more specific here in terms of an open source ML upscaler, what do people actually mean? Are you talking about the training, inference, and/or implementation to be all open sourced? Under what license? Who's going to handle all this, particurly improving the training?

The thing is I'm not exactly clear why this should be an issue. What we should have is just to standardized a set of inputs and outputs, then let each IHV handle the inbetween how they choose. This is how currently graphics are done in general. If some IHVs develop a competitive advantage then so be it?

I know what people might really be afraid of is this changes the paradigm that the output is not identical among IHVs (putting us back to the days of analog outputs) and ruins the easy numbers based "apples to apples" comparisons. Again that's okay. If IHV 1 has better output but lower numbers that's okay, maybe people might need to put more thought into what's the better product than just "that product shoots out better numbers."

I also have this general issue in that I feel "open source" is more and more just a marketing term by companies and for some the public some sort of ideal concept rather than something practical. For example let's be more specific here in terms of an open source ML upscaler, what do people actually mean? Are you talking about the training, inference, and/or implementation to be all open sourced? Under what license? Who's going to handle all this, particurly improving the training?

DegustatoR

Legend

Well, with FSR4 being RDNA4 exclusive and everything else continuing to rely on FSR3 (presumably) there's a reason - DirectSR would provide such routing for AMD "automatically" without any need for them to update the FSR SDKs with such functionality (would still be needed for non-Windows PCs though probably).Little reason to align with hardware launch.

DegustatoR

Legend

XeSS SR does but that version is significantly lower quality than the one which runs on XMX h/w. AMD doesn't need to copy that because they have FSR2/3 for that purpose already.Doesn't XeSS fallbacks (via DP4a and Compute) as a ML upscaler already work on other IHV hardware without explicit driver support and optimizations on the part of those vendors?

Also XeSS FG is locked to Intel WMMA h/w because implementing that via DP4a seemingly wasn't possible.

If FSR4 AI SR will aim at being close to DLSS SR then implementing a worse version a la XeSS seems pointless. They'd need all the power they can spend to reach DLSS IQ levels.

Similar threads

- Replies

- 166

- Views

- 11K

- Replies

- 85

- Views

- 16K

- Replies

- 3

- Views

- 737

- Replies

- 8

- Views

- 1K

- Replies

- 157

- Views

- 10K