Value of Hardware Unboxed benchmarking

- Thread starter Phantom88


I have only seen now that Hardware Unboxed used V-Sync while measuring the latency hit with MFG:

Gotta fit those numbers to their narrative, right?

Quality content here...

Is it wrong to use V-Sync with FG on a G-Sync display? Reflex smartly limits the frame rate just under the monitor's refresh rate so that V-Sync doesn't add latency. Or is this incorrect, and latency is added? If so, how much?

Or maybe the correct way is to go beyond the monitor's refresh rate?

With V-Sync enabled in the app, Reflex limits the FPS slightly under the V-Sync limit of the VRR display. Running into V-Sync means buffering not only the next new frame but the generated one, too, so latency increases massively compared with Reflex + V-Sync. Digital Foundry did a video about it two years ago.

Does anything indicate that Tim is hitting the V-Sync limit and therefore drastically increasing latency?

I get latency numbers in Cyberpunk very similar to what Tim shows in his video when running 58 -> 116 FG on my 120 Hz display with a 4090 (57.7 ms). The latency is about the same with V-Sync enabled or with V-Sync off and Cyberpunk's internal fps limiter keeping things just below 120. Therefore, I don't think Tim is hitting V-Sync.

But maybe you have better information on this? What kind of latency number should we be seeing if Tim was testing properly?

Yeah, I have tested it myself. Maybe it's not V-Sync.

But what he has done is just faking numbers. Limiting native to 30 FPS and comparing that to native 120 FPS is nonsense and absolutely dishonest. It doesn't even show the whole picture, because the latency hit from MFG with an underutilized GPU is <= 1/5 of the frame (around 6 ms on top of a baseline of 78+ ms). So MFG is basically free...

I can only repeat what I have said: this is a clickbait and hate channel.
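
The frame-time arithmetic behind that "<= 1/5 of the frame" figure can be sketched as follows, assuming the output is locked to a 120 Hz display and taking the 1/5 bound at face value (it is the poster's claim, not a measured constant). At 4x the base is 30 fps, a base frame lasts about 33.3 ms, and one fifth of that is about 6.7 ms, which is plausibly where "around 6ms" comes from. The helper names are illustrative only.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds between frames at a given frame rate."""
    return 1000.0 / fps


def base_fps(output_fps: float, mfg_factor: int) -> float:
    """Rendered (base) frame rate when output_fps is reached with an MFG factor."""
    return output_fps / mfg_factor


OUTPUT_FPS = 120.0  # assumption: output locked to a 120 Hz display
for factor in (2, 3, 4):
    base = base_fps(OUTPUT_FPS, factor)
    ft = frame_time_ms(base)
    # The poster's claimed upper bound on the MFG latency hit: one fifth of a base frame
    bound = ft / 5
    print(f"{factor}x: base {base:.0f} fps, frame {ft:.1f} ms, MFG hit <= {bound:.1f} ms")
```

The same division also shows where the 60 -> 120, 40 -> 120 and 30 -> 120 configurations discussed in this thread come from.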

Just for the record, to dispel this lie about HUB's Tim using V-Sync to fudge numbers: Digital Foundry shows similar 50+ ms latency in Alan Wake 2 at circa 60 -> 120. There's no reason to assume HUB's 30 -> 120 and 40 -> 120 latency numbers are fake either.

Faking numbers? Hate channel? Righto... I think the hate is coming from somewhere else, my friend.

Back to ignore list with you again. Keep well!

He is using a base of 30 FPS and comparing it to native 120 FPS. This is basically faking, like the ray tracing video in which he faked the setup so he could hate on ray tracing.

Nobody has ever advertised limiting the base FPS to 1/X of the target (X being the MFG factor) and playing like that. Nvidia compared the 5070 with MFG 4x to the 4090 with FG, using DLSS Performance at 4K.

DegustatoR

Legend

The method used by HUB for their video is highly dubious when it comes to latency measurements.

They say (as I understand it, at least) that Nvidia recommended this method to showcase native/FG/MFG differences on YouTube.

But I doubt that Nvidia has ever recommended measuring latencies with the same limitations active.

GhostofWar

Regular

The bottom graph shows him hitting a V-Synced 120 Hz, apparently to compare latency at a comparable 120 fps. If it were working properly with Reflex active, I would expect the frame gen fps cap to be something like 117 fps? The top graph is how DF tested latency and lines up; the bottom graph is useless at best, and the only way to make that data useful is to tell the audience this is how not to use it.

Considering Tim should have access to higher refresh rate monitors, using one of them might have represented a better end-user case for the x3 and x4 modes.
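
For reference, one community-derived approximation of the cap Reflex applies with V-Sync and G-Sync enabled is refresh - refresh²/3600. Treat it as an assumption, not an NVIDIA-documented rule: it gives about 116 fps at 120 Hz (117 is reported in practice later in this thread) and about 138 fps at 144 Hz. The sketch below also divides that cap by the MFG factor to show how much base frame rate is left for x3 and x4 at higher refresh rates; `approx_reflex_cap` is a hypothetical helper name.

```python
def approx_reflex_cap(refresh_hz: float) -> float:
    """ASSUMPTION: community-derived approximation of the fps cap Reflex applies
    with V-Sync + G-Sync on. Not an NVIDIA-documented formula."""
    return refresh_hz - refresh_hz ** 2 / 3600.0


for hz in (120, 144, 240):
    cap = approx_reflex_cap(hz)
    # Base (rendered) frame rate left under that cap for 3x and 4x MFG
    print(f"{hz} Hz: cap ~{cap:.0f} fps, 3x base ~{cap / 3:.0f} fps, 4x base ~{cap / 4:.0f} fps")
```

Under this approximation a 240 Hz panel leaves roughly a 56 fps base at 4x, versus roughly 29 fps at 120 Hz, which is the gist of the point about higher refresh rate monitors.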

I hadn't really thought about this. I don't think you can disable Reflex when frame gen is active, so the only thing that makes sense is that he had V-Sync disabled and a manual frame limit of 120. As long as you don't enable V-Sync, Reflex won't automatically cap your frame rate. That would have been an odd choice, but maybe it makes for a better YouTube presentation? Are you able to set frame limits in-game while frame gen is active?

Edit: Maybe the worst explanation is that the 120 average and base frame rates are made up because he didn't want to explain why they were all capped at 114 or something.

GhostofWar

Regular

He's done something weird to create that latency. When I've used frame gen with an external limiter below 138 fps, the latency is horrendous. To do it right, maybe he should have used a 120 Hz panel (or set his display to 120 Hz if it goes higher), looked at what Reflex caps to when working properly, and then manually capped native if needed. But he should be using Reflex on native as well.

I don't have a Blackwell card to do this properly, but I tried Lossless Scaling in x3 mode to give us a comparison (and remember, Nvidia FG should work better than this). I set the panel to 120 Hz on the desktop and fired up Cyberpunk; it had FG on and everything else the way I normally play, and it capped itself to 117 fps with a latency of 51 ms according to the Nvidia overlay. I turned off FG, left Reflex on, quit, loaded Lossless Scaling, set it to x3 mode with V-Sync set to 1/4 so I get 120 Hz, loaded up Cyberpunk and turned on Lossless Scaling. The Nvidia overlay can't count LS frames, so it reported 30 fps, but LS has its own readout (30/120), so it's working: a latency of 64 ms doing exactly what Tim was trying to do.

He has screwed up somewhere if I've got it going with Lossless Scaling at less latency doing what he was trying to do, on a Lovelace card no less.

If he truly is hitting max refresh rate so that V-Sync adds latency, then I don't understand why he is reporting latency numbers very similar to what I see with my 120 Hz display + correct Reflex capping.

In his 2022 FG video, Alex showed ~50 ms latency when V-Synced and limited to below the monitor's refresh rate. He showed over 100 ms when hitting V-Sync.

Based on all this, I will assume that the 120 number just indicates what Tim's display is capped at and that V-Sync isn't being used improperly in his testing. I'm going to need to see stronger evidence pointing to foul play or incompetence.

From Alex's video:

[Image: screenshot from Alex's video]

This is still using traditional V-Sync though, not Reflex! An important distinction, as NV would never recommend playing without it. Capping below the refresh rate with V-Sync on is still going to queue frames, which Reflex will not do. In the end, VRR is the only way to do this latency measurement right, potentially with V-Sync only at the top. So: G-Sync + forced driver V-Sync + DLSS FG on in game, and then somehow recording and measuring latency.

With frame gen, I think the reviewer community is at an impasse: it is extremely hard to communicate "what is acceptable latency" and actually show on screen what it looks like to play a game with frame gen. Acceptable latency is a sliding scale from person to person, and every title has different latency at the same frame rate before frame gen is even active. Doom 2016 has about 2x the latency of a COD game from the same era at 60 fps on console, yet people like that game and don't complain about its latency.

My opinion is that pausing the video on a generated frame is not very representative of the persistent errors frame gen may have: it exaggerates things your eyes cannot see and is more academic than relevant to the use case. The errors one sees are those that persist over a longer span of time, like 16-25 ms, not a one-off at 4 ms.

Edit: Reflex ONLY works if you have VRR enabled on your display and the game supports it.
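
One way to put numbers on the 4 ms vs 16-25 ms comparison, assuming 4x MFG at a 240 fps output (the post doesn't say which setup it has in mind): a single generated frame stays on screen for about 4.2 ms, while 16-25 ms matches the gap between real frames at a 60-40 fps base. The `on_screen_ms` helper is illustrative only.

```python
def on_screen_ms(fps: float) -> float:
    """How long each displayed frame stays on screen at a given frame rate."""
    return 1000.0 / fps


# Assumption: 4x MFG on a 60 fps base gives a 240 fps output.
generated = on_screen_ms(240)
print(f"single generated frame at 240 fps: {generated:.2f} ms")

# Roughly: an error repeated in every generated frame between two real frames
# spans up to about one base-frame interval.
for base in (60, 40):
    print(f"base {base} fps: error persists up to ~{on_screen_ms(base):.1f} ms")
```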

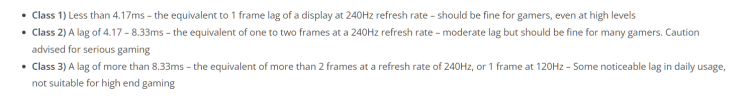

Easiest thing would be to use LDAT as a baseline for testing the various configurations so you get full pipeline details.

Then set up tiers similar to what TFT Central does:

[Image: TFT Central latency tiers]

As fun as LDAT is, the tiers there seem arbitrary and beggar belief. 4-8 ms of added input latency requiring "caution for serious gaming"? I am not even sure fighter pilots could feel 4-8 ms of input latency differences.

Yeah, my take is you take the raw data from LDAT and build your own tiers. Just use it as a collection tool.

The average user wants to know which configuration is optimal for playing a certain game. So as a reviewer, you want to be able to show the responsiveness of various configurations.

Game A using Reflex gives you a total system latency of xxx, which translates to Tier B

Game A using uncapped fps with no V-Sync and no Reflex gives you a total system latency of xxx, which translates to Tier A

There's an opportunity here for a tech publication to take the lead. The tools and methods exist. Turning it into something digestible for end users is the missing step.
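
A minimal sketch of that "collect raw LDAT data, then build your own tiers" workflow, assuming the latency samples (in ms) have already been exported from the capture tool. The tier limits, the helper name `latency_tier` and the sample values are all hypothetical placeholders, not LDAT output, TFT Central's tiers or any publication's results.

```python
from statistics import mean, median

# HYPOTHETICAL tier limits (ms of total system latency). Placeholders only.
TIER_LIMITS_MS = [(30.0, "Tier A"), (50.0, "Tier B"), (80.0, "Tier C")]


def latency_tier(samples_ms: list[float]) -> tuple[float, float, str]:
    """Summarize raw latency samples and map the average to a tier."""
    avg = mean(samples_ms)
    mid = median(samples_ms)
    for limit, tier in TIER_LIMITS_MS:
        if avg <= limit:
            return avg, mid, tier
    return avg, mid, "Tier D"  # anything slower than the last limit


# Made-up sample values, for illustration only (not measurements).
configs = {
    "configuration 1": [44.1, 47.9, 52.3],
    "configuration 2": [61.0, 66.5, 70.2],
}
for name, samples in configs.items():
    avg, mid, tier = latency_tier(samples)
    print(f"{name}: avg {avg:.1f} ms, median {mid:.1f} ms -> {tier}")
```

Reporting the median alongside the mean is one design choice here: a few outlier clicks in a capture run shift the mean but barely move the median.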

The point in bringing up your old video was to show how drastically hitting that V-Sync wall should cripple latency.

I would assume, perhaps incorrectly, that if Tim has VRR and Reflex off and is hitting the V-Sync wall, we should see much higher latency readings for his 60 -> 120 measurements (like ~100 ms instead of 58 ms). Instead, Tim shows readings quite similar to those in the Alan Wake 2 section of your 5090 review, which shows ~50 ms latency when 2x FG is circa 60 -> 120.

But I could be missing something here.

He is using the in-game frame limiter, which limits the "native" frames, and MFG is used to generate 2x, 3x or 4x more frames. He is not reaching the V-Sync limit.

But if he's capturing 4K120, the monitor must be set to 4K120, no? Don't the refresh rates have to match? It's possible he has an fps limit with V-Sync off.

I haven't used capture cards. I guess it depends on how you're capturing? If you mirror, both the monitor and the capture card would have to be at the same resolution and refresh rate. If you have it in series, then it would be the same, no?
