DavidGraham

Veteran

It's the same arguments we keep circling back to: RT is not noticeable, RT costs too much performance, RT cards are expensive, RT is irrelevant, and the loop goes on forever.

But we forget. We forget the times when 4X anti-aliasing alone used to cut fps in half, and we forget AAA games with advanced graphics running at 30 to 50fps on high-end hardware.

Here is Doom 3 at max settings on the highest-end GPUs: 42fps!

Far Cry 1: max settings, 50 fps.

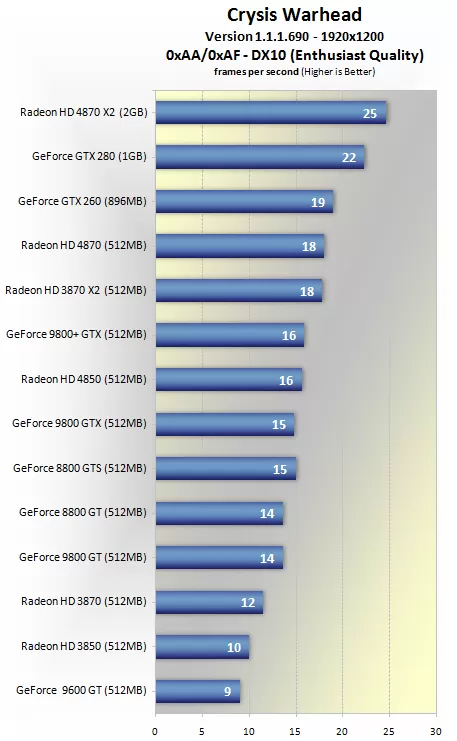

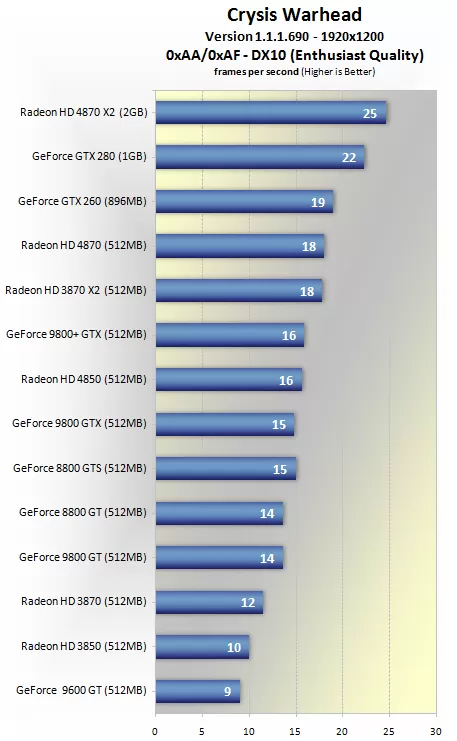

Crysis Warhead, max settings: 22fps!

F.E.A.R. running at max settings: 28fps!

Oblivion at max settings: 38fps!

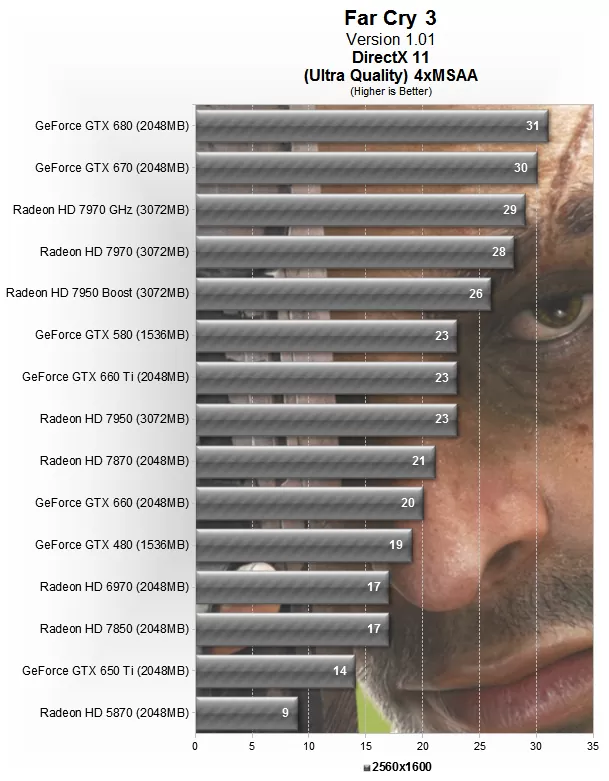

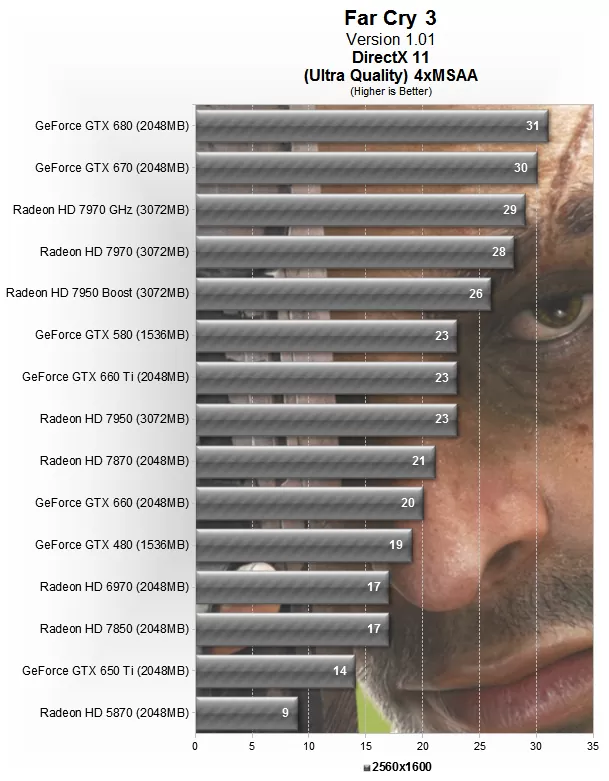

Far Cry 3 max settings: 31fps!

Metro 2033 max settings: 38fps!

And I could go on and on and on. But we forget, and so-called YouTube "reviewers" want max graphics + 120fps + max native 4K resolution. It doesn't work like that. It never did work like that in PC gaming, and history remains the evidence for that.
