VFX_Veteran

Regular

Alright graphics nerds! Let's define the actual conditions in the rendering path that would qualify a game as fully path-traced (FPT), regardless of noise. I started this thread so that we can all agree on the requirements for that label. In truth, none of the games I've seen so far implement 100% of the requirements that define FPT. I also haven't played Portal or Quake or Minecraft, so I can't speak to those. I just want us on the same page on each of the conditions.

In the overall path-traced rendering path in VFX, we use the BRDF together with PDFs (probability density functions) and CDFs (cumulative distribution functions) to importance-sample each of the terms in the rendering equation and compute a final color for a pixel.

What are they?

1) Direct Diffuse - This is the main component of the rendering equation and we usually see Lambert and Oren-Nayar. We all know that if you do direct path-traced lighting, then every light source will:

a) Cast a soft shadow with penumbra behavior

b) Have its area light shape taken into account.

2) Indirect Diffuse - This is what we all see as the main signal of RTGI. It gathers light bounced off neighboring objects, so their color 'bleeds' onto the surface and objects pick up a tint. It also produces ambient occlusion naturally, because it averages the incoming light over the hemisphere around the surface: where another object blocks part of that hemisphere, the surface darkens at that location.

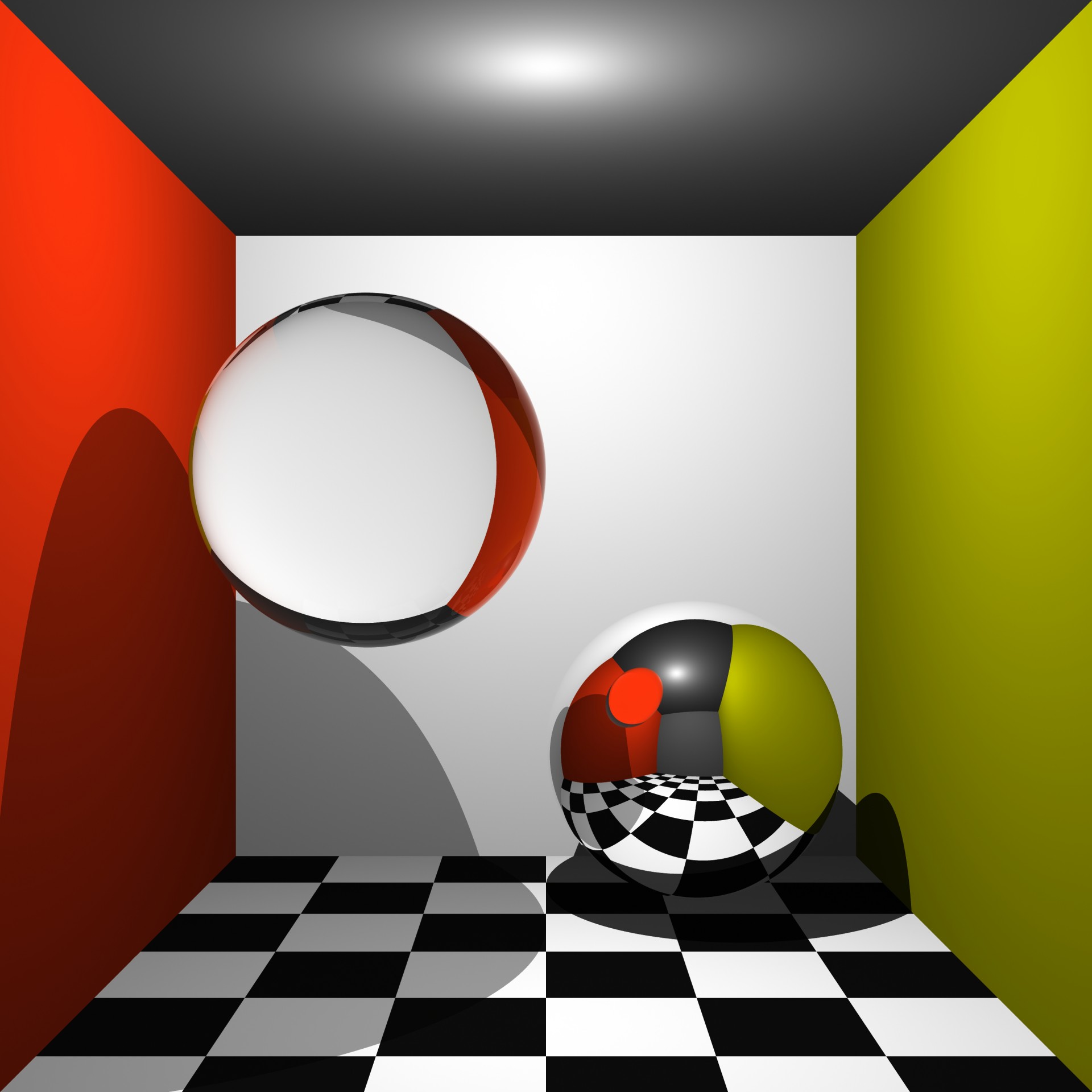

3) Direct Specular - This manifests itself as the specular highlight. In PT, the highlight must take on the shape of the light source, blurred according to the roughness of the material.

4) Indirect Specular - These are reflections, and they behave very similarly to the soft shadows in direct diffuse: the reflection is sharper close to the reflected object and spreads out with distance from it. Reflections will also appear on materials that aren't glass-like (glass-like meaning roughness < 0.1). We can also put refractions in this category: light rays that bend as they travel through a medium, so objects seen through the material appear distorted.

5) Emissive - These are objects that are light sources themselves. Think of a black body material or something that glows due to heat.
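To make the soft-shadow point in 1) concrete, here is a minimal Python sketch of just the visibility part of direct lighting. The scene is made up for illustration (a square light above a square occluder above a ground plane), and `visible_fraction` with all of its parameters is a hypothetical helper, not taken from any engine. It ignores the BRDF, the cosine term, and distance falloff, so treat it as a toy, not a renderer.

```python
import random

def visible_fraction(px, light_half=0.5, light_h=4.0,
                     occ_half=0.5, occ_h=2.0, n=4000, seed=1):
    """Fraction of a square area light seen from ground point (px, 0, 0).

    Hypothetical scene: a square light (half-width light_half) at height
    light_h and a square occluder (half-width occ_half) at height occ_h,
    both centred above the origin and parallel to the ground.
    """
    rng = random.Random(seed)
    t = occ_h / light_h  # where the shadow ray crosses the occluder plane
    unblocked = 0
    for _ in range(n):
        # Uniformly sample a point on the light (PDF = 1 / light area).
        lx = rng.uniform(-light_half, light_half)
        lz = rng.uniform(-light_half, light_half)
        # Point where the segment from the shading point to the light
        # sample passes through the occluder plane.
        hx = px + t * (lx - px)
        hz = t * lz
        if not (abs(hx) <= occ_half and abs(hz) <= occ_half):
            unblocked += 1
    return unblocked / n

# Walk outwards from under the occluder, with a large and a small light.
for x in (0.0, 0.8, 1.0, 3.0):
    print(x, visible_fraction(x), visible_fraction(x, light_half=0.05))
```

In this setup, points directly under the occluder see none of the light, points far away see all of it, and the fraction ramps up in between, which is the penumbra. Shrinking `light_half` narrows that ramp, which is why the size and shape of the area light matter.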

What are the subsets?

1) Environment lighting - This is the sky. In rasterization it's mostly done using cube maps. A PT environment literally samples the sky texture, using importance sampling to concentrate rays toward the highest luminance in the texture (i.e. the Sun). This also lets materials that are outside and in the shadow of the Sun reflect the blue hue of the sky.

2) Subsurface Scattering - This is a very difficult pass, as it computes several bounces inside a material, only for the light to come out somewhere else on the material. The light scatters inside the body, causing soft shadowing on the skin before reflecting the skin's tone. Also used for plants, wine bottles, etc.

3) Hair - Another difficult pass. If done right, this is the most complicated PT feature, as it takes every single strand of hair into account and runs a full BRDF computation on it with 4 components: primary, secondary, backscattering, and interreflections. It shows up as great self-shadowing, and the hair still shows texture EVEN when it is shaded from the Sun. I have seen only 2 games that do this: Indy and DD2 in PT mode.

4) Participating media - Clouds, smoke, and fog. This is probably by far the most expensive, as not only do you run all the common computations, but you also ray-march through the volume (à la Batman AK), which destroys performance.
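Going back to the environment lighting point in 1), here is a rough Python sketch of how a PDF and CDF built from the sky's luminance steer samples toward the Sun. Everything here is an assumption for illustration: the 16-texel `sky` list is made up, `build_cdf` and `sample_texel` are hypothetical helper names, and a real lat-long environment map is 2D and also needs solid-angle (sin theta) weighting, which is left out.

```python
import bisect
import random

def build_cdf(luminance):
    """Return (pdf, cdf) over texels, with pdf proportional to luminance."""
    total = sum(luminance)
    pdf = [v / total for v in luminance]
    cdf, running = [], 0.0
    for p in pdf:
        running += p
        cdf.append(running)
    cdf[-1] = 1.0  # guard against floating-point drift
    return pdf, cdf

def sample_texel(cdf, u):
    """Invert the CDF: map a uniform number u in [0, 1) to a texel index."""
    return bisect.bisect_right(cdf, u)

# Hypothetical 1D sky: 15 dim blue-sky texels and one very bright Sun texel.
sky = [1.0] * 15 + [1000.0]
pdf, cdf = build_cdf(sky)

rng = random.Random(0)
picks = [sample_texel(cdf, rng.random()) for _ in range(10000)]

# Share of samples that landed on the Sun texel (index 15).
sun_share = picks.count(15) / len(picks)

# Monte Carlo estimate of the total sky luminance: average of value / pdf.
estimate = sum(sky[i] / pdf[i] for i in picks) / len(picks)
print(sun_share, estimate, sum(sky))
```

Because the PDF here is exactly proportional to the quantity being summed, every sample returns the same value and the estimate has essentially no noise. In an actual path tracer the integrand also includes the BRDF, the cosine term, and visibility, so the noise is reduced but not eliminated.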

If anyone cares to mention anything I may have missed, it would be appreciated.

With this list, we can now determine which games come close to implementing ALL of these features. If there are none, that tells you how far we have to go with the hardware, on top of solving noise, to achieve true film-quality games.
