Two things I would like to point out here:

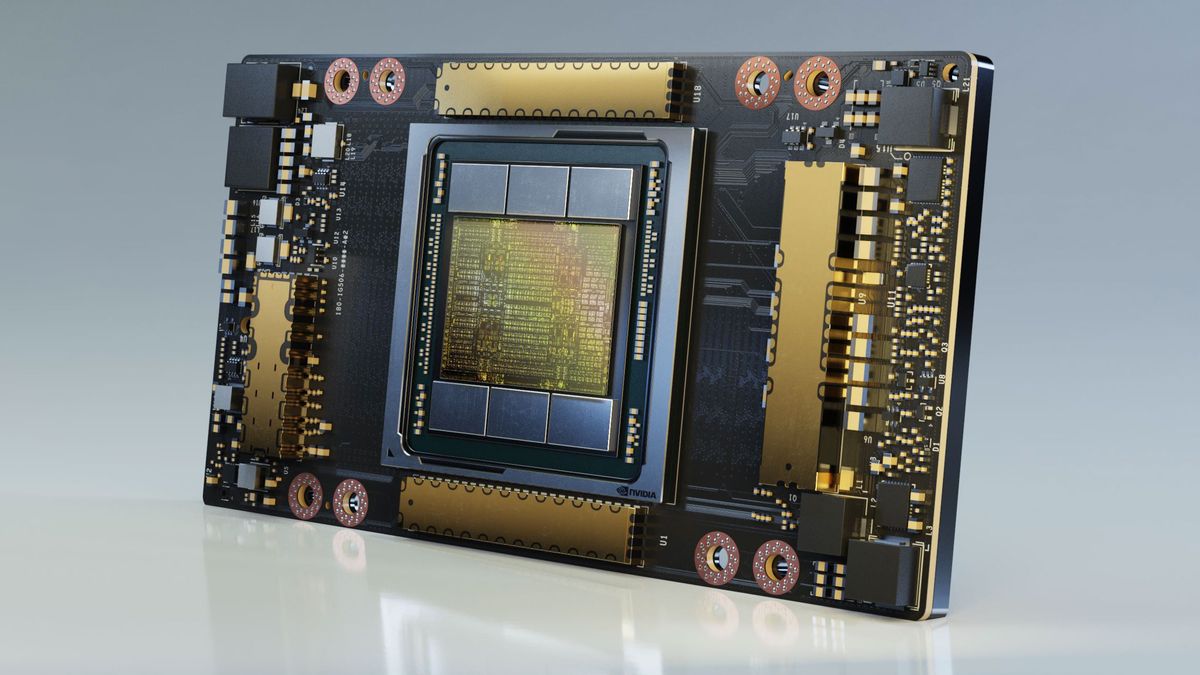

First, ten years ago a single computer that could consume an entire gigawatt of power would have been monumental in size, to the point where it might as well have occupied several hundred thousand square feet, comparable to a typical wing of a modern colocation datacenter. At that physical scale, it likely would have ended up as a clustered ecosystem of supercomputers rather than "one supercomputer." Maybe the clustering comment is just semantics; "supercomputer" work isn't single-threaded anyway.

Today, with the newest commercial GPUs drawing ~1 kW each, we can put 150 kW or more in a single 42U rack, not counting cooling. Napkin maths says around 5,000 racks packed with GPUs should consume roughly 750 MW and sit on ~40,000 sqft of raised floor. Then add in the aisleways between those rack rows, a campus-wide water loop, the bigass pumps for the chiller system, the thermal plates for "cooling capacitance" in the chiller loop, some quantity of DX cooling for workloads that aren't on the chilled loop (standard CRAC stuff), a room for an operations center, the necessary electrical rooms, UPS gear, and a pair of reactor rooms. You've probably hit the 1 GW mark in a datacenter smaller than 100,000 sqft. There's no way we were building this sort of facility a decade ago.
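If you want to sanity-check that napkin maths, here's a minimal Python sketch. The 5,000 racks and 150 kW per rack come from the text; the ~8 sqft footprint per rack is my own assumption (it's just what 40,000 sqft / 5,000 racks implies) and it ignores aisles entirely. The function names are hypothetical helpers, nothing more.

```python
# Napkin-maths sketch of the rack-level figures.
# From the text: 5,000 racks at 150 kW each.
# ASSUMPTION: ~8 sqft of floor per rack (implied by 40,000 sqft / 5,000 racks),
# excluding aisles, cooling plant, electrical rooms, etc.
RACKS = 5_000
KW_PER_RACK = 150
SQFT_PER_RACK = 8  # hypothetical footprint

def it_load_mw(racks: int, kw_per_rack: float) -> float:
    """Total GPU rack load in megawatts (kW / 1,000)."""
    return racks * kw_per_rack / 1_000

def rack_floor_sqft(racks: int, sqft_per_rack: float) -> float:
    """Floor area covered by the racks themselves, with no aisles."""
    return racks * sqft_per_rack

load = it_load_mw(RACKS, KW_PER_RACK)
floor = rack_floor_sqft(RACKS, SQFT_PER_RACK)
headroom = 1_000 - load  # MW left under a 1 GW budget for cooling, UPS losses, etc.

print(f"IT load: {load:.0f} MW, rack floor: {floor:,.0f} sqft, headroom: {headroom:.0f} MW")
# prints: IT load: 750 MW, rack floor: 40,000 sqft, headroom: 250 MW
```

The ~250 MW of headroom is where the chillers, pumps, DX cooling and electrical losses would have to fit to land at roughly 1 GW.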

Secondly, a decade ago we had no reasonable way to send an entire gigawatt of power to a single building; there are moderately sized cities that don't draw a gigawatt. As a simple example: the Tennessee Valley Authority (TVA) generates and distributes power for nearly all of Tennessee, a substantial portion of Mississippi, the northernmost ~20% of Alabama, the southwestern-most ~20% of Kentucky, and several hundred square miles of Georgia, Virginia and North Carolina. As I'm typing this, it's about 5:30 pm, the weather in this part of the country is around 78°F and sunny, and TVA is generating 19.2 GW across its entire service area (source: https://www.eia.gov/electricity/gridmonitor/dashboard/electric_overview/balancing_authority/TVA). Imagine sending 5% of that power to a single building. Even today that sounds ludicrous.
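For the "5%" figure, a quick check in Python. The 19.2 GW is a single instantaneous reading from the EIA dashboard at one moment on one afternoon, not an average, so treat the result as a rough order-of-magnitude comparison.

```python
# Share of TVA's instantaneous generation a 1 GW campus would draw.
# 19.2 GW is one dashboard reading (late afternoon, mild weather), not an average.
TVA_GENERATION_GW = 19.2
CAMPUS_LOAD_GW = 1.0

share = CAMPUS_LOAD_GW / TVA_GENERATION_GW
print(f"{share:.1%}")  # prints 5.2%
```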

Yet today, thanks to several years of NRC (Nuclear Regulatory Commission) design approvals and certifications, it's commercially possible to drop one or more modular fission reactors into a datacenter campus and achieve GW-class baseload at less than $0.20/kWh. That's crazy cheap for self-generation, especially when amortized over the decades-long lifespan of the unit, even after accounting for fueling and maintenance costs.
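To put the $0.20/kWh ceiling in perspective, here's a rough Python sketch of the yearly energy bill at that price. It assumes the campus draws a constant 1 GW for all 8,760 hours of a non-leap year (a 100% capacity factor, which real reactors with refueling outages won't quite hit). It also uses the upper-bound price from the text rather than any actual quoted rate, so it's an upper estimate, not a real cost figure.

```python
# Upper-bound yearly energy cost for a campus at constant load.
# ASSUMPTIONS: 100% capacity factor, 365-day year, flat price per kWh.
HOURS_PER_YEAR = 8_760

def annual_cost_usd(load_gw: float, price_per_kwh: float) -> float:
    """Energy cost for one year of continuous draw at load_gw."""
    kwh = load_gw * 1_000_000 * HOURS_PER_YEAR  # GW -> kW, then kW x hours
    return kwh * price_per_kwh

cost = annual_cost_usd(1.0, 0.20)
print(f"${cost / 1e9:.3f} billion per year")  # prints $1.752 billion per year
```

Spread across the decades-long life of a reactor, the interesting comparison is how that number stacks up against buying the same 8.76 TWh/year from the grid, assuming the grid could even deliver it.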

Money isn't the only thing that has changed; so too has the technology for localized energy production.