How "straight to the metal" are the existing first-party bespoke game engines: Decima, Insomniac's engine, Santa Monica's engine, Naughty Dog's engine, and ForzaTech? All of these engines have been used for PC ports, but I doubt the console versions are leaving performance on the table for the sake of PC compatibility.

It's a good question, and I didn't articulate my stance well. To that point, I very much agree with your suggestion that those engines were purpose-built for the hardware of their respective consoles and should therefore be able to showcase the "best" of each console's abilities. They're also purpose-built for their own games, so their teams don't spend dev time on features that aren't core to that gameplay. The result is lean, efficient code that caters only to the requirements of the game it supports.

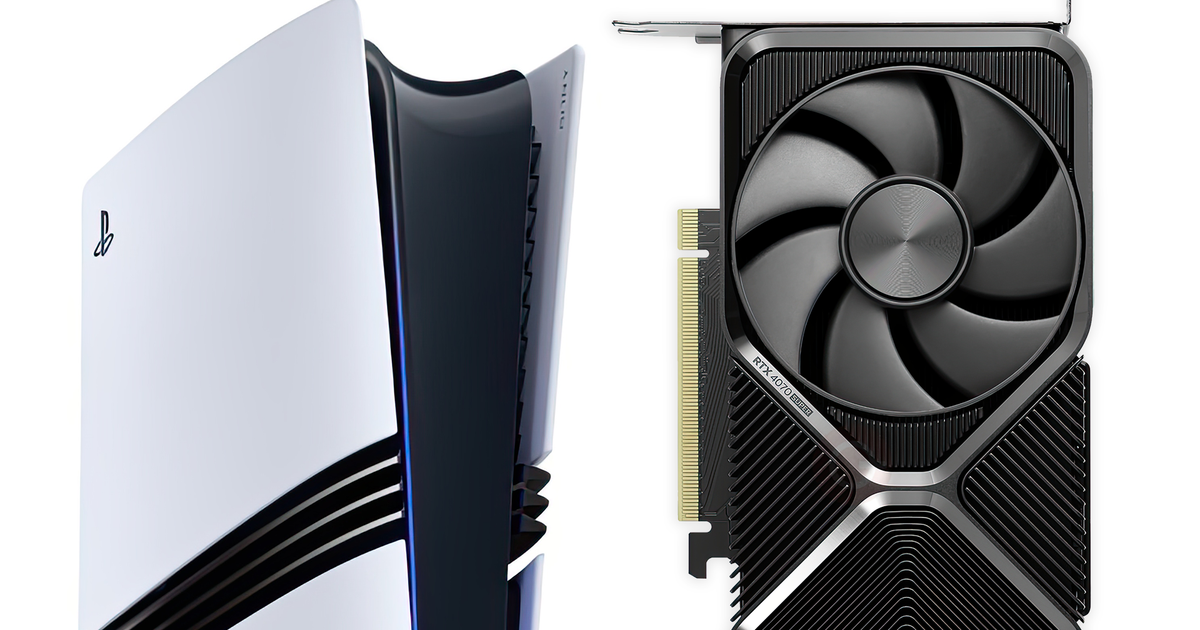

By contrast, general-purpose engines like UE5, CryEngine, or id Tech necessarily cover many processor generations, GPU generations, storage generations, and OS generations in order to serve the broadest catalog of game genres and hardware + OS combinations. That's nice for a development shop that wants to "author once and deploy to many," but it always leaves something on the table in terms of peak capability on any one specific hardware platform.

The core difference here, in my mind, is that development effort spent on the general-purpose engines isn't lost on someone who moves over to write a custom engine for current-gen consoles. Foundationally, it's all the same x86-64 CPU architecture and the same AMD GPU architecture those engines have already built "paths" for, now without the other cruft. Conversely, having to R&D a completely new chunk of computing silicon, then debug it, and then learn how to write code for it just throws an entire toolbox of wrenches into the works.

It's like English having become the "universal language" for most of the planet. It's not because English is the best (it isn't), the clearest (it isn't), or the most concise (it isn't), but somehow it has become the language that much of the world can speak. Inventing a new language might yield some efficiency gain, perhaps a more precise way of speaking or a more evocative way of describing life, but it would also mean everyone has to learn it. The same goes for x86 today: essentially every CompSci nerd speaks it, so why make it harder to get work done? Sure, you might save 10% of your compute cycles, but the R&D cost to create a whole new instruction set, debug it, build it at scale, and teach it to everyone is going to far outweigh the cycles lost by just using a common language.

Maybe ARM will be the next "big" change, in a generation or three.

Exploitation/employment opportunities are open to debate, since here we're setting ethics aside and just looking at what's feasible. Once a business plan is drawn up, we can decide in another forum whether it's morally objectionable.