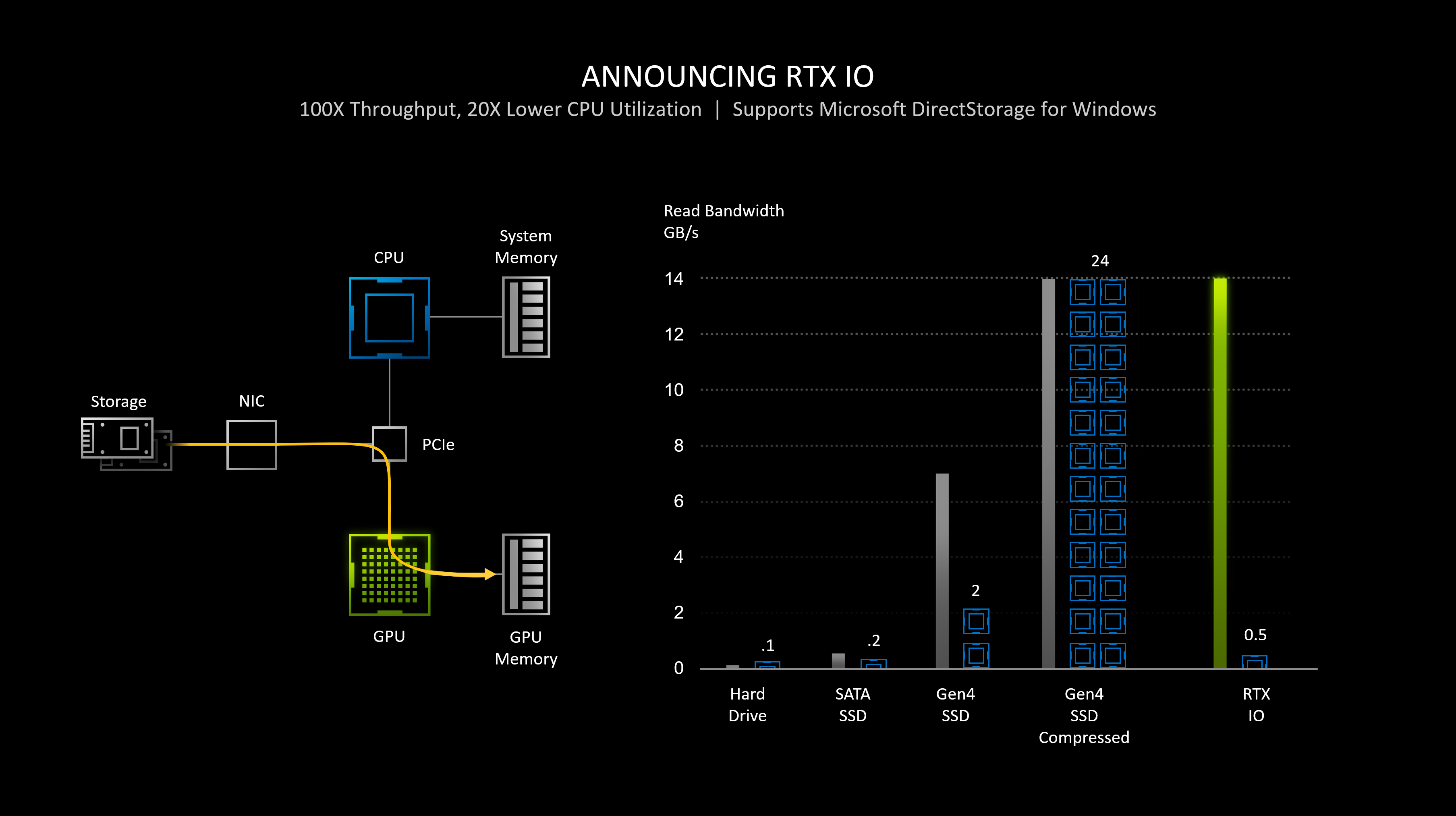

As someone who works on high-performance storage as part of my job, I still see an outstanding problem that's hinted at in this picture:

Several of you in this thread have been picking on the storage sitting on the "other side" of the NIC in this diagram. By keeping the storage on the far side of a network link, the diagram sidesteps every conversation about how a filesystem abstraction has to be handled. It really, truly has no bearing on how a consumer PC is constructed or the complexity therein.

Think about this: every file in a modern consumer-facing file system is a collection of hundreds, thousands, even millions of individual data blocks, all tied together by some sort of block map, extent tree, or similar index. Basically, there's a master table somewhere in the filesystem's inner workings that translates the operating system's request for a file into the related zillions of pointers to the literal blocks inside a logical partition scheme. A single file may very well live on multiple partitions simultaneously (spanned volumes in Windows are a thing that does occur), and those partitions may span multiple underlying storage devices. The partitions then map downward again into the physical storage layer, which sometimes has its own set of pointer remappings into even lower-level storage devices (e.g., a RAID card).
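To make that layering concrete, here's a toy Python sketch that resolves one file block all the way down to a block on a physical disk. Everything in it is hypothetical and purely illustrative: the `Extent` list stands in for whatever index a real file system keeps, the spanned volume is modeled as a plain concatenation of two partitions, both partitions are assumed to sit on a single two-disk RAID-0 array, and every offset is made up.

```python
from dataclasses import dataclass


@dataclass
class Extent:
    file_block: int    # first block of the file covered by this run
    length: int        # number of blocks in the run
    volume_block: int  # where the run starts on the (spanned) volume


@dataclass
class Segment:
    partition: str     # partition that backs this slice of the volume
    length: int        # number of volume blocks in this slice


# Hypothetical layout; every number below is made up for illustration.
EXTENTS = [Extent(0, 8, 100), Extent(8, 8, 5000)]      # one file, two runs
SEGMENTS = [Segment("p1", 4096), Segment("p2", 8192)]  # spanned volume
PART_START = {"p1": 256, "p2": 8208}  # partition offsets on the RAID array
RAID_MEMBERS = 2                      # assumed two-disk RAID-0
STRIPE_BLOCKS = 16                    # assumed blocks per stripe unit


def file_to_volume(file_block):
    """Layer 1: the file system's index maps a file block to a volume block."""
    for e in EXTENTS:
        if e.file_block <= file_block < e.file_block + e.length:
            return e.volume_block + (file_block - e.file_block)
    raise KeyError(f"file block {file_block} is not mapped")


def volume_to_partition(volume_block):
    """Layer 2: a spanned volume concatenates slices of several partitions."""
    base = 0
    for s in SEGMENTS:
        if volume_block < base + s.length:
            return s.partition, volume_block - base
        base += s.length
    raise ValueError("volume block is past the end of the spanned volume")


def array_to_member(array_block):
    """Layer 4: RAID-0 deals stripe units round-robin across member disks."""
    stripe, within = divmod(array_block, STRIPE_BLOCKS)
    disk = stripe % RAID_MEMBERS
    member_block = (stripe // RAID_MEMBERS) * STRIPE_BLOCKS + within
    return disk, member_block


def resolve(file_block):
    """Walk every layer; returns (partition, member disk, block on that disk)."""
    volume_block = file_to_volume(file_block)
    partition, part_block = volume_to_partition(volume_block)
    array_block = PART_START[partition] + part_block  # Layer 3: partition offset
    disk, member_block = array_to_member(array_block)
    return partition, disk, member_block


print(resolve(3))   # ('p1', 0, 183)
print(resolve(10))  # ('p2', 1, 4554)
```

Blocks 3 and 10 of the same file land on different partitions and different member disks, and all four lookups have to happen for every block before anything could issue a DMA for it.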

We also must consider the access and auditing controls built into modern filesystems. Are you permitted to read this file? If you do read it, doesn't the last-access time need to be updated in the file system metadata? Does the access itself need to be logged for security reasons? Someone brought up disk encryption, and TPM was hand-waved in as the solution; however, TPM solutions presume whole-disk encryption. As it turns out, file system encryption is very much a thing, and it isn't tied to TPM-based whole-disk encryption.

All this to say: a native PCIe transaction from disk to memory only works if you can fully map every one of those pointers, from the parent file descriptor at the file system level down to the literal discrete blocks of physical storage attached to the PCIe bus. You can only do that after determining you're allowed to make the read, possibly in parallel with the file system metadata updates and logging that still have to happen, and only if no file system encryption scheme (e.g., Microsoft EFS) is in use.
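Those preconditions can be sketched as a gate that a hypothetical direct disk-to-GPU path would have to pass before issuing any DMA. This is a minimal Python sketch under heavy assumptions: `FileMeta` is a made-up record (a reader set in place of a real ACL, one encryption flag, one audit flag), the block list is assumed to have already been resolved from the file's pointers, and none of these names correspond to a real OS or driver API.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

PhysBlock = Tuple[int, int]  # (disk index, block on that disk)


@dataclass
class FileMeta:
    readers: Set[str]   # hypothetical stand-in for an ACL
    encrypted: bool     # per-file encryption (e.g. EFS) is in use
    audit_reads: bool   # security policy requires logging each read
    atime: float = 0.0  # last-access time kept in file system metadata


def plan_direct_read(meta: FileMeta, user: str,
                     blocks: List[Optional[PhysBlock]],
                     now: float, audit_log: list) -> Optional[List[PhysBlock]]:
    """Return the physical blocks a direct transfer could copy, or None when
    the ordinary file system read path has to be used instead."""
    # 1. Access control comes first; a denied read never touches the disk.
    if user not in meta.readers:
        raise PermissionError(f"{user} may not read this file")
    # 2. With per-file encryption the on-disk blocks are ciphertext, so a raw
    #    block copy would hand the consumer unreadable data.
    if meta.encrypted:
        return None
    # 3. Every file block must resolve to a block on a locally attached device;
    #    None marks one that could not be resolved that far.
    if any(b is None for b in blocks):
        return None
    # 4. The bookkeeping the file system would normally do still has to happen.
    meta.atime = now
    if meta.audit_reads:
        audit_log.append((user, now))
    return list(blocks)
```

Only after all four steps pass does the "simple" block copy become possible; any single failure pushes the read back onto the full file system path.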

Flowing storage through a NIC as a data stream (presumably NVMe-oF) removes all of this complexity from the picture.

Transferring raw block storage into memory pages is actually quite simple. Making a GPU call translate into a full-stack file system read is another matter entirely.
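For contrast, once you're below the file system, a raw block transfer is mostly arithmetic. Here's a toy Python sketch that splits a contiguous run of sectors into one (device byte offset, destination page, length) entry per memory page, roughly the shape of a scatter-gather list a DMA engine consumes. It assumes 512-byte sectors, 4 KiB pages, and destination pages that are already allocated and pinned; it isn't modeled on any particular driver or on the NVMe PRP/SGL formats.

```python
SECTOR = 512  # assumed logical sector size in bytes
PAGE = 4096   # assumed memory page size in bytes


def raw_block_sg_list(start_lba, sector_count, pages):
    """Split a contiguous raw read into per-page (device offset, page, length)
    entries. No file system lookup involved: just multiply, subtract, repeat."""
    offset = start_lba * SECTOR
    remaining = sector_count * SECTOR
    entries = []
    for page in pages:
        if remaining == 0:
            break
        n = min(PAGE, remaining)
        entries.append((offset, page, n))
        offset += n
        remaining -= n
    if remaining:
        raise ValueError("not enough destination pages for this read")
    return entries


# 20 sectors (10 KiB) starting at LBA 2048, scattered into three pages
print(raw_block_sg_list(2048, 20, [0x10000, 0x20000, 0x30000]))
```

Nothing in there needs to know who is reading, whether the data is encrypted, or which file the sectors belong to, which is exactly why the block-level path is easy and the file-level one isn't.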