Around 2011, AMD and Intel began incorporating the south-bridge and north-bridge controllers on the main CPU die itself. The bus controllers very much still exist, and their features keep advancing to support various new I/O models. The distinct logic blocks and interconnects still exist on-die, i.e. there are still bus controllers for the different devices that can be connected. The integration is why CPU pin counts exploded: suddenly a lot more motherboard signals were crowding into one chip.


There is no southbridge between an NVMe SSD and the CPU/GPU. The AMD FCH/Intel PCH services SATA and various other ports, but an NVMe SSD on CPU-attached lanes or a discrete GPU doesn't go over a southbridge (they have direct links to the memory controller hub).
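If you want to check where a device actually hangs on your own Linux box, here's a rough sketch. It relies on the fact that a resolved sysfs device path lists every PCI bridge between the root complex and the device. The names `pci_chain` and `chain_for` and the example path are made up for illustration, and your addresses will differ. Note that a short chain only tells you there are few bridges in the way; it doesn't by itself say whether the root port sits on the CPU or the chipset, so compare against `lspci -tv` and your board's block diagram.

```python
import os
import re

# Matches a PCI address such as 0000:01:00.0 (domain:bus:device.function).
PCI_ADDR = re.compile(r"^[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$")


def pci_chain(sysfs_path):
    """Return the PCI addresses in a resolved sysfs path, root side first."""
    return [part for part in sysfs_path.split("/") if PCI_ADDR.match(part)]


def chain_for(class_path):
    """Resolve a /sys/class/... symlink and extract its PCI chain (Linux only)."""
    return pci_chain(os.path.realpath(class_path))


# Hypothetical resolved path for an NVMe drive behind a single root port.
example = "/sys/devices/pci0000:00/0000:00:01.1/0000:01:00.0/nvme/nvme0"
print(pci_chain(example))
```

On a real system you would call something like `chain_for("/sys/class/nvme/nvme0")`, assuming that device node exists on your machine.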

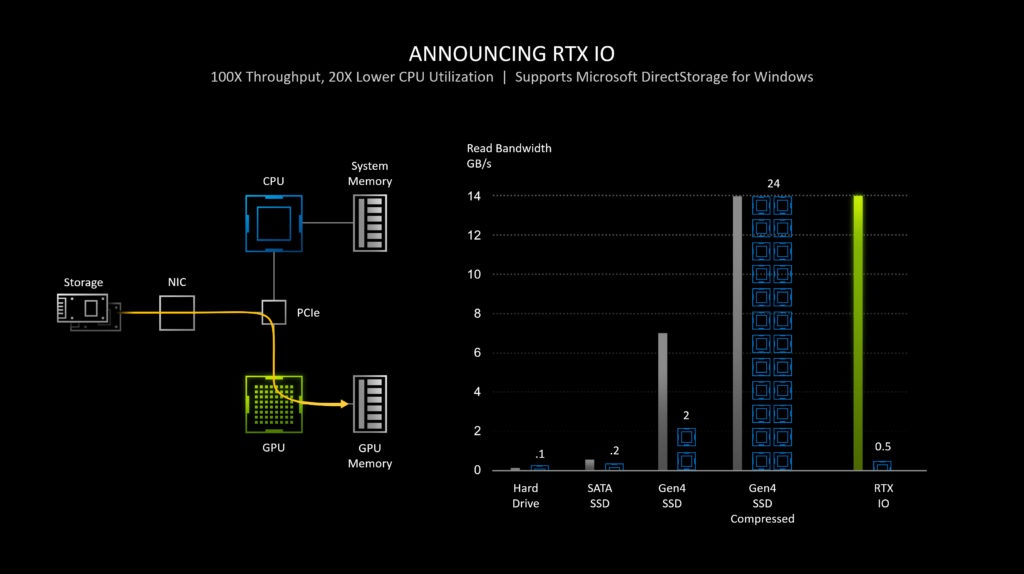

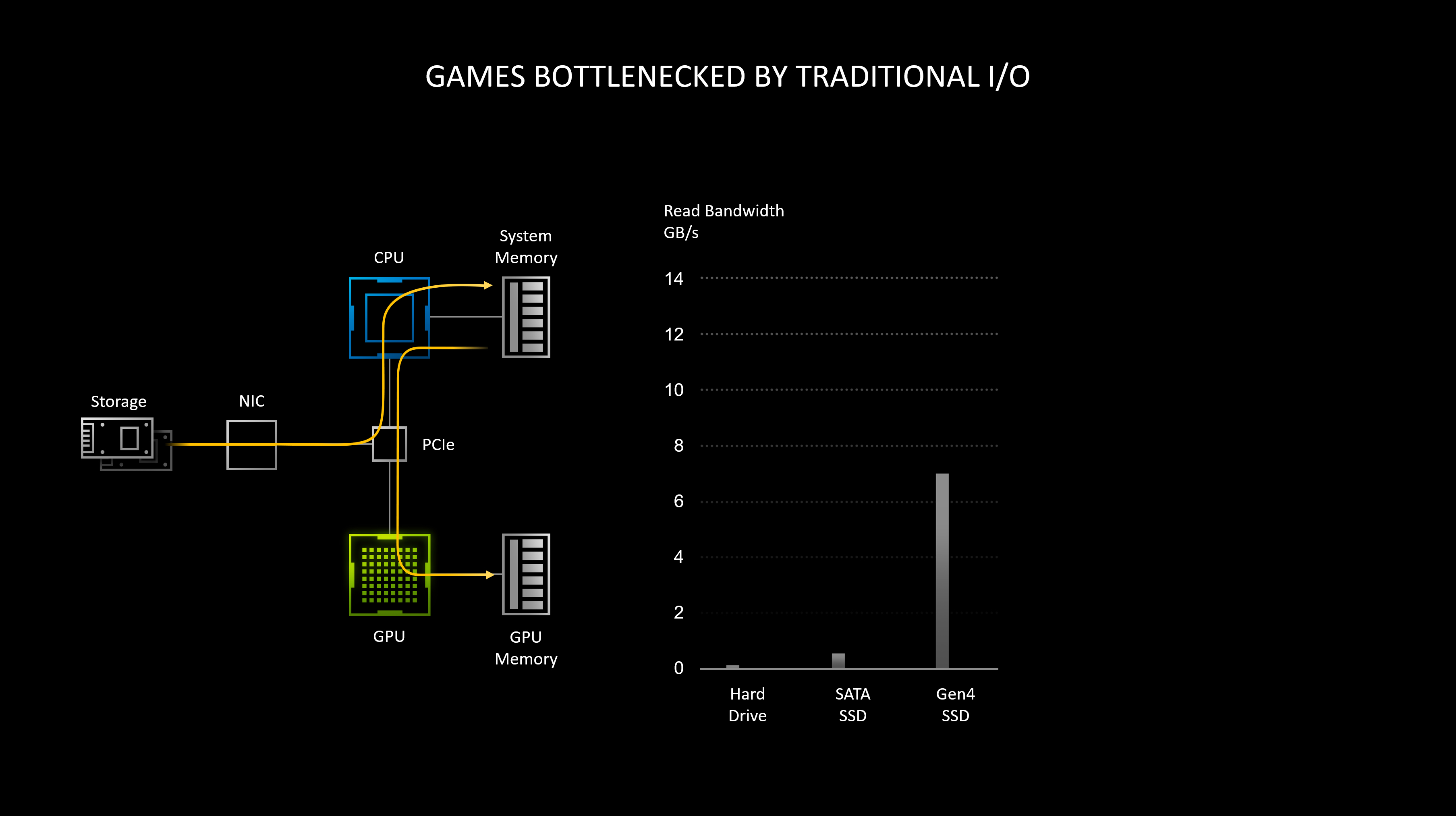

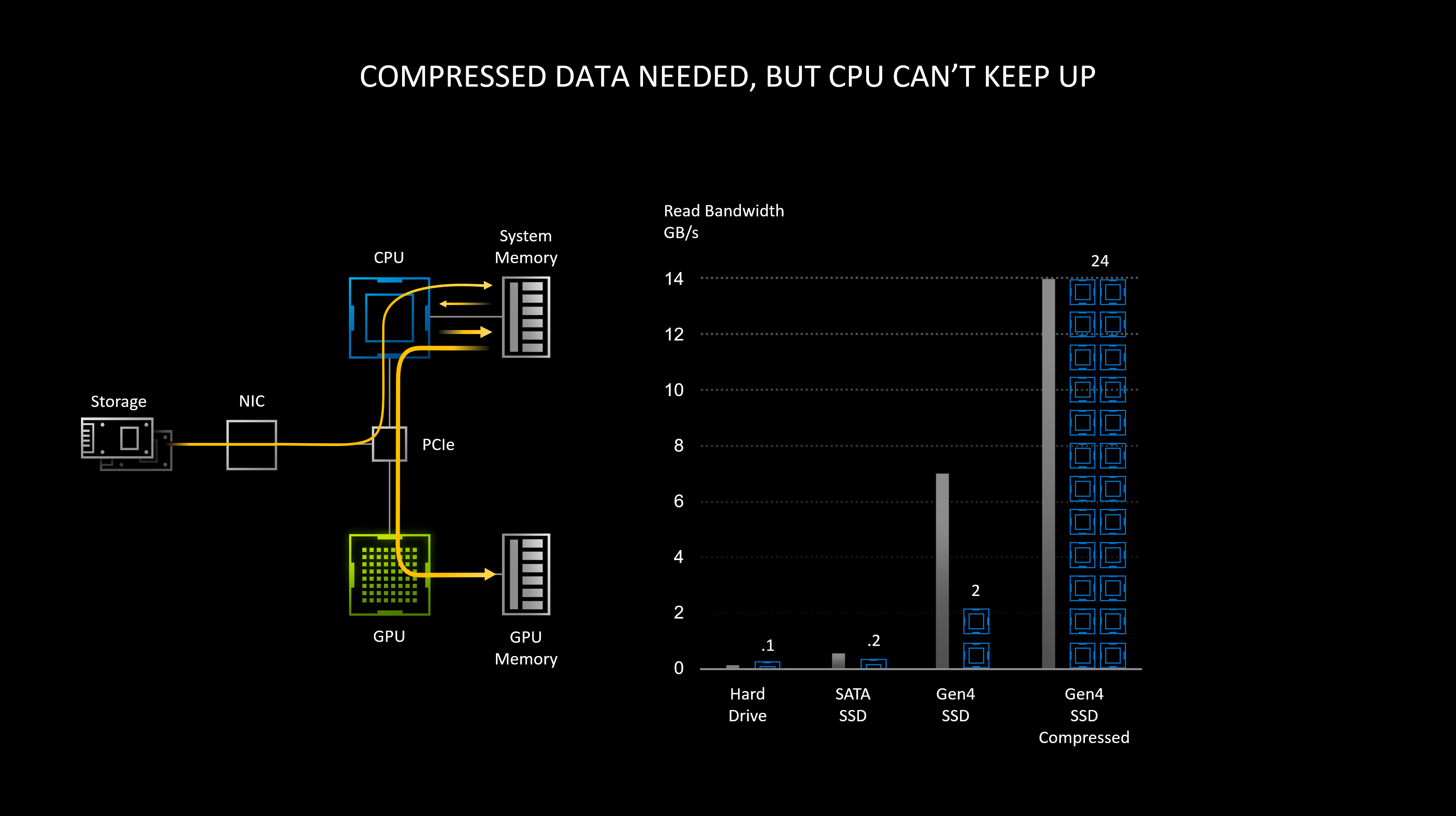

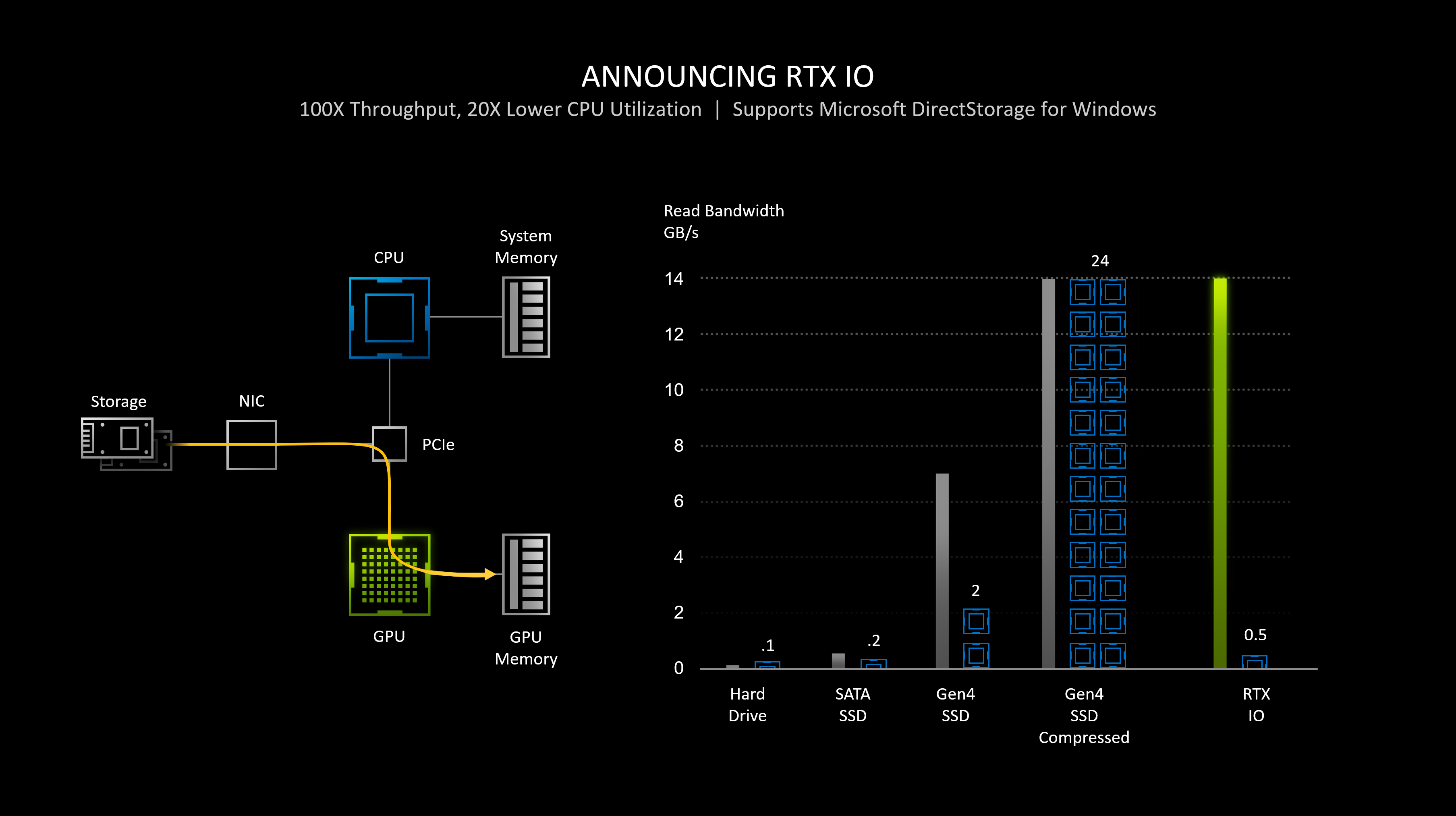

Or... Nvidia has just rebranded GPUDirect Storage (from 2019) as RTX I/O.

The system diagrams are an exact match.

It also explains why that NIC block is present in the diagram: GPUDirect Storage uses NICs for server and drive access.

GPUDirect Storage also uses a PCIe switch, which is what that PCIe block in the RTX I/O slide is. It isn't there to show that the data doesn't go through the CPU, as everyone thought.

It's an actual, physical and separate PCIe switch.

So it seems that whole slide is a waste of time.

GPUDirect and RTX IO share the same philosophy but aren't the same thing. That said, RTX IO is probably a derivative of GPUDirect. However, GPUDirect doesn't need DirectStorage to function (RTX IO does) and is incompatible with Windows. So RTX IO, used in conjunction with DirectStorage, allows GPUDirect-style functionality on a Windows machine.
