Deleted member 11852

Guest

I don't know, but I don't think it's necessary given how Cerny explained it. If your compute job is critical, you give it the highest priority and you're guaranteed compute time, unless you're flatly prioritising everything. But that'd be silly. Is it even possible to allocate CUs to dedicated, independent jobs?

EDIT: From the Gamasutra interview:

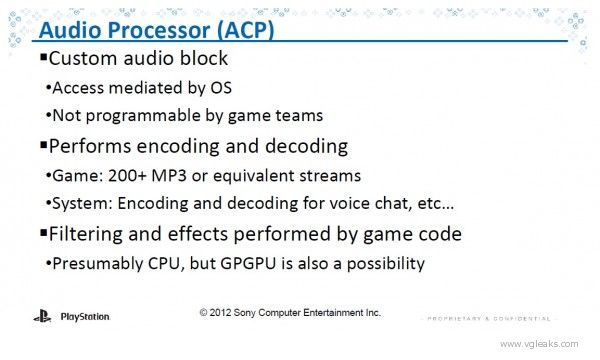

Mark Cerny said: Thirdly, said Cerny, "The original AMD GCN architecture allowed for one source of graphics commands, and two sources of compute commands. For PS4, we’ve worked with AMD to increase the limit to 64 sources of compute commands -- the idea is if you have some asynchronous compute you want to perform, you put commands in one of these 64 queues, and then there are multiple levels of arbitration in the hardware to determine what runs, how it runs, and when it runs, alongside the graphics that's in the system."

"The reason so many sources of compute work are needed is that it isn’t just game systems that will be using compute -- middleware will have a need for compute as well. And the middleware requests for work on the GPU will need to be properly blended with game requests, and then finally properly prioritized relative to the graphics on a moment-by-moment basis."
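
To make the idea concrete, here's a toy model of what "put commands in one of these 64 queues and let arbitration pick" could look like. This is only a sketch: the quote gives the queue count (64) but says nothing about how the hardware arbitration actually works, so the single priority level per queue, the lowest-index tie-break, and every name here (`ComputeQueues`, `set_priority`, `submit`, `next_job`) are my own assumptions, not anything from Sony or AMD.

```python
from collections import deque

NUM_QUEUES = 64  # queue count taken from the Cerny quote


class ComputeQueues:
    """Toy model: 64 compute queues, each with one priority value (assumed)."""

    def __init__(self, num_queues=NUM_QUEUES):
        self.queues = [deque() for _ in range(num_queues)]
        self.priority = [0] * num_queues  # higher number = more urgent

    def set_priority(self, queue_id, priority):
        self.priority[queue_id] = priority

    def submit(self, queue_id, job):
        # Jobs within one queue stay in submission order.
        self.queues[queue_id].append(job)

    def next_job(self):
        # Hypothetical arbitration: pick the non-empty queue with the
        # highest priority; on a tie, the lowest queue index wins.
        ready = [i for i, q in enumerate(self.queues) if q]
        if not ready:
            return None
        best = max(ready, key=lambda i: (self.priority[i], -i))
        return self.queues[best].popleft()
```

So if middleware submits to queue 5 at the default priority and the game submits a critical job to queue 9 with a raised priority, `next_job()` hands back the game's job first. If you give every queue the same priority, it just degenerates to lowest-index-first, which is the "prioritising everything" case.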