You are using an out of date browser. It may not display this or other websites correctly.

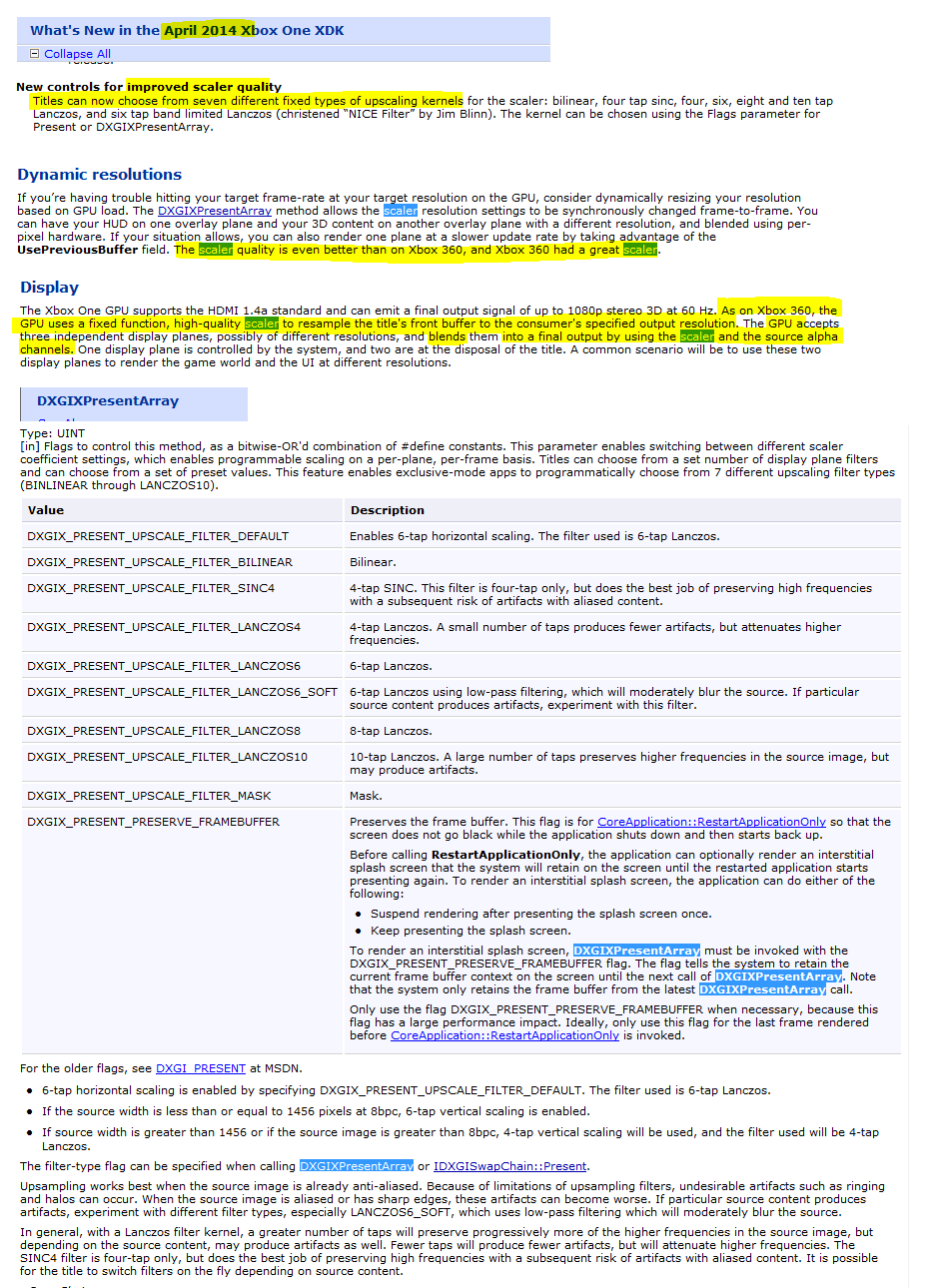

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Xbox One November SDK Leaked

- Thread starter DieH@rd

What are the use cases to justify the extra bandwidth there?

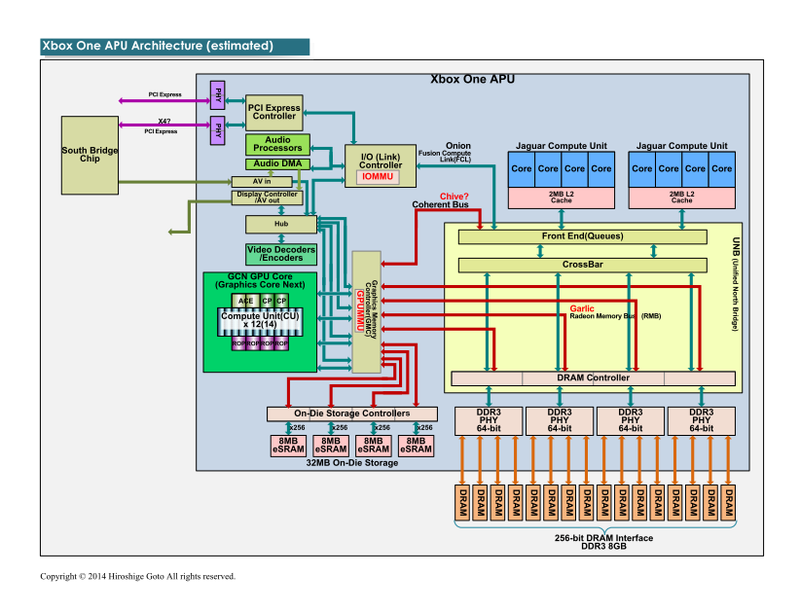

The Vgleaks diagrams had PCIe, audio, and other blocks hanging off the coherent northbridge with a bandwidth of 9GB/s, so roughly the difference between the CPU cluster bandwidth and the total.

I forget at this point which things hung off that one.

There was Kinect, audio processing, and HDMI passthrough seems to require keeping the APU active, so maybe that.

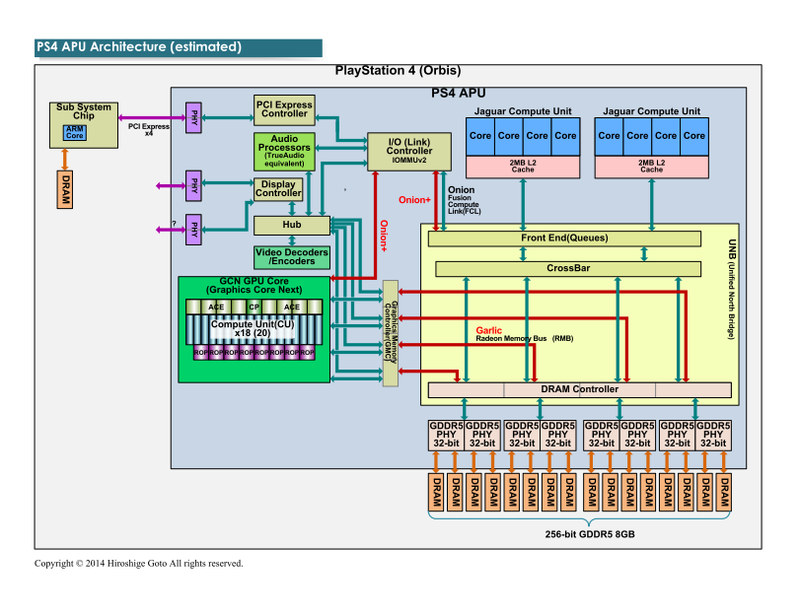

I'm pretty sure the PS4 also has a similar IO hub, but Orbis was noted as taking a hit to its on-die bandwidth during its evolution, and it doesn't seem like that particular part of the architecture was as ambitious as Microsoft's.

I wonder if a game dev shouldn't expose these in retail in an options menu. Let the user play around with it like a TV setting (showing in-game result).

I think we're at the point where there isn't much harm in having some "advanced" settings under options. We don't need anything as extreme as settings on PC games, but a few visual options to disable post processing you don't like, or change scaling filters, or whatever would be nice. I'd really love to turn off lens flares.

Indeed...Well, we did get a few games early last gen with TV-type presets (e.g. PDZ), post-filters (e.g. Gears 1, Mass Effect 1, Uncharted 2), and V-sync (e.g. Bioshock). Oh well.

Why foot the bill and effort in collaboration, only to have your competitor take one of your off-the-shelf components and wreck your home?

MSFT were very proud of the X360/PS3 era: they used all the jointly developed hardware at zero cost.

TheWretched

Regular

It supports Lanczos scaling... that's pretty good, imho. It's my favourite scaling method on my HTPC. Makes me wonder why a lot of games are so extremely oversharpened if they use the scaler, though.

Also... exposing these methods to the user would be a big win!
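On why a Lanczos upscale can read as "oversharpened": the kernel has negative lobes, so a hard edge overshoots on both sides (ringing). Here is a minimal Python sketch of the textbook Lanczos kernel and a 1D resampler. It is an illustration only, not the Xbox One scaler or anything taken from the SDK, and the names `lanczos_kernel` and `resample_1d` are made up for this example.

```python
import math

def lanczos_kernel(x: float, a: int = 3) -> float:
    """Textbook Lanczos window: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)

def resample_1d(samples, out_len, a=3):
    """Resample a 1D signal to out_len samples using normalised Lanczos weights."""
    in_len = len(samples)
    scale = in_len / out_len
    out = []
    for i in range(out_len):
        center = (i + 0.5) * scale - 0.5      # output sample position in source space
        first = math.floor(center) - a + 1    # leftmost tap of the 2*a tap window
        total = 0.0
        weight_sum = 0.0
        for j in range(first, first + 2 * a):
            w = lanczos_kernel(center - j, a)
            s = samples[min(max(j, 0), in_len - 1)]  # clamp taps at the borders
            total += w * s
            weight_sum += w
        out.append(total / weight_sum)        # normalise so flat areas stay flat
    return out

# A hard edge, upscaled 2x: values dip below 0 and rise above 1 near the edge.
edge = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
upscaled = resample_1d(edge, 16)
```

The dip and overshoot around the edge are the halos people tend to describe as sharpening. More taps (a larger `a`) give more lobes, which is why the high-tap variants ring more.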

liquidboy

Regular

I wonder if a game dev shouldn't expose these in retail in an options menu. Let the user play around with it like a TV setting (showing in-game result).

I think we're at the point where there isn't much harm in having some "advanced" settings under options.

I sort of agree with exposing these as advanced settings, BUT in this particular case I'm torn, because as a developer myself I would rather control this than let the user set it.

If I create a title with

1. Display plane 1 = HUD - 1080p

2. Display plane 2 = 3D world - 900p-1080p

and I dynamically scale the resolution of the 3D world based on GPU load (in big fight scenes with dozens of bosses on screen I'll render at a lower res), then I may want to programmatically apply a different 'upscale filter' at that time?!

So I'd rather leave this to the developer to control.
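A rough Python sketch of that idea, to make it concrete. Everything in it is hypothetical: `PlaneConfig`, `choose_world_plane`, the filter names and the 1000-line threshold are invented for illustration and are not SDK calls, and the rule for which filter goes with which resolution is just one possible policy.

```python
import math
from dataclasses import dataclass

@dataclass
class PlaneConfig:
    height: int            # render height in lines
    upscale_filter: str    # hypothetical filter name, not an SDK enum

# The HUD plane stays at native resolution, so it never needs the scaler.
HUD_PLANE = PlaneConfig(height=1080, upscale_filter="none")

def choose_world_plane(gpu_frame_ms: float,
                       budget_ms: float = 16.6,
                       min_height: int = 900,
                       max_height: int = 1080) -> PlaneConfig:
    """Pick render height and upscale filter for the 3D world plane."""
    load = gpu_frame_ms / budget_ms
    if load <= 1.0:
        return PlaneConfig(max_height, "none")   # within budget: render native
    # GPU cost is assumed to scale with pixel count, so height ~ 1 / sqrt(load).
    height = int(max_height / math.sqrt(load))
    height = max(min_height, min(max_height, height))
    # Assumed policy: a sharper filter for small scale factors, a softer one
    # when upscaling a lot, where ringing would be more visible.
    chosen = "lanczos" if height >= 1000 else "bilinear"
    return PlaneConfig(height, chosen)

calm_scene = choose_world_plane(10.0)    # under budget
busy_scene = choose_world_plane(25.0)    # heavy fight scene
```

Because the filter choice is tied to the scale factor picked on that frame, a single user-facing filter setting would fight with this kind of logic, which is the point being made above.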

liquidboy

Regular

It supports Lanczos scaling... that's pretty good, imho. It's my favourite scaling method on my HTPC. Makes me wonder why a lot of games are so extremely oversharpened if they use the scaler, though.

Also... exposing these methods to the user would be a big win!

From the look of the api call, I could be totally wrong here BUT it looks like it's really only useful when games take advantage of multiple display planes ?!

It could be that the filter options available at the time were those more prone to ringing (i.e. sinc or the high-tap Lanczos).

I wonder if a game dev shouldn't expose these in retail in an options menu. Let the user play around with it like a TV setting (showing in-game result).

Thinking about this further, it would be nice if you could override scaling with your preferred type in the Xbox system settings. You know, choose "Let application decide" or your particular favourite. Anyway, off topic.

It supports Lanczos scaling... that's pretty good, imho. It's my favourite scaling method on my HTPC. Makes me wonder why a lot of games are so extremely oversharpened if they use the scaler, though.

Also... exposing these methods to the user would be a big win!

Are a lot of games extremely oversharpened? I remember COD Ghosts and Killer Instinct having that problem. Maybe Dead Rising. After that, I don't remember it being an issue.

I'd also support the idea that maybe filter options have increased as the SDK has advanced. I'll have to look through the monthly release notes again.

TheWretched

Regular

Well, it used to be the case, at least around launch and for some time after. It might be, as someone above me wrote, that the only option around launch was unsharp masking the upscale.
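For reference, unsharp masking just adds back the difference between the image and a blurred copy of it, which exaggerates edges. A minimal 1D Python sketch, assuming a simple box blur; the helper names are made up, and this is not a claim about what the launch-era scaler actually did:

```python
def box_blur(signal, radius=1):
    """Average each sample with its neighbours, clamping at the borders."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def unsharp_mask(signal, amount=1.0, radius=1):
    """sharpened = original + amount * (original - blurred)."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]

edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
sharpened = unsharp_mask(edge)
# The dark side of the edge dips below 0 and the bright side rises above 1,
# which shows up on screen as a halo around high-contrast edges.
```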

If we're to allow users to set their own settings... would it be better to hide the setting and only enable it via code (Konami Code for example)? At least this way, most people who don't know what those settings are don't get confused.

I usually prefer to get as many options as possible. Most games are only giving us the bare standard. GT5 allowed you to change the AA method, FOV and a lot of other things (sadly, the FOV slider only went down, and not up). I really prefer a very wide FOV in games. On consoles, it's less of an issue for me (big tv, further away), but on PC, I get nauseous with games like Metro 2033. But not just graphics. Up until recently, GTA5 had a horrible deadzone, at least on PS4. It was probably a non-issue on PS3 for me, because the controller itself is already just a big deadzone. But the DS4 is just miles better and just has the accuracy to just disable deadzone and offer controls to the player.

I sort of agree with exposing these as advanced settings, BUT in this particular case I'm torn, because as a developer myself I would rather control this than let the user set it.

If I create a title with

1. Display plane 1 = HUD - 1080p

2. Display plane 2 = 3D world - 900p-1080p

and I dynamically scale the resolution of the 3D world based on GPU load (in big fight scenes with dozens of bosses on screen I'll render at a lower res), then I may want to programmatically apply a different 'upscale filter' at that time?!

So I'd rather leave this to the developer to control.

mmm... Fair enough. Didn't think aboot that. What sort of filter did you have in mind though? I guess I was sort of thinking about the ringing/halos that might appear stronger on some TVs than others just because of calibration issues, so in effect it's like giving the user options on sharpening. *shudder*

...actually, it is more likely that MS and Sony paid AMD to make custom design changes/additions, which basically meant free R&D for AMD to use later in their products (i.e. TruAudio looks similar to PS4 audio).

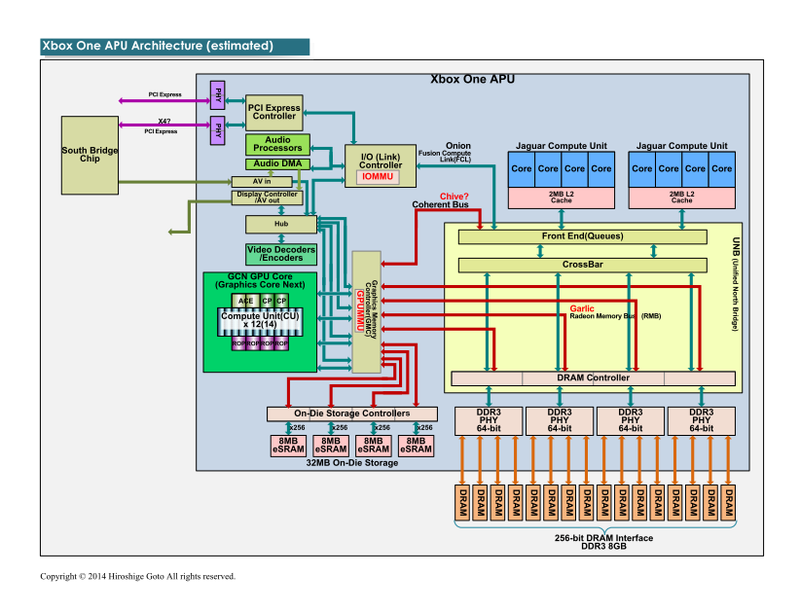

From what I have understood, current AMD APUs use the Garlic/Onion buses, whereas XB1 uses a somewhat different approach, which we might see in future AMD GPUs.

R&D costs money, and most managers don't invest enough in it, trying instead to monetize in the short/mid term for their bonuses or to keep their division's balance healthy.

XB1 also uses the Garlic and Onion buses, but it seems that they used a different coherent bus between the CPU and GPU (with different technology). Joe Macri (Corporate VP & Product CTO of AMD Global Business Unit) talked about the Chive bus, which is similar to Onion+ since both of them are coherent buses between CPU and GPU, but Chive uses different technology.

http://pc.watch.impress.co.jp/docs/column/kaigai/20140129_632794.html

Also, the same site speculated that XB1 is using Chive instead of Onion+ in their architecture diagrams:

Where is the block of mysterious SRAM that the die shots of the XB1 show?

Deleted member 11852

Guest

I bet MisterXMedia knows
