I think this was always the much safer bet to make; the visible optimism of recent years among Intel Foundry's staff doesn't translate to a competitive market position. Not in this industry.

The Intel Execution in [2025]

- Thread starter Cyan

Would TSMC go for such a joint venture when Intel needs to own a minimum of 50.01% of it? (Or, if it were made public, TSMC couldn't get over 35%?)

Yesterday was a rough night for Intel: the NY Times, Reuters and Bloomberg reported that Intel wants TSMC to manage its foundry business through a stake partnership. This could be the reason why Intel let go of Pat. This also has negative implications for the viability of the 18A process; it looks like we are locked into a TSMC monopoly for a long time.

DavidGraham

Veteran

The drama around Intel is intensifying: it is now reported that Intel's board wants to sell the company to unlock shareholder value. Other outlets are reporting that a consortium of companies including TSMC, Broadcom and Qualcomm will get the deal. Jim Keller stepped in today to express his disappointment over this news.

Yeah, it's exactly what it sounds like. Turn a profit for those people who have no long term investment or interest in the company, because capitalism.

Publicly traded companies are rife with this shit, and it drives me nuts. Why invest in the long-term sustainability of a business when you can make really short-sighted, ill-advised decisions which only ever serve to make the very next quarter's earnings release look better on paper? Why do we care about literally anything else other than those people who bought some of our shares to get that extra 1/8th of a percent return? We OWE them because... something something invisible hand of the market or some shit.

This gripe isn't limited to Intel of course, yet it seems like just another example of leadership making a decision which will certainly make them another few hundred million dollars right as they cash out and leave the corpse (and all the employees) to rot.

arandomguy

Veteran

I believe the reports are centering around splitting parts of the company, specifically making the design and foundry sides separate. Intel's vertical model isn't going to be viable without essentially being at least a near monopoly and causing anticompetitive issues for the industry as a whole.

You don’t think they could operate like Samsung foundry with internal and external clients if their manufacturing tech was competitive?

And again, I'll remind everyone that Intel needs to own at least 50.01% of the privately held Intel Foundry subsidiary (which is already happening), and if it's taken public, no outside investor can hold more than 35%.

Those terms were dictated by the US Government last year when Intel got its CHIPS Act money.

DavidGraham

Veteran

I think they can't do that with existing process tech. Intel uses custom, proprietary libraries (PDKs, short for Process Design Kits) that aren't compatible with industry-standard EDA (Electronic Design Automation) tools. All of Intel's older processes (14/10/7nm) are incompatible; only the new ones (3/18A) are compatible.

Ian Cutress talked about this several times.

On another note, Raja Koduri wrote an extensive blog post criticizing the current Intel management, their cancel culture and the Intel "snakes". He proposes a painful, company-wide transformation to get rid of the bureaucrats, stop needless acquisitions, stop cancelling projects, and "increase the coder-to-coordinator ratio by 10x". He stresses that the company needs a big target to rally every corner of the company around, like beating the next NVIDIA chip by 2027.

someone please copy paste the post here if you can

Possibly this link?

Edit: clicking the date seems to work.

I think Raja has a point here. Running a foundry is very challenging for Intel because of its higher costs. Even TSMC has a hard time pushing down the cost of its US fab.

The magic recipe of Intel's past success was not that its processes were cheap. It was because they were the best and thus able to support (arguably) the best CPU in the world, so everyone bought Intel CPUs and there was huge demand on its fabs. People were willing to pay a premium for the CPUs, so the cost of the fabs hardly mattered.

The problem today is that the CPU market is no longer that huge, and AMD is also nibbling away at Intel's market share, so Intel now has trouble filling its fabs. This is a downward spiral, because once you don't have enough money to invest in new processes, you'll soon be left behind. IMHO Intel had two chances but missed both: the first was the mobile CPU market, and the second was AI accelerators.

There's still a slim chance that Intel could catch up with (or even surpass) TSMC, but cost is still a big issue. If your process is just slightly better, people are not going to pay a huge premium for it (even with the help of tariffs). It's just easier if Intel has good products and fills its fabs with its own products.

If Intel were putting out datacenter products that were competitive with NVIDIA, the cost wouldn't be such a huge issue. Hell, if Intel fabbed for NVIDIA, cost wouldn't be a huge issue (for DC products).

arandomguy

Veteran

You don’t think they could operate like Samsung foundry with internal and external clients if their manufacturing tech was competitive?

There's a problem with this from two angles.

I don't feel that they could operate like Samsung as things stand. Samsung's chip design business is nowhere near as important to the overall company as Intel's is. From the outside, at least, the degree of integration between design and manufacturing seems several levels apart. Samsung would likely be perfectly happy to win external contracts even from companies that directly compete with their own chip design, such as Qualcomm, give them priority, and then just have their consumer devices use Qualcomm, with their own Exynos SoCs on the back burner. I believe this situation has even been reported on in the past, in terms of how Samsung courts Qualcomm (including factoring that into which SoCs go into their devices). I'm not sure how such a scenario would play out for Intel; it just seems like it would be messy.

There's also the other side: I'm not sure operating like Samsung is ultimately what all the parties are referring to. I think what they want is for Intel's foundry to challenge TSMC for leadership.

It's also worth noting that logic is only one part of Samsung's chip business; HBM issues aside, they are the market share leader in both DRAM and NAND.

DavidGraham

Veteran

someone please copy paste the post here if you can

Intel had a tough 2024. Wishing Intel a wonderful and productive 2025 and a path forward. A lot has been written about Intel lately.

Largely doom and gloom. Many in the industry, folks at Intel, and friends and family have reached out. Some don't see any hope of a turnaround; others wonder if this is the low point and whether they should invest now.

"Intel is so far behind on AI and has no strategy", "they are still many years behind TSMC on process technology", and the list goes on from the Intel bears, who seem to be the majority now. Bulls have "hope", and the bears counter with "hope is not a plan".

I am in the bulls' camp, and the rest of the article outlines my perspective and opinion, which are the basis of my hope. My central thesis is that Intel needs to set itself an audacious product target that its whole engineering organization can rally behind.

To achieve these targets, the entire technology stack, including transistor physics, advanced packaging, silicon design and software architecture, needs to take shared risks.

There is chatter about splitting process technology and product engineering into separate companies; this could be counterproductive.

Creating an arm's-length foundry relationship now risks crippling the only company theoretically capable of innovating across the entire stack – from fundamental physics (atoms) to software (python).

Intel Treasures and Snakes

Intel Treasures

Intel still has a ton of IP and technology. These are gems that many in the ecosystem envy. Many innovations have been sitting on the shelf. These innovations span process technology, advanced packaging, optics, advanced memories, thermals, power delivery, CPU, GPU, and much more.

Some of these innovations could give Intel products order-of-magnitude improvements in Performance, Performance/$ and Performance/Watt, the metrics that determine ultimate leadership in all computing domains – across data centers, edge and personal devices.

Intel Snakes

The tragedy of Intel's treasures lies in their delayed or deferred deployment. For over five years, the company's product roadmap – the vital pipeline for bringing these innovations to market – has been clogged by manufacturing challenges. While the troubles began with 14nm, the 10nm node became an unprecedented bottleneck that cost Intel half a decade of leadership. However, manufacturing delays tell only part of the story. Deeper issues, rooted in culture and leadership, prevented Intel from making pragmatic decisions – such as timely adoption of external manufacturing capabilities like TSMC when internal solutions faltered.

At its core, Intel's DNA is built on performance leadership – the relentless pursuit of benchmark-breaking excellence. Every aspect of its business model, from marketing to sales, is calibrated for being the undisputed leader in its chosen segments. NVIDIA shares this performance-first DNA, evident in their relentless pursuit of benchmark supremacy at any cost. "Performance DNA" companies also build products ahead of customers' needs. They are always ahead of the curve. Neither company thrives as a "value or services player" – they're not built to compete primarily on value metrics like performance/$ or on delivering services per customer requests. While value/service-oriented companies can be tremendously successful, transforming a performance-focused company into a value player requires major cultural surgery.

The reverse transformation is far more natural. Running a foundry service will be a challenging transition for Intel. Licensing partnerships with companies that are already in the foundry services business could be a more pragmatic approach.

The "spreadsheet & powerpoint snakes" – bureaucratic processes that dominate corporate decision-making – often fail to grasp the true cost of surrendering performance leadership. They optimize for minimizing quarterly losses while missing the bigger picture.

These processes multiply and coil around engineers, constraining their ability to execute on the product roadmap with the boldness it requires. A climate of fear surrounds any attempt at skunkworks initiatives outside established processes – one misstep, and the bureaucratic snakes strike. This environment has bred a pervasive "learned helplessness" throughout the engineering ranks, stifling the very innovation culture that built Intel's empire.

Learned helplessness is a set of behaviors where we give up on escaping a painful situation, because our brain has gradually been taught to assume powerlessness in that situation.

Transformation

Having witnessed companies rise from the ashes before, I know transformations are possible, even from the depths of despair. While financial engineering provides essential sustenance for development, it alone cannot ignite the spark that drives engineers to build something truly revolutionary.

In the cutting-edge world of technology, engineers need more than just resources – they need an inspiring, almost audacious target to pursue. The ideal target should be simultaneously intimidating and inspiring: intimidating because it pushes the boundaries of what's possible, inspiring because it represents a leap forward for computing. Leadership's role isn't just to set these targets – it's to provide the tools, show the path forward, and get their hands dirty alongside the team in the trenches.

The pursuit of a formidable challenge – a "big bad monster" – has universal appeal, regardless of experience level. In today's AI computing landscape, what could serve as that inspiring yet daunting target? Let's begin with the hardware challenge.

The Big Bad Monster NVL72

Consider NVIDIA's NVL72, the current apex predator in AI computing:

- 360 PFLOPS of raw FP8 compute (no sparsity)

- 576 TB/Sec of HBM Bandwidth at 18.8 TB Capacity

- 130 TB/Sec of GPU-GPU Bandwidth through NVLink

- ~ $3M Price

Yet NVIDIA commands this premium because the NVL72 stands alone in delivering this combination of generality and performance.

Let's capture the picojoules per flop (Pj/Flop) of the NVL72 system, as that will be handy later.

$$\text{NVL72}_{\text{Pj/Flop}} = \frac{132{,}000 \times 10^{12}}{360 \times 10^{15}} \approx 0.4$$
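The unit bookkeeping in this formula is easy to lose track of: power in watts is joules per second, dividing by FLOP/s leaves joules per FLOP, and the factor of 10^12 converts joules to picojoules. A minimal Python sketch of that arithmetic, assuming the ~132 kW system power and the 360 PFLOPS dense FP8 figure used above; the helper name `pj_per_flop` is ours, not from the post:

```python
def pj_per_flop(power_watts: float, flops_per_second: float) -> float:
    """Energy per operation in picojoules.

    (J/s) / (FLOP/s) = J/FLOP, then multiply by 1e12 to express it in pJ.
    """
    return power_watts / flops_per_second * 1e12


# NVL72 figures as used in the formula: ~132 kW and 360 PFLOPS of dense FP8.
nvl72 = pj_per_flop(132_000, 360e15)
print(f"NVL72: {nvl72:.3f} pJ/FLOP")  # about 0.367, which the post rounds to 0.4
```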

DavidGraham

Veteran

Inspiring 2027 Target

Here's what I propose as Intel's moonshot system targets:

- 1 ExaFlop of raw FP8/INT8 compute performance

- 5 PB/Sec of "HBM" bandwidth at 138 TB Capacity

- 2.5 PB/Sec of GPU-GPU bandwidth

- All while maintaining a 132 kW power envelope

- At a $3M price

- 3X leap in compute performance

- 10X revolution in memory bandwidth and capacity

- 20X breakthrough in interconnect bandwidth.

- All while maintaining the same power envelope and cost
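The 3X/10X/20X framing can be checked directly against the NVL72 spec list earlier in the post. The sketch below simply divides each proposed 2027 target by the corresponding NVL72 figure; the dictionary keys are illustrative names, and the numbers are the ones quoted in the two lists. Run as written, it gives roughly 2.8X for compute, 8.7X for memory bandwidth, 7.3X for memory capacity and 19X for GPU-to-GPU bandwidth, so the headline multipliers read as rounded figures.

```python
# NVL72 baseline and proposed 2027 targets, using the numbers quoted in the post.
nvl72 = {
    "compute_pflops": 360,     # dense FP8
    "hbm_bw_tb_s": 576,
    "hbm_capacity_tb": 18.8,
    "gpu_gpu_bw_tb_s": 130,
}
target_2027 = {
    "compute_pflops": 1000,    # 1 ExaFlop
    "hbm_bw_tb_s": 5000,       # 5 PB/s
    "hbm_capacity_tb": 138,
    "gpu_gpu_bw_tb_s": 2500,   # 2.5 PB/s
}


def improvement_factors(baseline: dict, target: dict) -> dict:
    """Ratio target/baseline for every metric the two spec sheets share."""
    return {k: target[k] / baseline[k] for k in baseline if k in target}


for metric, factor in improvement_factors(nvl72, target_2027).items():
    print(f"{metric:>18}: {factor:5.1f}x")
```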

It's also important to mention that the specs above will translate to very compelling systems in the 1 Petaflop (132W) and 100 Teraflop (13W) ranges as well, giving Intel an excellent leadership stack from mobile, mini-PC and desktop to data centers. Intel will have the ability to offer a single stack from device to DC to deploy excellent open models like DeepSeek efficiently to consumers and enterprises.
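The smaller systems mentioned here follow from holding energy per FLOP constant: at the target of roughly 0.132 pJ/FLOP (132 kW for 1 EFLOP/s), power scales linearly with compute rate. A minimal sketch under that assumption; it ignores fixed overheads such as memory, I/O and cooling, which do not necessarily shrink with the system, and `power_watts` is a hypothetical helper:

```python
# 132 kW / 1 EFLOP/s, expressed in pJ/FLOP (about 0.132).
TARGET_PJ_PER_FLOP = 132_000 / 1e18 * 1e12


def power_watts(flops_per_second: float,
                pj_per_flop: float = TARGET_PJ_PER_FLOP) -> float:
    """Power needed to sustain a compute rate at a fixed energy per FLOP."""
    return flops_per_second * pj_per_flop * 1e-12


for label, rate in [("1 EFLOP/s", 1e18), ("1 PFLOP/s", 1e15), ("100 TFLOP/s", 100e12)]:
    print(f"{label:>12}: {power_watts(rate):,.1f} W")
```

Under this linear assumption, 100 TFLOP/s comes out at 13.2 W, which the post rounds to 13W.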

A single system that can productively host the whole 670B-parameter DeepSeek model for under $10K is very much within Intel's realm.
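Hosting a model of this size is first a memory-capacity question. A rough sketch that counts only the bytes needed to store the weights, assuming every parameter is stored at the same precision and ignoring KV cache, activations and runtime overhead; `weight_footprint_gb` is an illustrative helper, and 670B is the parameter count as quoted in the post:

```python
def weight_footprint_gb(params: float, bits_per_param: int) -> float:
    """Bytes needed just to store the weights, in GB (1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


DEEPSEEK_PARAMS = 670e9  # parameter count as quoted in the post

for fmt, bits in [("FP16/BF16", 16), ("FP8", 8), ("4-bit", 4)]:
    print(f"{fmt:>9}: {weight_footprint_gb(DEEPSEEK_PARAMS, bits):,.0f} GB")
```

At FP8 the weights alone are about 670 GB, which is the capacity such a system would need before any serving overhead.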

There's a "DeepSeek" moment in cost within the next 3-5 year horizon. What gives me this optimism? One should go back to first principles and look at the following factors:

- How many logic and memory wafers do we need for the specs above

- The price of these wafers

- Pflops-per-mm²

- Gbytes-per-mm²

- Wafer yields

- Assembly and rest-of-system overheads

- Margin

(I have a simple GPUFirstPrincipleCost web app in the works where you can feed in your inputs and assumptions, and the app calculates the cost. Will share when done.)
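The app itself has not been shared, so the following is only a guess at the shape of such a calculation, built from the factors listed above: wafers needed for a compute and memory target, wafer price, density per mm², yield, an assembly/rest-of-system overhead, and margin. All class and function names are hypothetical, margin is modeled as gross margin, and the example inputs are placeholders chosen only so the code runs; they are neither the post's assumptions nor industry data.

```python
import math
from dataclasses import dataclass


@dataclass
class WaferAssumptions:
    price_usd: float        # price of one processed wafer
    density_per_mm2: float  # PFLOPS per mm^2 for logic, GB per mm^2 for memory
    yield_fraction: float   # fraction of silicon area that ends up in good dies
    area_mm2: float = math.pi * 150**2  # gross area of a 300 mm wafer, edge loss ignored


def wafers_needed(target_units: float, w: WaferAssumptions) -> float:
    """Wafers needed for `target_units` (PFLOPS or GB) of good silicon.

    Deliberately fractional: wafer cost is amortized across many systems.
    """
    good_units_per_wafer = w.area_mm2 * w.density_per_mm2 * w.yield_fraction
    return target_units / good_units_per_wafer


def system_price(pflops: float, gbytes: float,
                 logic: WaferAssumptions, memory: WaferAssumptions,
                 assembly_overhead: float, gross_margin: float) -> float:
    """Silicon cost, plus assembly/rest-of-system as a fraction of it, priced at a gross margin."""
    silicon = (wafers_needed(pflops, logic) * logic.price_usd
               + wafers_needed(gbytes, memory) * memory.price_usd)
    cost = silicon * (1 + assembly_overhead)
    return cost / (1 - gross_margin)


# Placeholder inputs for illustration only (hypothetical, not real wafer data).
logic = WaferAssumptions(price_usd=20_000, density_per_mm2=0.003, yield_fraction=0.7)
memory = WaferAssumptions(price_usd=5_000, density_per_mm2=0.03, yield_fraction=0.8)
price = system_price(pflops=1000, gbytes=138_000, logic=logic, memory=memory,
                     assembly_overhead=0.5, gross_margin=0.6)
print(f"Illustrative system price: ${price:,.0f}")
```

Keeping every factor as an explicit input mirrors the list above: changing a single assumption, such as yield or wafer price, shows how sensitive the final price is to it.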

Let us now look at the Pj/Flop derived from the ambitious targets above:

$$\text{Intel2027}_{\text{Pj/Flop}} = \frac{132{,}000 \times 10^{12}}{1000 \times 10^{15}} \approx 0.1$$

Achieving this target requires a 4X reduction in Pj/Flop – a daunting challenge in the post-Moore's law era. However, Intel's Lunar Lake silicon already demonstrates promising efficiency, delivering ~100 INT8 TOPS (GPU+NPU) at ~20W, or ~0.2 Pj/op. This baseline proves Intel possesses IP capable of competitive efficiency.
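The same watts-over-throughput arithmetic places the Lunar Lake data point next to the 2027 target. A minimal sketch using the ~20 W and ~100 INT8 TOPS figures quoted above; `pj_per_op` is an illustrative helper. With the rounded target of 0.1 Pj/Flop, the remaining gap is the 2X named in the first challenge below; with the unrounded 132 kW / 1 EFLOP/s figure (about 0.132), it is closer to 1.5X.

```python
def pj_per_op(power_watts: float, ops_per_second: float) -> float:
    """(J/s) / (op/s) = J/op, scaled by 1e12 to picojoules."""
    return power_watts / ops_per_second * 1e12


lunar_lake_pj = pj_per_op(20, 100e12)   # ~100 INT8 TOPS (GPU+NPU) at ~20 W
target_pj = pj_per_op(132_000, 1e18)    # 1 EFLOP/s in a 132 kW envelope

print(f"Lunar Lake: {lunar_lake_pj:.2f} pJ/op, 2027 target: {target_pj:.3f} pJ/op")
print(f"Remaining efficiency gap: {lunar_lake_pj / target_pj:.1f}x")
```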

Four key connected challenges to overcome:

1. Find another 2X efficiency to get to 0.1 Pj/Flop

2. While scaling compute 10,000X to get to Exaflop (including the cost for interconnect)

3. While delivering 10X near memory bandwidth

4. While staying compatible with the existing Python/C/C++ GPU software (i.e., no esoteric diversions like quantum, neuromorphic, logarithmic and other ideas being pursued by a few startups)

Three key contributors to on-chip power (in femtojoules, fJ):

- Math ops: ~8 fJ/bit

- Memory: ~50 fJ/bit

- Communication: ~100 fJ/bit/mm
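These per-bit figures can be combined into a rough per-operation budget. The sketch below assumes that "math ops ~8 fJ/bit" means energy per operand bit processed, and that, after data reuse, each FP8 operation reads about half a bit from memory and moves about half a bit over 1 mm of wire; those traffic ratios are illustrative assumptions, not figures from the post. Under this reading, the 8-bit math alone costs about 64 fJ, leaving roughly 36 fJ for all data movement within a 0.1 pJ (100 fJ) budget, so this example lands above the target.

```python
# Per-bit energy figures quoted above, in femtojoules (fJ).
MATH_FJ_PER_BIT = 8.0
MEMORY_FJ_PER_BIT = 50.0
COMM_FJ_PER_BIT_MM = 100.0


def energy_per_op_pj(math_bits: float, memory_bits: float,
                     moved_bits: float, distance_mm: float) -> float:
    """Energy of one operation in pJ: math + memory access + on-chip wire traffic."""
    fj = (math_bits * MATH_FJ_PER_BIT
          + memory_bits * MEMORY_FJ_PER_BIT
          + moved_bits * distance_mm * COMM_FJ_PER_BIT_MM)
    return fj / 1000.0  # 1 pJ = 1000 fJ


# Hypothetical FP8 op: 8 bits of math, ~0.5 bit read from memory and
# ~0.5 bit moved 1 mm per op after reuse (illustrative assumptions).
print(energy_per_op_pj(math_bits=8, memory_bits=0.5, moved_bits=0.5, distance_mm=1.0))  # 0.139
```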

Recently at IEDM, NVIDIA published the picture below.

that can usher in the era of near-memory computing. Some hints are linked below:

https://www.tomshardware.com/news/intel-patent-reveals-meteor-lake-adamantine-l4-cache

Whether it's their homegrown technology or a "tight" partnership with the DRAM industry, there are 10X bandwidth increase opportunities within a 3-4 year horizon.

Whoever takes the first risks and executes can be far ahead of the rest. Interestingly the technologies that help deliver 10X memory bandwidth also help with the communication bandwidth target of 20X. The key is to free up more of the chip perimeter for chip-to-chip communication.

Intel also has excellent Silicon Photonics technology, which won't amount to anything if it isn't integrated into products to start the learning loop. All technologies and IP are perishable goods with an expiry date. Consume them before it's too late.

Let's talk about Intel's scalability and software now. Recently I got access to an Intel PVC 8-GPU system on their Tiber cloud. I also had access to 8-GPU setups from AMD and Nvidia. All three systems are floating point beasts. Here are their FP16/BF16 specifications:

- Nvidia 8xH100 - 8 PF

- AMD 8xMI300 - 10.4 PF

- Intel 8xPVC - 6.7 PF

I noticed that the majority of the performance is dominated by sequences of matrix multiplies, generally all on large matrices (4K and above). I also wanted to exercise these systems with PyTorch: standard PyTorch, no fancy libraries or middleware. My thesis is that the quality, coverage and performance of standard PyTorch is a good benchmark for AI software developer productivity on different GPUs.
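The FLOP accounting behind a matmul sweep like this is simple: multiplying an m×k matrix by a k×n matrix takes m·n·k multiplies and as many adds, so 2·m·n·k FLOPs. A dependency-free sketch of that accounting; in a real PyTorch run, `seconds` would be the wall-clock time of `torch.matmul` measured after synchronizing the device (for example with `torch.cuda.synchronize()`), and the shapes listed here are illustrative, not the post's actual sweep:

```python
def gemm_tflops(m: int, n: int, k: int, seconds: float) -> float:
    """Achieved TFLOP/s for C[m,n] = A[m,k] @ B[k,n].

    Each of the m*n outputs needs k multiplies and k adds, so 2*m*n*k FLOPs.
    """
    return 2 * m * n * k / seconds / 1e12


# Illustrative large shapes (4K and above); not the post's actual sweep.
shapes = [(4096, 4096, 4096), (8192, 8192, 8192), (16384, 4096, 8192)]
for m, n, k in shapes:
    flops = 2 * m * n * k
    print(f"{m}x{k} @ {k}x{n}: {flops / 1e12:.2f} TFLOP per matmul")
```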

Software observations.

Installing and getting things to "work" the first time took more steps with both AMD and Intel. It involved interactions with engineers at both companies before I got going. Nvidia was straightforward.

But I have to acknowledge that both AMD and Intel have made a ton of progress in making PyTorch easy to use, compared to where we were 2 years ago. Intel's driver install and PyTorch setup had a bit less friction than AMD's.

AMD supports the torch.cuda device directly, whereas with Intel you need to map to the torch.xpu device. So I needed to make a few code adjustments for Intel, but it was not too painful. Intel "sunset" the PVC GPU last year, and from what I have heard, the AI software team was busy with Gaudi for the past few years. My expectations of compatibility and performance on Intel were very low.
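The torch.cuda versus torch.xpu difference can be isolated in one place, so the rest of a benchmark stays device-agnostic. A minimal sketch, assuming a PyTorch build where Intel GPUs are exposed as `torch.xpu` (upstream PyTorch 2.4 and later; older setups relied on Intel Extension for PyTorch); ROCm builds report AMD GPUs through `torch.cuda`, which matches the observation above. The helper name `pick_device` is ours:

```python
import importlib.util


def pick_device() -> str:
    """Return 'cuda', 'xpu', or 'cpu' depending on what this PyTorch build exposes."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch not installed; nothing to map
    import torch

    # NVIDIA CUDA builds and AMD ROCm builds both report GPUs through torch.cuda.
    if torch.cuda.is_available():
        return "cuda"
    # Intel GPUs appear as torch.xpu; getattr guards against builds without it.
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


print(pick_device())
```

Tensors can then be created with `device=pick_device()`, and the same matmul code runs unchanged on all three vendors' GPUs.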

I was pleasantly surprised that I was able to run my tests to completion, not only on 1 GPU but all the way up to 8 GPUs. Below are the results for 8X GPUs.

Across the sweep of different matrix shapes and sizes:

- Nvidia 8xH100 - 5.3 PF (67% of peak)

- AMD 8xMI300 - 3.1 PF (30% of peak)

- Intel 8xPVC - 2.7 PF (40% of peak)
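The percentages follow from dividing the measured aggregate throughput by the FP16/BF16 peaks listed earlier. A small check using only the post's numbers; the H100 result comes out at about 66% rather than 67%, presumably because the 5.3 PF figure is itself rounded.

```python
# (peak FP16/BF16, measured GEMM throughput) for the 8-GPU systems, in PFLOPS, from the post.
results = {
    "Nvidia 8xH100": (8.0, 5.3),
    "AMD 8xMI300": (10.4, 3.1),
    "Intel 8xPVC": (6.7, 2.7),
}


def percent_of_peak(peak_pf: float, achieved_pf: float) -> float:
    """Measured throughput as a percentage of the advertised peak."""
    return 100.0 * achieved_pf / peak_pf


for system, (peak, achieved) in results.items():
    print(f"{system:>14}: {percent_of_peak(peak, achieved):.0f}% of peak")
```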

Some observations

- Easy to see why Nvidia is still everyone's darling. This is H100. Blackwell will move the bar up even more.

- From the SemiAnalysis article, I understand AMD has new drivers coming that seem to improve GEMM numbers substantially, which is good news for AMD. This article is not about AMD or NVIDIA.

- The surprise here is that the abandoned PVC is even this close to the top GPUs. PVC is a generation behind MI300X in terms of process technology. The majority of PVC silicon is on Intel 10nm, which is ~1.5 nodes behind TSMC N4. The GPU-to-GPU bandwidth through XeLink seems to be performing better than AMD's xGMI solution.

- There are definitely software optimizations left on the table. They should be able to get to 60% of peak. You can see the impact of software overhead on Intel in the case of smaller matrix dimensions.

- Intel cancelled the follow-on to PVC, called Rialto Bridge, in March 2023 (https://www.crn.com/news/components...ter-gpu-road-map-delays-falcon-shores-to-2025).

- This chip was ready for tape-out in Q4'22, would have been in volume production in 2024, and was specced to deliver more than H100.

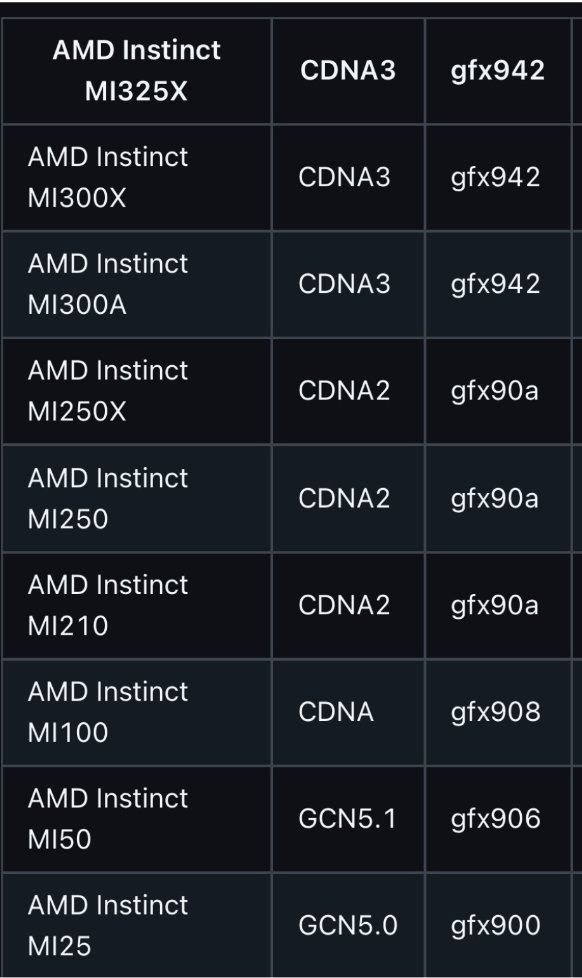

- AMD began their iteration loop with advanced packaging and HBM with Fiji in 2015, followed by Vega in 2017. MI25, MI50, MI100, MI200 and MI250 followed, and eventually MI300. MI300 is AMD's first GPU to cross $1B in revenue. You only learn by shipping.

Getting back to the main thread: the data points above show that Intel has the foundations to compete with the best. They need to be actively playing the game and not thrash the roadmap. And stop snatching defeat from the jaws of victory.

None of this is going to be easy. All layers of Intel have to go through painful transformations. Executive leadership musical chairs alone are insufficient.

"Let chaos reign and then rein in chaos."

This is a famous quote by Andy Grove (probably the last CEO of Intel who understood every layer of the company's stack very intimately; I often wondered what Andy would do now).

Let's dissect this a bit. Why would you let any chaos reign? Isn't all chaos bad? The answer is no. There is good chaos and bad chaos. Good chaos forces you to invent and change. Major tech and industry transitions are good chaos. The Internet, Wi-Fi, Cloud, Smartphone and AI are some examples of transitions that can lead to good chaos. Intel benefitted from some of these transitions when it was able to "rein in". Good chaos generally comes from external events. Bad chaos comes from internal issues. I like to call bad chaos "organizational entropy". This is the higher-order bit that decays the efficiency of companies.

https://pdfs.semanticscholar.org/8655/f1d23285639d5833ff4fa0ea4632856011cf.pdf

When entropy crosses a certain threshold, the leadership loses control of the company. No amount of executive thrash can fix this situation, until you reduce this entropy.

My humble suggestions for whoever takes the leadership mantle at intel

- Increase the coder-to-coordinator ration by 10x. This is likely the most painful thing to do, as it could result in massive reduction in head count first and some rehiring. Give re-learning opportunity to folks stuck in co-ordination tasks to get back to coding or exit the company. AI tools are a great enablers for seniors to get back into hands on work.

- Organize the company around product leadership architecture. Intel can build the whole stack of products from 10W to 150KW with <6 modular building blocks (/chiplets) that are shared across the whole stack. Splitting the company around go-to-market boundaries is preventing them to leverage their leadership IP up and down the stack (eg:- Lunarlake SOC energy efficiency on Xeon will be awesome, but Xeon energy efficiency is far from leadership today). With leverage of leadership IP across the whole stack Intel can field top performing products across client, edge and data centers and get a healthy share of >$500B TAM accessible to them.

- Cancel the cancel culture. Intel's legacy is built on relentless iteration: iterating new process technologies to 90% yields every 18 months, and the tick-tock model of execution. Stop the "cancel culture". You achieve nothing by it.

- Bet on generality and focus on performance fundamentals: Ops/clk, Bytes/clk, pJ/Op, pJ/bit, etc. The boundaries are not CPU, GPU and AI accelerators. The workloads are an evolving mix of scalar, vector and matrix computations demanding ever more bandwidth and memory capacity. You have the unique ability to deliver these elements in ratios that can delight your customers and destroy your competitors.

- Make a ton of Battlemage and PVC GPUs available to open-source developers worldwide, friction-free. Selling a ton of Battlemage GPUs is a good step toward this. Don't worry about the margins on them. This is the most efficient way to win the hearts and minds of AI developers while delighting millions of gamers worldwide. Battlemage is a great example of the benefit of iteration: very measurable gains in software robustness and performance since Alchemist in 2022. They will be on the path to leadership if they iterate again and launch Celestial in the next 12 months. Make all PVC inventory (including the units in the Argonne exascale installation) available to GitHub developers with no "cloud friction". It should be a single click to connect to cloud GPUs from any PC/Mac in the world. Among Intel's options, its GPUs are the most compatible with the PyTorch/Triton AI developer ecosystem. This effort will help immensely with the leadership 2027 system launch, since more software will be functional on Intel from day one.

If Intel were putting out datacenter products that were competitive with NVIDIA, the cost wouldn't be such a huge issue. Hell, if Intel fabbed for NVIDIA, cost wouldn't be a huge issue (for DC products).

Yes, if Intel's doing their own products. But if fabbing for NVIDIA, I think cost will still be an issue unless their process is much better than everyone else's; otherwise NVIDIA would just shop around and find a more cost-effective fab.

I think this is kind of a blessing and a curse of vertical integration. Vertical integration allows one to overlook some cost inefficiencies in the supply chain, but on the other hand, it might also cause the supplier (which they own) to become too inefficient because it has a guaranteed customer.

Intel has always been a process-first company. In a sense, most of its problems can be traced to the process: if they had a world-leading process, most of these problems would simply disappear, or at least be far less serious than they are now.

I was thinking if TSMC was at capacity, NVIDIA could have Intel produce for them even if it's more expensive. The cost wouldn't be a huge deal for DC chips that sell for tens of thousands of dollars.

The flaw in my logic is that these chips probably can't be ported between fabs like they are making toasters. I'd imagine even if Intel had a node that was comparable in performance to 4N, it would be a lot of work to port GB200 to it. Maybe this could change; I saw Intel was working with ASML but I dunno how long that's been going on.

Yeah, that's an issue. As others mentioned, Intel's process used to use very different design rules from everyone else's. TSMC, on the other hand, has been the industry standard for quite some time. In the past Intel didn't care about this because they basically only fabbed their own products, but now that they seriously want to be a foundry, they are on the way to being compatible with others. How well they manage that is another question, but Samsung at least did it to the extent that NVIDIA was willing to use Samsung to fab their GPUs while at the same time using TSMC for their accelerators, so I think it's possible.

Sega_Model_4

Newcomer

Intel Appoints Lip-Bu Tan as Chief Executive Officer

www.intc.com
