I actually think that with the advent of LLMs and machine learning we have a shot at reaching photorealism quickly. AI will be the shortcut here.

As we've seen in the videos where AI converts game footage into photorealistic scenes full of lifelike characters, hair physics, cloth simulation, realistic lighting, shadows and reflections, we already have a glimpse of the future. There are many shortcomings of course, but they should be fixable once the AI is closely integrated into the game engine.

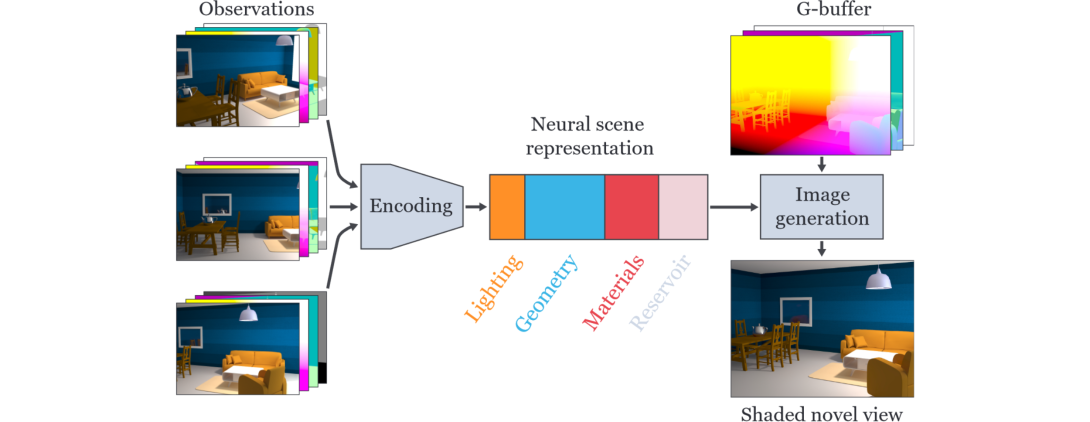

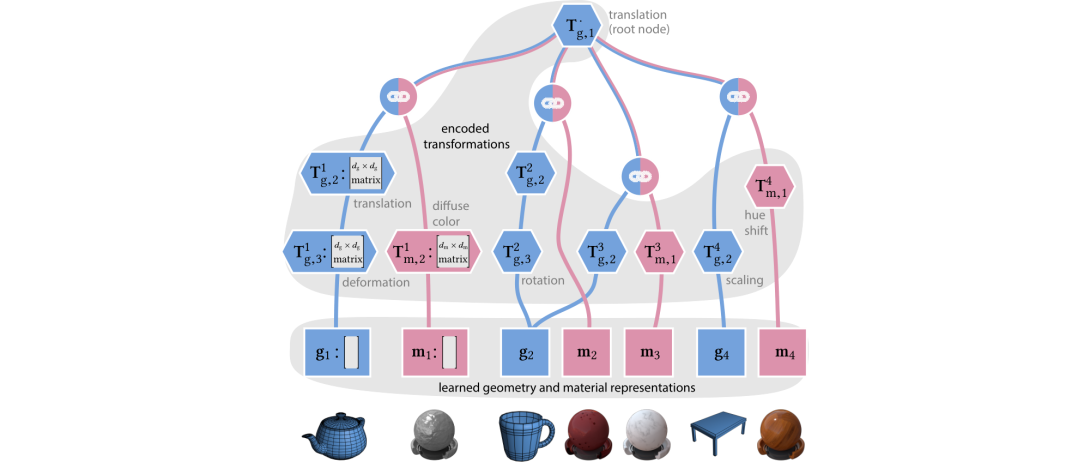

The AI model will have access to 3D data, full world-space coordinates, lighting information and various other details instead of just 2D video data. This should be enough to boost its accuracy and minimize the amount of inference it has to do. We will also have faster and smarter models that need less time to do their thing.
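To make the "3D data instead of 2D video" point a bit more concrete, here is a minimal sketch comparing what a model sees per pixel in the two cases. The channel names and component counts are my own assumptions for illustration, not the input layout of any actual engine or model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """One per-pixel input buffer handed to the model (hypothetical)."""
    name: str
    components: int  # values stored per pixel


# Video-to-video conversion: the model only ever sees final RGB.
VIDEO_ONLY = [Channel("color", 3)]

# Engine-integrated: assumed extra buffers the engine could expose.
ENGINE_INTEGRATED = [
    Channel("color", 3),
    Channel("depth", 1),
    Channel("world_position", 3),
    Channel("surface_normal", 3),
    Channel("motion_vector", 2),
    Channel("direct_lighting", 3),
]


def values_per_pixel(channels):
    """Total number of known values the model receives for each pixel."""
    return sum(c.components for c in channels)


def values_per_frame(channels, width, height):
    """Total number of known values the model receives for one frame."""
    return values_per_pixel(channels) * width * height


if __name__ == "__main__":
    for label, layout in (("video only", VIDEO_ONLY),
                          ("engine integrated", ENGINE_INTEGRATED)):
        print(label, values_per_pixel(layout),
              values_per_frame(layout, 1280, 720))
```

With this made-up layout the model gets 15 known values per pixel instead of 3, and everything it is handed directly is something it no longer has to guess from the image.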

I can see future GPUs having much larger matrix cores, to the point of outnumbering the regular FP32 cores. CPUs will also have bigger NPUs to assist. This would be enough to do 720p60 rendering, maybe even 1080p30 or 1080p60 if progress allows it.

Next, this will be upscaled, denoised and frame-generated to the desired fidelity.
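To put rough numbers on how much work the upscaling and frame generation would have to cover, here is a quick back-of-the-envelope sketch. The 4K at 120 fps output target is my own assumption for illustration; the render resolutions are the ones mentioned above.

```python
def pixels_per_second(width, height, fps):
    """Raw pixel throughput for a given resolution and frame rate."""
    return width * height * fps


def amplification(render, target):
    """How many output pixels each natively rendered pixel must turn into."""
    return pixels_per_second(*target) / pixels_per_second(*render)


# (width, height, fps)
RENDER_720P60 = (1280, 720, 60)
RENDER_1080P60 = (1920, 1080, 60)
TARGET_4K120 = (3840, 2160, 120)  # assumed output target, not from the post

if __name__ == "__main__":
    for label, render in (("720p60", RENDER_720P60),
                          ("1080p60", RENDER_1080P60)):
        factor = amplification(render, TARGET_4K120)
        print(f"{label} -> 4K120: {factor:.0f}x")
```

Under that assumption, 720p60 means 9x the pixels from upscaling and 2x the frames from frame generation, 18x in total, while starting from 1080p60 brings it down to 8x.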

All in all, this is a much quicker path, at least in theory, than waiting for traditional rendering to become mature and fast enough, which keeps getting harder and takes longer. We simply lack the transistor budget to scale up the horsepower traditional rendering needs to reach photorealism, at least at the economic levels that used to be feasible.

Even now traditional rendering faces huge challenges, chief among them code that is limited by the CPU and the slow progress of CPUs themselves. Something has to give to escape these seemingly inescapable hurdles, which have existed for far too long.

So shifting the ratios within the transistor budget to give machine learning a bigger portion than traditional rendering would be the smart thing to do, especially when it opens up entirely new visual capabilities.